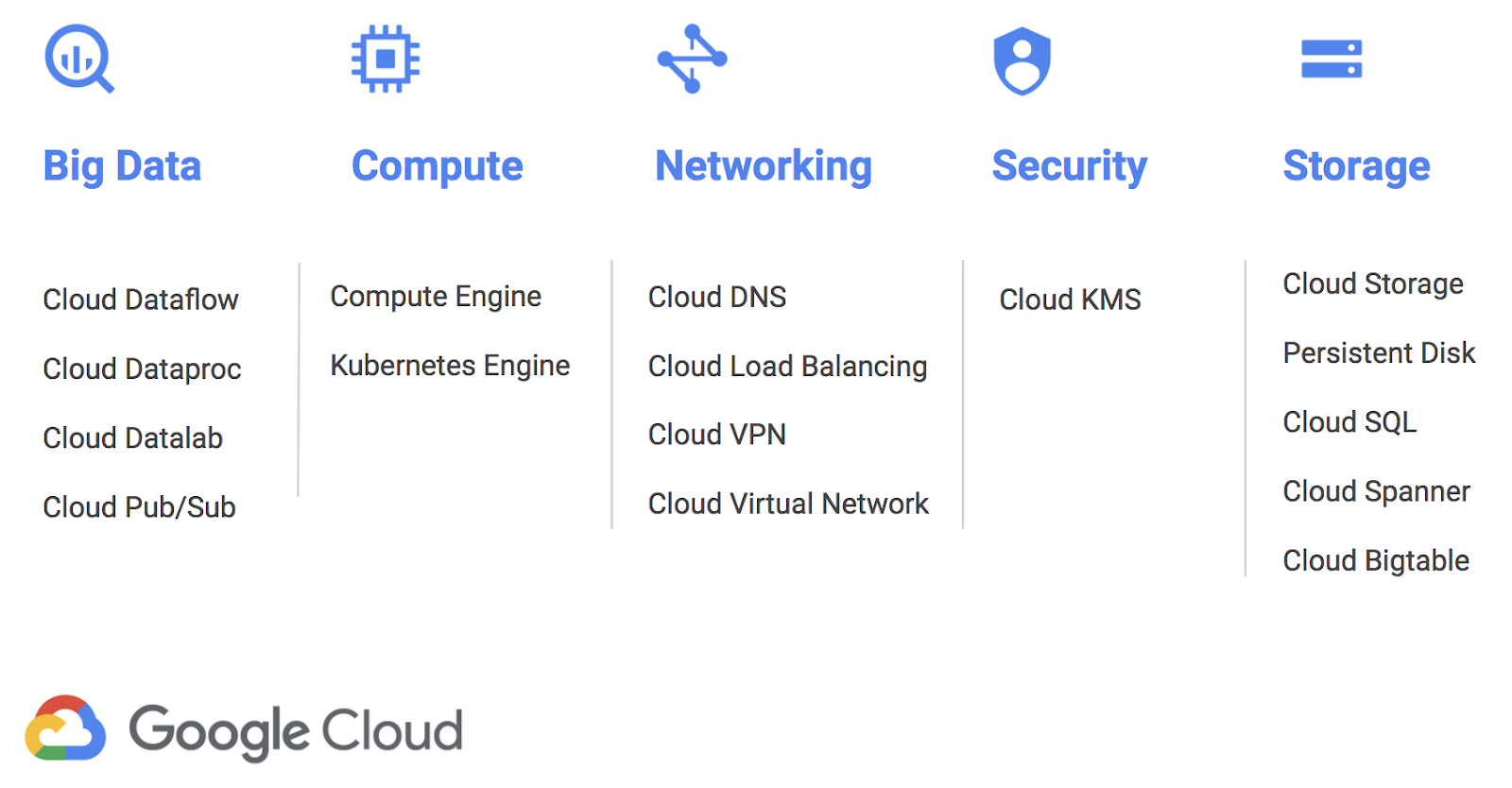

As we celebrate the upcoming Los Angeles Google Cloud Platform (GCP) region in one of the creative centers of the world, we are excited to share news about a product that can help you get your data there as fast as possible. Google Transfer Appliance is now generally available in the U.S., with a few new features that will simplify moving data to Google Cloud Storage. Customers have been using Transfer Appliance for almost a year, and we’ve heard great feedback.

The Transfer Appliance is a high-capacity server that lets you transfer large amounts of data to GCP, quickly and securely. It’s recommended if you’re moving more than 20TB of data, or data that would take more than a week to upload.
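
To see where your own transfer falls against those two thresholds, here is a rough back-of-the-envelope sketch. It is not an official sizing tool: the function names, the decimal-terabyte convention, and the default 80% link utilization are all assumptions made for illustration.

```python
SECONDS_PER_DAY = 86_400


def upload_days(data_tb: float, link_mbps: float, utilization: float = 0.8) -> float:
    """Estimate how many days an online upload would take.

    Assumes decimal units (1 TB = 10**12 bytes, 1 Mbps = 10**6 bits/s) and
    that only `utilization` of the link is available for the transfer.
    """
    if data_tb < 0 or link_mbps <= 0 or not 0 < utilization <= 1:
        raise ValueError("need data_tb >= 0, link_mbps > 0, 0 < utilization <= 1")
    total_bits = data_tb * 1e12 * 8
    effective_bits_per_second = link_mbps * 1e6 * utilization
    return total_bits / effective_bits_per_second / SECONDS_PER_DAY


def appliance_recommended(data_tb: float, link_mbps: float, utilization: float = 0.8) -> bool:
    """Apply the two rules of thumb: more than 20TB, or more than a week to upload."""
    return data_tb > 20 or upload_days(data_tb, link_mbps, utilization) > 7
```

For example, 10TB over a 100 Mbps link at 80% utilization works out to roughly 11.6 days, so it crosses the one-week threshold even though it is under 20TB.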

You can now request a Transfer Appliance directly from your Google Cloud Platform console. Indicate the amount of data you’re looking to transfer, and our team will help you choose the version that is the best fit for your needs.

Transfer Appliance comes in two configurations: 100TB or 480TB of raw storage capacity. We typically see data compress by about 2x, so an appliance can often hold roughly twice its raw capacity. The 100TB model is priced at $300, plus express shipping (approximately $500); the 480TB model is priced at $1,800, plus shipping (approximately $900).
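
As a rough illustration of how those numbers combine, the sketch below picks the smallest configuration that would hold a given data set and adds the approximate shipping cost. The capacities and prices are the ones quoted above; the names, and the choice to treat the typical 2x compression as a default, are assumptions. Pass a ratio of 1.0 for data that is already compressed, such as video or images.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Model:
    raw_tb: int
    price_usd: int
    approx_shipping_usd: int


# Figures quoted in this post; shipping is approximate.
MODELS = (
    Model(raw_tb=100, price_usd=300, approx_shipping_usd=500),
    Model(raw_tb=480, price_usd=1800, approx_shipping_usd=900),
)


def smallest_fit(data_tb: float, compression_ratio: float = 2.0) -> Optional[Model]:
    """Return the smallest model whose effective capacity holds data_tb.

    Returns None when the data would not fit on a single appliance.
    """
    for model in MODELS:  # ordered from smallest to largest
        if data_tb <= model.raw_tb * compression_ratio:
            return model
    return None


def estimated_total_usd(model: Model) -> int:
    """Appliance price plus approximate shipping."""
    return model.price_usd + model.approx_shipping_usd
```

Treat the result as a starting point for the conversation with our team rather than a quote, since real compression ratios vary with the data.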

You can mount Transfer Appliance as an NFS volume, making it easy to drag and drop files, or rsync, from your current NAS to the appliance. This feature simplifies the transfer of file-based content to Cloud Storage, and helps our migration partners expedite the move for customers.
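
Because the appliance shows up as an ordinary mounted directory, a copy can also be scripted. The sketch below is a minimal, rsync-like illustration in Python, not a replacement for rsync: `copy_tree` is a hypothetical helper, the mount point you pass in (for example `/mnt/transfer-appliance`) is an assumed path, and skipping files by size alone is a simplification.

```python
import shutil
from pathlib import Path
from typing import List


def copy_tree(source: Path, mount_point: Path) -> List[Path]:
    """Copy every file under `source` into `mount_point`, preserving layout.

    Files that already exist at the destination with the same size are
    skipped, so an interrupted run can be restarted. Returns the relative
    paths copied during this run.
    """
    copied = []
    for src in sorted(source.rglob("*")):
        if not src.is_file():
            continue
        relative = src.relative_to(source)
        dst = mount_point / relative
        if dst.exists() and dst.stat().st_size == src.stat().st_size:
            continue  # assumed to have been copied by an earlier run
        dst.parent.mkdir(parents=True, exist_ok=True)
        shutil.copy2(src, dst)  # copies contents and timestamps
        copied.append(relative)
    return copied
```

Running it a second time over the same directories copies nothing, which makes it easy to resume after an interruption.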

“SADA Systems provides expert cloud consultation and technical services, helping customers get the most out of their Google Cloud investment. We found Transfer Appliance helps us transition the customer to the cloud faster and more efficiently by providing a secure data transfer strategy.”

-Simon Margolis, Director of Cloud Platform, SADA Systems

Transfer Appliance can also help you transition your backup workflow to the cloud quickly. To do that, move the bulk of your current backup data offline using Transfer Appliance, and then incrementally back up to GCP over the network from there. Partners like Commvault can help you do this.

With this release, you’ll also find a more visible end-to-end integrity check, so you can be confident that every bit was transferred as is, and have peace of mind in deleting source data.
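
If you want an independent record before deleting anything, one option is to keep your own checksum manifest of the source files. The sketch below is only an illustration of that idea and is not how the appliance's built-in integrity check works. The function names are hypothetical. It uses base64-encoded MD5 because that is the form in which Cloud Storage reports MD5 hashes for non-composite objects, so the values can be compared directly.

```python
import base64
import hashlib
from pathlib import Path
from typing import Dict, List


def file_md5_base64(path: Path, chunk_size: int = 1024 * 1024) -> str:
    """Return the base64-encoded MD5 of a file, read in chunks."""
    digest = hashlib.md5()
    with path.open("rb") as handle:
        for chunk in iter(lambda: handle.read(chunk_size), b""):
            digest.update(chunk)
    return base64.b64encode(digest.digest()).decode("ascii")


def build_manifest(root: Path) -> Dict[str, str]:
    """Map each file's path (relative to root, '/'-separated) to its checksum."""
    return {
        path.relative_to(root).as_posix(): file_md5_base64(path)
        for path in sorted(root.rglob("*"))
        if path.is_file()
    }


def mismatches(source: Dict[str, str], destination: Dict[str, str]) -> List[str]:
    """Return source paths that are missing or differ at the destination."""
    return sorted(
        path for path, checksum in source.items()
        if destination.get(path) != checksum
    )
```

Build the manifest before the appliance ships, then compare it with the hashes reported for the uploaded objects. An empty list from `mismatches` means every source file has a matching checksum on the other side.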

Transfer Appliance in action

In developing Transfer Appliance, we built a device designed for the data center, so it slides into a standard 19-inch rack. That has been a positive experience for our early customers, even those with floating data centers (yes, actually floating; see below for more).

We’ve seen our customers successfully use Transfer Appliance for the following use cases:

- Migrate your data center (or parts of it) to the cloud.

- Kick-start your ML or analytics project by transferring test data and staging it quickly.

- Move large archives of content like creative libraries, videos, images, regulatory or backup data to Cloud Storage.

- Collect data from research bodies or data providers and move it to Google Cloud for analysis.

One early adopter, Schmidt Ocean Institute, is a private non-profit foundation that combines advanced science with state-of-the-art technology to achieve lasting results in ocean research. Their goals are to catalyze sharing of information and to communicate this knowledge to audiences around the world. For example, the Schmidt Ocean Institute owns and operates research vessel Falkor, the first oceanographic research vessel with a high-performance cloud computing system installed onboard. Scientists run models and software and can plan missions in near-real time while at sea. With the state-of-the-art technologies onboard, scientists contribute scientific data to the oceanographic community at large, very quickly. Schmidt Ocean Institute uses Transfer Appliance to safely get the data back to shore and publicly available to the research community as fast as possible.

“We needed a way to simplify the manual and complex process of copying, transporting and mailing hard drives of research data, as well as making it available to the scientific community as quickly as possible. We are able to mount the Transfer Appliance onboard to store the large amounts of data that result from our research expeditions and easily transfer it to Google Cloud Storage post-cruise. Once the data is in Google Cloud Storage, it’s easy to disseminate research data quickly to the community.”

-Allison Miller, Research Program Manager, Schmidt Ocean Institute

Beatport, a division of LiveStyle, serves an audience of electronic music DJs, producers and their fans. Google Transfer Appliance afforded Beatport the opportunity to rethink their storage architecture in the cloud without affecting their customer-facing network in the process.

“DJs, music producers and fans all rely on Beatport as the home for the world’s electronic music. By moving our library to Google Cloud Storage, we can access our audio data with the advanced tools that Google Cloud Platform has to offer. Managing tens of millions of lossless quality files poses unique challenges. Migrating to the highly performant Cloud Storage puts our wealth of audio data instantly at the fingertips of our technology team. Transfer Appliance made that move easier for our team.”

-Jonathan Steffen, CIO, Beatport

Eleven Inc. creates content, brand experiences and customer activation strategies for clients across the globe. Through years of work for their clients, Eleven built a large library of creative digital assets and wanted a way to cost-effectively store that data in the cloud. Facing ISP network constraints and a desire to free up space on their local asset server quickly, Eleven Inc. used Transfer Appliance to facilitate their migration.

“Working with Transfer Appliance was a smooth experience. Rack, capture and ship. And now that our creative library is in Google Cloud Storage, it’s much easier to think about ways to more efficiently manage the data throughout its life-cycle.”

-Joe Mitchell, Director of Information Systems

amplified ai combines extensive IP industry experience with deep learning to offer instant patent intelligence to inventors and attorneys. This requires a lot of patent data for building models. Transfer Appliance helped amplified ai move TBs of this specialized essential data to the cloud quickly.

“My hands are already full building deep learning models on massive, disparate data without also needing to worry about physically moving data around. Transfer Appliance was easy to understand, easy to install, and made it easy to capture and transfer data. It just did what it was supposed to do and saved me time which, for a busy startup, is the most valuable asset.”

-Chris Grainger, Founder & CTO, amplified ai

Airbus Defence and Space Geo Inc. uses their exclusive access to radar and optical satellites to offer a stunning library of Earth observation images. As part of a major cloud migration effort, Airbus moved hundreds of TBs of this data to the cloud with Transfer Appliance so they can better serve images to clients from Cloud Storage. They also used the migration as an opportunity to improve data quality.

“We needed to liberate. To flex on demand and scale in the cloud, and unleash our creativity. Transfer Appliance was a catalyst for that. In addition to migrating an amount of data that would not have been possible over the network, this transfer gave us the opportunity to improve our storage in the process—to clean out the clutter.”

-Dave Wright, CTO, Airbus Defense and Space Geo Inc.

National Collegiate Sports Archives (NCSA) is the creator and owner of the VAULT, which contains years’ worth of college sports footage. NCSA digitizes archival sports footage from leading schools and delivers it via mobile, advertising and social media platforms. With a lot of precious footage to deliver to college sports fans around the globe, NCSA needed a way to move data into Google Cloud Platform quickly and with zero disruption for their users.

“With a huge archive of collegiate sports moments, we wanted to get that content into the cloud and do it in a way that provides value to the business. I was looking for a solution that would cost-effectively, simply and safely execute the transfer and let our teams focus on improving the experience for our users. Transfer Appliance made it simple to capture data in our data center and ship it to Google Cloud.”

-Jody Smith, Technology Lead, NCSA

Tackle your data migration needs with Transfer Appliance

To get detailed information on Transfer Appliance, check out our documentation. And visit our Data Transfer page to learn more about our other cloud data transfer options.

We’re looking forward to bringing Transfer Appliance to regions outside of the U.S. in the coming months. But we need your help: Where should we deploy first? If you are interested in offline data transfer but not located in the U.S., please indicate so in the request form.

If you’re interested in learning more about cloud data migration strategies, check out this session at Next 2018 next month. For more information, and to register, visit the Next ’18 website.