Posted by Mauricio Vergara, Product Marketing Manager, with contributions by Thousand Ant.

Lyft is singularly committed to app excellence. As a rideshare company — providing a vital, time-sensitive service to tens of millions of riders and hundreds of thousands of drivers — they have to be. At that scale, every slowdown, frozen frame, or crash of their app can waste thousands of users’ time. Even a minor hiccup can mean a flood of people riding with (or driving for) the competition. Luckily, Lyft’s development team keeps a close eye on their app’s performance. That’s how they first noticed a slowdown in the startup time of their drivers’ Android app.

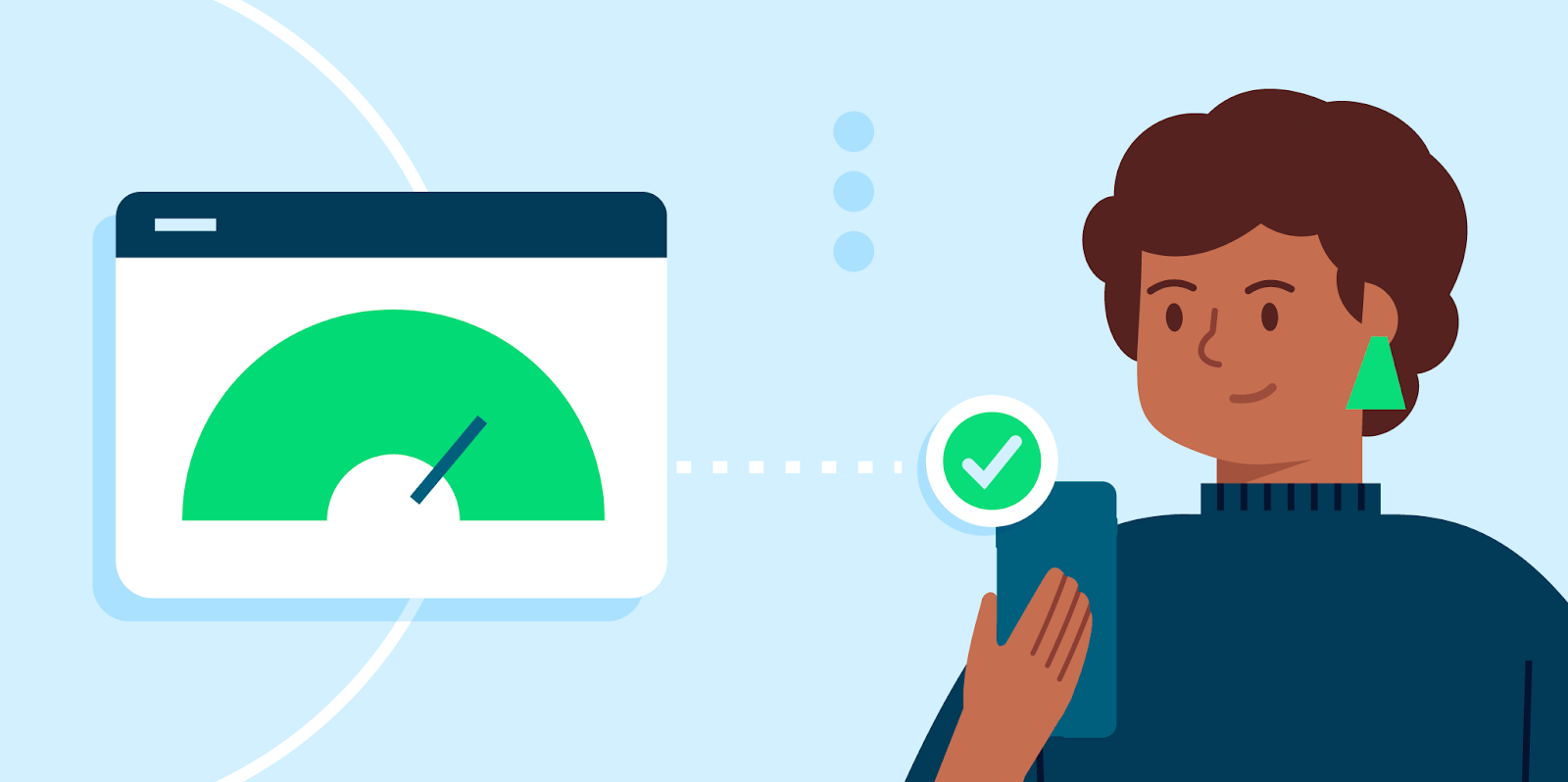

They needed to get to the bottom of the problem quickly: figure out what it would take to resolve it, and then justify that investment to their leadership. That meant answering a number of tough questions. Where was the bottleneck? How was it affecting user experience? How great a priority should it be for their team at that moment? Fortunately, they had a powerful tool at their disposal that could help them find answers. With the help of Android vitals, a Google Play tool for improving app stability and performance on Android devices, they located the problem, made a case to their leadership for prioritizing it, and dedicated the right amount of resources to solving it. Here’s how they did it.

New priorities

The first thing Lyft’s development team needed to do was figure out whether this was a pressing enough problem to convince their leadership to dedicate resources to it. Like any proposal to improve app quality, speeding up Lyft Driver’s startup time had to be weighed against competing demands on developer bandwidth: introducing new product features, making architectural improvements, and improving data science. Generally, one of the challenges in convincing leadership to invest in app quality is that it can be difficult to correlate performance improvements with business metrics.

They turned to Android vitals to get an exact picture of what was at stake. Vitals gives developers access to data about the performance of their app, including app-not-responding errors, battery drainage, rendering, and app startup time. The current and historical performance of each metric is tracked on real devices and can be compared to the performance of other apps in the category. With the help of this powerful tool, the development team discovered that the Lyft Driver app startup time was 15–20% slower than 10 other apps in their category — a pressing issue.

Next, the team needed to establish the right scope for the project, one that would be commensurate with the slowdown’s impact on business goals and user experience. The data from Android vitals made the case clear, especially because it provided a direct comparison to competitors in the rideshare space. The development team estimated that a single developer working on the problem for one month would be enough to make a measurable improvement to app startup time.

Drawing on this wealth of data, and appealing to Lyft’s commitment to app excellence, the team made the case to their leadership. Demonstrating a clear opportunity to improve customer experience, a reasonably scoped and achievable goal, and clear-cut competitive intelligence, they got the go-ahead.

How They Did It

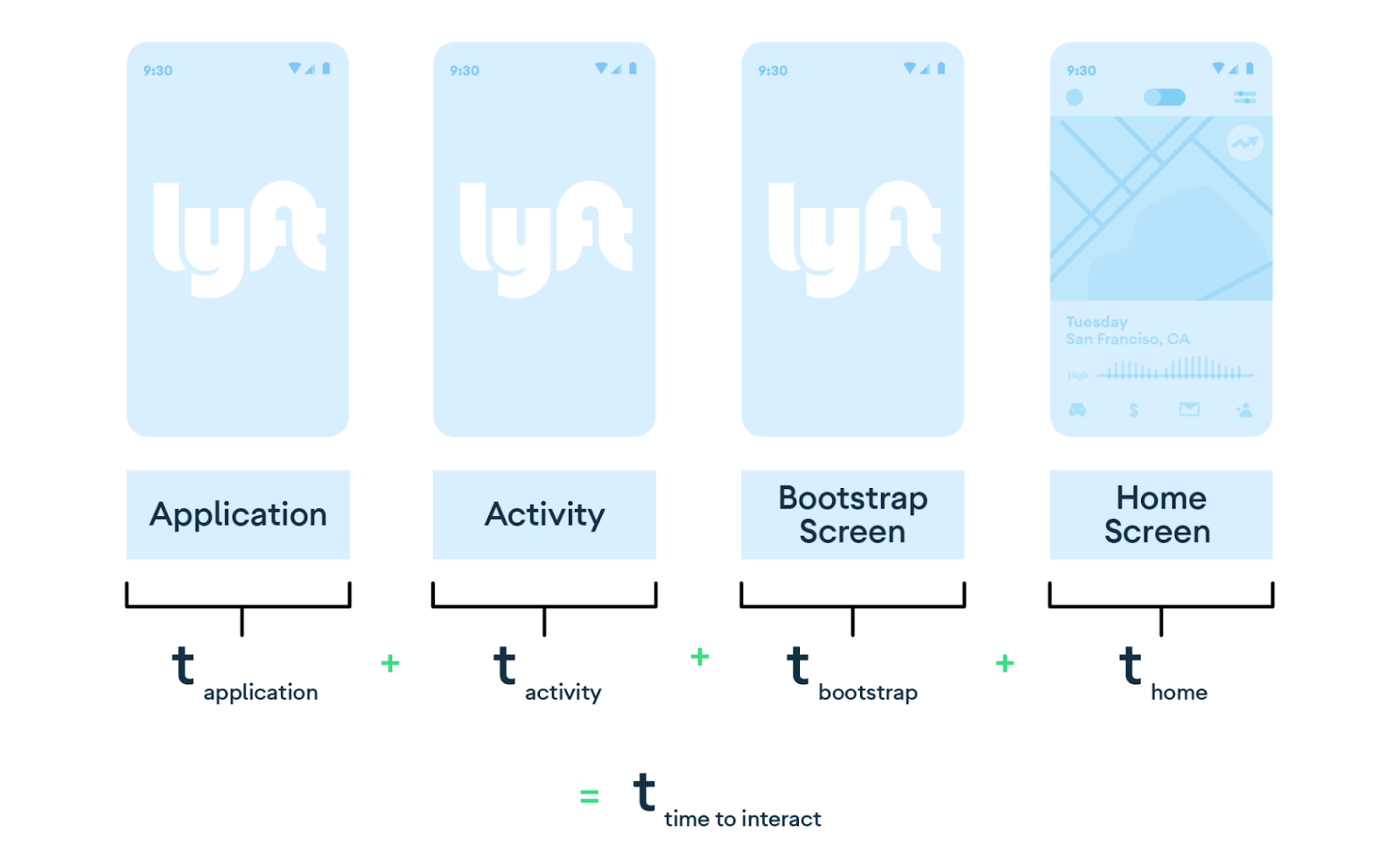

Lyft uses “Time to interact” (also known as time to full display) as a primary startup metric. To understand the factors that impact it, the Lyft team profiled each of their app’s launch stages, looking for the bottleneck. The Lyft Driver app starts up in four stages: 1) the application process starts; 2) “Activity” kicks off the UI rendering; 3) “Bootstrap” sends network requests for the data necessary to render the home screen; 4) finally, “Display” opens the driver’s interface. Rigorous profiling revealed that the slowdown occurred in the third stage, bootstrapping. With the bottleneck identified, the team took several steps to resolve it.
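To make the idea of stage-by-stage profiling concrete, here is a minimal sketch in plain Java. It is not Lyft’s tooling: the `LaunchStageProfiler` class and its methods are hypothetical names invented for illustration, and a real Android app would more likely rely on system traces than on hand-rolled timers. The sketch simply accumulates elapsed time per named stage and reports which stage dominates.

```java
import java.util.LinkedHashMap;
import java.util.Map;

/** Hypothetical helper: accumulates elapsed time per launch stage. */
final class LaunchStageProfiler {
    // Stage name -> total elapsed nanoseconds, kept in insertion (launch) order.
    private final Map<String, Long> nanosByStage = new LinkedHashMap<>();

    /** Runs one stage's work and records how long it took. */
    void measure(String stage, Runnable work) {
        long start = System.nanoTime();
        work.run();
        record(stage, System.nanoTime() - start);
    }

    /** Records a duration measured elsewhere (for example, read from a trace). */
    void record(String stage, long nanos) {
        nanosByStage.merge(stage, nanos, Long::sum);
    }

    /** Sum of all recorded stage durations. */
    long totalNanos() {
        long total = 0;
        for (long nanos : nanosByStage.values()) {
            total += nanos;
        }
        return total;
    }

    /** Name of the stage with the largest recorded duration, or null if empty. */
    String slowestStage() {
        String slowest = null;
        long max = -1;
        for (Map.Entry<String, Long> entry : nanosByStage.entrySet()) {
            if (entry.getValue() > max) {
                max = entry.getValue();
                slowest = entry.getKey();
            }
        }
        return slowest;
    }
}
```

Wrapping each of the four launch stages in `measure(...)` and then calling `slowestStage()` would point at the stage worth investigating first; in Lyft’s case, that was the bootstrap stage.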

First, they reduced unneeded network calls on the critical launch path. After decomposing their backend services, they could safely remove some network calls from the launch path entirely. When possible, they also chose to execute network calls asynchronously. If some data was still required for the application to function but was not needed during app launch, the calls that fetched it were made non-blocking and moved safely to the background, allowing the launch to proceed without them. Finally, they chose to cache data between sessions.
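The combination of caching and non-blocking calls described above can be sketched as follows. This is an illustrative pattern in plain Java, not Lyft’s implementation: `LaunchDataLoader` and its methods are hypothetical, the `Supplier` stands in for a network request, and the in-memory map stands in for a cache that would really be persisted between sessions.

```java
import java.util.Map;
import java.util.concurrent.CompletableFuture;
import java.util.concurrent.ConcurrentHashMap;
import java.util.function.Supplier;

/** Hypothetical loader: serve cached data at launch, refresh off the critical path. */
final class LaunchDataLoader {
    // Stand-in for a cache persisted between sessions; in-memory for this sketch.
    // ConcurrentHashMap rejects null values, so fetches must not return null.
    private final Map<String, String> cache = new ConcurrentHashMap<>();

    /**
     * Returns cached data immediately when present and refreshes it in the
     * background. Blocks on the fetch only when nothing is cached yet.
     */
    String loadForLaunch(String key, Supplier<String> fetch) {
        String cached = cache.get(key);
        if (cached != null) {
            refreshInBackground(key, fetch); // does not block the launch
            return cached;
        }
        String fresh = fetch.get(); // first launch: nothing to fall back on
        cache.put(key, fresh);
        return fresh;
    }

    /** Runs the fetch on a background thread and caches the result when done. */
    CompletableFuture<String> refreshInBackground(String key, Supplier<String> fetch) {
        return CompletableFuture.supplyAsync(fetch).thenApply(value -> {
            cache.put(key, value);
            return value;
        });
    }

    /** Current cached value for the key, or null if none. */
    String cached(String key) {
        return cache.get(key);
    }
}
```

The trade-off in this pattern is freshness: a launch served from the cache may briefly show data from the previous session until the background refresh completes, so it suits data that tolerates being slightly stale.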

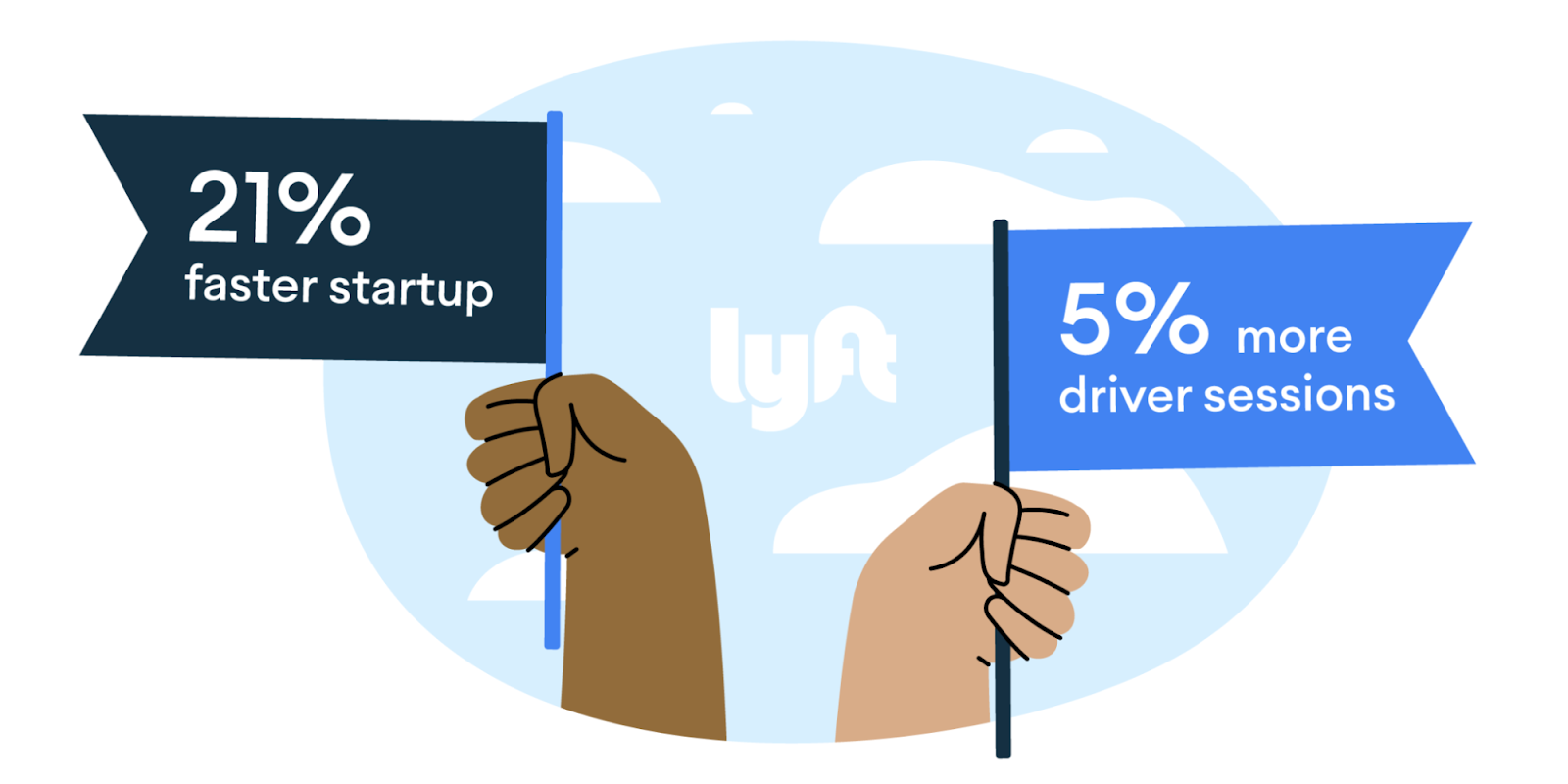

These may sound like relatively small changes, but they resulted in a dramatic 21% reduction in app startup time. This led to a 5% increase in driver sessions in Lyft Driver. With the results in hand, the team had enough buy-in from leadership to create a dedicated mobile performance workstream and add an engineer to the effort as they continued to make improvements. Word of the initiative’s success spread across the organization, with several managers reaching out to explore how they could make further investments in app quality.

Learnings

The success of these efforts contains several broader lessons, applicable to any organization.

As an app grows and the team grows with it, app excellence becomes more important than ever. Developers are often the first to recognize performance issues because they work closely on an app, but they can find it difficult to raise awareness across an entire organization. Android vitals is a powerful tool for doing this. It provides a straightforward way to back up developer observations with data, making it easier to connect performance metrics to business cases.

When starting your own app excellence initiative, it pays to first aim for small wins and build from there. Carefully pick actionable projects that deliver significant results for an appropriate investment of resources.

It’s also important to communicate early and often to involve the rest of the organization in the development team’s quality efforts. These constant updates about goals, plans, and results will help you keep your whole team on board.

Further Resources

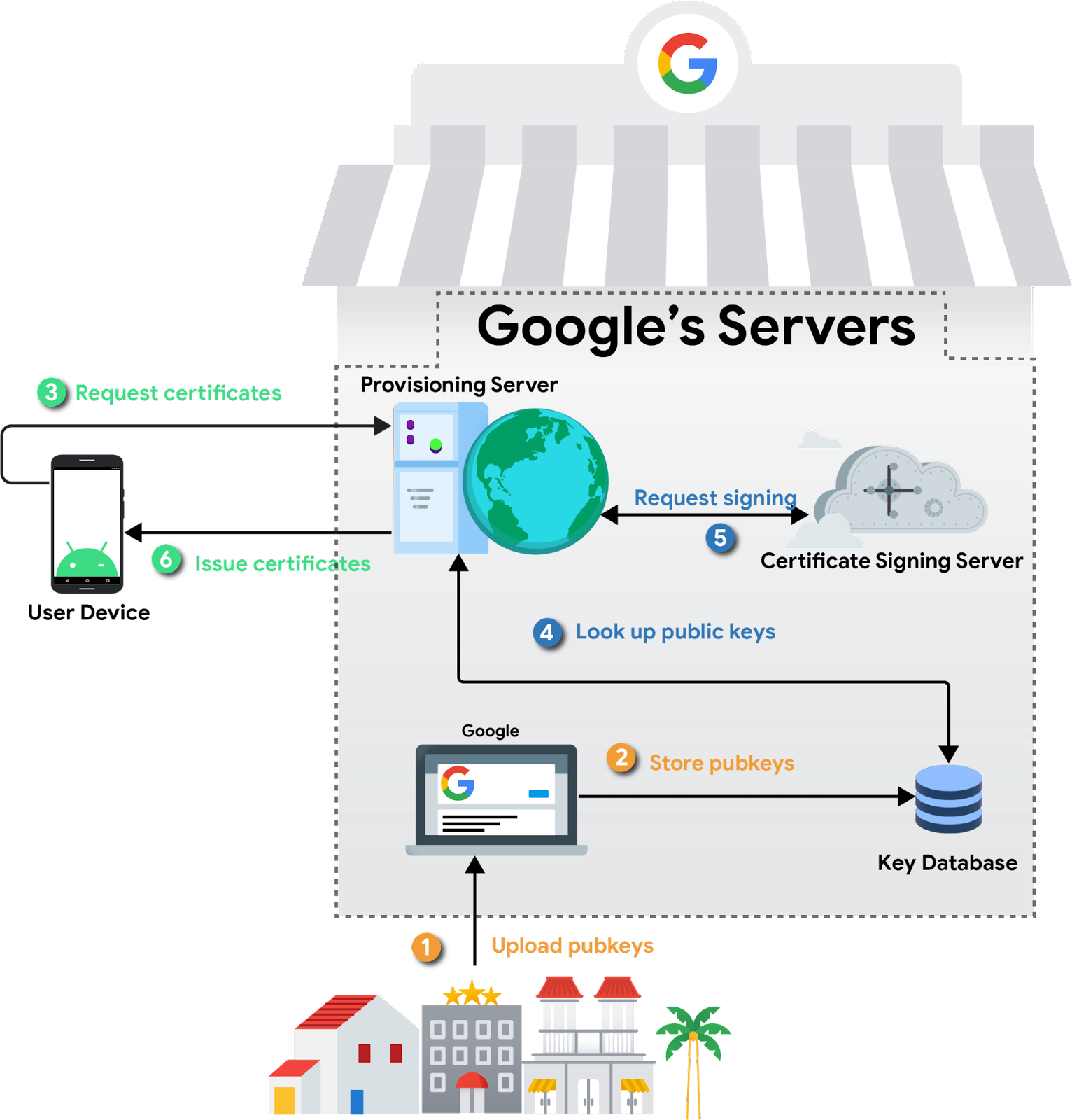

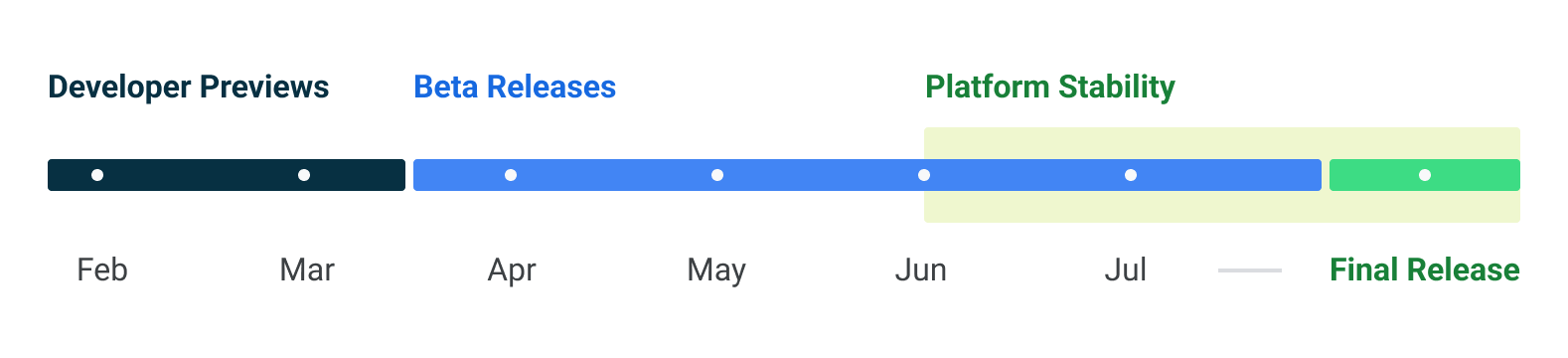

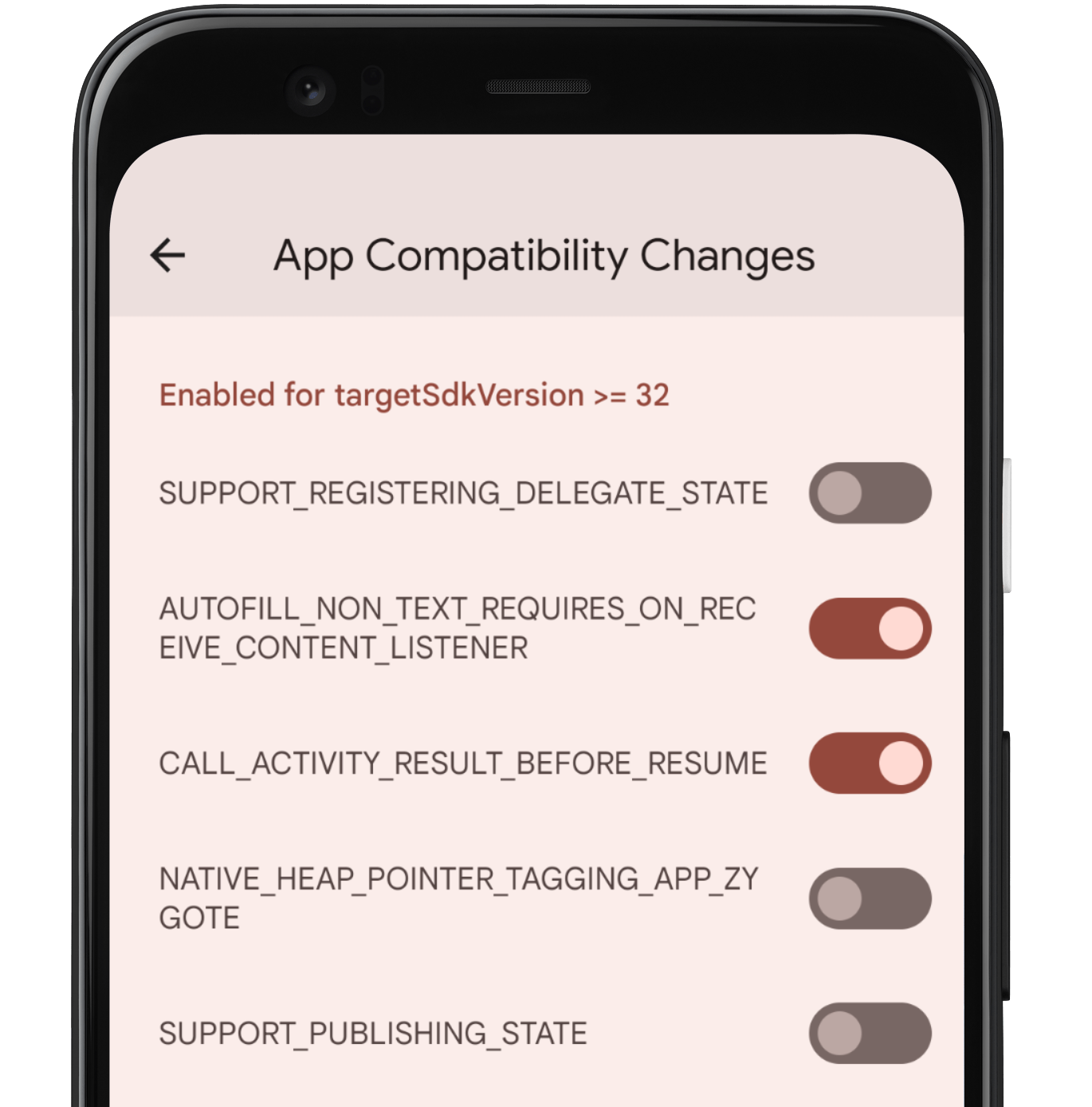

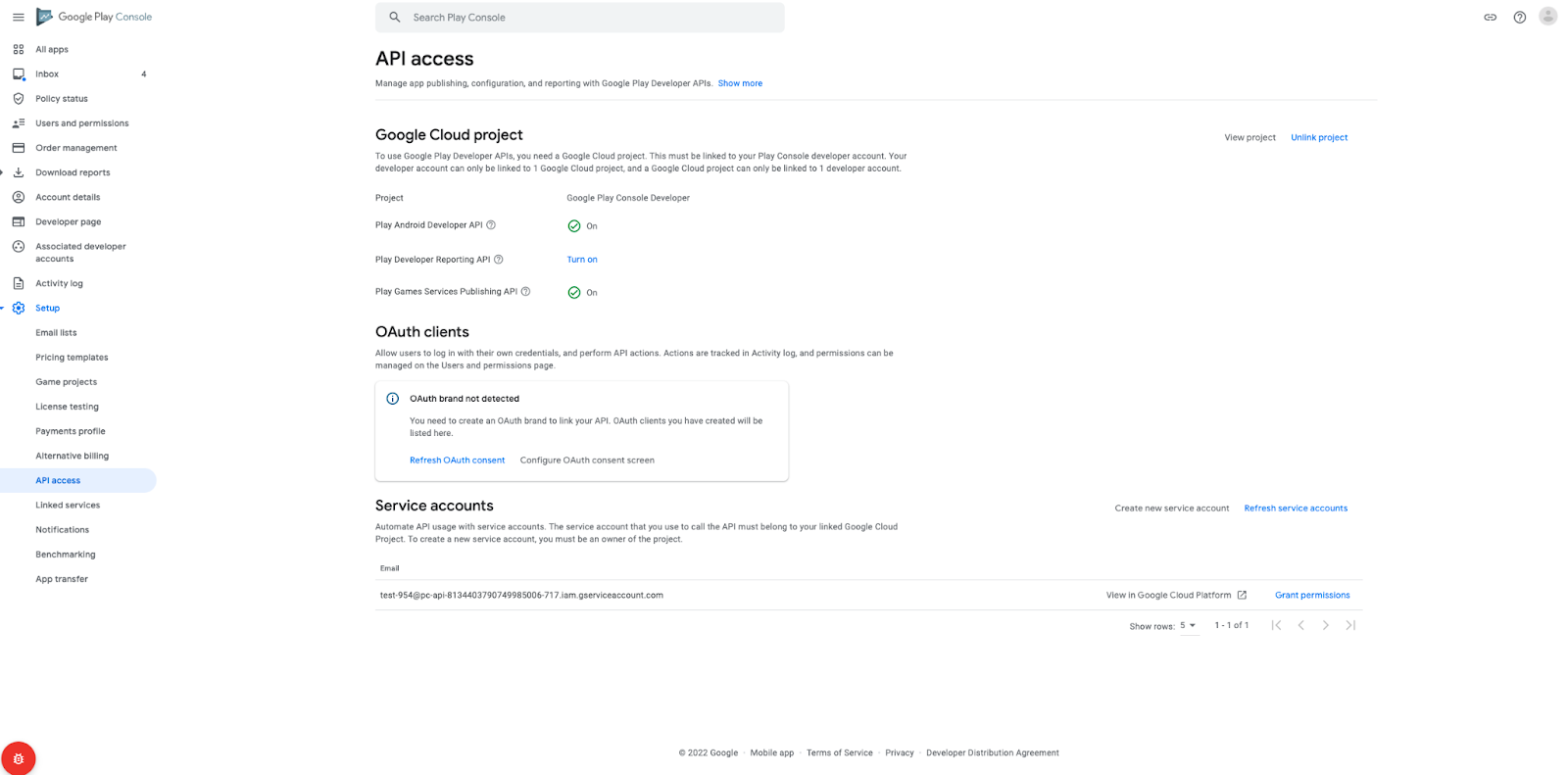

Android vitals is just one of the many tools in the Android ecosystem designed to help understand and improve app startup time and overall performance. Another complementary tool, Jetpack Macrobenchmark, can help provide intelligence during development and testing on a variety of metrics. In contrast to Android vitals, which provides data from real users’ devices, Macrobenchmark allows you to benchmark and test specific areas of your code locally, including app startup time.

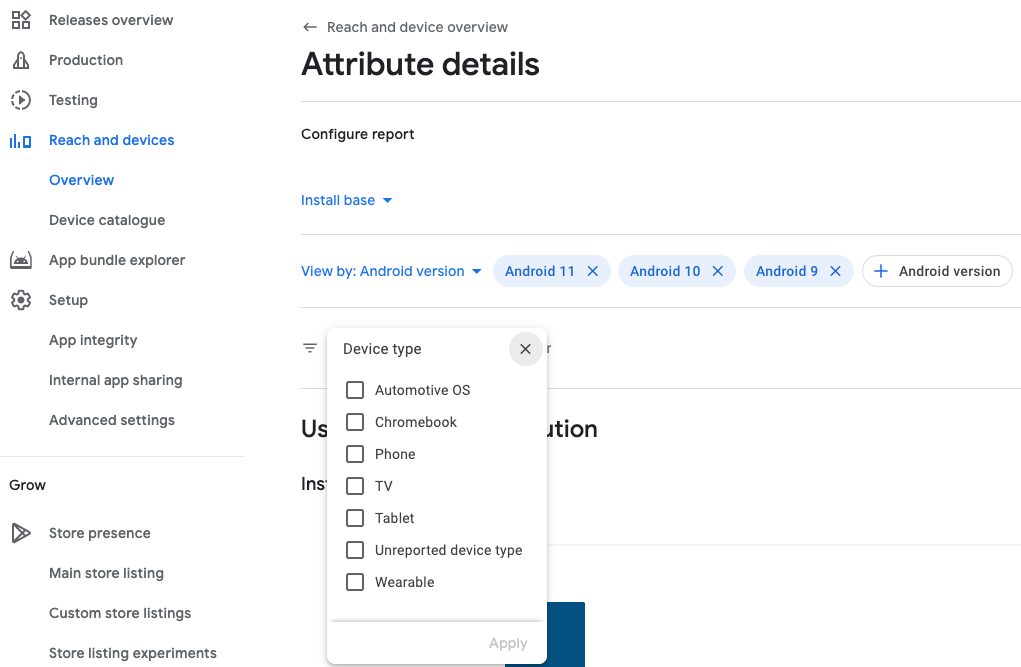

The Jetpack App Startup library provides a straightforward, performant way to initialize components at application startup. Developers can use this library to streamline startup sequences and explicitly set the order of initialization. Meanwhile, Reach and devices, a tool in Google Play Console, can help you understand your user and issue distribution so you can make better decisions about which specs to build for, where to launch, and what to test. The data from the tool allows your team to prioritize quality efforts and determine where improvements will have the greatest impact for the most users. Perfetto is another invaluable asset: an open-source system tracing tool that you can use to instrument your code and diagnose startup problems. In concert, these tools can help you keep your app running smoothly, your users happy, and your whole organization supportive of your quality efforts.

If you’re interested in getting your own team on board for the pursuit of App Excellence (or in joining Lyft), check out our condensed case study for product owners and executives linked here.