Mirroring this trend in software, cloud providers and users are increasingly looking at building their servers using open source hardware, collaborating in initiatives such as the Open Compute Project or OpenPOWER.

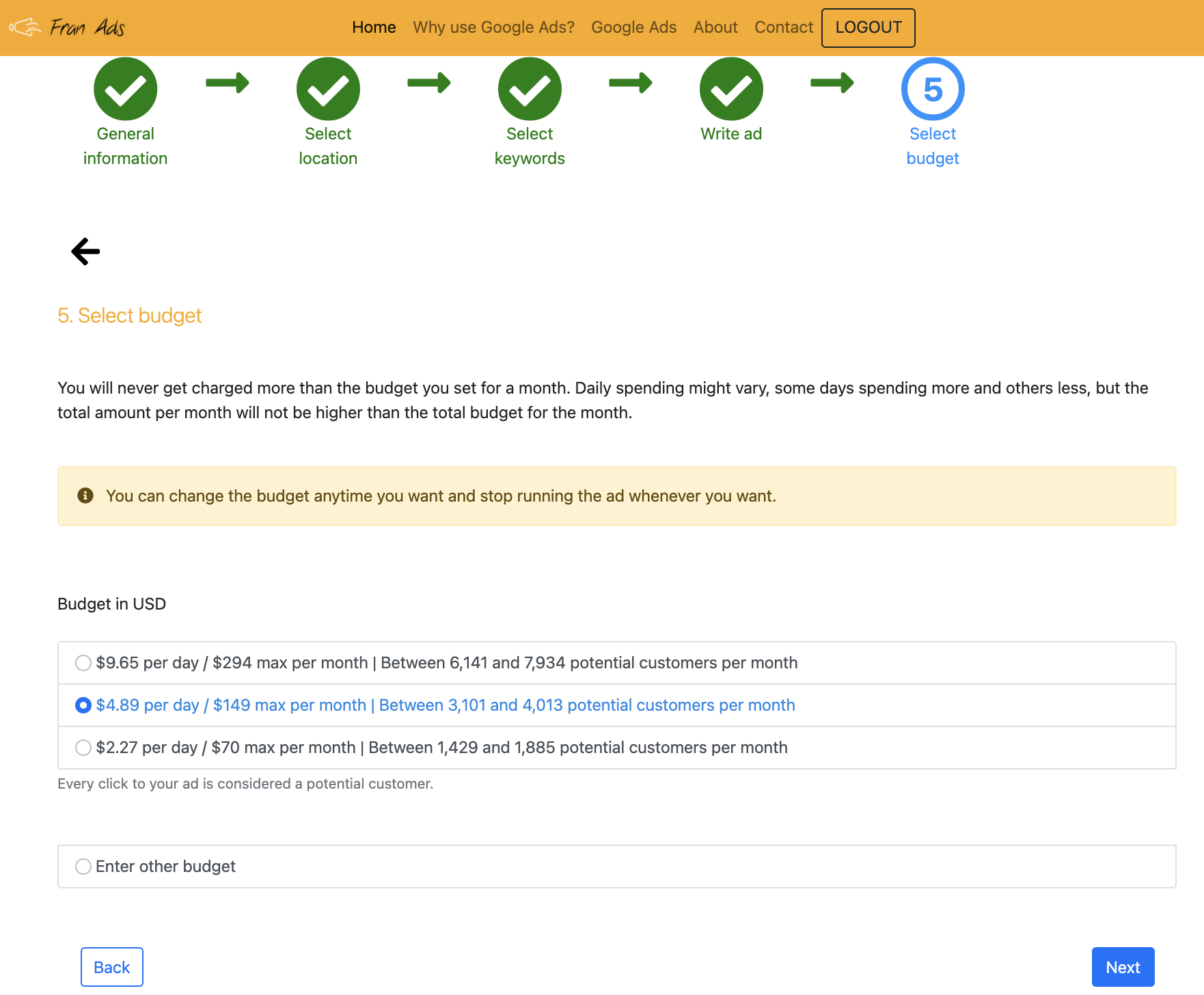

With Google, Antmicro has developed two open source hardware FPGA-based Baseboard Management Controller (BMC) platforms compliant with OCP’s DC-SCM ver 1.0 specification to help increase the security, configurability and flexibility of server management and monitoring infrastructure. These have since been adopted by OpenPOWER’s LibreBMC workgroup as the base hardware platform.

The DC-SCM spec

The Datacenter-ready Secure Control Module (DC-SCM) specification aims to move common server management, security and control features from a typical motherboard into a module designed in a normalized form factor, which can be used across various datacenter platforms.

Currently rolling out in the first DC-SCM-compliant servers, the spec will help cloud providers share costs and risks and increase reuse of the critical BMC component. Coupling it with a fully open source implementation based on popular, inexpensive FPGA platforms will not only allow for more configurability and tighter integration between hardware and software, but also tap into the momentum behind the broader open source hardware community via groups like CHIPS Alliance, OpenPOWER and RISC-V.

The hardware

Antmicro has developed two implementations of the DC-SCM-compatible BMC. Both designs meet the Open Compute Project specification for a Horizontal Form Factor 90x120 mm DC-SCM ver 1.0.

The BMC’s role is central to the server’s faultless operation: it is responsible for monitoring the system while preventing and mitigating failures, essentially acting as an external watchdog.
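To make the "external watchdog" idea concrete, here is a minimal sketch in Python. It is not taken from the DC-SCM boards, their gateware or OpenBMC; the `HeartbeatWatchdog` class, the `poll` helper and the timeout value are all hypothetical names chosen for illustration. It only shows the general pattern: the monitored host reports in periodically, and the monitor flags it for recovery once it has been silent for too long.

```python
from dataclasses import dataclass


@dataclass
class HeartbeatWatchdog:
    """Hypothetical host-heartbeat watchdog; not taken from any BMC firmware."""

    timeout_s: float          # assumed grace period between heartbeats
    last_beat_s: float = 0.0  # timestamp of the most recent heartbeat

    def beat(self, now_s: float) -> None:
        # The monitored host reports that it is still alive.
        self.last_beat_s = now_s

    def expired(self, now_s: float) -> bool:
        # True once the host has been silent for longer than the timeout.
        return now_s - self.last_beat_s > self.timeout_s


def poll(watchdog: HeartbeatWatchdog, now_s: float) -> str:
    # Decide what the monitor should do at this instant.
    return "recover" if watchdog.expired(now_s) else "ok"
```

Timestamps are passed in explicitly rather than read from a clock, which keeps the sketch deterministic and easy to test. A real BMC would tie the "recover" outcome to an action such as a host reset or an alert.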

To provide this functionality in line with the specification’s requirements, the module offers a feature-packed Secure Control Interface for communication with the host platform, including:

- PCI Express

- USB

- QSPI

- SGPIO

- NCSI

- multiple I2C, I3C and UART channels

One of our designs is based on Xilinx Artix-7, whereas the other one features a Lattice ECP5. Both are low-cost, open source friendly FPGAs supported by the open source F4PGA toolchain project.

The FPGA is complemented by 512 MB of DDR3 memory, 16 GB of eMMC flash, as well as a dedicated Gigabit Ethernet interface. To ensure the security of the Datacenter Control Module, external cryptographic modules, namely a Root of Trust (RoT) and a Trusted Platform Module (TPM), can be connected. This will allow future integration with open hardware Root of Trust projects such as OpenTitan, as well as the implementation of various boot flow and authentication approaches.

Use in LibreBMC / OpenPOWER

Open source, configurable hardware platforms based on FPGAs, open tooling and standards can make BMCs more flexible for tomorrow’s challenges. Antmicro’s DC-SCM boards have been adopted by the LibreBMC Workgroup, operating under the umbrella of the OpenPOWER Foundation, in a push to build a complete, fully transparent BMC solution. The workgroup, with participation from IBM, Google and Antmicro, among others, will work on creating the FPGA gateware and software needed to make the hardware fully operational in real-world server solutions.

Variants involving both Linux (in its default open source BMC distribution, OpenBMC) and Zephyr RTOS, as well as both POWER and RISC-V cores, are planned. Thanks to the flexibility of FPGAs, all of those options will be just one gateware update away. Of course, both the gateware and software will be open source as well.

If you’re looking to develop a secure and transparent DC-SCM spec-compatible BMC solution, reach out to [email protected]. See how you can collaborate with partners such as Antmicro, Google, and IBM around the open source FPGA-based hardware platform.

By Peter Katarzynski – Antmicro
