Time series forecasting is an important research area for machine learning (ML), particularly in industries where accurate forecasts are critical, such as retail, supply chain, energy, and finance. For example, in the consumer goods domain, improving the accuracy of demand forecasting by 10-20% can reduce inventory by 5% and increase revenue by 2-3%. Current ML-based forecasting solutions are usually built by experts and require significant manual effort, including model construction, feature engineering, and hyperparameter tuning. However, such expertise may not be broadly available, which can limit the benefits of applying ML to time series forecasting challenges.

Automated machine learning (AutoML) addresses this by automating the process of creating ML models, which makes ML more widely accessible. It has recently accelerated both ML research and the application of ML to real-world problems. For example, the initial work on neural architecture search enabled breakthroughs in computer vision, such as NASNet, AmoebaNet, and EfficientNet, and in natural language processing, such as Evolved Transformer. More recently, AutoML has also been applied to tabular data.

Today we introduce a scalable end-to-end AutoML solution for time series forecasting, which meets three key criteria:

- Fully automated: The solution takes in data as input, and produces a servable TensorFlow model as output with no human intervention.

- Generic: The solution works for most time series forecasting tasks and automatically searches for the best model configuration for each task.

- High-quality: The produced models have competitive quality compared to those manually crafted for specific tasks.

We demonstrate the success of this approach through participation in the M5 forecasting competition, where this AutoML solution achieved competitive performance against hand-crafted models with moderate compute cost.

Challenges in Time Series Forecasting

Time series forecasting presents several challenges to machine learning models. First, the uncertainty is often high, since the goal is to predict the future based on historical data. Unlike in many other machine learning problems, the test set (for example, future product sales) might have a different distribution from the training and validation sets, which are extracted from the historical data. Second, real-world time series data often suffers from missing values and high intermittency (i.e., a high fraction of the values in the time series are zero). Some time series tasks may have no historical data available at all and suffer from the cold start problem, for example, when predicting the sales of a new product. Third, since we aim to build a fully automated generic solution, the same solution needs to apply to a variety of datasets, which can vary significantly in the domain (product sales, web traffic, etc.), the granularity (daily, hourly, etc.), the history length, the types of features (categorical, numerical, date time, etc.), and so on.
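To make the notions of missing data and intermittency concrete, here is a minimal Python sketch that measures both on a toy series. The function names and the convention of marking a missing time step with `None` are assumptions made for illustration and are not part of the solution described in this post.

```python
from typing import Optional, Sequence


def intermittency(series: Sequence[Optional[float]]) -> float:
    """Fraction of observed (non-missing) time steps whose value is zero."""
    observed = [v for v in series if v is not None]
    if not observed:
        return 0.0
    return sum(1 for v in observed if v == 0) / len(observed)


def missing_fraction(series: Sequence[Optional[float]]) -> float:
    """Fraction of time steps with no recorded value (marked as None)."""
    if not series:
        return 0.0
    return sum(1 for v in series if v is None) / len(series)


# Toy daily sales for one product; None marks a day with no record.
daily_sales = [0, 0, 3, None, 0, 1, 0, None, 0, 2]

print(intermittency(daily_sales))     # 5 zeros out of 8 observed days
print(missing_fraction(daily_sales))  # 2 missing days out of 10
```

A series like this one, where most observed values are zero, is the kind of case where predicting a single value per time step tends to work poorly.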

An AutoML Solution

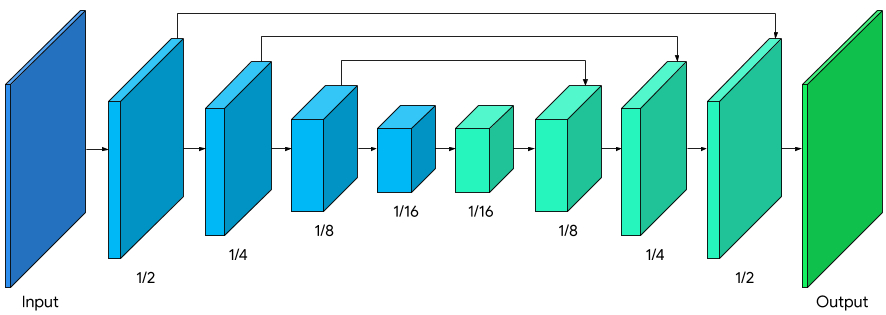

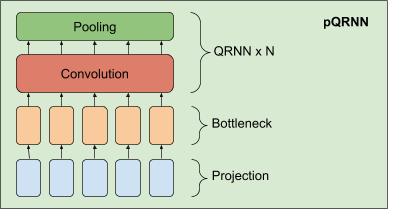

To tackle these challenges, we designed an end-to-end TensorFlow pipeline with a specialized search space for time series forecasting. It is based on an encoder-decoder architecture, in which an encoder transforms the historical information in a time series into a set of vectors, and a decoder generates the future predictions based on these vectors. Inspired by state-of-the-art sequence models, such as Transformer and WaveNet, and by best practices in time series forecasting, our search space includes components such as attention, dilated convolution, gating, skip connections, and different feature transformations. The resulting AutoML solution searches for the best combination of these components as well as core hyperparameters.
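The idea of searching over combinations of components and hyperparameters can be sketched in a few lines of Python. Everything below is hypothetical: the option names and values in `SEARCH_SPACE` are illustrative, plain random search stands in for whatever search algorithm the actual system uses (the post does not specify one), and `toy_validation_loss` is a placeholder for training a model and measuring its validation loss.

```python
import random

# Hypothetical search space; names and values are illustrative only.
SEARCH_SPACE = {
    "encoder": ["attention", "dilated_conv"],
    "use_gating": [True, False],
    "use_skip_connections": [True, False],
    "feature_transform": ["none", "log1p", "standardize"],
    "hidden_size": [32, 64, 128],
    "learning_rate": [1e-2, 1e-3, 1e-4],
}


def sample_config(rng):
    """Pick one option for every dimension of the search space."""
    return {name: rng.choice(options) for name, options in SEARCH_SPACE.items()}


def random_search(evaluate, num_trials, seed=0):
    """Evaluate random configurations; return (loss, config) pairs, best first."""
    rng = random.Random(seed)
    trials = []
    for _ in range(num_trials):
        config = sample_config(rng)
        trials.append((evaluate(config), config))
    trials.sort(key=lambda trial: trial[0])  # lower validation loss is better
    return trials


def toy_validation_loss(config):
    """Placeholder for 'train a model with this config and validate it'."""
    return abs(config["hidden_size"] - 64) / 64 + config["learning_rate"]


ranked = random_search(toy_validation_loss, num_trials=20)
top_models = ranked[:3]  # candidates that could later be ensembled
```

Keeping the search space as plain data makes it easy to add or remove components without touching the search loop.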

To combat the uncertainty in predicting the future of a time series, an ensemble of the top models discovered in the search is used to make final predictions. The diversity among the top models makes the predictions more robust to uncertainty and less prone to overfitting the historical data. To handle time series with missing data, we fill in the gaps with a trainable vector and let the model learn to adapt to the missing time steps. To address intermittency, we predict, for each future time step, not only the value, but also the probability that the value at this time step is non-zero, and combine the two predictions. Finally, we found that the automated search is able to adjust the architecture and hyperparameter choices for different datasets, which makes the AutoML solution generic and automates the modeling efforts.
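The following Python sketch illustrates two of these ideas under explicit assumptions. The post does not say how the value and the non-zero probability are combined, nor how the ensemble members are aggregated; here we assume a simple product (the expected value if the first prediction is read as "value given non-zero") and an unweighted mean across models. The function names are hypothetical.

```python
from statistics import fmean


def combine_value_and_nonzero_prob(values, nonzero_probs):
    """Assumed combination rule: forecast = P(non-zero) * predicted value."""
    if len(values) != len(nonzero_probs):
        raise ValueError("values and nonzero_probs must have the same length")
    return [v * p for v, p in zip(values, nonzero_probs)]


def ensemble_mean(forecasts):
    """Assumed aggregation rule: unweighted mean over models, per time step."""
    horizon = len(forecasts[0])
    if any(len(f) != horizon for f in forecasts):
        raise ValueError("all forecasts must cover the same horizon")
    return [fmean(step) for step in zip(*forecasts)]


# Two toy models, each predicting a value and a non-zero probability
# for three future time steps.
model_a = combine_value_and_nonzero_prob([4.0, 2.0, 6.0], [0.5, 0.25, 1.0])
model_b = combine_value_and_nonzero_prob([2.0, 4.0, 2.0], [1.0, 0.5, 0.5])

final_forecast = ensemble_mean([model_a, model_b])
print(final_forecast)  # [2.0, 1.25, 3.5]
```

With the product rule, a time step that a model considers unlikely to be non-zero is pulled toward zero even when its predicted value is large, which suits intermittent series.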

Benchmarking in Forecasting Competitions

To benchmark our AutoML solution, we participated in the M5 forecasting competition, the latest in the M-competition series. The series is one of the most important competitions in the forecasting community, with a history spanning nearly 40 years. This most recent competition was hosted on Kaggle and used a dataset from Walmart product sales, the real-world nature of which makes the problem quite challenging.

We participated in the competition with our fully automated solution and achieved a rank of 138 out of 5558 participants (top 2.5%) on the final leaderboard, which is in the silver medal zone. Participants in the competition had almost four months to produce their models. While many of the competitive forecasting models required months of manual effort to create, our AutoML solution found the model in a short time with only a moderate compute cost (500 CPUs for 2 hours) and no human intervention.

We also benchmarked our AutoML forecasting solution on several other Kaggle datasets and found that on average it outperforms 92% of hand-crafted models, despite its limited resource use.

Evaluation of the AutoML Forecasting solution on other Kaggle datasets (Rossmann Store Sales, Web Traffic, Favorita Grocery Sales) besides M5.

This work demonstrates the strength of an end-to-end AutoML solution for time series forecasting, and we are excited about its potential impact on real-world applications.

Acknowledgements

This project was a joint effort of Google Brain team members Chen Liang, Da Huang, Yifeng Lu and Quoc V. Le. We also thank Junwei Yuan, Xingwei Yang, Dawei Jia, Chenyu Zhao, Tin-yun Ho, Meng Wang, Yaguang Li, Nicolas Loeff, Manish Kurse, Kyle Anderson and Nishant Patil for their collaboration.