Posted by Matthew McCullough, Vice President, Product Management, Android Developer

Just now, we kicked off the first day of Android Dev Summit in the Bay Area, where my team and I covered a number of ways we’re helping you build excellent experiences for users with Modern Android Development. It can also help you extend those apps across the many devices Android has to offer, on every screen size from the one on your wrist to large screens like tablets and foldables.

Here’s a recap of what we covered, and don’t forget to watch the full keynote!

Modern Android Development: Compose October ‘22

A few years ago, we introduced a set of libraries, tools, services, and guidance we call Modern Android Development, or MAD. With Android Studio, Kotlin, Jetpack libraries, and powerful Google & Play Services, our goal is to make it faster and easier for you to build high quality apps across all Android devices.

For building rich, beautiful UIs, we introduced Jetpack Compose several years ago. It is our recommended UI framework for new Android applications.

We’re introducing a Gradle Bill of Materials (BOM) that specifies the stable version of each Compose library. The first BOM release, Compose October ’22, contains Material Design 3 components, lazy staggered grids, variable fonts, pull to refresh, snapping in lazy lists, draw text in canvas, URL annotations in text, hyphenation, and LookAheadLayout. The team at Lyft has benefited from using Compose. They shared: “Over 90% of all new feature code is now developed in Compose.”
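To illustrate how a BOM is typically consumed, here is a minimal sketch of a module-level `build.gradle.kts`. It assumes the Kotlin Gradle DSL and that `2022.10.00` is the BOM version matching Compose October ’22; the particular Compose libraries listed are only examples, not a recommended set.

```kotlin
// Module-level build.gradle.kts (sketch; the library selection is illustrative)
dependencies {
    // Import the Compose BOM as a platform. The version below is assumed
    // to correspond to the Compose October '22 release.
    implementation(platform("androidx.compose:compose-bom:2022.10.00"))

    // Compose libraries are declared without versions;
    // the BOM supplies a stable version for each one.
    implementation("androidx.compose.ui:ui")
    implementation("androidx.compose.foundation:foundation")
    implementation("androidx.compose.material3:material3")
}
```

With this setup, moving to a newer set of stable Compose libraries should only require changing the single BOM version.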

Modern Android Development comes to life in Android Studio, our official IDE that provides powerful tools for building apps on every type of Android device. Today, we’re releasing a number of new features for you to test out, including updated templates that are Compose by default and feature Material 3, Live Edit on by default for Compose, Composition Tracing, Android SDK Upgrade Assistant, App Quality Insights improvements, and more. Download the latest preview version of Android Studio Flamingo to try out all the features and to give us feedback.


Wear OS: the time is now!

A key device that users are turning to is the smallest and most personal: the watch. We launched our joint platform, Wear OS, with Samsung just last year, and this year we have seen 3X as many device activations, with amazing new devices hitting the market, like Samsung Galaxy Watch 5 and Google Pixel Watch. Compose for Wear OS, which makes it faster and easier to build apps for Wear OS, went to 1.0 this summer, and is our recommended approach for building user interfaces for Wear OS apps. It offers more than 20 UI components specifically designed for wearables, with built-in material theming and accessibility.

Today, we’re sharing updated templates for Wear OS in Android Studio, as well as a stable Android R emulator system image for Wear OS.

With personalized data from a wearable, it’s important to keep the data completely private and safe, which is why we’ve been working on a solution to make this easier – Health Connect. It’s an API that we built in close collaboration with Samsung for storing and sharing health data - all with a single place for users to easily manage permissions.

Developers who invest in Wear OS are seeing big results: Todoist increased their install growth rate by 50% since rebuilding their app for Wear 3, and Outdooractive reduced development time by 30% using Compose for Wear OS. Now is the time to bring a unique, engaging experience to your users on Wear OS!

Making your app work great on tablets & large screens

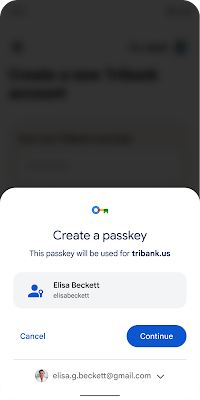

As you heard earlier this year: Google is all in on tablets, foldables, and ChromeOS. With amazing new hardware like Samsung Galaxy Z Fold4, Lenovo P12 Tab Pro, and Google’s upcoming Pixel Tablet, there has never been a better time to review your apps and get them ready for large screens. We’ve been hard at work, with updates to Android, improved Google apps, and exciting changes to the Play Store that make optimized tablet apps more discoverable.

We’ve made it easier than ever to test your app on large screens in Android Studio Electric Eel, including resizable and desktop emulators and visual linting to help you adhere to best practices on any sized screen.

We’ve also heard that we can help you by providing more design and layout guidance for these devices. To that end, today we added new layout guidance for apps by vertical to developer.android.com, as well as developer guidance for canonical layouts with samples.

Apps that invest in large screen features are seeing that work pay off when it comes to engagement. Take Concepts, which enables amazing stylus interactions like drawing and shape guides for ChromeOS and stylus devices, and saw 70% higher usage on tablets compared to phones!

Be on the lookout for more updates on our improvements to Android Studio, Window Manager Jetpack, and more with the Form Factors track, broadcast live on November 9.

Making it easier to take advantage of platform features in Android 13

At the heart of a successful platform is the operating system, and Android 13, released in August, brings developer enhancements to many facets of the platform, including personalization, privacy, security, connectivity, and media.

For example, per-app language preferences improve the experience for multilingual users, allowing people to experience their device in different languages in different contexts.
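As an illustration of per-app language preferences, here is a minimal sketch of setting an app's language from code. It assumes the app depends on AndroidX AppCompat 1.6.0 or later, which provides `AppCompatDelegate.setApplicationLocales`; the helper names `setAppLanguage` and `resetAppLanguage` are hypothetical.

```kotlin
import androidx.appcompat.app.AppCompatDelegate
import androidx.core.os.LocaleListCompat

// Hypothetical helper: switches only this app to the given
// BCP 47 language tag, for example "fr-FR".
fun setAppLanguage(languageTag: String) {
    val locales = LocaleListCompat.forLanguageTags(languageTag)
    AppCompatDelegate.setApplicationLocales(locales)
}

// Hypothetical helper: clears the per-app override so the app
// follows the system language again.
fun resetAppLanguage() {
    AppCompatDelegate.setApplicationLocales(LocaleListCompat.getEmptyLocaleList())
}
```

An in-app language picker could call `setAppLanguage("es-ES")` when the user selects Spanish. On Android 13, users can also change an app's language from system settings.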

The new Photo picker is a permission-free way to let the user browse and select the photos and videos they explicitly want to share with your app, and a great example of how Android is focused on privacy.
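One way to launch the Photo picker is through the AndroidX Activity result contracts. The sketch below assumes a dependency on `androidx.activity` 1.6.0 or later; the class `PickerActivity` and the method `handlePickedMedia` are hypothetical names used for illustration.

```kotlin
import android.net.Uri
import androidx.activity.ComponentActivity
import androidx.activity.result.PickVisualMediaRequest
import androidx.activity.result.contract.ActivityResultContracts

class PickerActivity : ComponentActivity() {

    // Register the launcher up front. The callback receives the URI of the
    // selected item, or null if the user closed the picker without choosing.
    private val pickMedia =
        registerForActivityResult(ActivityResultContracts.PickVisualMedia()) { uri: Uri? ->
            if (uri != null) handlePickedMedia(uri)
        }

    // Call this from a click handler to open the picker for images and videos.
    // No storage permission is requested here.
    fun openPicker() {
        pickMedia.launch(
            PickVisualMediaRequest(ActivityResultContracts.PickVisualMedia.ImageAndVideo)
        )
    }

    // Hypothetical placeholder for app-specific handling of the result.
    private fun handlePickedMedia(uri: Uri) {
        // For example, load the media at this URI into the UI.
    }
}
```

The app only receives access to the item the user picked, which is what makes this flow permission-free.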

To help you target new API levels, we're introducing the Android SDK Upgrade Assistant tool within the latest preview of Android Studio Flamingo, which gives you step-by-step documentation for the most important changes to look for when updating the target SDK of your app.

These are just a few examples of how we're making it easier than ever to adapt your app to platform changes, while enabling you to take advantage of the latest features Android has to offer.

Connecting with you around the world at Android Dev Summit

This is just the first day of Android Dev Summit, where we kicked off with the keynote and dove into our first track on Modern Android Development, and we’ve still got a lot more over the coming weeks. Tune in on November 9, when we livestream our next track: Form Factors. Our final technical track will be Platform, livestreamed on November 14.

If you’ve got a burning question, tweet us using #AskAndroid; we’ll be wrapping up each track livestream with a live Q&A from the team, so you can tune in and hear your question answered live.

This year, we’re also really excited to get the opportunity to meet with developers around the world in person, including today in the Bay Area. On November 9, Android Dev Summit moves to London. And the fun will continue in Asia in December with more roadshow stops: in Tokyo on December 16 (more details to come) at Android Dev Summit with Google DevFest, and in Bangalore in mid-December (you can express interest to join here).

Whether you’re tuning in online or joining us in person around the world, it’s feedback from developers like you that helps us make Android a better platform. We thank you for the opportunity to work together with you, building excellent apps and delighting users across all of the different devices Android has to offer. Enjoy your 2022 Android Dev Summit!
