By Mykola Komarevskyy, Product Manager, Google Compute Engine

Containers are a popular way to deploy software thanks to their lightweight size and resource requirements, dependency isolation and portability. Today, we’re introducing an easy way to deploy and run containers on Google Compute Engine virtual machines and managed instance groups. This feature, which is currently in beta, allows you to take advantage of container deployment consistency while staying in your familiar IaaS environment.

Now you can easily deploy containers wherever you may need them on Google Cloud:

- Google Kubernetes Engine for multi-workload, microservice-friendly container orchestration,
- Google App Engine flexible environment, a fully managed application platform, and now
- Compute Engine for VM-level container deployment.

Running containers on Compute Engine instances is handy in a number of scenarios: when you need to optimize the CI/CD pipeline for applications running on VMs, fine-tune the VM shape and infrastructure configuration for a specialized workload, integrate a containerized application into your existing IaaS infrastructure, or launch a one-off instance of an application.

To run your container on a VM instance or a managed instance group, simply provide an image name and specify your container runtime options when creating a VM or an instance template. Compute Engine takes care of the rest, including supplying an up-to-date Container-Optimized OS image with Docker and starting the container with your runtime options when the VM boots.
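As a rough illustration of what "runtime options" look like on the command line, the sketch below wraps `gcloud beta compute instances create-with-container` in a small shell function. The function name `create_nginx_vm`, the `APP_ENV=demo` variable and the `/data` and `/tmp/nginx-data` paths are hypothetical placeholders. We assume `--container-env`, `--container-restart-policy` and `--container-mount-host-path` are the flags for environment variables, restart behavior and volume mounts, so check `gcloud beta compute instances create-with-container --help` for your SDK version.

```shell
#!/usr/bin/env bash
set -euo pipefail

# create_nginx_vm NAME: hypothetical wrapper that creates a VM running the
# NGINX container with a few example runtime options.
create_nginx_vm() {
  local name="$1"
  # APP_ENV=demo and the /data <- /tmp/nginx-data mount are illustrative only;
  # NGINX itself does not read them.
  gcloud beta compute instances create-with-container "$name" \
    --container-image gcr.io/cloud-marketplace/google/nginx1:1.12 \
    --container-env APP_ENV=demo \
    --container-restart-policy always \
    --container-mount-host-path mount-path=/data,host-path=/tmp/nginx-data,mode=rw \
    --tags http-server
}
```

Calling `create_nginx_vm nginx-vm` would then create the VM, assuming a default project and zone are set in your gcloud configuration.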

You can now easily use containers without having to write startup scripts or learn about container orchestration tools, and you can migrate to full container orchestration with Kubernetes Engine when you’re ready. Better yet, standard Compute Engine pricing applies: VM instances running containers cost the same as regular VMs.

How to deploy a container to a VM

To see the new container deployment method in action, let’s deploy an NGINX HTTP server to a virtual machine. To do this, you only need to configure three settings when creating a new instance:

- Check Deploy a container image to this VM instance.

- Provide Container image name.

- Check Allow HTTP traffic so that the VM instance can receive HTTP requests on port 80.

Here's how the flow looks in Google Cloud Console:

Run a container from the gcloud command line

You can run a container on a VM instance with just one gcloud command:

```shell
gcloud beta compute instances create-with-container nginx-vm \
  --container-image gcr.io/cloud-marketplace/google/nginx1:1.12 \
  --tags http-server
```

Then, create a firewall rule to allow HTTP traffic to the VM instance so that you can see the NGINX welcome page:

```shell
gcloud compute firewall-rules create allow-http \
  --allow=tcp:80 --target-tags=http-server
```
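Once the firewall rule is in place, one way to check the deployment from your workstation is to look up the VM's external IP and fetch the page. This sketch assumes `curl` is installed, a default zone is configured, and the VM's external IP sits on its first network interface. The helper names `nginx_vm_url` and `check_nginx`, and matching on the text "Welcome to nginx", are our own assumptions rather than part of the feature.

```shell
#!/usr/bin/env bash
set -euo pipefail

# nginx_vm_url NAME: print http://EXTERNAL_IP/ for the VM, read from the
# first access config of its first network interface.
nginx_vm_url() {
  local ip
  ip="$(gcloud compute instances describe "$1" \
    --format='get(networkInterfaces[0].accessConfigs[0].natIP)')"
  printf 'http://%s/\n' "$ip"
}

# check_nginx NAME: succeed only if the page served by the VM looks like the
# default NGINX welcome page. The body is captured first so that grep -q
# exiting early cannot cause a SIGPIPE failure in curl.
check_nginx() {
  local body
  body="$(curl -fsS "$(nginx_vm_url "$1")")"
  grep -qi 'welcome to nginx' <<<"$body"
}
```

For example, `check_nginx nginx-vm && echo 'NGINX is up'`. A freshly created VM may need a minute or so to boot and pull the image before this succeeds.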

Updating the container is just as easy:

```shell
gcloud beta compute instances update-container nginx-vm \
  --container-image gcr.io/cloud-marketplace/google/nginx1:1.13
```

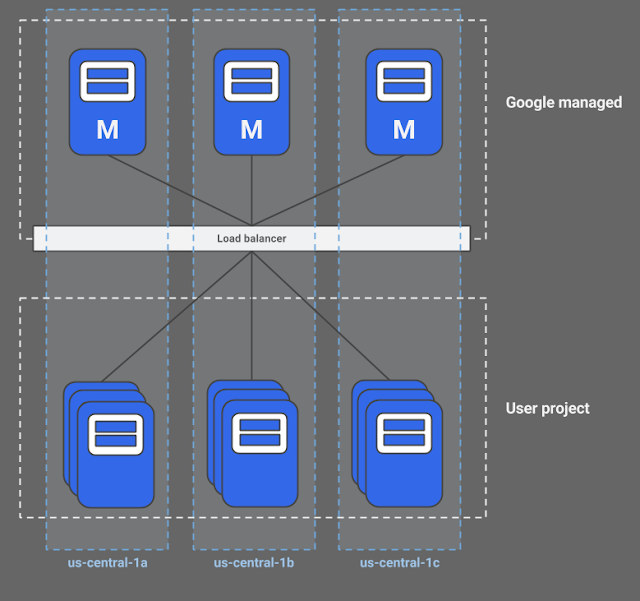

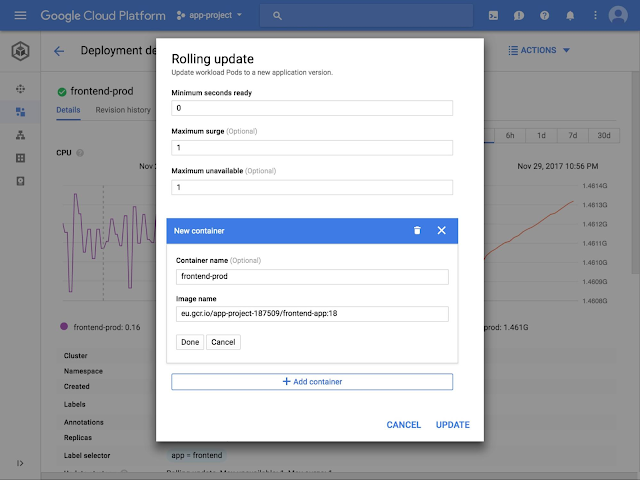

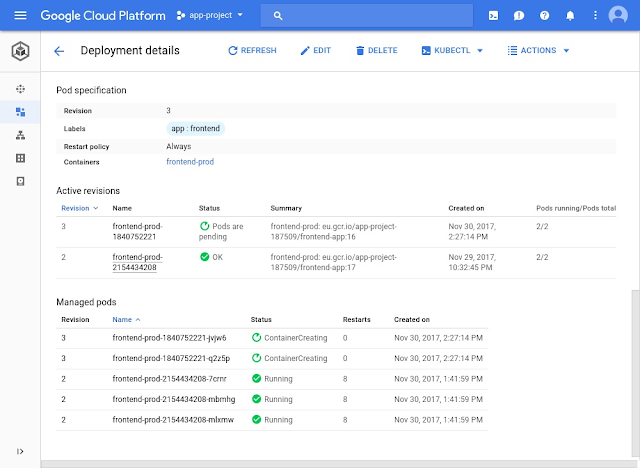

Run a container on a managed instance group

With managed instance groups, you can take advantage of VM-level features like autoscaling, automatic recreation of unhealthy virtual machines, rolling updates, multi-zone deployments and load balancing. Running containers on managed instance groups is just as easy as on individual VMs and takes only two steps: (1) create an instance template and (2) create a group.

Let’s deploy the same NGINX server to a managed instance group of three virtual machines.

Step 1: Create an instance template with a container.

```shell
gcloud beta compute instance-templates create-with-container nginx-it \
  --container-image gcr.io/cloud-marketplace/google/nginx1:1.12 \
  --tags http-server
```

With the allow-http firewall rule from the previous example in place, the http-server tag allows HTTP connections to port 80 on VMs created from this instance template, so make sure to keep that rule.

Step 2: Create a managed instance group.

```shell
gcloud compute instance-groups managed create nginx-mig \
  --template nginx-it \
  --size 3
```

The group will have three VM instances, each running the NGINX container.
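The group-level features mentioned earlier, such as autoscaling and rolling updates, are driven by separate commands. As a sketch, assuming a default zone is configured, the functions below turn on CPU-based autoscaling for `nginx-mig` and roll the group over to a new image by pointing it at a new container instance template. The function names, the template name `nginx-it-113`, and the 3 to 5 replica range at 60% CPU are illustrative choices, not recommendations.

```shell
#!/usr/bin/env bash
set -euo pipefail

# enable_autoscaling GROUP: keep between 3 and 5 VMs, targeting 60% average CPU.
enable_autoscaling() {
  gcloud compute instance-groups managed set-autoscaling "$1" \
    --min-num-replicas 3 \
    --max-num-replicas 5 \
    --target-cpu-utilization 0.6
}

# roll_out_image GROUP TEMPLATE IMAGE: create a new container instance template
# and start a rolling update that gradually replaces the group's VMs with it.
roll_out_image() {
  local group="$1" template="$2" image="$3"
  gcloud beta compute instance-templates create-with-container "$template" \
    --container-image "$image" \
    --tags http-server
  gcloud beta compute instance-groups managed rolling-action start-update "$group" \
    --version template="$template"
}
```

For example, `enable_autoscaling nginx-mig` followed by `roll_out_image nginx-mig nginx-it-113 gcr.io/cloud-marketplace/google/nginx1:1.13`.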

Get started!

Interested in deploying containers on Compute Engine VM instances or managed instance groups? Take a look at the detailed step-by-step instructions and learn how to configure a range of container runtime options, including environment variables, an entrypoint command with parameters, and volume mounts. Then, help us help you make using containers on Compute Engine even easier! Send your feedback, questions or requests to [email protected].

Sign up for Google Cloud today and get $300 in credits to try out running containers directly on Compute Engine instances.