Over the last few weeks, we've been discussing structured data: first providing best practices and then showing how to monitor it with Search Console. Today we are announcing support for FAQ and How-to structured data on Google Search and the Google Assistant, including new reports in Search Console to monitor how your site is performing.

In this post, we provide details to help you implement structured data on your FAQ and how-to pages so that they are eligible to appear on Google Search as rich results and on the Assistant as How-to Actions. We also show examples of how to monitor your search appearance with the new Search Console enhancement reports.

Disclaimer: Google does not guarantee that your structured data will show up in search results, even if your page is marked up correctly. To determine whether a result gets a rich treatment, Google's algorithms use a variety of additional signals so that users see rich results when that content best serves their needs. Learn more about structured data guidelines.

How-to on Search and the Google Assistant

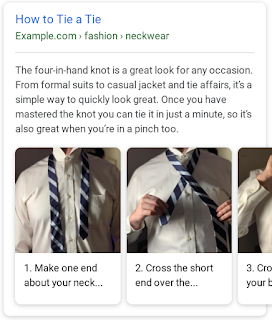

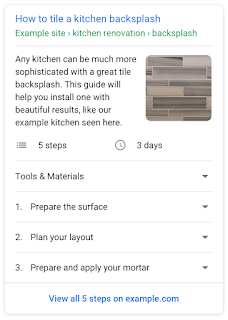

How-to rich results give users richer previews of web results that guide them through step-by-step tasks. For example, if your pages explain how to tile a kitchen backsplash, tie a tie, or build a treehouse, you can add How-to structured data so that the page can appear as a rich result on Search and as a How-to Action for the Assistant.

Add structured data to the steps, tools, duration, and other properties to make your content eligible for a How-to rich result on the search results page. If your page uses images or video for each step, mark up that visual content as well to enhance the preview and give users a more visual representation of your content. Learn more about the required and recommended properties you can use in your markup in the How-to developer documentation.
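As a rough illustration, the Python sketch below builds HowTo markup as JSON-LD using the schema.org types HowTo, HowToStep, HowToTool, and HowToSupply, with an ISO 8601 duration for totalTime and an optional image per step. The helper names (`build_howto_jsonld`, `to_script_tag`) and the example content are hypothetical, and the authoritative list of required and recommended properties is the How-to developer documentation, not this sketch.

```python
import json


def build_howto_jsonld(name, steps, tools=(), supplies=(), total_time=None, image=None):
    """Build a schema.org HowTo object as a plain dict (hypothetical helper).

    steps: list of (text, image_url) tuples; image_url may be None.
    total_time: ISO 8601 duration string, for example "PT30M".
    """
    data = {
        "@context": "https://schema.org",
        "@type": "HowTo",
        "name": name,
        "step": [],
    }
    for text, step_image in steps:
        step = {"@type": "HowToStep", "text": text}
        if step_image:
            # Per-step visual content so the preview can show each step's image.
            step["image"] = step_image
        data["step"].append(step)
    if tools:
        data["tool"] = [{"@type": "HowToTool", "name": t} for t in tools]
    if supplies:
        data["supply"] = [{"@type": "HowToSupply", "name": s} for s in supplies]
    if total_time:
        data["totalTime"] = total_time
    if image:
        data["image"] = image
    return data


def to_script_tag(data):
    """Serialize the dict into a JSON-LD <script> element for the page HTML."""
    # "<\/" is a valid JSON escape for "</", so a value containing "</script>"
    # cannot close the element early.
    body = json.dumps(data, indent=2).replace("</", "<\\/")
    return '<script type="application/ld+json">\n' + body + "\n</script>"


if __name__ == "__main__":
    howto = build_howto_jsonld(
        "How to tie a tie",  # placeholder content
        steps=[
            ("Drape the tie around your neck.", None),
            ("Cross the wide end over the narrow end.", "https://example.com/step2.jpg"),
        ],
        tools=["Mirror"],
        total_time="PT5M",
    )
    print(to_script_tag(howto))
```

Emitting the markup as a separate `application/ld+json` script keeps it independent of the visible HTML, and escaping `</` guards against step text that happens to contain a closing script tag.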

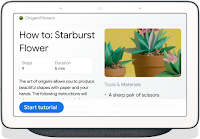

Your content can also start surfacing on the Assistant through new voice-guided experiences. This feature extends your content to new surfaces, helping users complete tasks wherever they are and progress interactively through the steps using voice commands.

As shown in the Google Home Hub example below, the Assistant provides a conversational, hands-free experience that can help users complete a task. This is an incredibly lightweight way for web developers to expand their content to the Assistant. For more information about How-to for the Assistant, visit Build a How-to Guide Action with Markup.

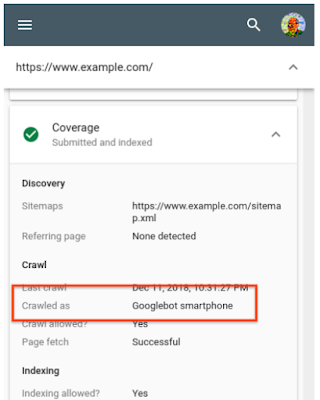

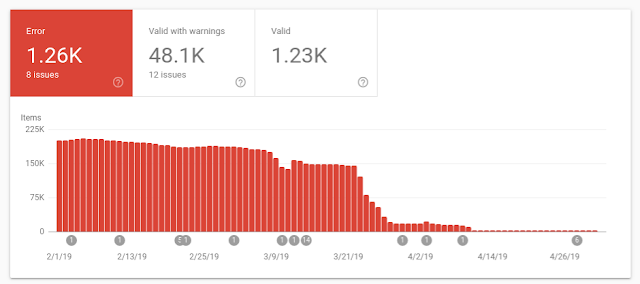

To help you monitor How-to markup issues, we launched a report in Search Console that shows all errors, warnings, and valid items for pages with HowTo structured data. Learn more about how to use the report to monitor your results.
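Before the report picks up changes, you might run a quick local pre-check over the markup you generate. The sketch below is hypothetical and does not reproduce Search Console's validation: it sorts each HowTo item into error, warning, or valid buckets using property lists (`REQUIRED_HOWTO_PROPS`, `RECOMMENDED_HOWTO_PROPS`) that are our assumption, so confirm them against the current How-to developer documentation.

```python
from collections import Counter

# ASSUMPTION: these property lists reflect our reading of the How-to developer
# documentation; check the current docs before relying on them.
REQUIRED_HOWTO_PROPS = ("name", "step")
RECOMMENDED_HOWTO_PROPS = ("totalTime", "tool", "supply", "image")


def precheck_howto(item):
    """Return ("error" | "warning" | "valid", messages) for one HowTo dict.

    Hypothetical local check only; it is not Search Console's validator.
    """
    # Missing or empty required properties are treated as errors.
    errors = [f"missing required property: {p}"
              for p in REQUIRED_HOWTO_PROPS if not item.get(p)]
    if errors:
        return "error", errors
    # Missing recommended properties are treated as warnings.
    warnings = [f"missing recommended property: {p}"
                for p in RECOMMENDED_HOWTO_PROPS if not item.get(p)]
    if warnings:
        return "warning", warnings
    return "valid", []


def summarize(items):
    """Count how many items land in each bucket."""
    return Counter(precheck_howto(item)[0] for item in items)


if __name__ == "__main__":
    pages = [
        {"@type": "HowTo", "name": "Tie a tie"},
        {"@type": "HowTo", "name": "Tile a backsplash",
         "step": [{"@type": "HowToStep", "text": "Measure the wall."}]},
    ]
    print(summarize(pages))
```

Treating a missing required property as an error and a missing recommended one as a warning mirrors the report's three categories in spirit only; the report itself remains the source of truth for how Google reads your pages.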

FAQ on Search and the Google Assistant

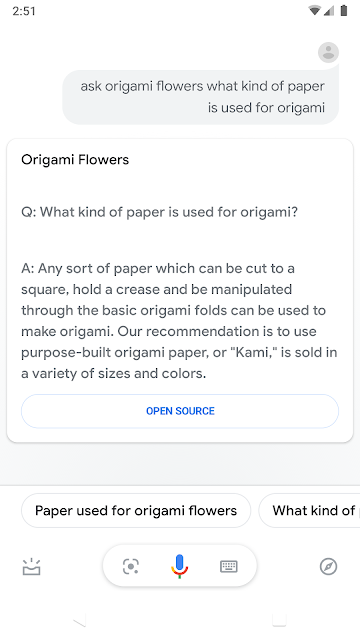

An FAQ page provides a list of frequently asked questions and answers on a particular topic. For example, an FAQ page on an e-commerce website might provide answers on shipping destinations, purchase options, return policies, and refund processes. By using FAQPage structured data, you can make these questions and answers eligible to display directly on Google Search and the Assistant, helping users quickly find answers to frequently asked questions.

FAQ structured data is only for official questions and answers. Don't add FAQ structured data to forums or other pages where users can submit answers to questions; for those pages, use Q&A Page markup instead.

You can learn more about implementation details in the FAQ developer documentation.
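A minimal Python sketch of FAQPage markup, assuming the schema.org FAQPage, Question, and Answer types, with each question listed under `mainEntity` and its answer under `acceptedAnswer`. The function name, the `user_submitted_answers` flag (a hypothetical guard reflecting the rule that pages with user-submitted answers should use Q&A Page markup instead), and the sample store content are illustrative assumptions; the FAQ developer documentation remains the reference.

```python
import json


def build_faqpage_jsonld(qa_pairs, user_submitted_answers=False):
    """Build a schema.org FAQPage dict from (question, answer) pairs.

    user_submitted_answers is a hypothetical guard: FAQ markup is meant for
    official answers, so pages where users post answers should use QAPage.
    """
    if user_submitted_answers:
        raise ValueError("Pages with user-submitted answers should use QAPage markup instead")
    return {
        "@context": "https://schema.org",
        "@type": "FAQPage",
        "mainEntity": [
            {
                "@type": "Question",
                "name": question,
                "acceptedAnswer": {"@type": "Answer", "text": answer},
            }
            for question, answer in qa_pairs
        ],
    }


if __name__ == "__main__":
    # Placeholder content for a hypothetical online store.
    faq = build_faqpage_jsonld([
        ("Which countries do you ship to?", "We ship to the countries listed on our shipping page."),
        ("How do I return an item?", "Start a return from your order history."),
    ])
    print(json.dumps(faq, indent=2))
```

The resulting dict can be serialized into an `application/ld+json` script element in the page, in the same way as the HowTo markup.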

To provide more ways for users to access your content, FAQ answers can also be surfaced on the Google Assistant. Users can reach your FAQ content by asking questions directly and get the answers that you marked up on your FAQ pages. For more information, visit Build an FAQ Action with Markup.

To help you monitor FAQ issues and search appearance, we also launched an FAQ report in Search Console that shows all errors, warnings, and valid items related to your marked-up FAQ pages.

We would love to hear your thoughts on how FAQ or How-to structured data works for you. Send us any feedback either through Twitter or our forum.

Posted by Daniel Waisberg, Damian Biollo, Patrick Nevels, and Yaniv Loewenstein