[Editor’s Note: Today we announced the beta of Kubernetes Network Policy in Google Container Engine, a feature we implemented in close collaboration with our partner Tigera, the company behind Project Calico. Read on for more details from Tigera co-founder and vice president of product, Andy Randall.]

When it comes to network policy, a lot has changed. Back in the day, we protected enterprise data centers with a big expensive network appliance called a firewall that allowed you to define rules about what traffic to allow in and out of the data center. In the cloud world, virtual firewalls provide similar functionality for virtual machines. For example, Google Compute Engine allows you to configure firewall rules on VPC networks.

In a containerized microservices environment such as Google Container Engine, network policy is particularly challenging. Traditional firewalls provide great perimeter security against intrusion from outside the cluster (i.e. “north-south” traffic), but aren’t designed to control “east-west” traffic within the cluster at a finer-grained level. And because Container Engine automates the creation and destruction of containers (each with its own IP address), you not only have many more IP endpoints than you used to, but the automated create-run-destroy life cycle of a container can also result in churn up to 250x that of virtual machines.

Traditional firewall rules are no longer sufficient for containerized environments; we need a more dynamic, automated approach that is integrated with the orchestrator. (For those interested in why we can’t just continue with traditional virtual network / firewall approaches, see Christopher Liljenstolpe’s blog post, Micro-segmentation in the Cloud Native World.)

We think the Kubernetes Network Policy API and the Project Calico implementation present a solution to this challenge. Given Google’s leadership role in the community, and its commitment to running Container Engine on the latest Kubernetes release, it’s only natural that they would be the first to include this capability in their production hosted Kubernetes service, and we at Tigera are delighted to have helped support this effort.

Kubernetes Network Policy 1.0

What exactly does Kubernetes Network Policy let you do? Kubernetes Network Policy allows you to easily specify the connectivity allowed within your cluster, and what should be blocked. (It is a stable API as of Kubernetes v1.7.) You can find the full API definition in the Kubernetes documentation, but the key points are as follows:

- Network policies are defined by the NetworkPolicy resource type. These are applied to the Kubernetes API server like any other resource (e.g., kubectl apply -f my-network-policy.yaml).

- By default, all pods in a namespace allow unrestricted access. That is, they can accept incoming network connections from any source.

- A NetworkPolicy object contains a selector expression (“podSelector”) that selects a set of pods to which the policy applies, and the rules about which incoming connections will be allowed (“ingress” rules). Ingress rules can be quite flexible, including their own namespace selector or pod selector expressions.

- Policies apply to a namespace. Every pod in that namespace selected by the policy’s podSelector will have the ingress rules applied, so any connection attempts that are not explicitly allowed are rejected. Calico enforces this policy extremely efficiently using iptables rules programmed into the underlying host’s kernel.

Here is an example NetworkPolicy resource to give you a sense of how this all fits together:

    apiVersion: networking.k8s.io/v1
    kind: NetworkPolicy
    metadata:
      name: test-network-policy
    spec:
      podSelector:
        matchLabels:
          role: db
      ingress:
      - from:
        - namespaceSelector:
            matchLabels:
              project: myproject
        - podSelector:
            matchLabels:
              role: frontend
        ports:
        - protocol: TCP
          port: 6379

In this case, the policy is called “test-network-policy” and applies to the default namespace. It restricts inbound connections to every pod in the default namespace that has the label “role: db” applied. The selectors are disjunctive, i.e., either can be true. That means that connections can come from any pod in a namespace with label “project: myproject”, OR any pod in the default namespace with the label “role: frontend”. Further, these connections must be on TCP port 6379 (the standard port for Redis).
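
To make the selector logic concrete, here is a minimal Python sketch of how this one policy would be evaluated. It is a toy model for illustration only, not how Kubernetes or Calico implement enforcement. The `Pod` class, the `ingress_allowed` function, the pod names and the `team-a` namespace are all hypothetical names invented for this example.

```python
from dataclasses import dataclass, field


@dataclass
class Pod:
    name: str
    namespace: str
    labels: dict = field(default_factory=dict)


# Hypothetical namespace labels; "team-a" is an invented namespace name.
NAMESPACE_LABELS = {
    "default": {},
    "team-a": {"project": "myproject"},
}

# The example policy, reduced to plain Python data.
POLICY = {
    "namespace": "default",
    "podSelector": {"role": "db"},
    "from": [
        {"namespaceSelector": {"project": "myproject"}},
        {"podSelector": {"role": "frontend"}},
    ],
    "ports": [("TCP", 6379)],
}


def matches(selector, labels):
    """matchLabels semantics: every selector key/value must appear in labels."""
    return all(labels.get(key) == value for key, value in selector.items())


def ingress_allowed(policy, src, dst, protocol, port):
    """Would this single policy let src connect to dst on protocol/port?"""
    selected = (dst.namespace == policy["namespace"]
                and matches(policy["podSelector"], dst.labels))
    if not selected:
        return True  # this policy places no restriction on dst
    if (protocol, port) not in policy["ports"]:
        return False
    for peer in policy["from"]:  # peers are ORed: one match is enough
        if "namespaceSelector" in peer:
            ns_labels = NAMESPACE_LABELS.get(src.namespace, {})
            if matches(peer["namespaceSelector"], ns_labels):
                return True
        elif "podSelector" in peer:
            # A bare podSelector only matches pods in the policy's namespace.
            if (src.namespace == policy["namespace"]
                    and matches(peer["podSelector"], src.labels)):
                return True
    return False


db = Pod("redis-0", "default", {"role": "db"})
frontend = Pod("web-0", "default", {"role": "frontend"})
batch = Pod("job-0", "default", {"role": "batch"})
outsider = Pod("api-0", "team-a", {"role": "api"})

print(ingress_allowed(POLICY, frontend, db, "TCP", 6379))  # True
print(ingress_allowed(POLICY, outsider, db, "TCP", 6379))  # True
print(ingress_allowed(POLICY, batch, db, "TCP", 6379))     # False
print(ingress_allowed(POLICY, frontend, db, "TCP", 5432))  # False
```

The frontend pod is admitted by the pod selector, the `team-a` pod by the namespace selector, and everything else aimed at the database pod is refused, including a frontend connection on the wrong port.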

As you can see, Network Policy is an intent-based policy model, i.e., you specify your desired end-state and let the system ensure it happens. As pods are created and destroyed, or a label (such as “role: db”) is applied or deleted from existing pods, you don’t need to update anything: Calico automatically takes care of things behind the scenes and ensures that every pod on every host has the right access rules applied.

As you can imagine, that’s quite a computational challenge at scale, and Calico’s policy engine contains a lot of smarts to meet Container Engine’s production performance demands. The good news is that you don’t need to worry about that. Just apply your policies and Calico takes care of the rest.
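
The intent-based idea can be illustrated with a few lines of Python. This is only a sketch of the concept under simplified assumptions (pods as a dictionary of labels, a hypothetical `selected_pods` helper, invented pod names); Calico's actual engine is far more involved. The point is that the selector is written once, and the set of pods it covers is recomputed as the cluster changes.

```python
def selected_pods(pod_labels, selector):
    """Return the names of pods whose labels satisfy every selector key/value."""
    return {
        name
        for name, labels in pod_labels.items()
        if all(labels.get(key) == value for key, value in selector.items())
    }


# The policy's selector: written once and never edited below.
selector = {"role": "db"}

# Hypothetical pods currently running in the namespace.
pods = {
    "redis-0": {"role": "db"},
    "web-0": {"role": "frontend"},
}
before = selected_pods(pods, selector)

pods["redis-1"] = {"role": "db"}  # a new pod is created
del pods["redis-0"]["role"]       # the label is removed from an existing pod
after = selected_pods(pods, selector)

print(before)  # {'redis-0'}
print(after)   # {'redis-1'}
```

The policy text never changed, yet the set of protected pods moved from `redis-0` to `redis-1` as labels and pods changed.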

Enabling Network Policy in Container Engine

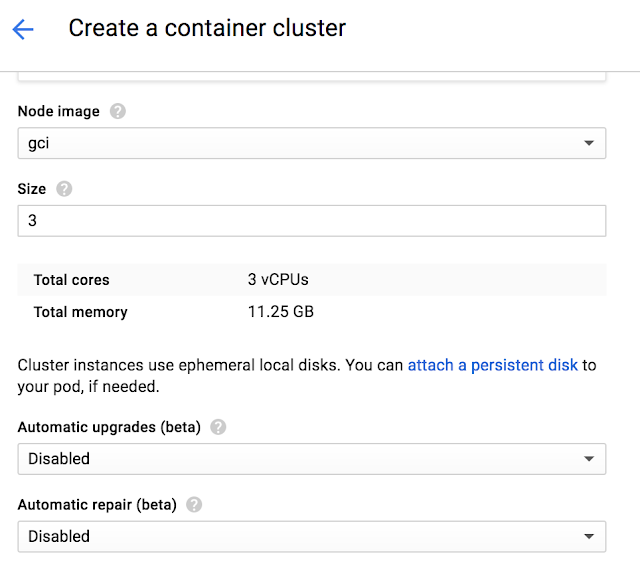

For new and existing clusters running at least Kubernetes v1.7.6, you can enable network policy on Container Engine via the UI, CLI or API. For new clusters, simply set the flag (or check the box in the UI) when creating the cluster. For existing clusters there is a two-step process:

- Enable the network policy add-on on the master.

- Enable network policy for the entire cluster’s node-pools.

Here’s how to do that during cluster creation:

    # Create a cluster with network policy enabled
    gcloud beta container clusters create <CLUSTER> --project=<PROJECT_ID> \
        --zone=<ZONE> --enable-network-policy --cluster-version=1.7.6

Here’s how to do it for existing clusters:

    # Enable the add-on on the master
    gcloud beta container clusters update <CLUSTER> --project=<PROJECT_ID> \
        --zone=<ZONE> --update-addons=NetworkPolicy=ENABLED

    # Enable on nodes (this re-creates the node pools)
    gcloud beta container clusters update <CLUSTER> --project=<PROJECT_ID> \
        --zone=<ZONE> --enable-network-policy

Looking ahead

Environments running Kubernetes 1.7 can use the NetworkPolicy API capabilities that we discussed above, essentially ingress rules defined by selector expressions. However, you can imagine wanting to do more, such as:

- Applying egress rules (restricting which outbound connections a pod can make)

- Referring to IP addresses or ranges within rules

The good news is that the new Kubernetes 1.8 includes these capabilities, and Google and Tigera are working together to make them available in Container Engine. And, beyond that, we are working on even more advanced policy capabilities. Watch this space!