Today, we’re excited to announce that Cloud IoT Core, our fully managed service to help securely connect and manage IoT devices at scale, is now generally available.

With Cloud IoT Core, you can easily connect and centrally manage millions of globally dispersed connected devices. When you use it as part of the broader Google Cloud IoT solution, you can ingest all your IoT data and connect to our state-of-the-art analytics and machine learning services to gain actionable insights.

Already, Google Cloud Platform (GCP) customers are using connected devices and Cloud IoT Core as the foundation of their IoT solutions. Whether it’s smart cities, the sharing economy or next-generation seismic research, we’re thrilled that Cloud IoT Core is helping innovative companies build the future.

Customers share feedback

Schlumberger is the world's leading provider of technology for reservoir characterization, drilling, production, and processing to the oil and gas industry.

"As part of our IoT integration strategy, Google Cloud IoT Core has helped us focus our engineering efforts on building oil and gas applications by leveraging existing IoT services to enable fast, reliable and economical deployment. We have been able to build quick prototypes by connecting a large number of devices over MQTT and perform real-time monitoring using Cloud Dataflow and BigQuery."

— Chetan Desai, VP Digital Technology, Schlumberger Limited

Smart Parking is a New Zealand-based company that has used Cloud IoT Core from its earliest days to build out a smart city platform, helping direct traffic, parking and city services.

"Using Google Cloud IoT Core, we have been able to completely redefine how we manage the deployment, activation and administration of sensors and devices. Previously, we needed to individually set up each sensor/device. Now we allocate manufactured batches of devices into IoT Core for site deployments and then, using a simple activation smartphone app, the onsite installation technician can activate the sensor or device in moments. Job done!"

— John Heard, Group CTO, Smart Parking Limited

Bike-sharing pioneer Blaze uses Cloud IoT Core to manage its Blaze Future Data Platform, which uses a combination of GPS, accelerometers and atmospheric sensing for its smart bikes. Its capabilities include air pollution sensing, pothole detection, recording accidents and near misses, and capturing insights around journeys.

"Blaze is able to rapidly build the technology platform our customers and cyclists require on Google Cloud by more securely connecting our products and fleets of bikes to Cloud IoT Core and then run demand forecasting using BigQuery and Machine Learning."

— Philip Ellis, Co-Founder & COO, Blaze

Grupo ADO is the largest bus operator in Latin America. It operates inter-city routes as well as short routes and tourist charters.

"Agosto, a Google Cloud Premier partner, performed business and technical reviews of MOBILITY ADO’s existing architecture, applications and core data workflows which had been in place for about 12 years. These systems were originally very robust, but over time, we faced challenges with innovating on the existing technology stack, as well as with the optimization of operational costs. Agosto created a proof-of-concept which showcased that a Cloud IoT Core-based architecture was a viable path to modernization and functional optimization of many of our existing, core components. MOBILITY ADO now has real time access to bus diagnostic data via Google Cloud data and analytics services and a clear path to future-proof our platform."

— Humberto Campos, IT Director, MOBILITY ADO

Enabling the Cloud IoT Core partner ecosystem

At the same time, we continue to grow our ecosystem of partners, providing companies with the insight and staff to build custom IoT solutions that best fit their needs. On the device side, we have a variety of partners whose hardware works seamlessly with IoT Core. Application partners, meanwhile, help customers build solutions using IoT Core and other Google Cloud services.

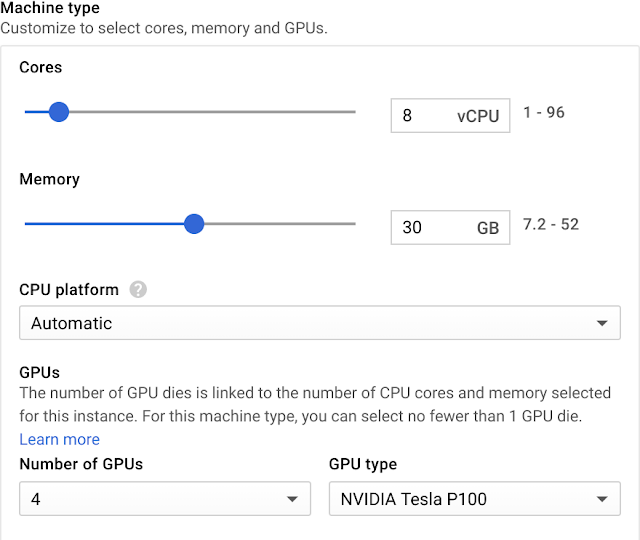

Improving the Cloud IoT Core experience

Since we announced the public beta of Cloud IoT Core last fall, we’ve been actively listening to your feedback. This general availability release incorporates an important new feature: You can now publish data streams from the IoT Core protocol bridge to multiple Cloud Pub/Sub topics, simplifying deployments.

For example, imagine you have a device that publishes multiple types of data, such as temperature, humidity and logging data. By directing these data streams to their own individual Pub/Sub topics, you can eliminate the need to separate the data into different categories after publishing.
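To make the idea concrete, here is a minimal Python sketch of the routing concept only. It does not call any Cloud IoT Core or Cloud Pub/Sub API; the topic names, the `topic_for` and `route` helpers, and the fallback topic are all hypothetical and chosen purely for illustration.

```python
from collections import defaultdict
from typing import Dict, List, Tuple

# Hypothetical topic names, one per data type (illustration only).
TOPIC_BY_TYPE: Dict[str, str] = {
    "temperature": "device-temperature",
    "humidity": "device-humidity",
    "logs": "device-logs",
}

# Assumed fallback topic for data types without a dedicated topic.
DEFAULT_TOPIC = "device-telemetry"


def topic_for(data_type: str) -> str:
    """Return the topic a given data type would be directed to."""
    return TOPIC_BY_TYPE.get(data_type, DEFAULT_TOPIC)


def route(messages: List[Tuple[str, bytes]]) -> Dict[str, List[bytes]]:
    """Group (data_type, payload) pairs by destination topic."""
    routed: Dict[str, List[bytes]] = defaultdict(list)
    for data_type, payload in messages:
        routed[topic_for(data_type)].append(payload)
    return dict(routed)


if __name__ == "__main__":
    sample = [
        ("temperature", b"21.5"),
        ("humidity", b"40"),
        ("logs", b"boot ok"),
    ]
    for topic, payloads in route(sample).items():
        print(topic, payloads)
```

Because each data type arrives on its own topic, a downstream subscriber can listen only to the stream it cares about instead of filtering one mixed stream after the fact.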

And that’s just the beginning. Watch this space as we build out Cloud IoT Core with additional features and enhancements. We look forward to helping you scale your production IoT deployments. To get started, check out this quick-start tutorial on Cloud IoT Core, and send us your feedback. We’d love to hear from you!