Category Archives: Online Security Blog

Announcing our open source security key test suite

Source: Google Online Security Blog

Privacy-preserving features in the Mobile Driving License

In the United States and other countries a Driver's License is not only used to convey driving privileges, it is also commonly used to prove identity or personal details.

Presenting a Driving License is simple, right? You hand over the card to the individual wishing to confirm your identity (the so-called “Relying Party” or “Verifier”); they check the security features of the plastic card (hologram, micro-printing, etc.) to ensure it’s not counterfeit; they check that it’s really your license, making sure you look like the portrait image printed on the card; and they read the data they’re interested in, typically your age, legal name, address etc. Finally, the verifier needs to hand back the plastic card.

Most people are so familiar with this process that they don’t think twice about it, or consider the privacy implications. In the following we’ll discuss how the new and soon-to-be-released ISO 18013-5 standard will improve on nearly every aspect of the process, and what it has to do with Android.

Mobile Driving License ISO Standard

The ISO 18013-5 “Mobile driving licence (mDL) application” standard has been written by a diverse group of people representing driving license issuers (e.g. state governments in the US), relying parties (federal and state governments, including law enforcement), academia, industry (including Google), and many others. This ISO standard allows for construction of Mobile Driving License (mDL) applications which users can carry in their phone and can use instead of the plastic card.

Instead of handing over your plastic card, you open the mDL application on your phone and press a button to share your mDL. The Verifier (aka “Relying Party”) has their own device with an mDL reader application and they either scan a QR code shown in your mDL app or do an NFC tap. The QR code (or NFC tap) conveys an ephemeral cryptographic public key and hardware address the mDL reader can connect to.

Once the mDL reader obtains the cryptographic key it creates its own ephemeral keypair and establishes an encrypted and authenticated, secure wireless channel (BLE, Wifi Aware or NFC)). The mDL reader uses this secure channel to request data, such as the portrait image or what kinds of vehicles you're allowed to drive, and can also be used to ask more abstract questions such as “is the holder older than 18?”

Crucially, the mDL application can ask the user to approve which data to release and may require the user to authenticate with fingerprint or face — none of which a passive plastic card could ever do.

With this explanation in mind, let’s see how presenting an mDL application compares with presenting a plastic-card driving license:

- Your phone need not be handed to the verifier, unlike your plastic card. The first step, which requires closer contact to the Verifier to scan the QR code or tap the NFC reader, is safe from a data privacy point of view, and does not reveal any identifying information to the verifier. For additional protection, mDL apps will have the option of both requiring user authentication before releasing data and then immediately placing the phone in lockdown mode, to ensure that if the verifier takes the device they cannot easily get information from it.

- All data is cryptographically signed by the Issuing Authority (for example the DMV who issued the mDL) and the verifier's app automatically validates the authenticity of the data transmitted by the mDL and refuses to display inauthentic data. This is far more secure than holograms and microprinting used in plastic cards where verification requires special training which most (human) verifiers don't receive. With most plastic cards, fake IDs are relatively easy to create, especially in an international context, putting everyone’s identity at risk.

- The amount of data presented by the mDL is minimized — only data the user elects to release, either explicitly via prompts or implicitly via e.g. pre-approval and user settings, is released. This minimizes potential data abuse and increases the personal safety of users.

For example, any bartender who checks your mDL for the sole purpose of verifying you’re old enough to buy a drink needs only a single piece of information which is whether the holder is e.g. older than 21, yes or no. Compared to the plastic card, this is a huge improvement; a plastic card shows all your data even if the verifier doesn’t need it.

Additionally, all of this information is available via a 2D barcode on the back so if you use your plastic card driving license to buy beer, tobacco, or other restricted items at a store it’s common in some states for the cashier to scan your license. In some cases, this means you may get advertising in the mail but they may sell your identifying information to the highest bidder or, worst case, leak their whole database.

- The amount of data presented by the mDL is minimized — only data the user elects to release, either explicitly via prompts or implicitly via e.g. pre-approval and user settings, is released. This minimizes potential data abuse and increases the personal safety of users.

These are some of the reasons why we think mDL is a big win for end users in terms of privacy.

One commonality between plastic-card driving licences and the mDL is how the relying party verifies that the person presenting the license is the authorized holder. In both cases, the verifier manually compares the appearance of the individual against a portrait photo, either printed on the plastic or transmitted electronically and research has shown that it’s hard for individuals to match strangers to portrait images.

The initial version of ISO 18013-5 won’t improve on this but the ISO committee working on the standard is already investigating ways to utilize on-device biometrics sensors to perform this match in a secure and privacy-protecting way. The hope is that improved fidelity in the process helps reduce unauthorized use of identity documents.

mDL support in Android

Through facilities such as hardware-based Keystore, Android already offers excellent support for security and privacy-sensitive applications and in fact it’s already possible to implement the ISO 18013-5 standard on Android without further platform changes. Many organizations participating in the ISO committee have already implemented 18013-5 Android apps.

That said, with purpose-built support in the operating system it is possible to provide better security and privacy properties. Android 11 includes the Identity Credential APIs at the Framework level along with a Hardware Abstraction Layer interface which can be implemented by Android OEMs to enable identity credential support in Secure Hardware. Using the Identity Credential API, the Trusted Computing Base of mDL applications does not include the application or even Android itself. This will be particularly important for future versions where the verifier must trust the device to identify and authenticate the user, for example through fingerprint or face matching on the holder's own device. It’s likely such a solution will require certified hardware and/or software and certification is not practical if the TCB includes the hundreds of millions of lines of code in Android and the Linux kernel.

One advantage of plastic cards is that they don't require power or network communication to be useful. Putting all your licenses on your phone could seem inconvenient in cases where your device is low on battery, or does not have enough battery life to start. The Android Identity Credential HAL therefore provides support for a mode called Direct Access, where the license is still available through an NFC tap even when the phone's battery is too low to boot it up. Device makers can implement this mode, but it will require hardware support that will take several years to roll out.

For devices without the Identity Credential HAL, we have an Android Jetpack which implements the same API and works on nearly every Android device in the world (API level 24 or later). If the device has hardware-backed Identity Credential support then this Jetpack simply forwards calls to the platform API. Otherwise, an Android Keystore-backed implementation will be used. While the Android Keystore-backed implementation does not provide the same level of security and privacy, it is perfectly adequate for both holders and issuers in cases where all data is issuer-signed. Because of this, the Jetpack is the preferred way to use the Identity Credential APIs. We also made available sample open-source mDL and mDL Reader applications using the Identity Credential APIs.

Conclusion

Android now includes APIs for managing and presenting with identity documents in a more secure and privacy-focused way than was previously possible. These can be used to implement ISO 18013-5 mDLs but the APIs are generic enough to be usable for other kinds of electronic documents, from school ID or bonus program club cards to passports.

Additionally, the Android Security and Privacy team actively participates in the ISO committees where these standards are written and also works with civil liberties groups to ensure it has a positive impact on our end users.

Source: Google Online Security Blog

Fuzzing internships for Open Source Software

Open source software is the foundation of many modern software products. Over the years, developers increasingly have relied on reusable open source components for their applications. It is paramount that these open source components are secure and reliable, as weaknesses impact those that build upon it.

Google cares deeply about the security of the open source ecosystem and recently launched the Open Source Security Foundation with other industry partners. Fuzzing is an automated testing technique to find bugs by feeding unexpected inputs to a target program. At Google, we leverage fuzzing at scale to find tens of thousands of security vulnerabilities and stability bugs. This summer, as part of Google’s OSS internship initiative, we hosted 50 interns to improve the state of fuzz testing in the open source ecosystem.

The fuzzing interns worked towards integrating new projects and improving existing ones in OSS-Fuzz, our continuous fuzzing service for the open source community (which has 350+ projects, 22,700 bugs, 89% fixed). Several widely used open source libraries including but not limited to nginx, postgresql, usrsctp, and openexr, now have continuous fuzzing coverage as a result of these efforts.

Another group of interns focused on improving the security of the Linux kernel. syzkaller, a kernel fuzzing tool from Google, has been instrumental in finding kernel vulnerabilities in various operating systems. The interns were tasked with improving the fuzzing coverage by adding new descriptions to syzkaller like ip tunnels, io_uring, and bpf_lsm for example, refining the interface description language, and advancing kernel fault injection capabilities.

Some interns chose to write fuzzers for Android and Chrome, which are open source projects that billions of internet users rely on. For Android, the interns contributed several new fuzzers for uncovered areas - network protocols such as pppd and dns, audio codecs like monoblend, g722, and android framework. On the Chrome side, interns improved existing blackbox fuzzers, particularly in the areas: DOM, IPC, media, extensions, and added new libprotobuf-based fuzzers for Mojo.

Our last set of interns researched quite a few under-explored areas of fuzzing, some of which were fuzzer benchmarking, ML based fuzzing, differential fuzzing, bazel rules for build simplification and made useful contributions.

Over the course of the internship, our interns have reported over 150 security vulnerabilities and 750 functional bugs. Given the overall success of these efforts, we plan to continue hosting fuzzing internships every year to help secure the open source ecosystem and teach incoming open source contributors about the importance of fuzzing. For more information on the Google internship program and other student opportunities, check out careers.google.com/students. We encourage you to apply.

Source: Google Online Security Blog

Privacy-Preserving Smart Input with Gboard

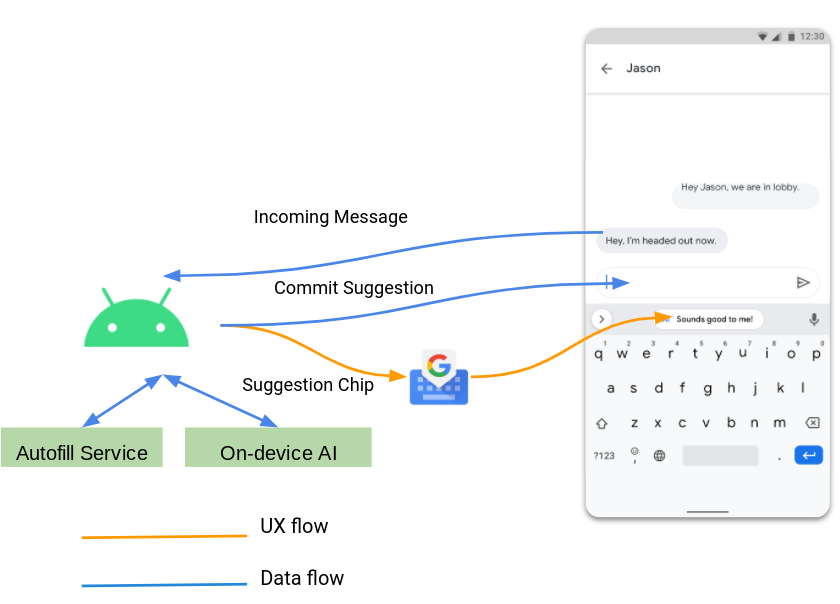

Google Keyboard (a.k.a Gboard) has a critical mission to provide frictionless input on Android to empower users to communicate accurately and express themselves effortlessly. In order to accomplish this mission, Gboard must also protect users' private and sensitive data. Nothing users type is sent to Google servers. We recently launched privacy-preserving input by further advancing the latest federated technologies. In Android 11, Gboard also launched the contextual input suggestion experience by integrating on-device smarts into the user's daily communication in a privacy-preserving way.

Before Android 11, input suggestions were surfaced to users in several different places. In Android 11, Gboard launched a consistent and coordinated approach to access contextual input suggestions. For the first time, we've brought Smart Replies to the keyboard suggestions - powered by system intelligence running entirely on device. The smart input suggestions are rendered with a transparent layer on top of Gboard’s suggestion strip. This structure maintains the trust boundaries between the Android platform and Gboard, meaning sensitive personal content cannot be not accessed by Gboard. The suggestions are only sent to the app after the user taps to accept them.

For instance, when a user receives the message “Have a virtual coffee at 5pm?” in Whatsapp, on-device system intelligence predicts smart text and emoji replies “Sounds great!” and “?”. Android system intelligence can see the incoming message but Gboard cannot. In Android 11, these Smart Replies are rendered by the Android platform on Gboard’s suggestion strip as a transparent layer. The suggested reply is generated by the system intelligence. When the user taps the suggestion, Android platform sends it to the input field directly. If the user doesn't tap the suggestion, gBoard and the app cannot see it. In this way, Android and Gboard surface the best of Google smarts whilst keeping users' data private: none of their data goes to any app, including the keyboard, unless they've tapped a suggestion.

Additionally, federated learning has enabled Gboard to train intelligent input models across many devices while keeping everything individual users type on their device. Today, the emoji is as common as punctuation - and have become the way for our users to express themselves in messaging. Our users want a way to have fresh and diversified emojis to better express their thoughts in messaging apps. Recently, we launched new on-device transformer models that are fine-tuned with federated learning in Gboard, to produce more contextual emoji predictions for English, Spanish and Portuguese.

Furthermore, following the success of privacy-preserving machine learning techniques, Gboard continues to leverage federated analytics to understand how Gboard is used from decentralized data. What we've learned from privacy-preserving analysis has let us make better decisions in our product.

When a user shares an emoji in a conversation, their phone keeps an ongoing count of which emojis are used. Later, when the phone is idle, plugged in, and connected to WiFi, Google’s federated analytics server invites the device to join a “round” of federated analytics data computation with hundreds of other participating phones. Every device involved in one round will compute the emoji share frequency, encrypt the result and send it a federated analytics server. Although the server can’t decrypt the data individually, the final tally of total emoji counts can be decrypted when combining encrypted data across devices. The aggregated data shows that the most popular emoji is ? in Whatsapp, ? in Roblox(gaming), and ✔ in Google Docs. Emoji ? moved up from 119th to 42nd in terms of frequency during COVID-19.

Gboard always has a strong commitment to Google’s Privacy Principles. Gboard strives to build privacy-preserving effortless input products for users to freely express their thoughts in 900+ languages while safeguarding user data. We will keep pushing the state of the art in smart input technologies on Android while safeguarding user data. Stay tuned!

Source: Google Online Security Blog

New Password Protections (and more!) in Chrome

To check whether you have any compromised passwords, Chrome sends a copy of your usernames and passwords to Google using a special form of encryption. This lets Google check them against lists of credentials known to be compromised, but Google cannot derive your username or password from this encrypted copy.

We notify you when you have compromised passwords on websites, but it can be time-consuming to go find the relevant form to change your password. To help, we’re adding support for ".well-known/change-password" URLs that let Chrome take users directly to the right “change password” form after they’ve been alerted that their password has been compromised.

Along with these improvements, Chrome is also bringing Safety Check to mobile. In our next release, we will launch Safety Check on iOS and Android, which includes checking for compromised passwords, telling you if Safe Browsing is enabled, and whether the version of Chrome you are running is updated with the latest security protections. You will also be able to use Chrome on iOS to autofill saved login details into other apps or browsers.

In Chrome 86 we’ll also be launching a number of additional features to improve user security, including:

Enhanced Safe Browsing for Android

Earlier this year, we launched Enhanced Safe Browsing for desktop, which gives Chrome users the option of more advanced security protections.

When you turn on Enhanced Safe Browsing, Chrome can proactively protect you against phishing, malware, and other dangerous sites by sharing real-time data with Google’s Safe Browsing service. Among our users who have enabled checking websites and downloads in real time, our predictive phishing protections see a roughly 20% drop in users typing their passwords into phishing sites.

Improvements to password filling on iOS

We recently launched Touch-to-fill for passwords on Android to prevent phishing attacks. To improve security on iOS too, we’re introducing a biometric authentication step before autofilling passwords. On iOS, you’ll now be able to authenticate using Face ID, Touch ID, or your phone passcode. Additionally, Chrome Password Manager allows you to autofill saved passwords into iOS apps or browsers if you enable Chrome autofill in Settings.

Secure HTTPS pages may sometimes still have non-secure features. Earlier this year, Chrome began securing and blocking what’s known as “mixed content”, when secure pages incorporate insecure content. But there are still other ways that HTTPS pages can create security risks for users, such as offering downloads over non-secure links, or using forms that don’t submit data securely.

To better protect users from these threats, Chrome 86 is introducing mixed form warnings on desktop and Android to alert and warn users before submitting a non-secure form that’s embedded in an HTTPS page.

Additionally, Chrome 86 will block or warn on some insecure downloads initiated by secure pages. Currently, this change affects commonly abused file types, but eventually secure pages will only be able to initiate secure downloads of any type. For more details, see Chrome’s plan to gradually block mixed downloads altogether

We encourage developers to update their forms and downloads to use secure connections for the safety and privacy of their users.

Source: Google Online Security Blog

Announcing the launch of the Android Partner Vulnerability Initiative

Google’s Android Security & Privacy team has launched the Android Partner Vulnerability Initiative (APVI) to manage security issues specific to Android OEMs. The APVI is designed to drive remediation and provide transparency to users about issues we have discovered at Google that affect device models shipped by Android partners.

Another layer of security

Android incorporates industry-leading security features and every day we work with developers and device implementers to keep the Android platform and ecosystem safe. As part of that effort, we have a range of existing programs to enable security researchers to report security issues they have found. For example, you can report vulnerabilities in Android code via the Android Security Rewards Program (ASR), and vulnerabilities in popular third-party Android apps through the Google Play Security Rewards Program. Google releases ASR reports in Android Open Source Project (AOSP) based code through the Android Security Bulletins (ASB). These reports are issues that could impact all Android based devices. All Android partners must adopt ASB changes in order to declare the current month’s Android security patch level (SPL). But until recently, we didn’t have a clear way to process Google-discovered security issues outside of AOSP code that are unique to a much smaller set of specific Android OEMs. The APVI aims to close this gap, adding another layer of security for this targeted set of Android OEMs.

Improving Android OEM device security

The APVI covers Google-discovered issues that could potentially affect the security posture of an Android device or its user and is aligned to ISO/IEC 29147:2018 Information technology -- Security techniques -- Vulnerability disclosure recommendations. The initiative covers a wide range of issues impacting device code that is not serviced or maintained by Google (these are handled by the Android Security Bulletins).

Protecting Android users

The APVI has already processed a number of security issues, improving user protection against permissions bypasses, execution of code in the kernel, credential leaks and generation of unencrypted backups. Below are a few examples of what we’ve found, the impact and OEM remediation efforts.

Permission Bypass

Permission Bypass In some versions of a third-party pre-installed over-the-air (OTA) update solution, a custom system service in the Android framework exposed privileged APIs directly to the OTA app. The service ran as the system user and did not require any permissions to access, instead checking for knowledge of a hardcoded password. The operations available varied across versions, but always allowed access to sensitive APIs, such as silently installing/uninstalling APKs, enabling/disabling apps and granting app permissions. This service appeared in the code base for many device builds across many OEMs, however it wasn’t always registered or exposed to apps. We’ve worked with impacted OEMs to make them aware of this security issue and provided guidance on how to remove or disable the affected code.

Credential Leak

Credential Leak A popular web browser pre-installed on many devices included a built-in password manager for sites visited by the user. The interface for this feature was exposed to WebView through JavaScript loaded in the context of each web page. A malicious site could have accessed the full contents of the user’s credential store. The credentials are encrypted at rest, but used a weak algorithm (DES) and a known, hardcoded key. This issue was reported to the developer and updates for the app were issued to users.

Overly-Privileged Apps

Overly-Privileged Apps The checkUidPermission method in the PackageManagerService class was modified in the framework code for some devices to allow special permissions access to some apps. In one version, the method granted apps with the shared user ID com.google.uid.shared any permission they requested and apps signed with the same key as the com.google.android.gsf package any permission in their manifest. Another version of the modification allowed apps matching a list of package names and signatures to pass runtime permission checks even if the permission was not in their manifest. These issues have been fixed by the OEMs.

More information

Keep an eye out at https://bugs.chromium.org/p/apvi/ for future disclosures of Google-discovered security issues under this program, or find more information there on issues that have already been disclosed.

Acknowledgements: Scott Roberts, Shailesh Saini and Łukasz Siewierski, Android Security and Privacy Team

Source: Google Online Security Blog

Lockscreen and Authentication Improvements in Android 11

[Cross-posted from the Android Developers Blog]

As phones become faster and smarter, they play increasingly important roles in our lives, functioning as our extended memory, our connection to the world at large, and often the primary interface for communication with friends, family, and wider communities. It is only natural that as part of this evolution, we’ve come to entrust our phones with our most private information, and in many ways treat them as extensions of our digital and physical identities.

This trust is paramount to the Android Security team. The team focuses on ensuring that Android devices respect the privacy and sensitivity of user data. A fundamental aspect of this work centers around the lockscreen, which acts as the proverbial front door to our devices. After all, the lockscreen ensures that only the intended user(s) of a device can access their private data.

This blog post outlines recent improvements around how users interact with the lockscreen on Android devices and more generally with authentication. In particular, we focus on two categories of authentication that present both immense potential as well as potentially immense risk if not designed well: biometrics and environmental modalities.

The tiered authentication model

Before getting into the details of lockscreen and authentication improvements, we first want to establish some context to help relate these improvements to each other. A good way to envision these changes is to fit them into the framework of the tiered authentication model, a conceptual classification of all the different authentication modalities on Android, how they relate to each other, and how they are constrained based on this classification.

The model itself is fairly simple, classifying authentication modalities into three buckets of decreasing levels of security and commensurately increasing constraints. The primary tier is the least constrained in the sense that users only need to re-enter a primary modality under certain situations (for example, after each boot or every 72 hours) in order to use its capability. The secondary and tertiary tiers are more constrained because they cannot be set up and used without having a primary modality enrolled first and they have more constraints further restricting their capabilities.

- Primary Tier - Knowledge Factor: The first tier consists of modalities that rely on knowledge factors, or something the user knows, for example, a PIN, pattern, or password. Good high-entropy knowledge factors, such as complex passwords that are hard to guess, offer the highest potential guarantee of identity.

Knowledge factors are especially useful on Android becauses devices offer hardware backed brute-force protection with exponential-backoff, meaning Android devices prevent attackers from repeatedly guessing a PIN, pattern, or password by having hardware backed timeouts after every 5 incorrect attempts. Knowledge factors also confer additional benefits to all users that use them, such as File Based Encryption (FBE) and encrypted device backup.

- Secondary Tier - Biometrics: The second tier consists primarily of biometrics, or something the user is. Face or fingerprint based authentications are examples of secondary authentication modalities. Biometrics offer a more convenient but potentially less secure way of confirming your identity with a device.

We will delve into Android biometrics in the next section.

- The Tertiary Tier - Environmental: The last tier includes modalities that rely on something the user has. This could either be a physical token, such as with Smart Lock’s Trusted Devices where a phone can be unlocked when paired with a safelisted bluetooth device. Or it could be something inherent to the physical environment around the device, such as with Smart Lock’s Trusted Places where a phone can be unlocked when it is taken to a safelisted location.

Improvements to tertiary authentication

While both Trusted Places and Trusted Devices (and tertiary modalities in general) offer convenient ways to get access to the contents of your device, the fundamental issue they share is that they are ultimately a poor proxy for user identity. For example, an attacker could unlock a misplaced phone that uses Trusted Place simply by driving it past the user's home, or with moderate amount of effort, spoofing a GPS signal using off-the-shelf Software Defined Radios and some mild scripting. Similarly with Trusted Device, access to a safelisted bluetooth device also gives access to all data on the user’s phone.

Because of this, a major improvement has been made to the environmental tier in Android 10. The Tertiary tier was switched from an active unlock mechanism into an extending unlock mechanism instead. In this new mode, a tertiary tier modality can no longer unlock a locked device. Instead, if the device is first unlocked using either a primary or secondary modality, it can continue to keep it in the unlocked state for a maximum of four hours.

A closer look at Android biometrics

Biometric implementations come with a wide variety of security characteristics, so we rely on the following two key factors to determine the security of a particular implementation:

- Architectural security: The resilience of a biometric pipeline against kernel or platform compromise. A pipeline is considered secure if kernel and platform compromises don’t grant the ability to either read raw biometric data, or inject synthetic data into the pipeline to influence an authentication decision.

- Spoofability: Is measured using the Spoof Acceptance Rate (SAR). SAR is a metric first introduced in Android P, and is intended to measure how resilient a biometric is against a dedicated attacker. Read more about SAR and its measurement in Measuring Biometric Unlock Security.

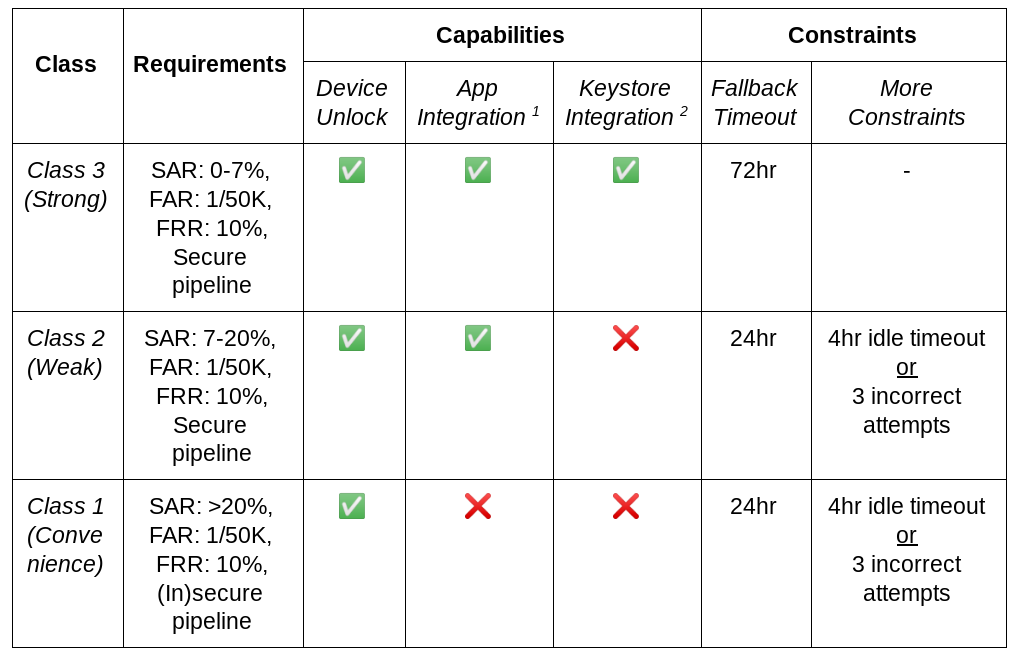

We use these two factors to classify biometrics into one of three different classes in decreasing order of security:

- Class 3 (formerly Strong)

- Class 2 (formerly Weak)

- Class 1 (formerly Convenience)

Each class comes with an associated set of constraints that aim to balance their ease of use with the level of security they offer.

These constraints reflect the length of time before a biometric falls back to primary authentication, and the allowed application integration. For example, a Class 3 biometric enjoys the longest timeouts and offers all integration options for apps, while a Class 1 biometric has the shortest timeouts and no options for app integration. You can see a summary of the details in the table below, or the full details in the Android Android Compatibility Definition Document (CDD).

1 App integration means exposing an API to apps (e.g., via integration with BiometricPrompt/BiometricManager, androidx.biometric, or FIDO2 APIs)

2 Keystore integration means integrating Keystore, e.g., to release app auth-bound keys

Benefits and caveats

Biometrics provide convenience to users while maintaining a high level of security. Because users need to set up a primary authentication modality in order to use biometrics, it helps boost the lockscreen adoption (we see an average of 20% higher lockscreen adoption on devices that offer biometrics versus those that do not). This allows more users to benefit from the security features that the lockscreen provides: gates unauthorized access to sensitive user data and also confers other advantages of a primary authentication modality to these users, such as encrypted backups. Finally, biometrics also help reduce shoulder surfing attacks in which an attacker tries to reproduce a PIN, pattern, or password after observing a user entering the credential.

However, it is important that users understand the trade-offs involved with the use of biometrics. Primary among these is that no biometric system is foolproof. This is true not just on Android, but across all operating systems, form-factors, and technologies. For example, a face biometric implementation might be fooled by family members who resemble the user or a 3D mask of the user. A fingerprint biometric implementation could potentially be bypassed by a spoof made from latent fingerprints of the user. Although anti-spoofing or Presentation Attack Detection (PAD) technologies have been actively developed to mitigate such spoofing attacks, they are mitigations, not preventions.

One effort that Android has made to mitigate the potential risk of using biometrics is the lockdown mode introduced in Android P. Android users can use this feature to temporarily disable biometrics, together with Smart Lock (for example, Trusted Places and Trusted Devices) as well as notifications on the lock screen, when they feel the need to do so.

To use the lockdown mode, users first need to set up a primary authentication modality and then enable it in settings. The exact setting where the lockdown mode can be enabled varies by device models, and on a Google Pixel 4 device it is under Settings > Display > Lock screen > Show lockdown option. Once enabled, users can trigger the lockdown mode by holding the power button and then clicking the Lockdown icon on the power menu. A device in lockdown mode will return to the non-lockdown state after a primary authentication modality (such as a PIN, pattern, or password) is used to unlock the device.

BiometricPrompt - New APIs

In order for developers to benefit from the security guarantee provided by Android biometrics and to easily integrate biometric authentication into their apps to better protect sensitive user data, we introduced the BiometricPrompt APIs in Android P.

There are several benefits of using the BiometricPrompt APIs. Most importantly, these APIs allow app developers to target biometrics in a modality-agnostic way across different Android devices (that is, BiometricPrompt can be used as a single integration point for various biometric modalities supported on devices), while controlling the security guarantees that the authentication needs to provide (such as requiring Class 3 or Class 2 biometrics, with device credential as a fallback). In this way, it helps protect app data with a second layer of defenses (in addition to the lockscreen) and in turn respects the sensitivity of user data. Furthermore, BiometricPrompt provides a persistent UI with customization options for certain information (for example, title and description), offering a consistent user experience across biometric modalities and across Android devices.

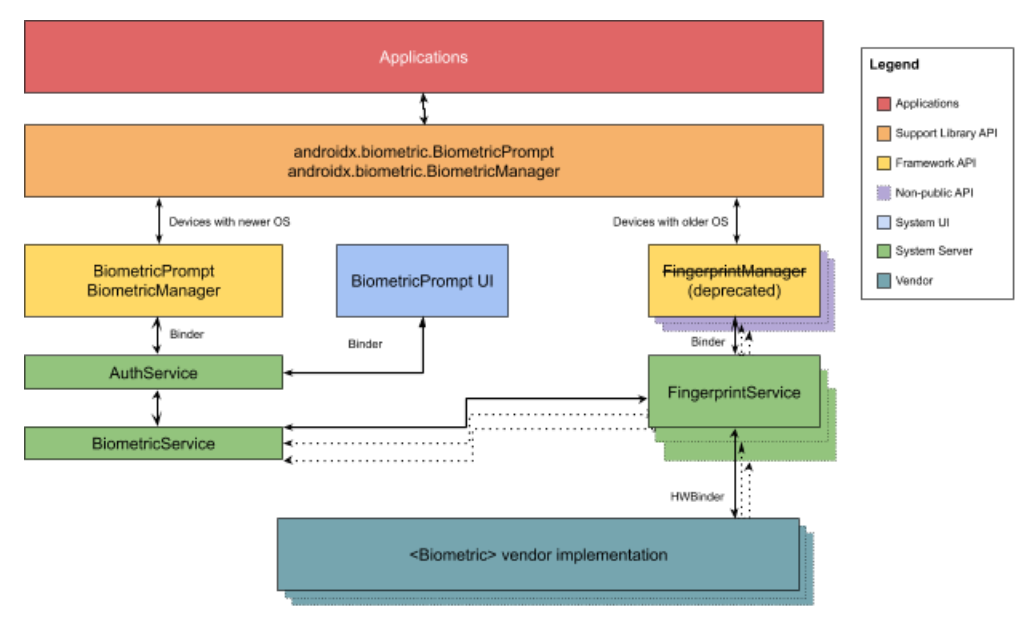

As shown in the following architecture diagram, apps can integrate with biometrics on Android devices through either the framework API or the support library (that is, androidx.biometric for backward compatibility). One thing to note is that FingerprintManager is deprecated because developers are encouraged to migrate to BiometricPrompt for modality-agnostic authentications.

Improvements to BiometricPrompt

Android 10 introduced the BiometricManager class that developers can use to query the availability of biometric authentication and included fingerprint and face authentication integration for BiometricPrompt.

In Android 11, we introduce new features such as the BiometricManager.Authenticators interface which allows developers to specify the authentication types accepted by their apps, as well as additional support for auth-per-use keys within the BiometricPrompt class.

More details can be found in the Android 11 preview and Android Biometrics documentation. Read more about BiometricPrompt API usage in our blog post Using BiometricPrompt with CryptoObject: How and Why and our codelab Login with Biometrics on Android.

Source: Google Online Security Blog

Lockscreen and Authentication Improvements in Android 11

[Cross-posted from the Android Developers Blog]

As phones become faster and smarter, they play increasingly important roles in our lives, functioning as our extended memory, our connection to the world at large, and often the primary interface for communication with friends, family, and wider communities. It is only natural that as part of this evolution, we’ve come to entrust our phones with our most private information, and in many ways treat them as extensions of our digital and physical identities.

This trust is paramount to the Android Security team. The team focuses on ensuring that Android devices respect the privacy and sensitivity of user data. A fundamental aspect of this work centers around the lockscreen, which acts as the proverbial front door to our devices. After all, the lockscreen ensures that only the intended user(s) of a device can access their private data.

This blog post outlines recent improvements around how users interact with the lockscreen on Android devices and more generally with authentication. In particular, we focus on two categories of authentication that present both immense potential as well as potentially immense risk if not designed well: biometrics and environmental modalities.

The tiered authentication model

Before getting into the details of lockscreen and authentication improvements, we first want to establish some context to help relate these improvements to each other. A good way to envision these changes is to fit them into the framework of the tiered authentication model, a conceptual classification of all the different authentication modalities on Android, how they relate to each other, and how they are constrained based on this classification.

The model itself is fairly simple, classifying authentication modalities into three buckets of decreasing levels of security and commensurately increasing constraints. The primary tier is the least constrained in the sense that users only need to re-enter a primary modality under certain situations (for example, after each boot or every 72 hours) in order to use its capability. The secondary and tertiary tiers are more constrained because they cannot be set up and used without having a primary modality enrolled first and they have more constraints further restricting their capabilities.

- Primary Tier - Knowledge Factor: The first tier consists of modalities that rely on knowledge factors, or something the user knows, for example, a PIN, pattern, or password. Good high-entropy knowledge factors, such as complex passwords that are hard to guess, offer the highest potential guarantee of identity.

Knowledge factors are especially useful on Android becauses devices offer hardware backed brute-force protection with exponential-backoff, meaning Android devices prevent attackers from repeatedly guessing a PIN, pattern, or password by having hardware backed timeouts after every 5 incorrect attempts. Knowledge factors also confer additional benefits to all users that use them, such as File Based Encryption (FBE) and encrypted device backup.

- Secondary Tier - Biometrics: The second tier consists primarily of biometrics, or something the user is. Face or fingerprint based authentications are examples of secondary authentication modalities. Biometrics offer a more convenient but potentially less secure way of confirming your identity with a device.

We will delve into Android biometrics in the next section.

- The Tertiary Tier - Environmental: The last tier includes modalities that rely on something the user has. This could either be a physical token, such as with Smart Lock’s Trusted Devices where a phone can be unlocked when paired with a safelisted bluetooth device. Or it could be something inherent to the physical environment around the device, such as with Smart Lock’s Trusted Places where a phone can be unlocked when it is taken to a safelisted location.

Improvements to tertiary authentication

While both Trusted Places and Trusted Devices (and tertiary modalities in general) offer convenient ways to get access to the contents of your device, the fundamental issue they share is that they are ultimately a poor proxy for user identity. For example, an attacker could unlock a misplaced phone that uses Trusted Place simply by driving it past the user's home, or with moderate amount of effort, spoofing a GPS signal using off-the-shelf Software Defined Radios and some mild scripting. Similarly with Trusted Device, access to a safelisted bluetooth device also gives access to all data on the user’s phone.

Because of this, a major improvement has been made to the environmental tier in Android 10. The Tertiary tier was switched from an active unlock mechanism into an extending unlock mechanism instead. In this new mode, a tertiary tier modality can no longer unlock a locked device. Instead, if the device is first unlocked using either a primary or secondary modality, it can continue to keep it in the unlocked state for a maximum of four hours.

A closer look at Android biometrics

Biometric implementations come with a wide variety of security characteristics, so we rely on the following two key factors to determine the security of a particular implementation:

- Architectural security: The resilience of a biometric pipeline against kernel or platform compromise. A pipeline is considered secure if kernel and platform compromises don’t grant the ability to either read raw biometric data, or inject synthetic data into the pipeline to influence an authentication decision.

- Spoofability: Is measured using the Spoof Acceptance Rate (SAR). SAR is a metric first introduced in Android P, and is intended to measure how resilient a biometric is against a dedicated attacker. Read more about SAR and its measurement in Measuring Biometric Unlock Security.

We use these two factors to classify biometrics into one of three different classes in decreasing order of security:

- Class 3 (formerly Strong)

- Class 2 (formerly Weak)

- Class 1 (formerly Convenience)

Each class comes with an associated set of constraints that aim to balance their ease of use with the level of security they offer.

These constraints reflect the length of time before a biometric falls back to primary authentication, and the allowed application integration. For example, a Class 3 biometric enjoys the longest timeouts and offers all integration options for apps, while a Class 1 biometric has the shortest timeouts and no options for app integration. You can see a summary of the details in the table below, or the full details in the Android Android Compatibility Definition Document (CDD).

1 App integration means exposing an API to apps (e.g., via integration with BiometricPrompt/BiometricManager, androidx.biometric, or FIDO2 APIs)

2 Keystore integration means integrating Keystore, e.g., to release app auth-bound keys

Benefits and caveats

Biometrics provide convenience to users while maintaining a high level of security. Because users need to set up a primary authentication modality in order to use biometrics, it helps boost the lockscreen adoption (we see an average of 20% higher lockscreen adoption on devices that offer biometrics versus those that do not). This allows more users to benefit from the security features that the lockscreen provides: gates unauthorized access to sensitive user data and also confers other advantages of a primary authentication modality to these users, such as encrypted backups. Finally, biometrics also help reduce shoulder surfing attacks in which an attacker tries to reproduce a PIN, pattern, or password after observing a user entering the credential.

However, it is important that users understand the trade-offs involved with the use of biometrics. Primary among these is that no biometric system is foolproof. This is true not just on Android, but across all operating systems, form-factors, and technologies. For example, a face biometric implementation might be fooled by family members who resemble the user or a 3D mask of the user. A fingerprint biometric implementation could potentially be bypassed by a spoof made from latent fingerprints of the user. Although anti-spoofing or Presentation Attack Detection (PAD) technologies have been actively developed to mitigate such spoofing attacks, they are mitigations, not preventions.

One effort that Android has made to mitigate the potential risk of using biometrics is the lockdown mode introduced in Android P. Android users can use this feature to temporarily disable biometrics, together with Smart Lock (for example, Trusted Places and Trusted Devices) as well as notifications on the lock screen, when they feel the need to do so.

To use the lockdown mode, users first need to set up a primary authentication modality and then enable it in settings. The exact setting where the lockdown mode can be enabled varies by device models, and on a Google Pixel 4 device it is under Settings > Display > Lock screen > Show lockdown option. Once enabled, users can trigger the lockdown mode by holding the power button and then clicking the Lockdown icon on the power menu. A device in lockdown mode will return to the non-lockdown state after a primary authentication modality (such as a PIN, pattern, or password) is used to unlock the device.

BiometricPrompt - New APIs

In order for developers to benefit from the security guarantee provided by Android biometrics and to easily integrate biometric authentication into their apps to better protect sensitive user data, we introduced the BiometricPrompt APIs in Android P.

There are several benefits of using the BiometricPrompt APIs. Most importantly, these APIs allow app developers to target biometrics in a modality-agnostic way across different Android devices (that is, BiometricPrompt can be used as a single integration point for various biometric modalities supported on devices), while controlling the security guarantees that the authentication needs to provide (such as requiring Class 3 or Class 2 biometrics, with device credential as a fallback). In this way, it helps protect app data with a second layer of defenses (in addition to the lockscreen) and in turn respects the sensitivity of user data. Furthermore, BiometricPrompt provides a persistent UI with customization options for certain information (for example, title and description), offering a consistent user experience across biometric modalities and across Android devices.

As shown in the following architecture diagram, apps can integrate with biometrics on Android devices through either the framework API or the support library (that is, androidx.biometric for backward compatibility). One thing to note is that FingerprintManager is deprecated because developers are encouraged to migrate to BiometricPrompt for modality-agnostic authentications.

Improvements to BiometricPrompt

Android 10 introduced the BiometricManager class that developers can use to query the availability of biometric authentication and included fingerprint and face authentication integration for BiometricPrompt.

In Android 11, we introduce new features such as the BiometricManager.Authenticators interface which allows developers to specify the authentication types accepted by their apps, as well as additional support for auth-per-use keys within the BiometricPrompt class.

More details can be found in the Android 11 preview and Android Biometrics documentation. Read more about BiometricPrompt API usage in our blog post Using BiometricPrompt with CryptoObject: How and Why and our codelab Login with Biometrics on Android.

Source: Google Online Security Blog

Improved malware protection for users in the Advanced Protection Program

Advanced Protection users are already well-protected from phishing. As a result, we’ve seen that attackers target these users through other means, such as leading them to download malware. In August 2019, Chrome began warning Advanced Protection users when a downloaded file may be malicious.

Now, in addition to this warning, Chrome is giving Advanced Protection users the ability to send risky files to be scanned by Google Safe Browsing’s full suite of malware detection technology before opening the file. We expect these cloud-hosted scans to significantly improve our ability to detect when these files are malicious.

When a user downloads a file, Safe Browsing will perform a quick check using metadata, such as hashes of the file, to evaluate whether it appears potentially suspicious. For any downloads that Safe Browsing deems risky, but not clearly unsafe, the user will be presented with a warning and the ability to send the file to be scanned. If the user chooses to send the file, Chrome will upload it to Google Safe Browsing, which will scan it using its static and dynamic analysis techniques in real time. After a short wait, if Safe Browsing determines the file is unsafe, Chrome will warn the user. As always, users can bypass the warning and open the file without scanning, if they are confident the file is safe. Safe Browsing deletes uploaded files a short time after scanning.

Online threats are constantly changing, and it's important that users’ security protections automatically evolve as well. With the US election fast approaching, for example, Advanced Protection could be useful to members of political campaigns whose accounts are now more likely to be targeted. If you’re a user at high-risk of attack, visit g.co/advancedprotection to enroll in the Advanced Protection Program.

Posted by Kylie McRoberts, Program Manager and Alec Guertin, Security Engineer

Posted by Kylie McRoberts, Program Manager and Alec Guertin, Security Engineer