This post focuses on what you can do with Search Console to monitor and make the most of structured data on your site. In addition, we have some new features that will help you even more. The new additions are listed below; read on to learn more about them.

- Unparsable structured data is a new report that aggregates structured data syntax errors.

- New enhancement reports for Sitelinks searchbox and Logo.

Monitoring overall structured data performance

Every time Search Console detects a new issue related to structured data on a website, we send an email to account owners. However, if an existing issue gets worse, it won’t trigger an email, so it is still important to check your account periodically. This is not something you need to do every day, but we recommend checking once in a while to make sure everything is working as intended. If your website development has defined cycles, it is good practice to log in to Search Console after changes are made to the website to monitor your performance.

If you’d like an overview of all the errors for a specific structured data feature on your site, navigate to the Enhancements menu in the left sidebar and click a feature. You'll find a summary of all errors and warnings, as well as the valid items.

As mentioned above, we added a new set of reports to help you understand more types of structured data on your site: Sitelinks searchbox and Logo. They are joining the existing set of reports on Recipe, Event, Job Posting and others. You can read more about the reports in the Search Console Help Center.
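For readers who have not yet added either feature, the following is a minimal sketch of the kind of markup these two reports cover. It assumes the JSON-LD format with schema.org `Organization` and `WebSite` types; the helper names (`logo_markup`, `searchbox_markup`, `as_script_tag`) and the `example.com` URLs are hypothetical placeholders, not part of Search Console.

```python
import json


def logo_markup(site_url: str, logo_url: str) -> dict:
    """Build Organization markup that declares a logo (hypothetical helper)."""
    return {
        "@context": "https://schema.org",
        "@type": "Organization",
        "url": site_url,
        "logo": logo_url,
    }


def searchbox_markup(site_url: str, search_url_template: str) -> dict:
    """Build WebSite markup with a SearchAction (hypothetical helper).

    The template must contain the {search_term_string} placeholder that
    the query-input property refers to.
    """
    return {
        "@context": "https://schema.org",
        "@type": "WebSite",
        "url": site_url,
        "potentialAction": {
            "@type": "SearchAction",
            "target": {
                "@type": "EntryPoint",
                "urlTemplate": search_url_template,
            },
            "query-input": "required name=search_term_string",
        },
    }


def as_script_tag(markup: dict) -> str:
    """Serialize the markup into a JSON-LD script tag for the page HTML."""
    body = json.dumps(markup, indent=2)
    return f'<script type="application/ld+json">\n{body}\n</script>'


if __name__ == "__main__":
    # Placeholder URLs; replace with your own site's values.
    print(as_script_tag(logo_markup(
        "https://www.example.com",
        "https://www.example.com/images/logo.png",
    )))
    print(as_script_tag(searchbox_markup(
        "https://www.example.com/",
        "https://www.example.com/search?q={search_term_string}",
    )))
```

Generating the markup from a dictionary and serializing it with `json.dumps` avoids hand-written syntax mistakes such as missing quotes or trailing commas.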

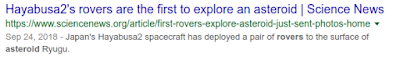

Here's an example of an Enhancement report. Note that you can only see enhancements that have been detected on your pages. The report helps you with the following actions:

- Review the trends of errors, warnings and valid items: To view each status issue separately, click the colored boxes above the bar chart.

- Review warnings and errors per page: To see examples of pages which are currently affected by the issues, click a specific row below the bar chart.

Image: Enhancements report

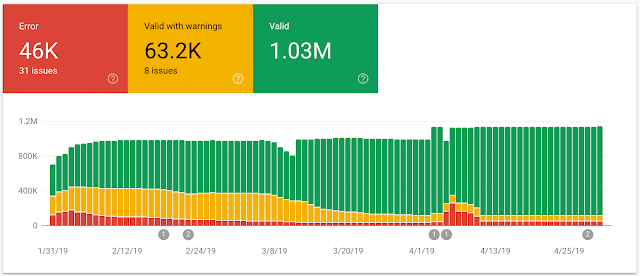

Check this report to see if Google was unable to parse any of the structured data you tried to add to your site. Parsing issues can point to lost opportunities for rich results for your site. The screenshot shows what the report looks like. You can access the report directly and read more about it in our help center.

Image: Unparsable Structured Data report
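To catch syntax errors before they show up in the report, a rough local check can help. The sketch below is an assumption-laden approximation, not how Google parses pages: it only looks at JSON-LD `<script>` blocks in a page's HTML and tests whether each one is valid JSON, using Python's standard library. The names `JsonLdExtractor` and `find_json_ld_syntax_errors` are hypothetical.

```python
import json
from html.parser import HTMLParser


class JsonLdExtractor(HTMLParser):
    """Collect the raw text of every <script type="application/ld+json"> block."""

    def __init__(self):
        super().__init__()
        self._in_json_ld = False
        self._buffer = []
        self.blocks = []

    def handle_starttag(self, tag, attrs):
        # Attribute values can be None (for example <script async>).
        script_type = (dict(attrs).get("type") or "").lower()
        if tag == "script" and script_type == "application/ld+json":
            self._in_json_ld = True
            self._buffer = []

    def handle_data(self, data):
        if self._in_json_ld:
            self._buffer.append(data)

    def handle_endtag(self, tag):
        if tag == "script" and self._in_json_ld:
            self.blocks.append("".join(self._buffer))
            self._in_json_ld = False


def find_json_ld_syntax_errors(html: str) -> list:
    """Return (block_index, message) for each JSON-LD block that is not valid JSON."""
    parser = JsonLdExtractor()
    parser.feed(html)
    parser.close()
    errors = []
    for index, block in enumerate(parser.blocks):
        try:
            json.loads(block)
        except json.JSONDecodeError as exc:
            errors.append((index, f"line {exc.lineno}, column {exc.colno}: {exc.msg}"))
    return errors


if __name__ == "__main__":
    # Second block has a trailing comma, which is a JSON syntax error.
    sample_html = """
    <html><head>
    <script type="application/ld+json">{"@type": "Recipe", "name": "Pie"}</script>
    <script type="application/ld+json">{"@type": "Recipe", "name": "Pie",}</script>
    </head></html>
    """
    for index, message in find_json_ld_syntax_errors(sample_html):
        print(f"JSON-LD block {index} is unparsable: {message}")
```

This covers only JSON syntax in JSON-LD blocks. It says nothing about Microdata or RDFa markup, or about whether a parsable block has the properties a rich result requires, so the Search Console report remains the reference.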

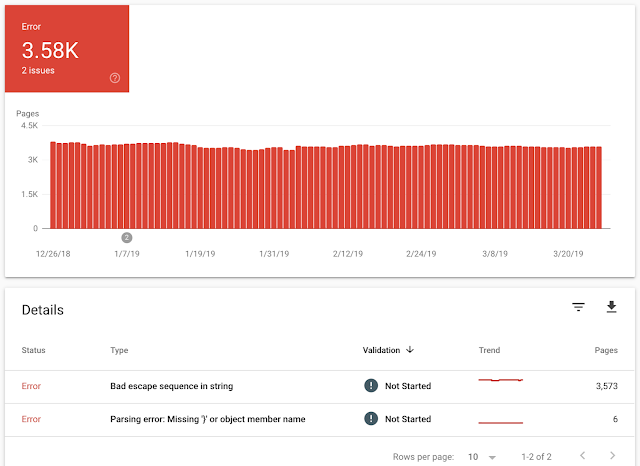

Testing structured data on a URL level

To make sure your pages were processed correctly and are eligible for rich results, or to diagnose why some rich results are not surfacing for a specific URL, you can use the URL Inspection tool. This tool helps you understand areas of improvement at a URL level and gives you an idea of where to focus. When you paste a URL into the search box at the top of Search Console, you can see what’s working properly, along with any warnings or errors related to your structured data, in the enhancements section, as seen below for Recipes.

Image: URL Inspection tool

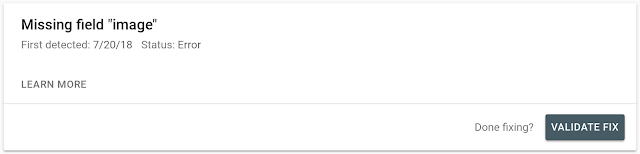

Once you understand and fix the error, you can click Validate Fix (see screenshot) so Google can start validating whether the issue is indeed fixed. When you click the Validate Fix button, Google runs several instantaneous tests. If your pages don’t pass these tests, Search Console notifies you immediately. Otherwise, Search Console reprocesses the rest of the affected pages.

Image: Structured data error detail

Posted by Daniel Waisberg, Search Advocate & Na'ama Zohary, Search Console team