Being able to diagnose application logs, errors and latency is key to understanding failures, but it can be tricky and time-consuming to implement correctly. That’s why we're happy to announce general availability of Stackdriver Diagnostics integration for ASP.NET Core applications, providing libraries to easily integrate Stackdriver Logging, Error Reporting and Trace into your ASP.NET Core applications, with a minimum of effort and code. While on the road to GA, we’ve fixed bugs, listened to and applied customer feedback, and have done extensive testing to make sure it's ready for your production workloads.

The Google.Cloud.Diagnostics.AspNetCore package is available on NuGet. ASP.NET Classic is also supported with the Google.Cloud.Diagnostics.AspNet package.

Now, let’s look at the various Google Cloud Platform (GCP) components that we integrated into this release, and how to begin using them to troubleshoot your ASP.NET Core application.

Stackdriver Logging

Stackdriver Logging allows you to store, search, analyze, monitor and alert on log data and events from GCP and AWS. Logging to Stackdriver is simple with Google.Cloud.Diagnostics.AspNetCore. The package uses ASP.NET Core’s built-in logging API: add the Stackdriver provider, then create and use a logger as you normally would. Your logs then show up in the Stackdriver Logging section of the Google Cloud Console. Initializing and sending logs to Stackdriver Logging only takes a few lines of code:

```csharp
using Google.Cloud.Diagnostics.AspNetCore;
using Microsoft.AspNetCore.Builder;
using Microsoft.Extensions.Logging;

public void Configure(IApplicationBuilder app, ILoggerFactory loggerFactory)
{
    // Initialize Stackdriver Logging.
    loggerFactory.AddGoogle("YOUR-GOOGLE-PROJECT-ID");
    // ...
}

public void LogMessage(ILoggerFactory loggerFactory)
{
    // Send a log to Stackdriver Logging.
    var logger = loggerFactory.CreateLogger("NetworkLog");
    logger.LogInformation("This is a log message.");
}
```

This shows two different logs that were reported to Stackdriver. An expanded log shows its severity, timestamp, payload and many other useful pieces of information.
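Existing logging calls don't need to change because of ASP.NET Core’s provider model: a logger created by the factory forwards each entry to every registered provider. The sketch below is a hypothetical, in-process model of that idea built from plain delegates. `AddSink`, `CreateLogger` and `remoteBuffer` are illustrative names, not part of Microsoft.Extensions.Logging or Google.Cloud.Diagnostics.AspNetCore, and nothing is sent to Stackdriver.

```csharp
using System;
using System.Collections.Generic;

// Hypothetical model of the logging provider pattern. Every logger created by the
// "factory" forwards each message to all registered sinks, so adding a sink does
// not change any logging call site. NOT the Microsoft.Extensions.Logging or
// Google.Cloud.Diagnostics implementation; no data leaves the process.
var sinks = new List<Action<string, string, string>>(); // (category, level, message)

// Stand-in for registering a provider, e.g. loggerFactory.AddGoogle(...).
void AddSink(Action<string, string, string> sink) => sinks.Add(sink);

// Stand-in for loggerFactory.CreateLogger(category): binds the category once.
Action<string, string> CreateLogger(string category) =>
    (level, message) =>
    {
        foreach (var sink in sinks)
        {
            sink(category, level, message);
        }
    };

// One sink prints to the console; a second, hypothetical "remote" sink buffers entries.
AddSink((category, level, message) => Console.WriteLine($"{level}: {category}: {message}"));
var remoteBuffer = new List<string>();
AddSink((category, level, message) => remoteBuffer.Add($"[{level}] {category}: {message}"));

// The call site looks the same no matter how many sinks are registered.
var logger = CreateLogger("NetworkLog");
logger("Information", "This is a log message.");
```

Registering one more sink is the only change needed to send the same entries somewhere new, which mirrors why adding the Stackdriver provider leaves your `logger.LogInformation(...)` calls untouched.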

Stackdriver Error Reporting

Adding the Stackdriver Error Reporting middleware to the beginning of your middleware pipeline reports all uncaught exceptions to Stackdriver Error Reporting. Exceptions are grouped and shown in the Stackdriver Error Reporting section of the Cloud Console. Here’s how to initialize Stackdriver Error Reporting in your ASP.NET Core application:

```csharp
using Google.Cloud.Diagnostics.AspNetCore;
using Microsoft.AspNetCore.Builder;
using Microsoft.Extensions.DependencyInjection;

public void ConfigureServices(IServiceCollection services)
{
    services.AddGoogleExceptionLogging(options =>
    {
        options.ProjectId = "YOUR-GOOGLE-PROJECT-ID";
        options.ServiceName = "ImageGenerator";
        options.Version = "1.0.2";
    });
    // ...
}

public void Configure(IApplicationBuilder app)
{
    // Use before handling any requests to ensure all unhandled exceptions are reported.
    app.UseGoogleExceptionLogging();
    // ...
}
```

You can also report caught and handled exceptions with the IExceptionLogger interface:

public void ReadFile(IExceptionLogger exceptionLogger)

{

try

{

string scores = File.ReadAllText(@"C:\Scores.txt");

Console.WriteLine(scores);

}

catch (IOException e)

{

exceptionLogger.Log(e);

}

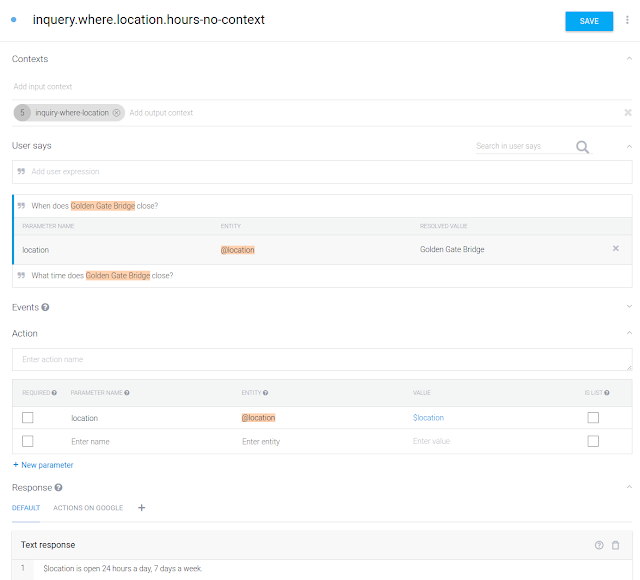

}This shows the occurrence of an error over time for a specific application and version. The exact error is shown on the bottom.
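Placement of the middleware matters because each middleware only sees exceptions thrown by the components it wraps. The following is a hypothetical model of an ASP.NET Core-style pipeline built from plain delegates; it is not the implementation of `UseGoogleExceptionLogging`. An error-reporting stand-in that catches, records and rethrows captures a failure from a later component, but misses the same failure when it is registered after that component. The names `errorReporting`, `failingAuth`, `Build` and `Invoke` are illustrative only.

```csharp
using System;
using System.Collections.Generic;

// Hypothetical model of an ASP.NET Core-style pipeline built from plain delegates.
// It only illustrates ordering; it is not how UseGoogleExceptionLogging is implemented.
var reported = new List<string>();

// Stand-in for error-reporting middleware: record any exception from later components, then rethrow.
Func<Action<string>, Action<string>> errorReporting = next => path =>
{
    try
    {
        next(path);
    }
    catch (Exception e)
    {
        reported.Add($"{path}: {e.Message}");
        throw; // let the host still observe the failure
    }
};

// Stand-in for a component that fails before the request reaches the endpoint.
Func<Action<string>, Action<string>> failingAuth = next => path =>
{
    if (path.StartsWith("/admin", StringComparison.Ordinal))
    {
        throw new InvalidOperationException("auth failed");
    }
    next(path);
};

Action<string> endpoint = _ => { };

// Compose so that the first middleware in the list is the outermost wrapper.
Action<string> Build(params Func<Action<string>, Action<string>>[] middlewares)
{
    Action<string> pipeline = endpoint;
    for (int i = middlewares.Length - 1; i >= 0; i--)
    {
        pipeline = middlewares[i](pipeline);
    }
    return pipeline;
}

// Stand-in for the host: swallow the exception after the pipeline has run.
void Invoke(Action<string> pipeline, string requestPath)
{
    try { pipeline(requestPath); }
    catch (InvalidOperationException) { }
}

Invoke(Build(errorReporting, failingAuth), "/admin/a"); // reporter wraps the failing component
Invoke(Build(failingAuth, errorReporting), "/admin/b"); // failure happens outside the reporter

Console.WriteLine(reported.Count); // prints 1
```

Only the request to `/admin/a` is recorded, which is the same reason `app.UseGoogleExceptionLogging()` is called before any other middleware in `Configure`.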

Stackdriver Trace

Stackdriver Trace captures latency information from your applications. For example, a Stackdriver Trace integration point lets you diagnose whether HTTP requests are taking too long. Like Error Reporting, Trace hooks into your middleware pipeline and should be added at its beginning. Initializing Stackdriver Trace is similar to setting up Stackdriver Error Reporting:

```csharp
using Google.Cloud.Diagnostics.AspNetCore;
using Microsoft.AspNetCore.Builder;
using Microsoft.Extensions.DependencyInjection;

public void ConfigureServices(IServiceCollection services)
{
    string projectId = "YOUR-GOOGLE-PROJECT-ID";
    services.AddGoogleTrace(options =>
    {
        options.ProjectId = projectId;
    });
    // ...
}

public void Configure(IApplicationBuilder app)
{
    // Use at the start of the request pipeline to ensure the entire request is traced.
    app.UseGoogleTrace();
    // ...
}
```

To trace a specific block of code, use an IManagedTracer to start a span; the span ends when it is disposed:

```csharp
using System;
using Google.Cloud.Diagnostics.Common;

public void TraceHelloWorld(IManagedTracer tracer)
{
    // Everything inside the using block is covered by the span.
    using (tracer.StartSpan(nameof(TraceHelloWorld)))
    {
        Console.Out.WriteLine("Hello, World!");
    }
}
```

This shows the time spent in each portion of an HTTP request. The timeline shows time spent on both the front end and the back end.
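Because a span ends when its `using` block disposes it, spans started inside other spans form the parent/child timeline described above. The sketch below is a rough, in-process illustration of what such nested spans record, using `Stopwatch` and a stack of open span names. It is not how `IManagedTracer` works, and nothing is sent to Stackdriver Trace; `RunTimed` and the span names are hypothetical.

```csharp
using System;
using System.Collections.Generic;
using System.Diagnostics;
using System.Threading;

// Hypothetical in-process model of nested spans: each finished span records its
// name, its parent's name (if any) and how long it took. Not the IManagedTracer
// implementation; nothing is sent to Stackdriver Trace.
var finished = new List<(string Name, string? Parent, TimeSpan Duration)>();
var openSpans = new Stack<string>();

void RunTimed(string name, Action action)
{
    // The innermost open span, if any, becomes this span's parent.
    string? parent = openSpans.Count > 0 ? openSpans.Peek() : null;
    openSpans.Push(name);
    var stopwatch = Stopwatch.StartNew();
    try
    {
        action();
    }
    finally
    {
        // Like disposing a span, this runs even if the block throws.
        stopwatch.Stop();
        openSpans.Pop();
        finished.Add((name, parent, stopwatch.Elapsed));
    }
}

RunTimed("HandleRequest", () =>
{
    RunTimed("QueryDatabase", () => Thread.Sleep(20)); // simulated work
    RunTimed("RenderView", () => Thread.Sleep(10));    // simulated work
});

// Children finish (and are recorded) before their parent.
foreach (var span in finished)
{
    Console.WriteLine($"{span.Name} (parent: {span.Parent ?? "none"}): {span.Duration.TotalMilliseconds:F0} ms");
}
```

The parent's duration always covers its children, which is why a slow child span stands out directly underneath the request that contains it.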

Not using ASP.NET Core?

If you haven’t made the switch to ASP.NET Core but still want to use Stackdriver diagnostics tools, we also provide a package for ASP.NET, fittingly named Google.Cloud.Diagnostics.AspNet. It provides simple Stackdriver diagnostics integration for ASP.NET applications: you can add Error Reporting and Trace for MVC and Web API to your ASP.NET application with a line of code. ASP.NET doesn’t have a built-in logging API, so we have also integrated Stackdriver Logging with log4net in our Google.Cloud.Logging.Log4Net package.

Our goal is to make GCP a great place to build and run ASP.NET and ASP.NET Core applications, and troubleshooting performance and errors is a big part of that. Let us know what you think of this new functionality, and leave us your feedback on GitHub.