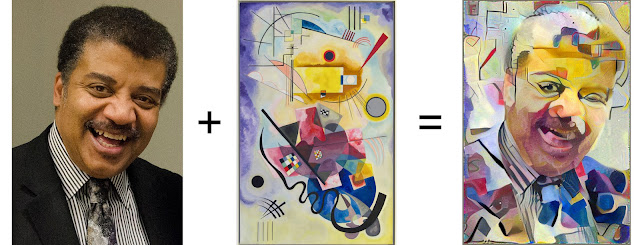

In 2015, our early attempts to visualize how neural networks understand images led to psychedelic images. Soon after, we open sourced our code as DeepDream and it grew into a small art movement producing all sorts of amazing things. But we also continued the original line of research behind DeepDream, trying to address one of the most exciting questions in Deep Learning: how do neural networks do what they do?

Last year in the online journal Distill, we demonstrated how those same techniques could show what individual neurons in a network do, rather than just what is “interesting to the network” as in DeepDream. This allowed us to see how neurons in the middle of the network are detectors for all sorts of things — buttons, patches of cloth, buildings — and see how those build up to be more and more sophisticated over the networks layers.

|

| Visualizations of neurons in GoogLeNet. Neurons in higher layers represent higher level ideas. |

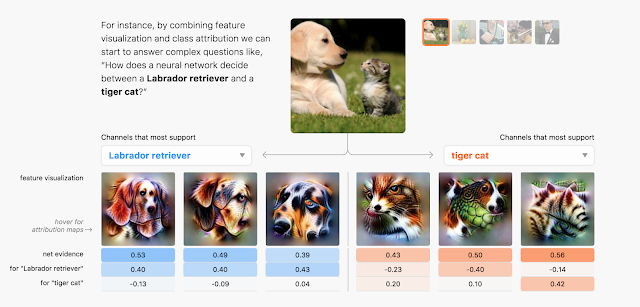

Today, we’re excited to publish “The Building Blocks of Interpretability,” a new Distill article exploring how feature visualization can combine together with other interpretability techniques to understand aspects of how networks make decisions. We show that these combinations can allow us to sort of “stand in the middle of a neural network” and see some of the decisions being made at that point, and how they influence the final output. For example, we can see things like how a network detects a floppy ear, and then that increases the probability it gives to the image being a “Labrador retriever” or “beagle”.

We explore techniques for understanding which neurons fire in the network. Normally, if we ask which neurons fire, we get something meaningless like “neuron 538 fired a little bit,” which isn’t very helpful even to experts. Our techniques make things more meaningful to humans by attaching visualizations to each neuron, so we can see things like “the floppy ear detector fired”. It’s almost a kind of MRI for neural networks.

We can also zoom out and show how the entire image was “perceived” at different layers. This allows us to really see the transition from the network detecting very simple combinations of edges, to rich textures and 3d structure, to high-level structures like ears, snouts, heads and legs.

These insights are exciting by themselves, but they become even more exciting when we can relate them to the final decision the network makes. So not only can we see that the network detected a floppy ear, but we can also see how that increases the probability of the image being a labrador retriever.

In addition to our paper, we’re also releasing Lucid, a neural network visualization library building off our work on DeepDream. It allows you to make the sort lucid feature visualizations we see above, in addition to more artistic DeepDream images.

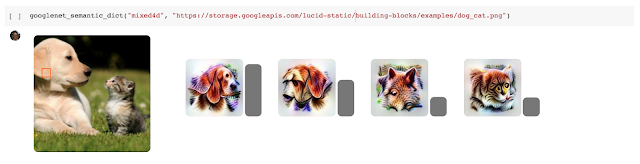

We’re also releasing colab notebooks. These notebooks make it extremely easy to use Lucid to reproduce visualizations in our article! Just open the notebook, click a button to run code — no setup required!

|

| In colab notebooks you can click a button to run code, and see the result below. |

By Chris Olah, Research Scientist and Arvind Satyanarayan, Visiting Researcher, Google Brain Team