Posted by Bruno Oliveira, Software Engineer

As developers, we all know that having the right assets is crucial to the success of a 3D application, especially with AR and VR apps. Since we launched Poly a few weeks ago, many developers have been downloading and using Poly models in their apps and games. To make this process easier and more powerful, today we launched the Poly API, which allows applications to dynamically search and download 3D assets at both edit and run time.

The API is REST-based, so it's inherently cross-platform. To help you make the API calls and convert the results into objects that you can display in your app, we provide several toolkits and samples for some common game engines and platforms. Even if your engine or platform isn't included in this list, remember that the API is based on HTTP, which means you can call it from virtually any device that's connected to the Internet.

Here are some of the things the API allows you to do:

- List assets, with many possible filters:

  - keyword

  - category ("Animals", "Technology", "Transportation", etc.)

  - asset type (Blocks, Tilt Brush, etc.)

  - complexity (low, medium, high complexity)

  - curated (only curated assets or all assets)

- Get a particular asset by ID

- Get the user's own assets

- Get the user's liked assets

- Download assets. Formats vary by asset type (OBJ, GLTF1, GLTF2).

- Download material files and textures for assets.

- Get asset metadata (author, title, description, license, creation time, etc.)

- Fetch thumbnails for assets
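As a rough illustration of how the listing filters above could map onto a REST call, here is a minimal Python sketch that only builds request URLs and sends nothing. The base URL and the parameter names (`key`, `keywords`, `category`, `format`, `maxComplexity`, `curated`) are assumptions rather than details from this post, so check the API reference on the developers site for the exact names. The helper names are hypothetical.

```python
from urllib.parse import quote, urlencode

# Assumed base URL; it is not stated in this post.
BASE_URL = "https://poly.googleapis.com/v1"


def build_list_url(api_key, keywords=None, category=None,
                   asset_format=None, max_complexity=None, curated=None):
    """Build a URL for listing assets; filters left as None are omitted."""
    params = {"key": api_key}
    # All parameter names below are assumptions for illustration.
    if keywords is not None:
        params["keywords"] = keywords
    if category is not None:
        params["category"] = category
    if asset_format is not None:
        params["format"] = asset_format
    if max_complexity is not None:
        params["maxComplexity"] = max_complexity
    if curated is not None:
        params["curated"] = "true" if curated else "false"
    return BASE_URL + "/assets?" + urlencode(params)


def build_asset_url(api_key, asset_id):
    """Build a URL for fetching a single asset by its ID."""
    path = "/assets/" + quote(asset_id, safe="")
    return BASE_URL + path + "?" + urlencode({"key": api_key})
```

Because the result is a plain URL, it can be handed to whatever HTTP client your platform provides, for example `build_list_url("YOUR_API_KEY", keywords="piano", curated=True)`.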

Poly Toolkit for Unity Developers

If you are using Unity, we offer Poly Toolkit for Unity, a plugin that wraps the API calls and handles downloading and converting assets, exposing all of this through a simple C# API. For example, you can fetch and import an asset into your scene at runtime with a single statement:

PolyApi.GetAsset(ASSET_ID,
    result => { PolyApi.Import(result.Value, PolyImportOptions.Default()); });

Poly Toolkit optionally also handles authentication for you, so that you can list the signed in user's own private assets, or the assets that the user has liked on the Poly website.

Poly Toolkit for Unity also comes with an editor window, where you can search for assets on Poly and import them into your Unity scene directly from the editor.

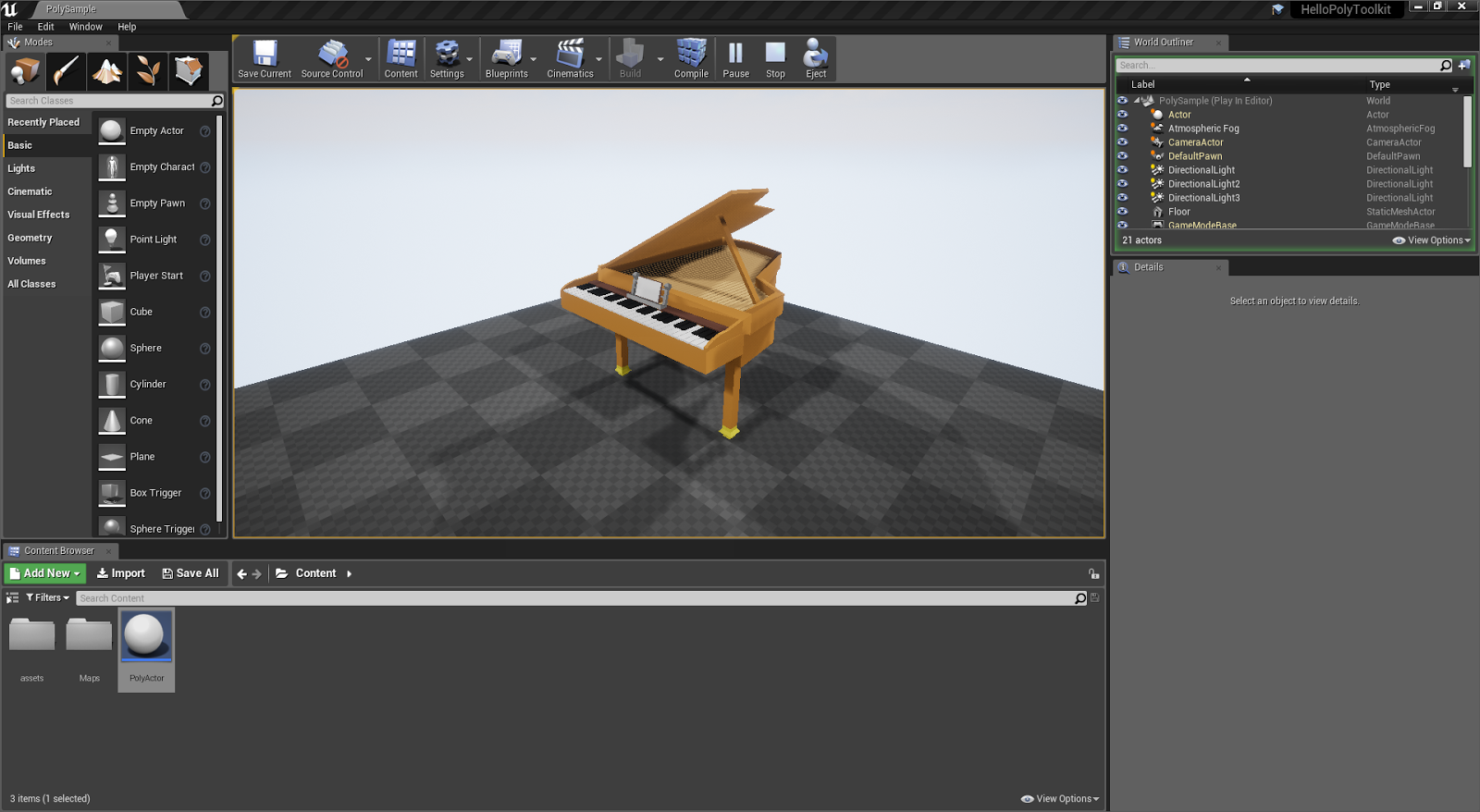

Poly Toolkit for Unreal Developers

If you are using Unreal, we also offer Poly Toolkit for Unreal, which wraps the API and performs automatic download and conversion of OBJs and Blocks models from Poly. It lets you query for assets, filter the results, and download and import assets as ready-to-use Unreal actors for your game.

Credit: Piano by Bruno Oliveira

How to use the Poly API with an Android, Web or iOS app

Not using a game engine? No problem! If you are developing for Android, check out our Android sample code, which includes a basic sample with no external dependencies, and also a sample that shows how to use the Poly API in conjunction with ARCore. The samples include:

- Asynchronous HTTP connections to the Poly API.

- Asynchronous downloading of asset files.

- Conversion of OBJ and MTL files to OpenGL-compatible VBOs and IBOs.

- Examples of basic shaders.

- Integration with ARCore (dynamically downloads an object from Poly and lets the user place it in the scene).
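The OBJ conversion step in the list above can be sketched roughly as follows. This is a simplified Python illustration, not the Android sample's code: it reads only `v` and `f` lines, ignores normals, texture coordinates and MTL materials, and triangulates polygons as a fan. The function name `obj_to_buffers` is hypothetical.

```python
def obj_to_buffers(obj_text):
    """Convert OBJ text into a flat vertex list and a triangle index list.

    Returns (vertices, indices): vertices is [x0, y0, z0, x1, ...] and
    indices holds three zero-based vertex indices per triangle, which is
    the layout typically uploaded to a VBO and an IBO.
    """
    vertices = []
    indices = []
    for line in obj_text.splitlines():
        parts = line.split()
        if not parts:
            continue
        if parts[0] == "v":
            # Vertex position: keep x, y, z and drop any optional w.
            vertices.extend(float(c) for c in parts[1:4])
        elif parts[0] == "f":
            count = len(vertices) // 3
            face = []
            for ref in parts[1:]:
                # A face entry looks like "v", "v/vt" or "v/vt/vn".
                idx = int(ref.split("/")[0])
                # OBJ indices are 1-based; negative ones count from the end.
                face.append(idx - 1 if idx > 0 else count + idx)
            # Fan triangulation: (0, i, i + 1) for each following pair.
            for i in range(1, len(face) - 1):
                indices.extend([face[0], face[i], face[i + 1]])
    return vertices, indices
```

A real renderer would also interleave normals and texture coordinates and split the mesh by material, which is where the MTL file comes in.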

Credit: Cactus wren by Poly by Google

If you are an iOS developer, we have two samples for you as well: one using SceneKit and one using ARKit, showing how to build an iOS app that downloads and imports models from Poly. This includes all the logic necessary to open an HTTP connection, make the API requests, parse the results, build the 3D objects from the data and place them on the scene.
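The "parse the results" step is similar on every platform, so here is a minimal Python sketch of it. It assumes an asset is returned as JSON with a `formats` list whose entries carry a `formatType`, a `root` file and optional `resources`, each with `relativePath` and `url`. Those field names are assumptions, the sample body is made up for illustration, and `files_for_format` is a hypothetical helper.

```python
import json


def files_for_format(asset, format_type="OBJ"):
    """Return [(relative_path, url), ...] for one format of an asset.

    The root file comes first, followed by its resources (for OBJ,
    typically the MTL file and textures). Returns [] if the asset
    has no entry of the requested type.
    """
    for fmt in asset.get("formats", []):
        if fmt.get("formatType") == format_type:
            entries = [fmt["root"]] + fmt.get("resources", [])
            return [(e["relativePath"], e["url"]) for e in entries]
    return []


# Hypothetical response body, trimmed to the (assumed) fields used above.
SAMPLE = json.loads("""
{
  "name": "assets/EXAMPLE_ID",
  "displayName": "Example model",
  "formats": [
    {
      "formatType": "OBJ",
      "root": {"relativePath": "model.obj",
               "url": "https://example.com/model.obj"},
      "resources": [
        {"relativePath": "materials.mtl",
         "url": "https://example.com/materials.mtl"}
      ]
    }
  ]
}
""")

print(files_for_format(SAMPLE))
```

Each returned pair can then be downloaded and saved under its relative path, so that references between the model file and its materials keep working.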

For web developers, we also offer a complete WebGL sample using Three.js, showing how to get and display a particular asset, or perform searches. There is also a sample showing how to import and display Tilt Brush sketches.

Credit: Forest by Alex "SAFFY" Safayan

No matter what engine or platform you are using, we hope that the Poly API will help bring high quality assets to your app and help you increase engagement with your users! You can find more information about the Poly API and our toolkits and samples on our developers site.