By Ian Maddox, GCP Solutions Architect

If information is gold, the database is a treasure chest. Web applications store their most valuable data in a database, and lots of sites would cease to exist if their data were stolen or deleted. This post aims to give you a series of best practices to help protect and defend the databases you host on Google Cloud Platform (GCP).

Database security starts before the first record is ever stored. You must consider the impact on security as you design the hosting environment. From firewall rules to logging, there's a lot to think about both inside and outside the database.

First considerations

When it comes to database security, one of the fundamental things to consider is whether to deploy your own database servers or to use one of Google's managed storage services. This decision should be influenced heavily by your existing architecture, staff skills, policies and need for specialized functionality.

This post is not intended to sell you specific GCP products, but absent any overwhelming reasons to host your own database, we recommend using a managed version thereof. Managed database services are designed to work at Google scale, with all of the benefits of our security model. Organizations seeking compliance with PCI, SOX, HIPAA, GDPR and other regimes will appreciate the significant reduction in effort with a shared responsibility model. And even if these rules and regulations don't apply to your organization, I recommend following the PCI SAQ A (payment card industry self-assessment questionnaire type A) as a baseline set of best practices.

Access controls

You should limit access to the database itself as much as possible. Self-hosted databases should have VPC firewall rules locked down to only allow ingress from and egress to authorized hosts. All ports and endpoints not specifically required should be blocked. If possible, ensure changes to the firewall are logged and alerts are configured for unexpected changes. This happens automatically for firewall changes in GCP. Tools like Forseti Security can monitor and manage security configurations for both Google managed services and custom databases hosted on Google Compute Engine instances.
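To make the lock-down advice above concrete, here is a minimal sketch that flags ingress rules open to the whole internet. The rule dictionaries are a simplified, hypothetical shape: the field names `name`, `direction` and `source_ranges` are assumptions for illustration and not the actual GCP API schema.

```python
from typing import Dict, List

# Address ranges that mean "anyone on the internet".
OPEN_RANGES = {"0.0.0.0/0", "::/0"}


def find_open_ingress(rules: List[Dict]) -> List[str]:
    """Return the names of ingress rules that accept traffic from any address."""
    flagged = []
    for rule in rules:
        # Only ingress rules are of interest here; skip everything else.
        if rule.get("direction", "INGRESS").upper() != "INGRESS":
            continue
        # A rule is flagged if any of its source ranges is wide open.
        if OPEN_RANGES & set(rule.get("source_ranges", [])):
            flagged.append(rule["name"])
    return flagged
```

A check like this could run on a schedule against an exported rule list, so that a rule which exposes the database port to everyone is noticed quickly.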

As you prepare to launch your database, you should also consider the environment in which it operates. Service accounts streamline authorization to Google databases using automatically rotating keys, and you can manage rotation for self-hosted databases in GCP using Cloud Key Management Service (KMS).

Data security

Always keep your data retention policy in mind as you implement your schema. Sensitive data that you have no use for is a liability and should be archived or pruned. Many compliance regulations (HIPAA, PCI, GDPR, SOX) provide specific guidance to identify that data. You may find it helpful to operate under the pessimistic security model in which you assume your application will be cracked and your database will be exfiltrated. This can help clarify some of the decisions you need to make regarding retention, encryption at rest, etc.

Should the worst happen and your database is compromised, you should receive alerts about unexpected behavior such as spikes in egress traffic. Your organization may also benefit from using "canary" data: specially crafted information that should never be seen in the real world by your application under normal circumstances. Your application should be designed to detect a canary account logging in or canary values transiting the network. If found, your application should send you alerts and take immediate action to stem the possible compromise. In a way, canary data is similar to retail store anti-theft tags. These security devices have known detection characteristics and can be hidden inside a product. A security-conscious retailer will set up sensors at their store exits to detect unauthorized removal of inventory.
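The canary idea can be sketched in a few lines. This is only an illustration: the canary values below are made up, and a real deployment would load them from somewhere an attacker who has the database cannot read.

```python
from typing import Iterable, List, Set

# Hypothetical canary values seeded into the database. No legitimate
# user or process should ever present or transmit them.
CANARY_VALUES: Set[str] = {
    "canary.user.7f3a@example.com",
    "4000-0000-0000-CANARY",
}


def find_canaries(payload: str, canaries: Iterable[str] = CANARY_VALUES) -> List[str]:
    """Return every canary value that appears in the payload (case-insensitive)."""
    lowered = payload.lower()
    return sorted(c for c in canaries if c.lower() in lowered)
```

An application might call `find_canaries` on login names or on outbound response bodies and raise an alert whenever the result is non-empty.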

Of course, you should develop and test a disaster recovery plan. Having a copy of the database protects you from some failures, but it won't protect you if your data has been altered or deleted. A good disaster recovery plan will cover you in the event of data loss, hardware issues, network availability and any other disaster you might expect. And as always, you must regularly test and monitor the backup system to ensure reliable disaster recovery.

Configuration

If your database was deployed with a default login, you should make it a top priority to change or disable that account. Further, if any of your database accounts are password-protected, make sure those passwords are long and complex; don’t use simple or empty passwords under any circumstances. If you're able to use Cloud KMS, that should be your first choice. Beyond that, be sure to develop a schedule for credential rotation and define criteria for out-of-cycle rekeying.
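Where a password is unavoidable, generating it from a cryptographically secure source avoids weak, human-chosen values. The following is a minimal sketch using Python's standard `secrets` module. The 16-character floor and the 32-character default are assumptions for illustration, not requirements taken from any standard.

```python
import secrets
import string

# Letters, digits and punctuation give a large alphabet per character.
ALPHABET = string.ascii_letters + string.digits + string.punctuation


def generate_password(length: int = 32) -> str:
    """Return a random password drawn from a cryptographically secure source."""
    if length < 16:
        # Assumed minimum for this sketch; adjust to your own policy.
        raise ValueError("password length should be at least 16 characters")
    return "".join(secrets.choice(ALPHABET) for _ in range(length))
```

Some databases and connection strings restrict which punctuation characters are allowed, so the alphabet may need trimming for your environment.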

Regardless of which method you use for authentication, you should have different credentials for read, write and admin-level privileges. Even if an application performs both read and write operations, separate credentials can limit the damage caused by bad code or unauthorized access.

Everyone who needs to access the database should have their own private credentials. Create service accounts for each discrete application with only the permissions required for that service. Cloud Identity and Access Management is a powerful tool for managing user privileges in GCP; generic administrator accounts should be avoided as they mask the identity of the user. User credentials should restrict rights to the minimum required to perform their duties. For example, a user that creates ad-hoc reports should not be able to alter schema. Consider using views, stored procedures and granular permissions to further restrict access to only what a user needs to know and further mitigate SQL injection vulnerabilities.

Logging is a critical part of any application. Databases should produce logs for all key events, especially login attempts and administrative actions. These logs should be aggregated in an immutable logging service hosted apart from the database. Whether you're using Stackdriver or some other service, credentials and read access to the logs should be completely separate from the database credentials.

Whenever possible, you should implement a monitor or proxy that can detect and block brute force login attempts. If you’re using Stackdriver, you could set up an alerting policy to a Cloud Function webhook that keeps track of recent attempts and creates a firewall rule to block potential abuse.
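The "keeps track of recent attempts" part of such a webhook could look roughly like the sketch below. It is an in-memory sliding window only. The class name, thresholds and the idea of keying on source IP are assumptions for illustration, and the step that actually creates a firewall rule is deliberately left out.

```python
import time
from collections import defaultdict, deque
from typing import Deque, Dict, Optional


class FailedLoginTracker:
    """Counts failed logins per source within a sliding time window."""

    def __init__(self, max_attempts: int = 5, window_seconds: float = 60.0):
        self.max_attempts = max_attempts
        self.window_seconds = window_seconds
        self._attempts: Dict[str, Deque[float]] = defaultdict(deque)

    def record_failure(self, source_ip: str, now: Optional[float] = None) -> bool:
        """Record one failed login; return True if the source should be blocked."""
        now = time.time() if now is None else now
        attempts = self._attempts[source_ip]
        attempts.append(now)
        # Drop attempts that have aged out of the window.
        while attempts and now - attempts[0] > self.window_seconds:
            attempts.popleft()
        return len(attempts) >= self.max_attempts
```

A serverless function does not keep memory between invocations, so a real version would need to persist these counters in an external store.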

The database should run as an application-specific user, not root or admin. Any host files should be secured with appropriate file permissions and ownership to prevent unauthorized execution or alteration. POSIX-compliant operating systems offer chown and chmod to set file ownership and permissions, and Windows servers offer several tools as well. Tools such as Ubuntu's AppArmor go even further to confine applications to a limited set of resources.
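On a POSIX host, the permission check described above can be scripted. This sketch assumes a policy where database configuration files are readable and writable by their owner only (mode 0600). That policy is an assumption for illustration, so adjust it to what your database actually requires.

```python
import os
import stat


def is_too_permissive(path: str) -> bool:
    """Return True if group or other users have any access to the file."""
    mode = os.stat(path).st_mode
    return bool(mode & (stat.S_IRWXG | stat.S_IRWXO))


def lock_down(path: str) -> None:
    """Restrict the file to owner read/write only (equivalent to chmod 600)."""
    os.chmod(path, stat.S_IRUSR | stat.S_IWUSR)
```

Running `is_too_permissive` over the database's configuration directory from a scheduled job is one way to catch permissions that drift over time.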

Application considerations

When designing an application, an important best practice is to only employ encrypted connections to the database, which eliminates the possibility of an inadvertent leak of data or credentials via network sniffing. Cloud SQL users can do this using Google's encrypting SQL proxy. Some encryption methods also allow your application to authenticate the database host to reduce the threat of impersonation or man-in-the-middle attacks.

If your application deals with particularly sensitive information, you should consider whether you actually need to retain all of its data in the first place. In some cases, handling this data is governed by a compliance regime and the decision is made for you. Even so, additional controls may be prudent to ensure data security. You may want to encrypt some data at the application level in addition to automatic at-rest encryption. You might reduce other data to a hash if all you need to know is whether the same data is presented again in the future. If you use your data to train machine learning models, consider reading this article on managing sensitive data in machine learning.
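The "reduce it to a hash" approach can be sketched as follows. Note one deliberate substitution: this example uses a keyed hash (HMAC-SHA256) rather than a bare hash, because low-entropy values such as email addresses are easy to guess against an unkeyed digest. The function names and the normalization step are assumptions for illustration.

```python
import hashlib
import hmac


def fingerprint(value: str, key: bytes) -> str:
    """Return a keyed SHA-256 fingerprint of the value, hex-encoded."""
    # Normalize so trivially different spellings map to the same fingerprint.
    normalized = value.strip().lower().encode("utf-8")
    return hmac.new(key, normalized, hashlib.sha256).hexdigest()


def matches(value: str, key: bytes, stored: str) -> bool:
    """Check whether a newly presented value matches a stored fingerprint."""
    # compare_digest avoids leaking information through timing differences.
    return hmac.compare_digest(fingerprint(value, key), stored)
```

Only the fingerprint is stored in the database. The key would be kept elsewhere, for example in a key management service, so that a stolen database alone is not enough to test guesses.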

Application security can be used to enhance database security, but must not be used in place of database security. Safeguards such as sanitization must be in place for any input sent to the database, whether it’s data for storage or parameters for querying. All application code should be peer-reviewed. Security scans for common vulnerabilities, including SQL injection and XSS, should be automated and run frequently.
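One widely used safeguard for query parameters is the parameterized query, where user input is passed to the driver separately from the SQL text. The sketch below uses Python's built-in `sqlite3` module purely so that it is self-contained. The table, column names and helper functions are hypothetical, and the placeholder syntax varies between database drivers.

```python
import sqlite3
from typing import List, Tuple


def make_demo_db() -> sqlite3.Connection:
    """Create a throwaway in-memory database with two sample users."""
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE users (id INTEGER PRIMARY KEY, username TEXT)")
    conn.executemany(
        "INSERT INTO users (id, username) VALUES (?, ?)",
        [(1, "alice"), (2, "bob")],
    )
    return conn


def find_user(conn: sqlite3.Connection, username: str) -> List[Tuple[int, str]]:
    """Look up a user; the input is bound as data, never spliced into the SQL."""
    cursor = conn.execute(
        "SELECT id, username FROM users WHERE username = ?", (username,)
    )
    return cursor.fetchall()
```

Because the input is bound as a value, a classic injection string such as `alice' OR '1'='1` is treated as an unusual username and matches no rows.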

The computers of anyone with access rights to the database should be subject to an organizational security policy. Some of the most audacious breaks in security history were due to malware, lax updates or mishandled portable data storage devices. Once a workstation is infected, every other system it touches is suspect. This also applies to printers, copiers and any other connected device.

Do not allow unsanitized production data to be used in development or test environments under any circumstances. This one policy will not only increase database security, but will all but eliminate the possibility of a non-production environment inadvertently emailing customers, charging accounts, changing states, etc.
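As a rough illustration of sanitizing records before they leave production, the sketch below replaces personal fields with synthetic values. The field names (`id`, `name`, `email`, `card_number`) are hypothetical, and a real sanitizer would need to cover every sensitive column in your schema.

```python
from typing import Any, Dict


def mask_record(record: Dict[str, Any]) -> Dict[str, Any]:
    """Return a copy of the record with personal fields replaced."""
    masked = dict(record)  # copy, so the original record is left untouched
    # The .invalid top-level domain is reserved, so mail to it cannot be delivered.
    masked["email"] = f"user{record['id']}@example.invalid"
    masked["name"] = "Test User"
    masked["card_number"] = "0000000000000000"
    return masked
```

Using addresses under `.invalid` also guards against the accidental customer emails mentioned above, since messages to that domain cannot reach a real mailbox.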

Self-hosted database concerns

While Google's shared responsibility model allows managed database users to relieve themselves of some security concerns, we can't offer equally comprehensive controls for databases that you host yourself. When self-hosting, it's incumbent on the database administrator to ensure every attack vector is secured.

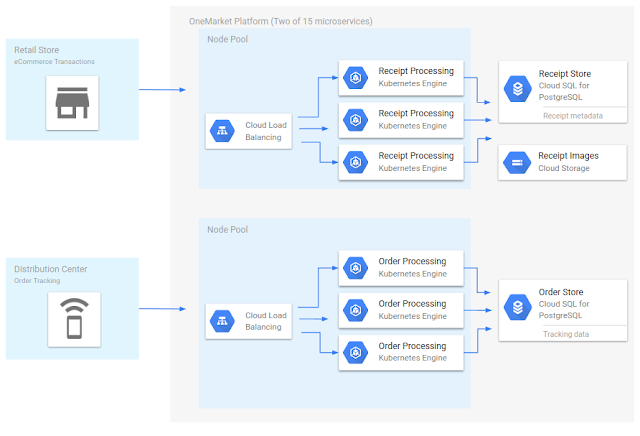

If you’re running your own database, make sure the service is running on its own host(s), with no other significant application functions allowed on it. The database should certainly not share a logical host with a web host or other web-accessible services. If this isn’t possible, the service and databases should reside in a restricted network shared and accessible by a minimum number of other hosts. A logical host is the lowest level computer or virtual computer in which an application itself is running. In GCP, this may be a container or virtual machine instance, while on-premises, logical hosts can be physical servers, VMs or containers.

A common use case for running your own database is to replicate across a hybrid cloud. For example, a hybrid cloud database deployment may have a master and one or more replicas in the cloud, with a replica on-premises. In that event, don’t connect the database to the corporate LAN or other broadly available networks. Similarly, on-premises hardware should be physically secure so it can't be stolen or tampered with. A proper physical security regime employs physical access controls, automated physical access logs and remote video monitoring.

Self-hosted databases also require your regular attention, to make sure they’ve been updated. Craft a software update policy and set up regular alerts for out-of-date packages. Consider using a system management (Ubuntu, Red Hat) or configuration management tool that lets you easily perform actions in bulk across all your instances, monitor and upgrade package versions and configure new instances. Be sure to monitor for out-of-cycle changes to key parts of the filesystem such as directories that contain configuration and executable files.
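Monitoring for out-of-cycle filesystem changes can be approximated by comparing file digests against a known-good baseline. The following is a minimal sketch of that idea with hypothetical function names. Dedicated integrity-monitoring tools do considerably more, such as tracking ownership and permissions.

```python
import hashlib
import os
from typing import Dict, List


def snapshot(directory: str) -> Dict[str, str]:
    """Map every file path under the directory to its SHA-256 digest."""
    digests = {}
    for root, _dirs, files in os.walk(directory):
        for name in files:
            path = os.path.join(root, name)
            with open(path, "rb") as handle:
                digests[path] = hashlib.sha256(handle.read()).hexdigest()
    return digests


def diff_snapshots(baseline: Dict[str, str], current: Dict[str, str]) -> Dict[str, List[str]]:
    """Report files added, removed or modified since the baseline."""
    shared = baseline.keys() & current.keys()
    return {
        "added": sorted(set(current) - set(baseline)),
        "removed": sorted(set(baseline) - set(current)),
        "modified": sorted(p for p in shared if baseline[p] != current[p]),
    }
```

The baseline should be stored away from the host being monitored. Otherwise an intruder who alters a file can simply regenerate the baseline too.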

Several compliance regimes recommend an intrusion detection system or IDS. The basic function of an IDS is to monitor and react to unauthorized system access. There are many products available that run either on each individual server or on a dedicated gateway host. Your choice in IDS may be influenced by several factors unique to your organization or application. An IDS may also be able to serve as a monitor for canary data, a security tactic described above.

All databases have specific controls that you must adjust to harden the service. You should always start with articles written by your database maker for software-specific advice on hardening the server. Hardening guides for several popular databases are linked in the further reading section below.

The underlying operating system should also be hardened as much as possible, and all applications that are not critical for database function should be disabled. You can achieve further isolation by sandboxing or containerizing the database. Use articles written by your OS maker for variant-specific advice on how to harden the platform. Guides for the most common operating systems available in Compute Engine are linked in the further reading section below.

Organizational security

Staff policies that enforce security are an important but often overlooked part of IT security. It's a very nuanced and deep topic, but here are a few general tips that will aid in securing your database:

All staff with access to sensitive data should be considered for a criminal background check. Insist on strict adherence to a policy of eliminating or locking user accounts immediately upon transfer or termination. Human account password policies should follow the 2017 NIST Digital Identity Guidelines. Also consider running social engineering penetration tests and training to reduce the chance of staff inadvertently enabling an attack.

Further reading

Security is a journey, not a destination. Even after you've tightened security on your database, application, and hosting environment, you must remain vigilant of emerging threats. In particular, self-hosted DBs come with additional responsibilities that you must tend to. For your convenience, here are some OS- and database-specific resources that you may find useful.