Tag Archives: George Pirocanac

I have often been asked, “What is the most memorable bug that you have encountered in your testing career?” For me, it is hands down a bug that happened quite a few years ago. I was leading an Engineering Productivity team that supported Google App Engine. At that time App Engine was still in its early stages, and there were many challenges associated with testing rapidly evolving features. Our testing frameworks and processes were also evolving, so it was an exciting time to be on the team.

What makes this bug so memorable is that I spent so much time developing a comprehensive suite of test scenarios, yet a failure occurred during such an obvious use case that it left me shaking my head and wondering how I had missed it. Even with many years of testing experience it can be very humbling to construct scenarios that adequately mirror what will happen in the field.

I’ll try to provide enough background for the reader to play along and see if they can determine the anomalous scenario. As a further hint, the problem resulted from the interaction of two App Engine features, so I like calling this story A Tale of Two Features.

Google App Engine was released 13 years ago as Google’s first Cloud product. It allowed users to build and deploy highly scalable web applications in the Cloud. To support this, it had its own scalable database called the Datastore. An administration console allowed users to manage the application and its Datastore through a web interface. Users wrote applications that consisted of request handlers that App Engine invoked according to the URL that was specified. The handlers could call App Engine services like Datastore through a remote procedure call (RPC) mechanism. Figure 1 illustrates this flow.

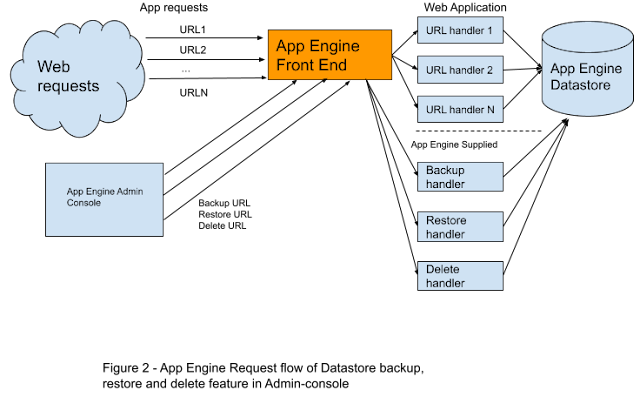

The first feature in this Tale of Two Features resided in the administration console, providing the ability to back up, restore, and delete selected or all of an application’s entities in the Datastore. It was implemented in a clever way that incorporated it directly into the application, rather than as an independent utility. As part of the application it could freely operate on the Datastore and incur the same billing charges as other datastore operations within the application. When the feature was invoked, traffic would be sent to its handler and the application would process it. Figure 2 illustrates this request flow.

By the time this memorable bug occurred, this Datastore administration feature was mature, well tested, and stable. No changes were being made to it.

The second feature (or more accurately, set of features) came at least a year after the first feature was released and stable. It helped users migrate their applications to a new High Replication (HR) Datastore. The HR Datastore was more reliable than its predecessor, but using it meant creating a new application and copying over all the data from the old Datastore. To support such migrations, App Engine developers added two new features to the administration console. The first copied all the data from the Datastore of one application to another, and the second redirected all traffic from one application to another. The latter was particularly useful because it meant the new application would seamlessly receive the traffic after a migration. This set of features was written by another team, and we in Engineering Productivity supported them by creating processes for testing various Datastore migrations. The migration-support features were thoroughly tested and released to developers. Figure 3 illustrates the request flow of the redirection feature.

So this was the situation when we released these utilities for migrating to the new Datastore. We were very confident that they worked, as we had tested migrations of many different types and sizes of Datastore entities. We had also tested that a migration could be interrupted without data loss. All checked out fine. I was confident that this new feature would work, yet soon after we released it, we started getting problem reports.

If you have been playing along, now is the time to ask yourself, “What could possibly go wrong?” As an added hint, the problem reports claimed that all the data in the newly migrated application was disappearing.

As mentioned above, developers began to report that data was disappearing from their newly migrated applications. It wasn’t at all common, yet of course it is most disconcerting when data “just disappears.” We had to investigate how this could occur. Our standard processes ensured that we had internal backups of the data, which were promptly restored. In parallel we tried to reproduce the problem, but we couldn’t—at least until we figured out what was happening. As I mentioned earlier, once we understood it, it was quite obvious, but that only made it all the more painful that we missed it.

What was happening was that, after migrating and automatically redirecting traffic to the new application, a number of customers thought they still needed to delete the data from their old application, so they used the first Datastore admin feature to do that. As expected, the feature sent traffic to that application to delete the entities from the Datastore. But that traffic was now being automatically redirected to the new application, and voila—all the data that had been copied earlier was now deleted there. Since only a handful of developers tried to delete the data from their old applications, this explained why the problem occurred only rarely. Figure 4 illustrates this request flow.

Obvious, isn’t it, once you know what is happening.
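For readers who prefer code to diagrams, the interaction can be reproduced with a toy model. This is only a sketch under stated assumptions, not App Engine code: `Platform`, `Datastore`, `migrate`, and `handle_admin_delete_all` are hypothetical names invented for illustration, and the real system was far more involved.

```python
# Toy model of the two features. All names are hypothetical.

class Datastore:
    """In-memory stand-in for one application's Datastore."""

    def __init__(self, entities=None):
        self.entities = dict(entities or {})


class Platform:
    """Routes requests to applications, honoring any traffic redirects."""

    def __init__(self):
        self.datastores = {}  # app_id -> Datastore
        self.redirects = {}   # old app_id -> new app_id

    def create_app(self, app_id, entities=None):
        self.datastores[app_id] = Datastore(entities)

    def route(self, app_id):
        # Second feature (redirection): traffic for a migrated app
        # is sent to its replacement.
        return self.redirects.get(app_id, app_id)

    def handle_admin_delete_all(self, app_id):
        # First feature: the admin tool runs as a handler inside the app,
        # so its request goes through the same routing as user traffic.
        target = self.route(app_id)
        self.datastores[target].entities.clear()
        return target

    def migrate(self, old_app, new_app):
        # Second feature: copy the data, then redirect the traffic.
        old_entities = self.datastores[old_app].entities
        self.datastores[new_app].entities.update(old_entities)
        self.redirects[old_app] = new_app


platform = Platform()
platform.create_app("old-app", {"user:1": "Ada", "user:2": "Grace"})
platform.create_app("new-app")
platform.migrate("old-app", "new-app")

# The workflow step our tests omitted: clean up the old app after migrating.
deleted_in = platform.handle_admin_delete_all("old-app")
```

In this model the delete request aimed at `old-app` lands on `new-app`: the migrated data is gone, while the old application's entities are untouched.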

This all occurred years ago, and App Engine is based on a far different and more robust framework today. Datastore migrations are but a memory from the past, yet this experience made a great impression on me.

The most important thing I learned from this experience is that, while it is important to test features for their functionality, it’s also important to think of them as part of workflows. In performing our testing we exercised a very limited number of steps in the migration process workflow and omitted a very reasonable step at the end: trying to delete the data from the old application. Our focus was in testing the variability of contents in the Datastore rather than different steps in the migration process. It was this focus that kept our eyes away from the relatively obvious failure case.

Another thing I learned was that this bug might have been caught if the developer of the first feature had been in the design review for the second set of migration features (particularly the feature that automatically redirects traffic). Unfortunately, that person had already joined a new team. A key step in reducing bugs can occur at the design stage if “what-if” questions are being asked.

Finally, I was enormously impressed that we were able to recover so quickly. Protecting against data loss is one of the most important aspects of Cloud management, and being able to recover from mistakes is at least as important as trying to prevent them. I have the utmost respect for my coworkers in Site Reliability Engineering (SRE).

How Much Testing is Enough?

By George Pirocanac

A familiar question every software developer and team grapples with is, “How much testing is enough to qualify a software release?” A lot depends on the type of software, its purpose, and its target audience. One would expect a far more rigorous approach to testing a commercial search engine than a simple smartphone flashlight application. Yet no matter what the application, the question of how much testing is sufficient can be hard to answer in definitive terms. A better approach is to provide considerations or rules of thumb that can be used to define a qualification process and testing strategy best suited for the case at hand. The following tips provide a helpful rubric:

- Document your process or strategy.

- Have a solid base of unit tests.

- Don’t skimp on integration testing.

- Perform end-to-end testing for Critical User Journeys.

- Understand and implement the other tiers of testing.

- Understand your coverage of code and functionality.

- Use feedback from the field to improve your process.

Document your process or strategy

If you are already testing your product, document the entire process. This is essential both for repeating the tests for a later release and for analyzing the process for further improvement. If this is your first release, it’s a good idea to have a written test plan or strategy. In fact, a written test plan or strategy should accompany any product design.

Have a solid base of unit tests

A great place to start is writing unit tests that accompany the code. Unit tests test the code as it is written at the functional unit level. Dependencies on external services are either mocked or faked.

A mock has the same interface as the production dependency, but only checks that the object is used according to set expectations and/or returns test-controlled values, rather than having a full implementation of its normal functionality.

A fake, on the other hand, is a shallow implementation of the dependency but should ideally have no dependencies of its own. Fakes provide a wider range of functionality than mocks and should be maintained by the team providing the production version of the dependency. That way, as the dependency evolves, so does the fake, and the unit-test writer can be confident that the fake mirrors the functionality of the production dependency.
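The difference between the two kinds of test double is easier to see side by side. The following is a minimal sketch using Python's standard `unittest.mock`; the `greeting` function and the `FakeUserStore` class are hypothetical examples, not code from any real product.

```python
from unittest import mock


class FakeUserStore:
    """Fake: a shallow but working in-memory implementation."""

    def __init__(self):
        self._users = {}

    def put(self, user_id, name):
        self._users[user_id] = name

    def get(self, user_id):
        return self._users.get(user_id)


def greeting(store, user_id):
    """Code under test: depends on a store that offers get(user_id)."""
    name = store.get(user_id)
    return f"Hello, {name}!" if name else "Hello, guest!"


def test_greeting_with_mock():
    # Mock: same interface, no real behavior.
    store = mock.Mock(spec=["get", "put"])
    store.get.return_value = "Ada"         # test-controlled return value
    assert greeting(store, 42) == "Hello, Ada!"
    store.get.assert_called_once_with(42)  # expectation about usage


def test_greeting_with_fake():
    # Fake: behaves like a small version of the real store.
    store = FakeUserStore()
    store.put(42, "Ada")
    assert greeting(store, 42) == "Hello, Ada!"
    assert greeting(store, 7) == "Hello, guest!"
```

The mock-based test verifies how the dependency is called; the fake-based test exercises real (if simplified) behavior, which is why a fake is best kept in step with the production dependency.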

At many companies, including Google, it is a best practice to require that any code change have corresponding unit test cases that pass. As the code base expands, having a body of such tests that is executed before code is submitted is an important part of catching bugs before they creep into the code base. This saves time later in writing integration tests, debugging, and verifying fixes to existing code.

Don’t skimp on integration testing

As the codebase grows and reaches a point where a number of functional units are available to test as a group, it’s time to have a solid base of integration tests. An integration test takes a small group of units, often only two, and tests their behavior as a whole, verifying that they work together coherently.
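As a rough illustration, an integration test of two units might look like the sketch below. The units `parse_order` and `price_order` are hypothetical and chosen only to keep the example small.

```python
def parse_order(line):
    """Unit 1: turn 'sku,quantity' text into a structured order."""
    sku, quantity = line.strip().split(",")
    return {"sku": sku, "quantity": int(quantity)}


def price_order(order, price_list):
    """Unit 2: compute the total price (in cents) for a structured order."""
    return order["quantity"] * price_list[order["sku"]]


def test_parse_and_price_work_together():
    # Integration test: the output of unit 1 is fed directly into unit 2,
    # so a mismatch in field names or types between them surfaces here.
    prices = {"widget": 250}
    assert price_order(parse_order("widget,3\n"), prices) == 750
```

Each unit could pass its own unit tests and still disagree with the other about, say, whether the quantity is a string or an integer; only a test that joins them would notice.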

Often developers think that integration tests can be deprioritized or even skipped in favor of full end-to-end tests. After all, the latter really tests the product as the user would exercise it. Yet, having a comprehensive set of integration tests is just as important as having a solid unit-test base (see the earlier Google Blog article, Fixing a test hourglass).

The reason is that integration tests have fewer dependencies than full end-to-end tests. As a result, integration tests, with smaller environments to bring up, will be faster and more reliable than the full end-to-end tests with their full set of dependencies (see the earlier Google Blog article, Test Flakiness - One of the Main Challenges of Automated Testing).

Perform end-to-end testing for Critical User Journeys

The discussion thus far covers testing the product at its component level, first as individual components (unit-testing), then as groups of components and dependencies (integration testing). Now it’s time to test the product end to end as a user would use it. This is quite important because it’s not just independent features that should be tested but entire workflows incorporating a variety of features. At Google these workflows - the combination of a critical goal and the journey of tasks a user undertakes to achieve that goal - are called Critical User Journeys (CUJs). Understanding CUJs, documenting them, and then verifying them using end-to-end testing (hopefully in an automated fashion) completes the Testing Pyramid.

Understand and implement the other tiers of testing

Unit, integration, and end-to-end testing address the functional level of your product. It is important to understand the other tiers of testing, including:

- Performance testing - Measuring the latency or throughput of your application or service.

- Load and scalability testing - Testing your application or service under higher and higher load.

- Fault-tolerance testing - Testing your application’s behavior as different dependencies either fail or go down entirely.

- Security testing - Testing for known vulnerabilities in your service or application.

- Accessibility testing - Making sure the product is accessible and usable for everyone, including people with a wide range of disabilities.

- Localization testing - Making sure the product can be used in a particular language or region.

- Globalization testing - Making sure the product can be used by people all over the world.

- Privacy testing - Assessing and mitigating privacy risks in the product.

- Usability testing - Testing for user friendliness.

Again, it is important to have these testing processes occur as early as possible in your review cycle. Smaller performance tests can detect regressions earlier and save debugging time during the end-to-end tests.
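The same "small and early" idea applies across these tiers. As one illustration, a fault-tolerance check can be written at unit-test size by injecting a dependency that fails on purpose. This is only a sketch: `FlakyBackend` and `fetch_with_retry` are hypothetical names, and a real service would likely add backoff and timeouts.

```python
class FlakyBackend:
    """Fake dependency that fails a set number of times, then succeeds."""

    def __init__(self, failures):
        self.failures_left = failures
        self.calls = 0

    def fetch(self):
        self.calls += 1
        if self.failures_left > 0:
            self.failures_left -= 1
            raise ConnectionError("backend unavailable")
        return "payload"


def fetch_with_retry(backend, max_attempts=3):
    """Code under test: retry a failing dependency a bounded number of times."""
    for attempt in range(1, max_attempts + 1):
        try:
            return backend.fetch()
        except ConnectionError:
            if attempt == max_attempts:
                raise  # out of attempts: let the caller see the failure


def test_recovers_from_transient_failures():
    backend = FlakyBackend(failures=2)
    assert fetch_with_retry(backend) == "payload"
    assert backend.calls == 3


def test_gives_up_after_max_attempts():
    backend = FlakyBackend(failures=5)
    try:
        fetch_with_retry(backend)
    except ConnectionError:
        pass
    else:
        raise AssertionError("expected ConnectionError")
    assert backend.calls == 3
```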

Understand your coverage of code and functionality

So far, the question of how much testing is enough, from a qualitative perspective, has been examined. Different types of tests were reviewed and the argument made that smaller and earlier is better than larger or later. Now the problem will be examined from a quantitative perspective, taking code coverage techniques into account.

Wikipedia has a great article on code coverage that outlines and discusses different types of coverage, including statement, edge, branch, and condition coverage. There are several open source tools available for measuring coverage for most of the popular programming languages such as Java, C++, Go and Python. A partial list is included in the table below:

| Language | Tool |
|---|---|
| Java | JaCoCo |
| Java | JCov |
| Java | OpenClover |
| Python | Coverage.py |
| C++ | Bullseye |
| Go | Built-in coverage support (`go test -cover`) |

Table 1 - Open source coverage tools for different languages

Most of these tools provide a summary in percentage terms. For example, 80% code coverage means that the tests exercise about 80% of the code and leave about 20% unexercised. At the same time, it is important to understand that code can still have bugs even when it is covered.
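A small, hypothetical example makes both points concrete. The `average` function below reaches 100% line coverage with a single test, yet it still fails on an input the test never tries; `coverage_percent` simply spells out the arithmetic behind the summary figure.

```python
def average(values):
    """Return the arithmetic mean of a list of numbers."""
    return sum(values) / len(values)


def test_average():
    # This one test executes every line of average(): 100% line coverage.
    assert average([2, 4, 6]) == 4


def coverage_percent(covered_lines, total_lines):
    """Percentage of executable lines that at least one test executed."""
    return 100.0 * covered_lines / total_lines
```

Despite the full coverage, `average([])` raises `ZeroDivisionError`. Coverage reports which code ran, not whether it was checked against every input that matters.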

Another concept in coverage is called changelist coverage. Changelist coverage measures the coverage in changed or added lines. It is useful for teams that have accumulated technical debt and have low coverage in their entire codebase. These teams can institute a policy where an increase in their incremental coverage will lead to overall improvement.
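As a sketch of the calculation, changelist coverage can be computed from two sets of lines: those touched by the change and those executed by tests. The function name and the `(file_path, line_number)` representation are assumptions made for this example; real coverage tools have their own report formats.

```python
def changelist_coverage(changed_lines, covered_lines):
    """Percent of changed or added lines that tests executed.

    Both arguments are sets of (file_path, line_number) pairs.
    """
    if not changed_lines:
        return 100.0  # nothing changed, so nothing is left uncovered
    hit = changed_lines & covered_lines
    return 100.0 * len(hit) / len(changed_lines)


# A change touching lines 10-19 of cart.py, where tests execute lines 1-17.
changed = {("cart.py", n) for n in range(10, 20)}
covered = {("cart.py", n) for n in range(1, 18)}
result = changelist_coverage(changed, covered)
```

Here eight of the ten changed lines are executed by tests, so the change has 80% changelist coverage regardless of how well the rest of `cart.py` is covered.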

So far the coverage discussion has centered around coverage of the code by tests (functions, lines, etc.). Another type of coverage is feature coverage or behavior coverage. For feature coverage, the emphasis is on identifying the committed features in a particular release and creating tests for their implementation. For behavior coverage, the emphasis is on identifying the CUJs and creating the appropriate tests to track them. Again, understanding your “uncovered” features and behaviors can be a useful metric in your understanding of the risks.

Use feedback from the field to improve your process

A very important part of understanding and improving your qualification process is the feedback received from the field once the software has been released. Having a process that tracks outages, bugs, and other issues, and turns them into action items to improve qualification, is critical for minimizing the risk of regressions in subsequent releases. Moreover, the action items should (1) emphasize filling the testing gap as early as possible in the qualification process and (2) address strategic issues, such as the lack of a particular type of testing (for example, load or fault-tolerance testing). And again, this is why it is important to document your qualification process so that you can reevaluate it in light of the data you obtain from the field.

Summary

Creating a comprehensive qualification process and testing strategy to answer the question “How much testing is enough?” can be a complex task. Hopefully the tips given here can help you with this. In summary:

- Document your process or strategy.

- Have a solid base of unit tests.

- Don’t skimp on integration testing.

- Perform end-to-end testing for Critical User Journeys.

- Understand and implement the other tiers of testing.

- Understand your coverage of code and functionality.

- Use feedback from the field to improve your process.

References

- Fixing a test hourglass

- Testing Pyramid

- The Inquiry Method for Test Planning

- Test Flakiness - One of the Main Challenges of Automated Testing

- Testing on the Toilet: Know Your Test Doubles

- Testing on the Toilet: Fake Your Way to Better Tests

Source: Google Testing Blog

Test Flakiness – One of the main challenges of automated testing (Part II)

By George Pirocanac

This is part two of a series on test flakiness. The first article discussed the four components under which tests are run and the possible reasons for test flakiness. This article will discuss the triage tips and remedies for flakiness for each of these possible reasons.

Components

To review, the four components where flakiness can occur include:

- The tests themselves

- The test-running framework

- The application or system under test (SUT) and the services and libraries that the SUT and testing framework depend upon

- The OS, hardware, and network that the SUT and testing framework depend upon

This was captured and summarized in the following diagram.

The reasons, triage tips, and remedies for flakiness are discussed below, by component.

The tests themselves

The tests themselves can introduce flakiness. This can include test data, test workflows, initial setup of test prerequisites, and initial state of other dependencies.

Table 1 - Reasons, triage tips, and remedies for flakiness in the tests themselves

The test-running framework

An unreliable test-running framework can introduce flakiness.

Table 2 - Reasons, triage tips, and remedies for flakiness in the test-running framework

The application or SUT and the services and libraries that the SUT and testing framework depend upon

Of course, the application itself (or the SUT) could be the source of flakiness.

An application can also have numerous dependencies on other services, and each of those services can have its own dependencies. In this chain, each of the services can introduce flakiness.

Table 3 - Reasons, triage tips, and remedies for flakiness in the application or SUT

The OS and hardware that the SUT and testing framework depend upon

Finally, the underlying hardware and operating system can be sources of test flakiness.

Table 4 - Reasons, triage tips, and remedies for flakiness in the OS and hardware of the SUT

Conclusion

As can be seen from the wide variety of failures, having low flakiness in automated testing can be quite a challenge. This article has outlined both the components under which tests are run and the types of flakiness that can occur, and thus can serve as a cheat sheet when triaging and fixing flaky tests.

Source: Google Testing Blog

Test Flakiness – One of the main challenges of automated testing

By George Pirocanac

Dealing with test flakiness is a critical skill in testing because automated tests that do not provide a consistent signal will slow down the entire development process. If you haven’t encountered flaky tests, this article is a must-read as it first tries to systematically outline the causes for flaky tests. If you have encountered flaky tests, see how many fall into the areas listed.

A follow-up article will talk about dealing with each of the causes.

Over the years I’ve seen a lot of reasons for flaky tests, but rather than review them one by one, let’s group the sources of flakiness by the components under which tests are run:

- The tests themselves

- The test-running framework

- The application or system under test (SUT) and the services and libraries that the SUT and testing framework depend upon

- The OS and hardware that the SUT and testing framework depend upon

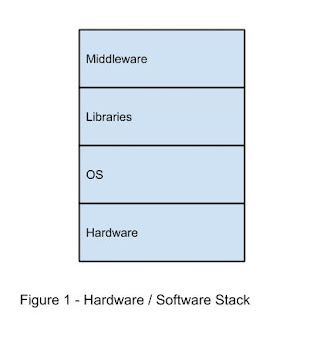

This is illustrated below. Figure 1 first shows the hardware/software stack that supports an application or system under test. At the lowest level is the hardware. The next level up is the operating system, followed by the libraries that provide an interface to the system. At the highest level is the middleware, the layer that provides application-specific interfaces.

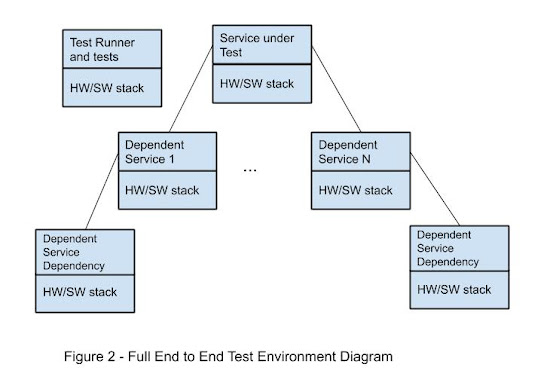

In a distributed system, however, each of the services of the application and the services it depends upon can reside on a different hardware/software stack, as can the test-running service. This is illustrated in Figure 2 as the full test-running environment.

As discussed above, each of these components is a potential area for flakiness.

The tests themselves

The tests themselves can introduce flakiness. Typical causes include:

- Improper initialization or cleanup.

- Invalid assumptions about the state of test data.

- Invalid assumptions about the state of the system. An example can be the system time.

- Dependencies on the timing of the application.

- Dependencies on the order in which the tests are run. (Similar to the first case above.)
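The system-time assumption in the list above is a common one, and a short sketch shows both the problem and one possible remedy. The `is_business_hours` function is a hypothetical example; making the time injectable is one of several ways to remove the dependency.

```python
import datetime


def is_business_hours(now=None):
    """Return True between 09:00 and 17:00; `now` is injectable for tests."""
    now = now or datetime.datetime.now()
    return 9 <= now.hour < 17


def flaky_test():
    # Flaky: passes or fails depending on when the test happens to run.
    assert is_business_hours()


def stable_test():
    # Stable: the test controls the time instead of assuming it.
    assert is_business_hours(datetime.datetime(2024, 1, 15, 10, 30))
    assert not is_business_hours(datetime.datetime(2024, 1, 15, 22, 0))
```

`flaky_test` gives a different signal at 10:30 than at 22:00 with no change to the code, which is exactly the inconsistency that makes a test flaky.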

The test-running framework

An unreliable test-running framework can introduce flakiness. Typical causes include:

- Failure to allocate enough resources for the system under test, causing it to fail to start.

- Improper scheduling of the tests so they “collide” and cause each other to fail.

- Insufficient system resources to satisfy the test requirements.

The application or system under test and the services and libraries that the SUT and testing framework depend upon

Of course, the application itself (or the system under test) could be the source of flakiness. An application can also have numerous dependencies on other services, and each of those services can have its own dependencies. In this chain, each of the services can introduce flakiness. Typical causes include:

- Race conditions.

- Uninitialized variables.

- Being slow to respond or being unresponsive to the stimuli from the tests.

- Memory leaks.

- Oversubscription of resources.

- Changes to the application (or dependent services) happening at a different pace than those to the corresponding tests.
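Race conditions, the first cause listed above, are a good example of a bug in the application that shows up as a flaky test. The sketch below uses a hypothetical `Counter` class: the lock makes the read-modify-write in `increment` atomic, so the final count is the same on every run. Without the lock, concurrent increments can interleave and lose updates, and a test asserting the total could fail intermittently.

```python
import threading


class Counter:
    """Thread-safe counter; the lock guards the read-modify-write."""

    def __init__(self):
        self.value = 0
        self._lock = threading.Lock()

    def increment(self):
        with self._lock:
            self.value += 1


def run_workers(counter, workers=8, increments=1000):
    """Increment the counter from several threads and return the total."""

    def work():
        for _ in range(increments):
            counter.increment()

    threads = [threading.Thread(target=work) for _ in range(workers)]
    for thread in threads:
        thread.start()
    for thread in threads:
        thread.join()
    return counter.value
```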

Testing environments are called hermetic when they contain everything that is needed to run the tests (i.e. no external dependencies like servers running in production). Hermetic environments, in general, are less likely to be flaky.

The OS and hardware that the SUT and testing framework depend upon

Finally, the underlying hardware and operating system can be the source of test flakiness. Typical causes include:

- Networking failures or instability.

- Disk errors.

- Resources being consumed by other tasks/services not related to the tests being run.

As can be seen from the wide variety of failures, having low flakiness in automated testing can be quite a challenge. This article has outlined both the areas and the types of flakiness that can occur in those areas, so it can serve as a cheat sheet when triaging flaky tests.

In the follow-up to this article we’ll look at ways of addressing these issues.