Earlier this week, Gateway API v1.0 was released, marking the significant milestone of General Availability. This Kubernetes API represents the future of load balancing, routing, and service mesh configuration. It already has more than 20 implementations, including GKE and Istio. In this post, we’ll look back at some of the key moments that led to this point, beginning with the initial proposal.

Initial Proposal

The core ideas for this new API were initially proposed by Bowei Du (Software Engineer, Google) at KubeCon San Diego as “Ingress v2”, the next generation of the Ingress API for Kubernetes. This proposal came as the shortcomings of the original Ingress API were becoming apparent. The community had started to develop alternative APIs, notably including Istio’s VirtualService API and Contour’s IngressRoute API. We had reached an inflection point where the Kubernetes ecosystem was diverging, and Bowei believed it was important to develop a new standard that would expose all these advanced features in a portable way.

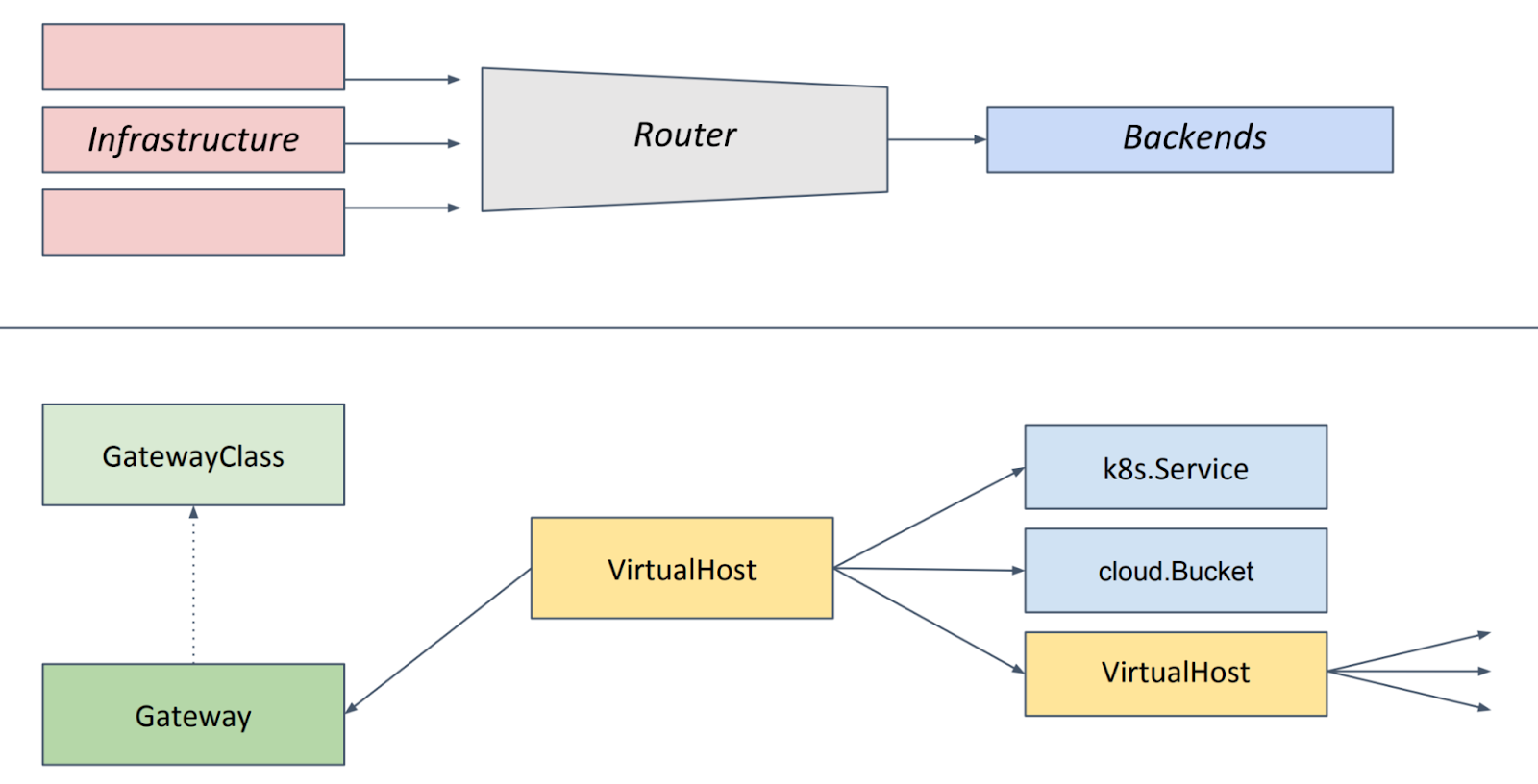

The initial proposal for this API provided a great foundation to build on. Specifically, it focused on a role-oriented model that split capabilities into resources aligned with three different personas, and it emphasized both expressiveness and extensibility as core design principles. This early sketch from that proposal closely resembles the API today:

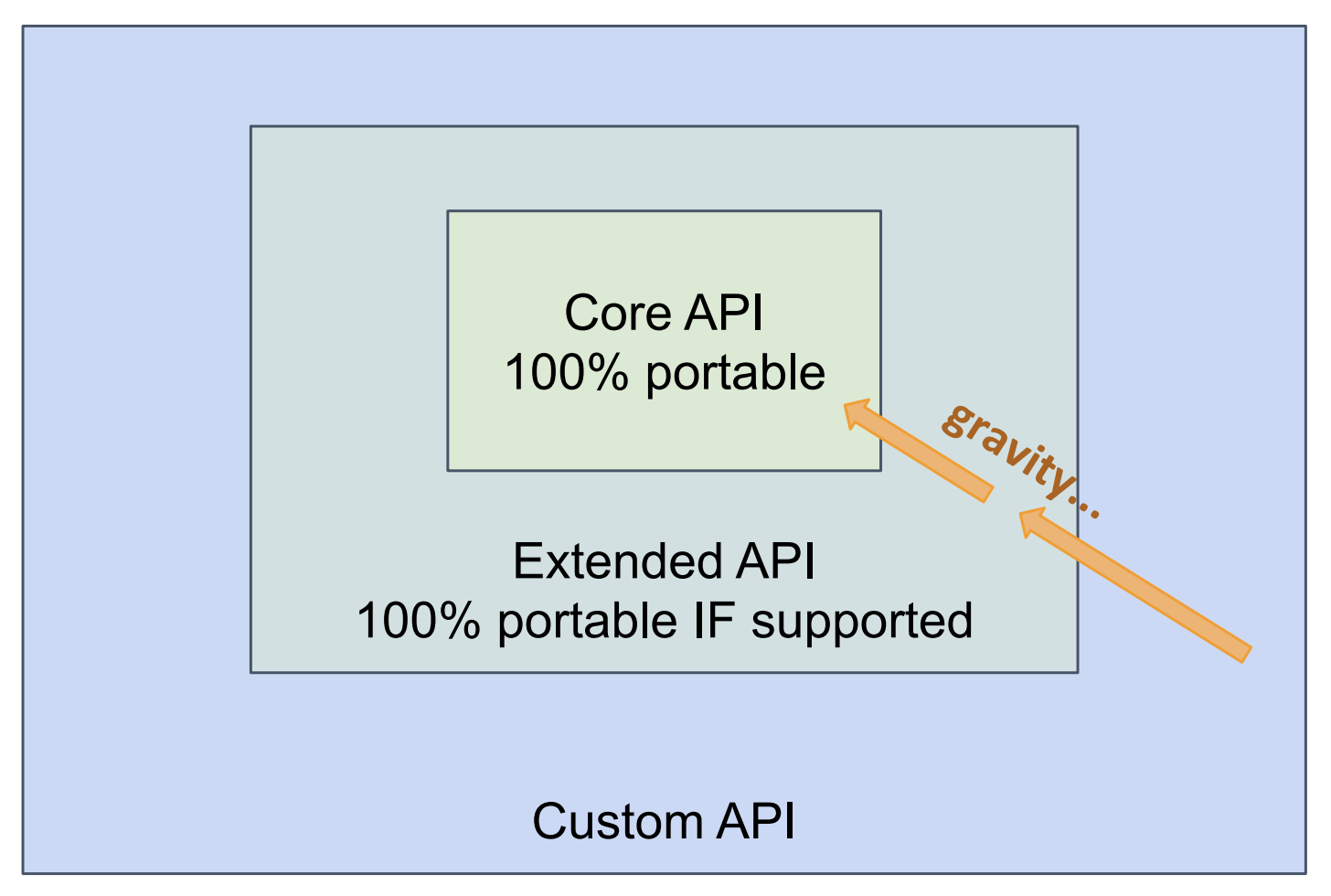

One of the key limitations of the Ingress API was that it was designed with the lowest common denominator in mind: every feature included in the API needed to be implemented by everyone. This meant that the API surface was very small, and implementations that wanted to support more advanced features either relied on long lists of implementation-specific annotations or developed new custom APIs.

Bowei proposed that this new API could introduce a concept of “support levels.” This would allow us to add features to the API even if not every implementation could support them. For example, “Extended” features are optional, but they behave the same way on every implementation that does support them.
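As a rough illustration of how support levels relate to portability, here is a minimal Python sketch. Only the “Extended” level is named in this post; the `CORE` and `IMPLEMENTATION_SPECIFIC` members follow the level names used by the Gateway API project, and the `is_portable` helper is hypothetical, written purely for illustration.

```python
from enum import Enum


class SupportLevel(Enum):
    # Level names follow the Gateway API project; only "Extended" appears in this post.
    CORE = "Core"  # expected from every implementation
    EXTENDED = "Extended"  # optional, but portable wherever it is supported
    IMPLEMENTATION_SPECIFIC = "Implementation-specific"  # behavior may vary


def is_portable(level: SupportLevel, supported_by_implementation: bool) -> bool:
    """Hypothetical helper: will this feature behave consistently here?"""
    if level is SupportLevel.CORE:
        return True
    if level is SupportLevel.EXTENDED:
        # Extended features are portable only where they are implemented.
        return supported_by_implementation
    # Implementation-specific behavior carries no portability guarantee.
    return False
```

In this sketch, `is_portable(SupportLevel.EXTENDED, True)` is true, while the same feature on an implementation that lacks it is simply unavailable rather than subtly different.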

Evolution of the API

Since that initial proposal, the API has evolved significantly, benefiting from the expertise of many in the community. Gateway has been referred to as the “most collaborative API in Kubernetes history” due to the hundreds of contributors representing dozens of companies that have helped refine the API over the years.

One of the things that makes this API unique is that it is built on top of Custom Resource Definitions (CRDs). This has meant that Gateway API is developed and released outside of the main Kubernetes project, enabling broader collaboration and shorter feedback loops. For example, each new release of this API supports the 5 most recent versions of Kubernetes, covering the vast majority of clusters in use today. So, instead of waiting until they can upgrade to the latest version of Kubernetes, most users will be able to try out these APIs close to the time they’re released.
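To make the support window concrete, the following Python sketch lists the Kubernetes minor versions covered by a release, given the newest minor version at release time. The function name and the example input of 28 are assumptions for illustration, not part of the Gateway API project.

```python
def supported_minor_versions(latest_minor: int, window: int = 5) -> list[str]:
    """Return the `window` most recent Kubernetes 1.x versions, newest first.

    `latest_minor` is the newest Kubernetes minor version at release time
    (an input chosen by the caller, not looked up anywhere).
    """
    oldest = max(latest_minor - window + 1, 0)
    return [f"1.{minor}" for minor in range(latest_minor, oldest - 1, -1)]


# Example input only: if 1.28 were the newest version, the window would be
# 1.28, 1.27, 1.26, 1.25, and 1.24.
print(supported_minor_versions(28))
```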

As the first official Kubernetes API to take this approach, it has developed several unique concepts along the way:

GEPs

Similar to Kubernetes Enhancement Proposals (KEPs), Gateway Enhancement Proposals provide a streamlined approach for proposing significant new enhancements to Gateway API. As the API grew and attracted more contributors, it became critical to have a better way to document key design decisions. The concept of GEPs was initially proposed by Bowei in 2021.

More than 30 of these have already merged, with many more in progress. This pattern has been invaluable for keeping track of key design decisions: all key parts of the API now have GEPs documenting when and why they were proposed, along with the alternatives considered.

Release Channels

In 2021, we proposed a simplified approach to versioning built around release channels. These are our own version of Kubernetes’ “feature gates”, and they denote the stability of individual fields and features.

All new resources, fields, and features start in the “Experimental” release channel. As the name implies, this channel provides no stability guarantees and can include breaking changes to enable us to iterate more quickly on APIs.

As these experimental APIs stabilize, individual resources, fields, and features can graduate to the “Standard” release channel when they meet predefined graduation criteria. These two release channels enable us to both provide a stable and predictable API with the “Standard” release channel while still iterating on experimental concepts with the “Experimental” release channel.

Conformance Tests

We added the first conformance tests in 2022, before this API reached beta. Since then, these tests have become a key part of every new feature in Gateway API, ensuring that implementations truly provide a portable experience. Before a feature can graduate to the “Standard” release channel, thorough conformance tests need to be developed, and multiple implementations need to pass them.
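The relationship between release channels and conformance can be sketched as a simple gate. This Python example is illustrative only: the `Feature` class, the `can_graduate` and `graduate` helpers, the implementation names, and the threshold of two passing implementations (one reading of “multiple”) are all assumptions, and the project’s actual graduation criteria cover more than conformance.

```python
from dataclasses import dataclass, field

# Assumption: "multiple implementations" is read here as at least two.
MIN_PASSING_IMPLEMENTATIONS = 2


@dataclass
class Feature:
    """Hypothetical record of a Gateway API feature's status."""
    name: str
    channel: str = "Experimental"  # all new features start here
    has_conformance_tests: bool = False
    passing_implementations: set[str] = field(default_factory=set)


def can_graduate(feature: Feature) -> bool:
    """Check only the conformance-related criteria described above."""
    return (
        feature.channel == "Experimental"
        and feature.has_conformance_tests
        and len(feature.passing_implementations) >= MIN_PASSING_IMPLEMENTATIONS
    )


def graduate(feature: Feature) -> None:
    """Move a feature to the Standard channel, or raise if it is not ready."""
    if not can_graduate(feature):
        raise ValueError(f"{feature.name} does not meet the conformance criteria")
    feature.channel = "Standard"


# Placeholder feature and implementation names, for illustration only.
example = Feature("example-feature", has_conformance_tests=True)
example.passing_implementations.update({"impl-a", "impl-b"})
graduate(example)
print(example.channel)
```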

Service Mesh Support

Earlier this year, mesh support launched in the “Experimental” release channel, marking the first time a Kubernetes API has officially supported the concept of Service Mesh. This work grew out of the GAMMA initiative (Gateway API for Mesh Management and Administration), which key Service Mesh projects came together to form in 2022. The core idea was that Gateway API is sufficiently modular that its Routing and Policy layers can be used for both mesh and ingress use cases.

Trying it Out

Gateway API enables great new features on GKE, such as advanced multi-cluster routing. Yesterday GKE announced GA support for multi-cluster Gateways. In the coming weeks, GKE will also be rolling out the v1.0 CRDs for all customers that have enabled Gateway API in their clusters. In the meantime, you can access all of the same features with the v1beta1 CRDs already supported by GKE. For more information on how to get started with the Gateway API on GKE, refer to the GKE Gateway documentation.

If you’re interested in Gateway API’s support for Service Mesh, you can try it out with Anthos Service Mesh.

Alternatively, if you’d like to use this API with another implementation, refer to the open source project’s Getting Started documentation.

By Rob Scott – GKE Networking