Posted by Isai Damier, Android Developer Platform Engineering (@isaidamier)

Kevin Chyn, Android Framework

Curtis Belmonte, Android Framework

With the launch of Android 10 (API level 29), developers can now use the Biometric API, part of the AndroidX Biometric Library, for all their on-device user authentication needs. The Android Framework and Security team has added a number of significant features to the AndroidX Biometric Library, which makes all of the biometric behavior from Android 10 available to all devices that run Android 6.0 (API level 23) or higher. In addition to supporting multiple biometric authentication form factors, the API has made it much easier for developers to check whether a given device has biometric sensors. And if there are no biometric sensors present, the API allows developers to specify whether they want to use device credentials in their apps.

The features do not just benefit developers. Device manufacturers and OEMs have a lot to celebrate as well. The framework is now providing a friendly, standardized API for OEMs to integrate support for all types of biometric sensors on their devices. In addition, the framework has built-in support for facial authentication in Android 10 so that vendors don’t need to create a custom implementation.

A bit of background

The FingerprintManager class was introduced in Android 6.0 (API level 23). At the time -- and up until Android 9 (API level 28) -- the API provided support only for fingerprint sensors, and with no UI. Developers needed to build their own fingerprint UI.

Based on developer feedback, Android 9 introduced a standardized fingerprint UI policy. In addition, BiometricPrompt was introduced to encompass more sensors than just fingerprint. In addition to providing a safe, familiar UI for user authentication, it enabled a small, maintainable API surface for developers to access the variety of biometric hardware available on OEM devices. OEMs can now customize the UI with necessary affordances and iconography to expose new biometrics, such as outlines for in-display sensors. With this, app developers don’t need to worry about implementing customized, device-specific implementations for biometric authentication.

Then, in Android 10, the team introduced some pivotal features to turn the biometric API into a one-stop-shop for in-app user authentication. BiometricManager enables developers to check whether a device supports biometric authentication. Furthermore, the setDeviceCredentialAllowed() method was added to allow developers the option to use a device’s PIN/pattern/password instead of biometric credentials, if it makes sense for their app.

The team has now packaged every biometric feature you get in Android 10 into the androidx.biometric Gradle dependency so that a single, consistent, interface is available all the way back to Android 6.0 (API level 23).

How it works

The androidx.biometric Gradle dependency is a support library for the Android framework Biometric classes. On API 29 and above, the library uses the classes under android.hardware.biometrics, FingerprintManager back to API 23, and Confirm Credential all the way back to API 21. Because of the variety of APIs, we strongly recommend using the androidx support library regardless of which API level your app targets.

To use the Biometric API in your app, do the following.

1. Add the Gradle dependency to your app module

$biometric_version is the latest release of the library

def biometric_version= '1.0.0-rc02'

implementation "androidx.biometric:biometric:$biometric_version"

2. Check whether the device supports biometric authentication

The BiometricPrompt needs to be recreated every time the Activity/Fragment is created; this should be done inside onCreate() or onCreateView() so that BiometricPrompt.AuthenticationCallback can start receiving callbacks properly.

To check whether the device supports biometric authentication, add the following logic:

val biometricManager = BiometricManager.from(context)

if (biometricManager.canAuthenticate() == BiometricManager.BIOMETRIC_SUCCESS){

// TODO: show in-app settings, make authentication calls.

}

3. Create an instance of BiometricPrompt

The BiometricPrompt constructor requires both an Executor and an AuthenticationCallback object. The Executor allows you to specify a thread on which your callbacks should be run.

The AuthenticationCallback has three methods:

onAuthenticationSucceeded() is called when the user has been authenticated using a credential that the device recognizes.

onAuthenticationError() is called when an unrecoverable error occurs.

onAuthenticationFailed() is called when the user is rejected, for example when a non-enrolled fingerprint is placed on the sensor, but unlike with onAuthenticationError(), the user can continue trying to authenticate.

The following snippet shows one way of implementing the Executor and how to instantiate the BiometricPrompt:

private fun instanceOfBiometricPrompt(): BiometricPrompt {

val executor = ContextCompat.getmainExecutor(context)

val callback = object: BiometricPrompt.AuthenticationCallback() {

override fun onAuthenticationError(errorCode: Int, errString: CharSequence) {

super.onAuthenticationError(errorCode, errString)

showMessage("$errorCode :: $errString")

}

override fun onAuthenticationFailed() {

super.onAuthenticationFailed()

showMessage("Authentication failed for an unknown reason")

}

override fun onAuthenticationSucceeded(result: BiometricPrompt.AuthenticationResult) {

super.onAuthenticationSucceeded(result)

showMessage("Authentication was successful")

}

}

val biometricPrompt = BiometricPrompt(context, executor, callback)

return biometricPrompt

}

Instantiating the BiometricPrompt should be done early in the lifecycle of your fragment or activity (e.g., in onCreate or onCreateView). This ensures that the current instance will always properly receive authentication callbacks.

4. Build a PromptInfo object

Once you have a BiometricPrompt object, you ask the user to authenticate by calling biometricPrompt.authenticate(promptInfo). If your app requires the user to authenticate using a Strong biometric or needs to perform cryptographic operations in KeyStore, you should use authenticate(PromptInfo, CryptoObject) instead.

This call will show the user the appropriate UI, based on the type of biometric credential being used for authentication – such as fingerprint, face, or iris. As a developer you don’t need to know which type of credential is being used for authentication; the API handles all of that for you.

This call requires a BiometricPrompt.PromptInfo object. A PromptInfo is where you define the text that appears in the prompt: such as title, subtitle, description. Without a PromptInfo, it is not clear to the end user which app is asking for their biometric credentials. PromptInfo also allows you to specify whether it’s OK for devices that do not support biometric authentication to grant access through the device credentials, such as password, PIN, or pattern that are used to unlock the device.

Here is an example of a PromptInfo declaration:

private fun getPromptInfo(): BiometricPrompt.PromptInfo {

val promptInfo = BiometricPrompt.PromptInfo.Builder()

.setTitle("My App's Authentication")

.setSubtitle("Please login to get access")

.setDescription("My App is using Android biometric authentication")

.setDeviceCredentialAllowed(true)

.build()

return promptInfo

}

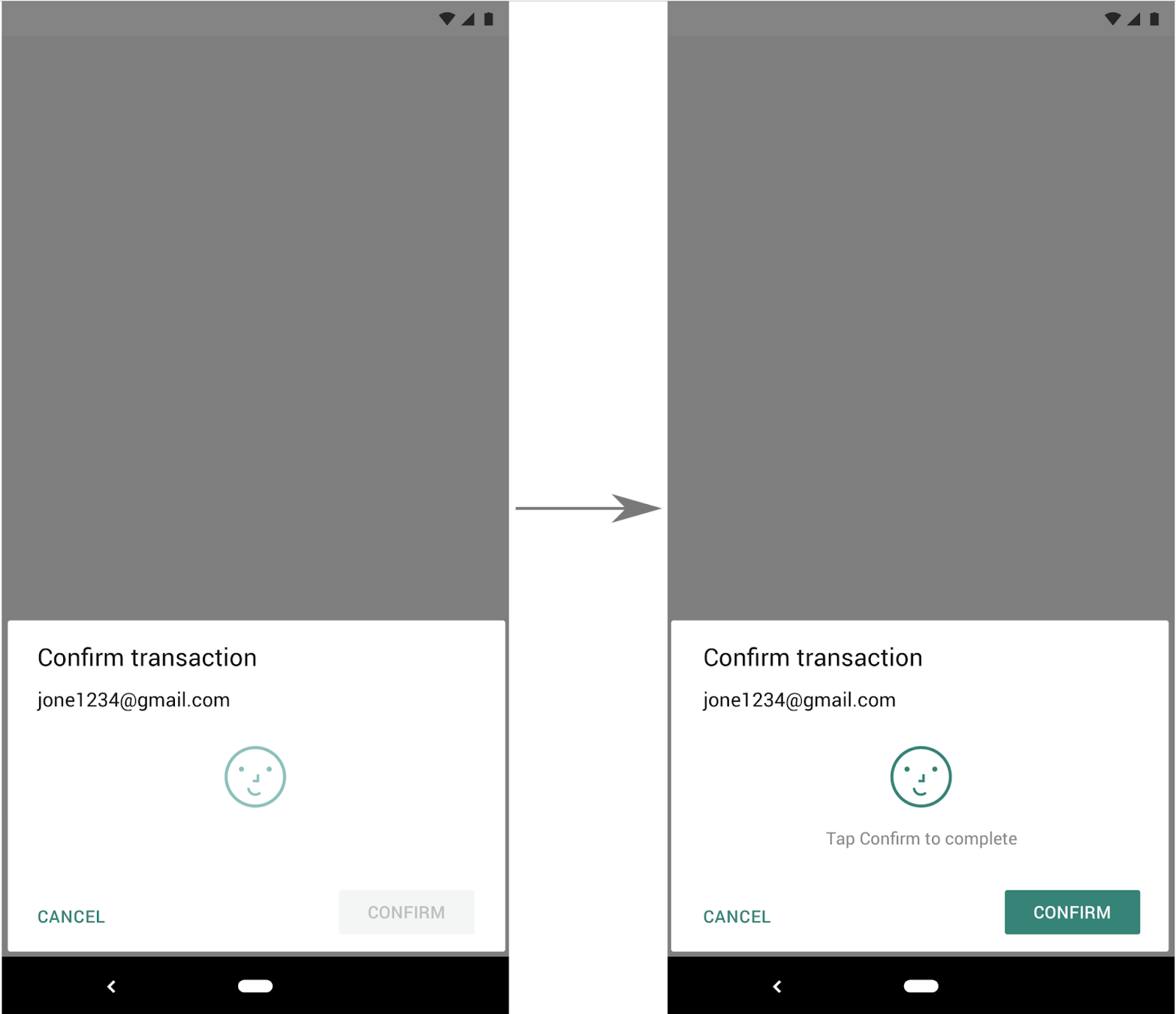

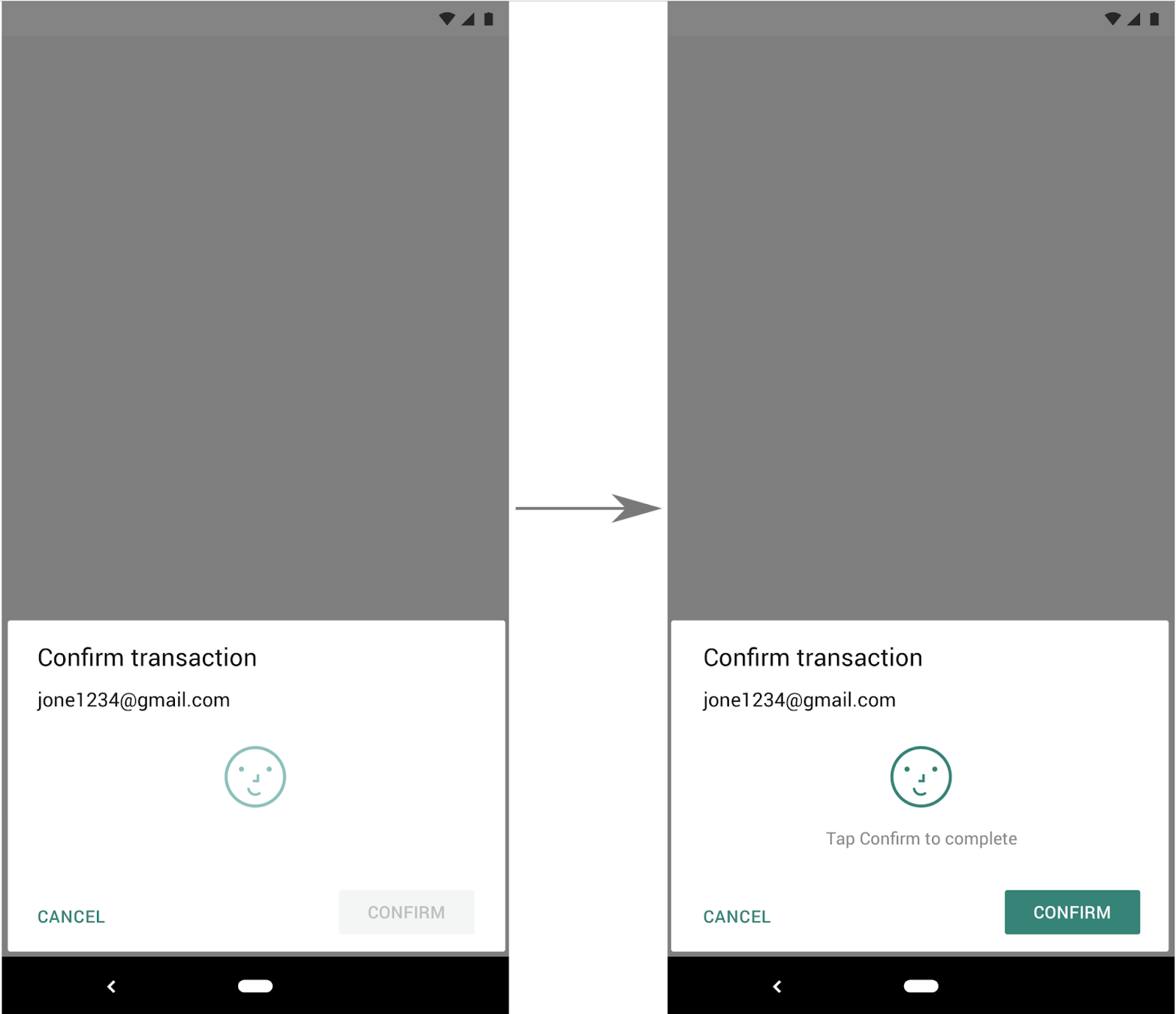

For actions that require a confirmation step, such as transactions and payments, we recommend using the default option -- setConfirmationRequired(true) -- which will add a confirmation button to the UI, as shown in Figure 2.

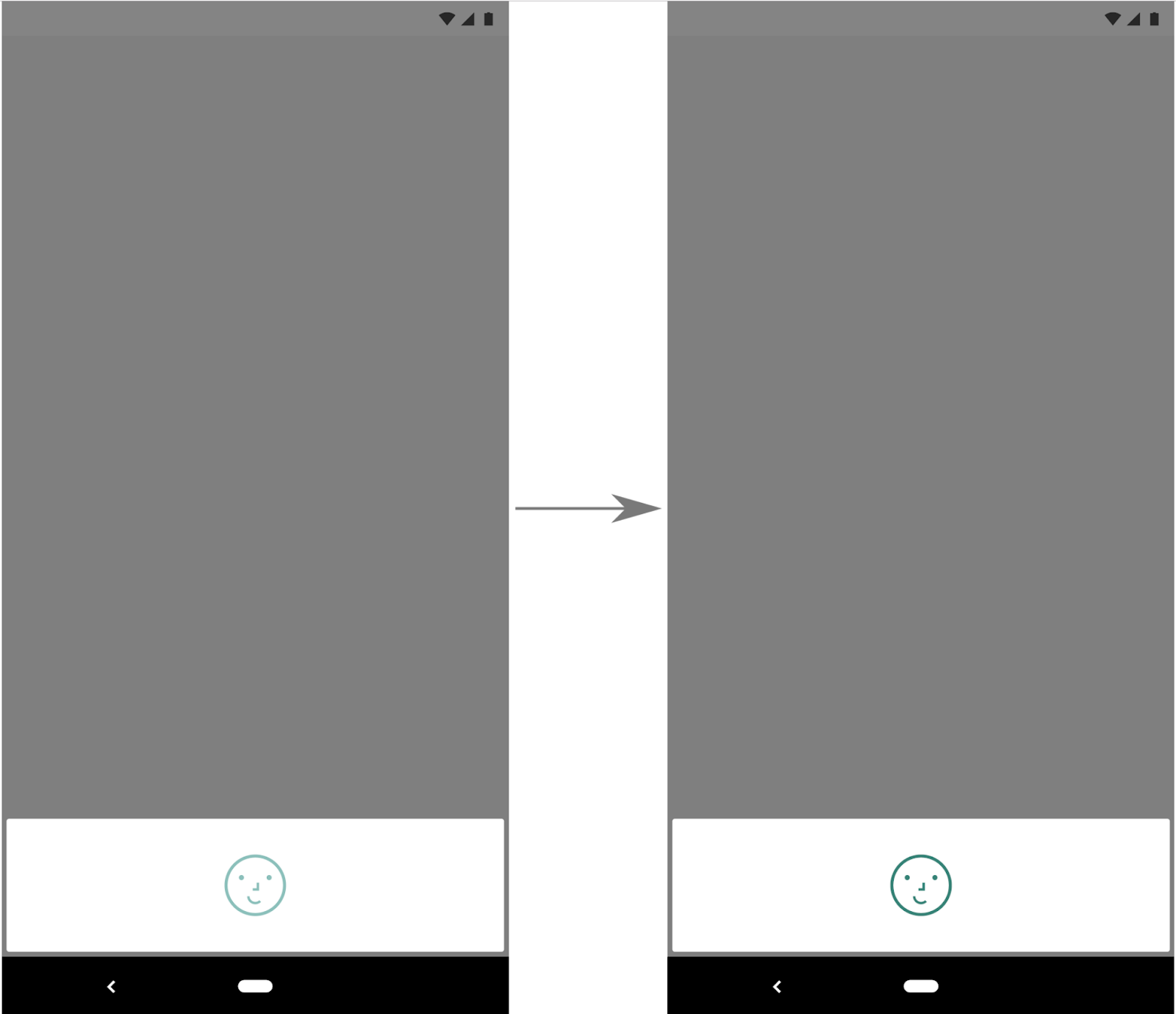

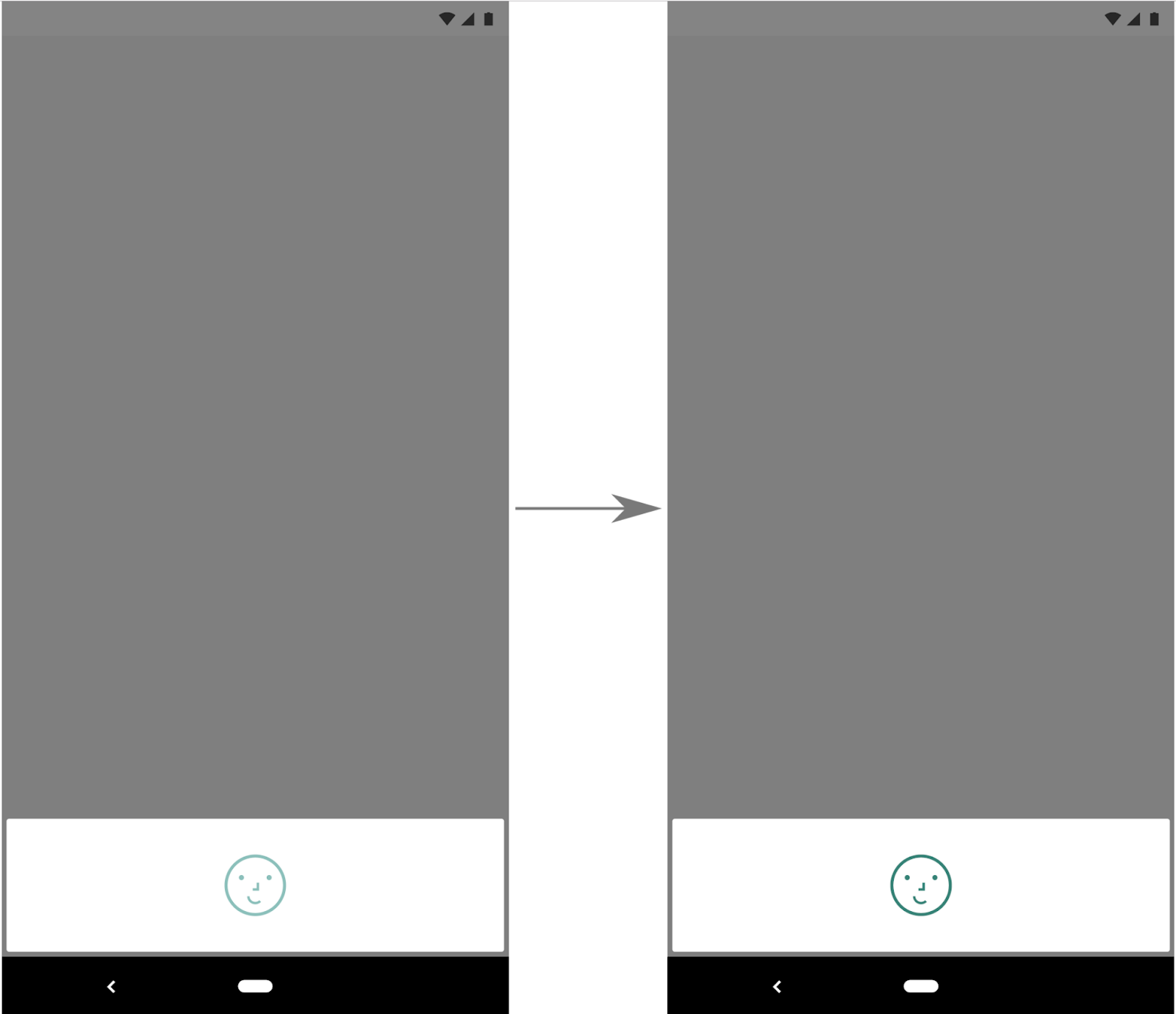

Figure 1. Example face authentication flow using BiometricPrompt with setConfirmationRequired(false).

Figure 2. Example face authentication flow using

Figure 2. Example face authentication flow using BiometricPrompt with setConfirmationRequired(true) (default behavior).

5. Ask the user to authenticate

Now that you have all the required pieces, you can ask the user to authenticate.

val canAuthenticate = biometricManager.canAuthenticate()

if (canAuthenticate == BiometricManager.BIOMETRIC_SUCCESS) {

biometricPrompt.authenticate(promptInfo)

} else {

Log.d(TAG, "could not authenticate because: $canAuthenticate")

}

And that’s it! You should now be able to perform authentication, using biometric credentials, on any device that runs Android 6.0 (API level 23) or higher.

Going forward

Because the ecosystem continues to evolve rapidly, the Android Framework team is constantly thinking about how to provide long-term support for both OEMs and developers. Given the biometric library’s consistent, system-themed UI, developers don’t need to worry about device-specific requirements, and users get a more trustworthy experience.

We welcome any feedback from developers and OEMs on how to make it more robust, easier to use, and supportive of a wider range of use cases.

For in-depth examples that showcase additional use cases and demonstrate how you might integrate this library into your app, check out our repo, which contains functional sample apps that make use of the library. You can also read the associated developer guide and API reference for more information.

Posted by Stephanie Cuthbertson, Director, Product Management

Posted by Stephanie Cuthbertson, Director, Product Management

Posted by The #AndroidDevSummit team

Posted by The #AndroidDevSummit team