Posted by Aaron Wade, Creative Technologist

Google Lab Sessions is a series of experimental AI collaborations with innovators. In our latest Lab Session we wanted to explore specifically how AI could expand human creativity. So we turned to GRAMMY® Award-winning rapper and MIT Visiting Scholar Lupe Fiasco to build an AI experiment called TextFX.

The discovery process

We started by spending time with Lupe to observe and learn about his creative process. This process was invariably marked by a sort of linguistic “tinkering”—that is, deconstructing language and then reassembling it in novel and innovative ways. Some of Lupe’s techniques, such as simile and alliteration, draw from the canon of traditional literary devices. But many of his tactics are entirely unique. Among them was a clever way of creating phrases that sound identical to a given word but have different meanings, which he demonstrated for us using the word “expressway”:

express whey (speedy delivery of dairy byproduct)

express sway (to demonstrate influence)

ex-press way (path without news media)

These sorts of operations played a critical role in Lupe’s writing. In light of this, we began to wonder: How might we use AI to help Lupe explore creative possibilities with text and language?

When it comes to language-related applications, large language models (LLMs) are the obvious choice from an AI perspective. LLMs are a category of machine learning models that are specially designed to perform language-related tasks, and one of the things we can use them for is generating text. But the question still remained as to how LLMs would actually fit into Lupe’s lyric-writing workflow.

Some LLMs, such as Google’s Bard, are fine-tuned to function as conversational agents. Others, such as the PaLM API’s Text Bison model, lack this conversational element and instead generate text by extending or fulfilling a given input text. One of the great things about this latter type of LLM is its capacity for few-shot learning. In other words, it can recognize patterns that occur in a small set of examples supplied in the input text and then replicate those patterns for novel inputs.
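To make few-shot prompting concrete, here is a minimal Python sketch of how a handful of examples might be assembled into a single input text. It is not the prompt format used in TextFX: the function name `build_few_shot_prompt`, the `Input:`/`Output:` labels, the instruction wording, and the new input word "seashell" are all assumptions made for illustration. Only the "expressway" examples come from Lupe's demonstration above.

```python
from typing import List, Tuple


def build_few_shot_prompt(
    instruction: str,
    examples: List[Tuple[str, str]],
    new_input: str,
) -> str:
    """Assemble a few-shot prompt from example pairs (hypothetical format)."""
    lines = [instruction, ""]
    # Each example shows the model one completed input-output pair.
    for source, result in examples:
        lines.append(f"Input: {source}")
        lines.append(f"Output: {result}")
        lines.append("")
    # The final pair is left open so the model continues the pattern.
    lines.append(f"Input: {new_input}")
    lines.append("Output:")
    return "\n".join(lines)


# Example pairs taken from the "expressway" demonstration.
examples = [
    ("expressway", "express whey (speedy delivery of dairy byproduct)"),
    ("expressway", "express sway (to demonstrate influence)"),
    ("expressway", "ex-press way (path without news media)"),
]

prompt = build_few_shot_prompt(
    "Break a word into phrases that sound the same.",  # assumed wording
    examples,
    "seashell",  # hypothetical new input
)
print(prompt)
```

A text-completion model given this string would be expected to continue after the final `Output:` label in the style of the examples, which is the pattern replication described above.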

As an initial experiment, we had Lupe provide more examples of his same-sounding phrase technique. We then used those examples to construct a prompt, which is a carefully crafted string of text that primes the LLM to behave in a certain way. Our initial prompt for the same-sounding phrase task looked like this:

After successfully codifying the same-sounding phrase task into a few-shot prompt, we worked with Lupe to identify additional creative tasks that we might be able to accomplish using the same few-shot prompting strategy. In the end, we devised ten prompts, each uniquely designed to explore creative possibilities that may arise from a given word, phrase, or concept:

SIMILE - Create a simile about a thing or concept.

EXPLODE - Break a word into similar-sounding phrases.

UNEXPECT - Make a scene more unexpected and imaginative.

CHAIN - Build a chain of semantically related items.

POV - Evaluate a topic through different points of view.

ALLITERATION - Curate topic-specific words that start with a chosen letter.

ACRONYM - Create an acronym using the letters of a word.

FUSE - Find intersections between two things.

SCENE - Generate sensory details about a scene.

UNFOLD - Slot a word into other existing words or phrases.

We were able to quickly prototype each of these ideas using MakerSuite, which is a platform that lets users easily build and experiment with LLM prompts via an interactive interface.

How we made it: building using the PaLM API

After we finalized the few-shot prompts, we built an app to house them. We decided to call it TextFX, drawing from the idea that each tool has a different “effect” on its input text. Like a sound effect, but for text.

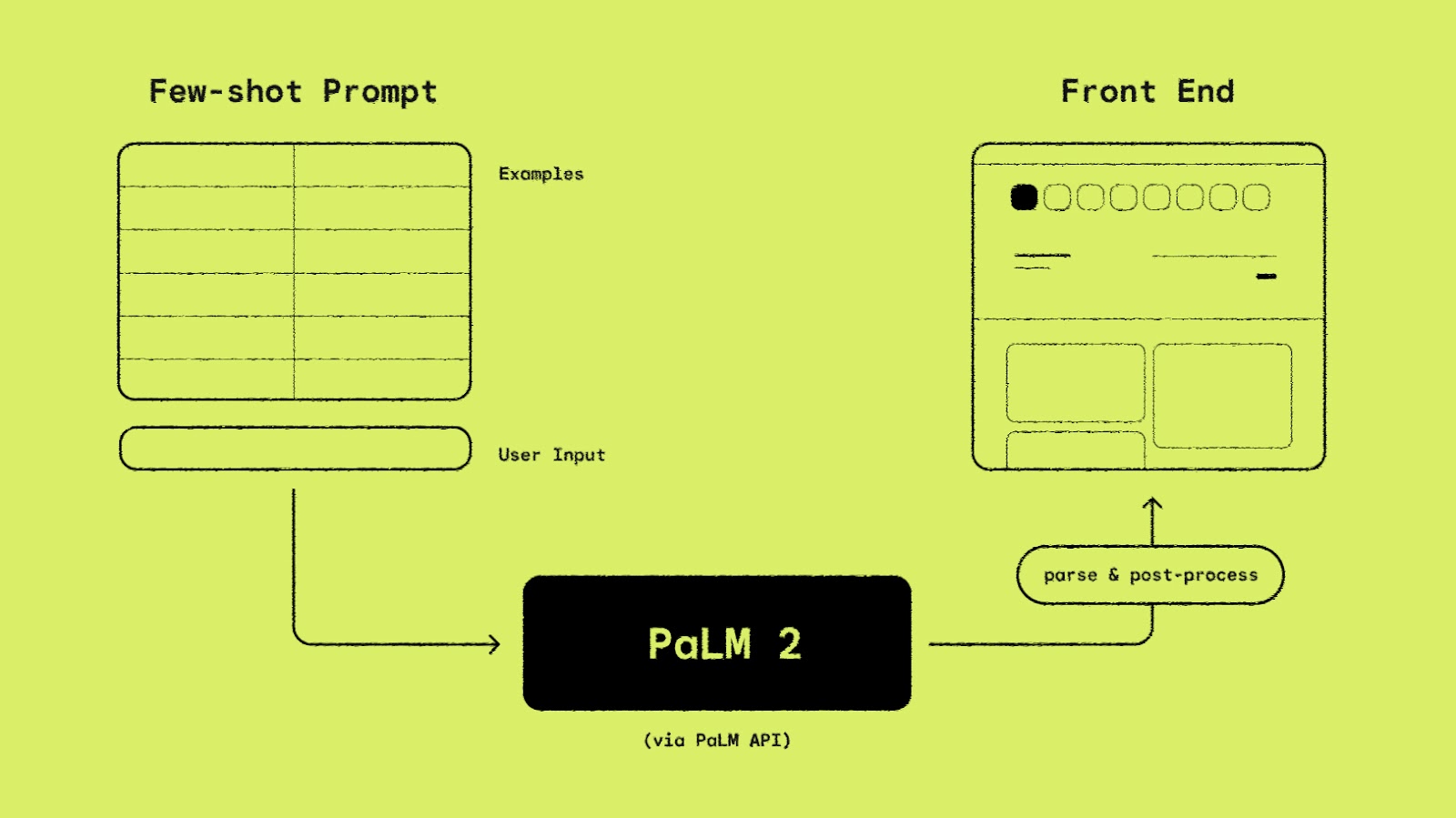

We save our prompts as strings in the source code and send them to Google’s PaLM 2 model using the PaLM API, which serves as an entry point to Google’s large language models.

All of our prompts are designed to terminate with an incomplete input-output pair. When a user submits an input, we append that input to the prompt before sending it to the model. The model predicts the corresponding output(s) for that input, and then we parse each result from the model response and do some post-processing before finally surfacing the result in the frontend.
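The request flow described above can be sketched in Python roughly as follows. This is an illustration under stated assumptions, not the TextFX source: the stored prompt text, the `Input:`/`Output:` labels, the helper names, and the specific post-processing steps (trimming and de-duplicating) are all hypothetical, and the model call is replaced by a stand-in function so that the example runs without API access.

```python
from typing import Callable, List

# Hypothetical stored prompt; the real TextFX prompts are not reproduced here.
STORED_PROMPT = (
    "Break a word into phrases that sound the same.\n\n"
    "Input: expressway\n"
    "Output: express whey\n"
    "Output: express sway\n\n"
)


def complete_prompt(stored_prompt: str, user_input: str) -> str:
    """Append the user's input so the prompt ends with an open pair."""
    return f"{stored_prompt}Input: {user_input.strip()}\nOutput:"


def parse_outputs(raw: str) -> List[str]:
    """Split a raw completion into cleaned, de-duplicated results."""
    # Discard anything after the model starts inventing a new input.
    completion = raw.split("Input:", 1)[0]
    results: List[str] = []
    seen = set()
    for line in completion.splitlines():
        cleaned = line.strip()
        if cleaned.startswith("Output:"):
            cleaned = cleaned[len("Output:"):].strip()
        if cleaned and cleaned.lower() not in seen:
            seen.add(cleaned.lower())
            results.append(cleaned)
    return results


def run_effect(user_input: str, generate: Callable[[str], str]) -> List[str]:
    """Build the prompt, call the model, and parse its response."""
    return parse_outputs(generate(complete_prompt(STORED_PROMPT, user_input)))


def fake_generate(prompt: str) -> str:
    """Stand-in for a real model call; returns a canned completion."""
    return " sea shell\nOutput: see shell\nOutput: sea shell\n\nInput: another"


results = run_effect("seashell", fake_generate)
print(results)
```

Passing the model call in as a function keeps the prompt assembly and parsing logic testable on their own; in a real app, `fake_generate` would be swapped for a function that sends the prompt to the PaLM API and returns the generated text.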

Users may optionally adjust the model temperature, a hyperparameter that controls how much randomness goes into choosing each next token and so roughly corresponds to the amount of creativity allowed in the model outputs.
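For intuition, the sketch below shows the textbook way temperature is applied when sampling from a language model: the model's raw scores (logits) are divided by the temperature before being converted into probabilities. This is a general illustration, not a description of PaLM's internals, which the post does not cover. The function name and the example logits are made up for the demonstration.

```python
import math
from typing import List


def softmax_with_temperature(logits: List[float], temperature: float) -> List[float]:
    """Convert logits to probabilities after scaling by temperature."""
    if temperature <= 0:
        raise ValueError("temperature must be positive")
    scaled = [z / temperature for z in logits]
    # Subtract the maximum before exponentiating for numerical stability.
    peak = max(scaled)
    exps = [math.exp(s - peak) for s in scaled]
    total = sum(exps)
    return [e / total for e in exps]


# Made-up scores for three candidate next tokens.
logits = [2.0, 1.0, 0.1]

low = softmax_with_temperature(logits, 0.2)   # sharper distribution
high = softmax_with_temperature(logits, 2.0)  # flatter distribution

print("low temperature: ", [round(p, 3) for p in low])
print("high temperature:", [round(p, 3) for p in high])
```

At a low temperature nearly all of the probability goes to the top-scoring token, so outputs are more predictable. At a high temperature the less likely tokens get a larger share, which is why higher settings tend to produce more surprising text.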

Try it yourself

You can try TextFX for yourself at textfx.withgoogle.com.

We’ve also made all of the LLM prompts available in MakerSuite. If you have access to the public preview for the PaLM API and MakerSuite, you can create your own copies of the prompts using the links below. Otherwise, you can join the waitlist.

And in case you’d like to take a closer look at how we built TextFX, we’ve open-sourced the code here.

If you want to try building with the PaLM API and MakerSuite, join the waitlist.

A final word

TextFX is an example of how you can experiment with the PaLM API and build applications that leverage Google’s state-of-the-art large language models. More broadly, this exploration speaks to the potential of AI to augment human creativity. TextFX targets creative writing, but what might it mean for AI to enter other creative domains as a collaborator? Creators play a crucial role in helping us imagine what these collaborations might look like. Our hope is that this Lab Session gives you a glimpse of what’s possible using the PaLM API and inspires you to use Google’s AI offerings to bring your own ideas to life, in whatever your craft may be.

If you’d like to explore more Lab Sessions like this one, head over to labs.google.com.