By Marco Cavalli, Product Manager

As your cloud footprint grows, it becomes harder to answer questions like "How do I best organize my resources?", "How do I separate departments, teams, environments and applications?", "How do I delegate administrative responsibilities in a way that maintains central visibility?" and "How do I manage billing and cost allocation?"

Google Cloud Platform (GCP) tools like Cloud Identity & Access Management, Cloud Resource Manager, and Organization policies let you tackle these problems in a way that best meets your organization’s requirements.

Specifically, the Organization resource, which represents a company in GCP and is the root of the resource hierarchy, provides centralized visibility and control over all its GCP resources.

Now, we're excited to announce the beta launch of Folders, an additional layer under the Organization that provides greater flexibility in arranging GCP resources to match your organizational structure.

“As our work with GCP scaled, we started looking for ways to streamline our projects. Thanks to Cloud Resource Manager, we now centrally control and monitor how resources are created and billed in our domain. We use IAM and Folders to provide our departments with the autonomy and velocity they need, without losing visibility into resource access and usage. This has significantly reduced our management overhead, and had a direct positive effect on our ability to support our customers at scale,” says Marcin Kołda, Senior Software Engineer at Ocado Technology.

The Google Cloud resource hierarchy

Organization, Projects and now Folders make up the GCP resource hierarchy. You can think of the hierarchy as the equivalent of the filesystem in a traditional operating system. It provides ownership, in that each GCP resource has exactly one parent that controls its lifecycle. It provides grouping, as resources can be assembled into Projects and Folders that logically represent services, applications or organizational entities, such as departments and teams in your organization. Furthermore, it provides the “scaffolding” for access control and configuration policies, which you can attach at any node and which propagate down the hierarchy, simplifying management and improving security.
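The filesystem analogy can be made concrete with a small in-memory model. This is a minimal sketch, not the Resource Manager API: the `Resource` class and its `path()` and `descendants()` helpers are hypothetical names chosen for illustration. Each resource stores exactly one parent, so every node has a single path back to the Organization, the way every file has a single path back to `/`.

```python
from dataclasses import dataclass, field
from typing import List, Optional


@dataclass(eq=False)
class Resource:
    """Hypothetical in-memory node of the resource hierarchy (not a GCP API type)."""

    name: str
    kind: str  # "organization", "folder" or "project"
    parent: Optional["Resource"] = field(default=None, repr=False)
    children: List["Resource"] = field(default_factory=list, repr=False)

    def __post_init__(self) -> None:
        # Ownership: each resource is registered under exactly one parent,
        # much like a file lives in exactly one directory.
        if self.parent is not None:
            self.parent.children.append(self)

    def path(self) -> str:
        """Follow the single-parent chain up to the Organization, like an absolute path."""
        parts: List[str] = []
        node: Optional[Resource] = self
        while node is not None:
            parts.append(node.name)
            node = node.parent
        return "/" + "/".join(reversed(parts))

    def descendants(self) -> List["Resource"]:
        """Grouping: every resource beneath this node, depth-first."""
        found: List[Resource] = []
        for child in self.children:
            found.append(child)
            found.extend(child.descendants())
        return found


# Usage: a slice of the example hierarchy used later in this post.
org = Resource("myorganization.com", "organization")
engineering = Resource("Engineering", "folder", parent=org)
product_a = Resource("Product_A", "folder", parent=engineering)
development = Resource("Development", "folder", parent=product_a)

print(development.path())  # /myorganization.com/Engineering/Product_A/Development
print([r.name for r in engineering.descendants()])  # ['Product_A', 'Development']
```

Keeping the parent as a single reference (rather than a list) is what enforces the "exactly one parent" rule in this model.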

The diagram below shows an example of the GCP resource hierarchy.

Projects are the first level of ownership and grouping, and the first level at which policies can be attached. At the other end of the spectrum, the Organization contains all the resources that belong to a company and provides the high-level scope for centralized visibility and control. A policy defined at the Organization level is inherited by all the resources in the hierarchy. In the middle, Folders can contain Projects or other Folders, giving you the flexibility to organize resources and draw boundaries that match your isolation requirements.

As the Organization Admin for your company, you can, for example, create first-level Folders under the Organization to map your departments: Engineering, IT, Operations, Marketing, etc. You can then delegate full control of each Folder to the lead of the corresponding department by assigning them the Folder Admin IAM role. Each department can organize its own resources by creating sub-folders for teams or applications. You can define Organization-wide policies centrally at the Organization level, and they're inherited by all resources in the Organization, ensuring central visibility and control. Similarly, policies defined at the Folder level are propagated down the corresponding subtree, providing teams and departments with the appropriate level of autonomy.
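Delegating a department Folder amounts to one grant at the Folder that then covers its whole subtree. The sketch below is a hypothetical model, not the IAM API: the hierarchy is a plain child-to-parent dictionary, the member addresses use the placeholder domain example.com, the `Helpdesk` sub-folder is invented, and `administered_resources` is an illustrative helper. Only the role name `roles/resourcemanager.folderAdmin` comes from this post.

```python
from typing import Dict, List, Optional, Set

# Hypothetical hierarchy: each resource maps to its single parent.
PARENT: Dict[str, Optional[str]] = {
    "myorganization.com": None,
    "Engineering": "myorganization.com",
    "IT": "myorganization.com",
    "Product_A": "Engineering",
    "Product_B": "Engineering",
    "Helpdesk": "IT",
}

# Hypothetical IAM bindings attached at individual nodes: resource -> role -> members.
BINDINGS: Dict[str, Dict[str, Set[str]]] = {
    "Engineering": {"roles/resourcemanager.folderAdmin": {"user:eng-lead@example.com"}},
    "IT": {"roles/resourcemanager.folderAdmin": {"user:it-lead@example.com"}},
}


def lineage(resource: str) -> List[str]:
    """The resource itself followed by each ancestor up to the Organization."""
    chain: List[str] = []
    node: Optional[str] = resource
    while node is not None:
        chain.append(node)
        node = PARENT[node]
    return chain


def holds_role(member: str, role: str, resource: str) -> bool:
    """True if the role is granted on the resource or inherited from any ancestor."""
    return any(member in BINDINGS.get(node, {}).get(role, set()) for node in lineage(resource))


def administered_resources(member: str) -> List[str]:
    """Every resource the member controls as Folder Admin, directly or by inheritance."""
    role = "roles/resourcemanager.folderAdmin"
    return sorted(r for r in PARENT if holds_role(member, role, r))


print(administered_resources("user:eng-lead@example.com"))
# ['Engineering', 'Product_A', 'Product_B']
print(administered_resources("user:it-lead@example.com"))
# ['Helpdesk', 'IT']
```

Each department lead's scope stops at the boundary of their own subtree; neither lead reaches the Organization node or the other department.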

What to consider when mapping your organization onto GCP

Each organization has a unique structure, culture, velocity and autonomy requirements. While there isn’t a predefined recipe that fits all scenarios, here are some criteria to consider as you organize your resources in GCP.

Isolation: Where do you want to establish trust boundaries: at the department and team level, at the application or service level, or between production, test and dev environments? Use Folders with their nested hierarchy and Projects to create isolation between your cloud resources. Set IAM policies at the different levels of the hierarchy to determine who has access to which resources.

Delegation: How do you balance autonomy with centralized control? Folders and IAM help you establish compartments where you can allow more freedom for developers to create and experiment, and reserve areas with stricter control. For example, you can create a Development Folder where users are allowed to create Projects, spin up virtual machines (VMs) and enable services. You can also safeguard your production workflows by collecting them in dedicated Projects and Folders where least privilege is enforced through IAM.

Inheritance: How can inheritance optimize policy management? As mentioned above, you can define policies at every node of the hierarchy and have them propagate down. IAM policies are additive: the effective policy for a resource is the union of the policy set on it and the policies inherited from its ancestors. If, for example, [email protected] is granted the Compute Engine instanceAdmin role on a Folder, he can start VMs in every Project under that Folder.

Shared resources: Are there resources that need to be shared across your organization, such as networks, VM images or service accounts? Use Projects and Folders to build central repositories for your shared resources, limit administrative privileges over these resources to selected users, and apply the principle of least privilege when granting access to everyone else.
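The additive inheritance described under Inheritance can be sketched as a walk from a resource up to the Organization, collecting roles along the way. This is a hypothetical model rather than the IAM API: the folder and project names reuse ones from the example later in this post, the bindings and the member `user:bob@example.com` are invented for illustration, and `effective_roles` is not a real library function. The role strings are predefined IAM role names used here only as labels.

```python
from typing import Dict, Optional, Set

# Hypothetical hierarchy (child -> single parent).
PARENT: Dict[str, Optional[str]] = {
    "myorganization.com": None,
    "Engineering": "myorganization.com",
    "Product_A": "Engineering",
    "Production": "Product_A",
    "my-awesome-service-1": "Production",
    "my-awesome-service-2": "Production",
}

# Hypothetical policies attached at individual nodes: resource -> member -> roles.
POLICIES: Dict[str, Dict[str, Set[str]]] = {
    "myorganization.com": {"user:bob@example.com": {"roles/viewer"}},
    "Product_A": {"user:bob@example.com": {"roles/compute.instanceAdmin"}},
    "my-awesome-service-2": {"user:bob@example.com": {"roles/storage.objectViewer"}},
}


def effective_roles(member: str, resource: str) -> Set[str]:
    """Union of the member's roles on the resource and on all of its ancestors."""
    roles: Set[str] = set()
    node: Optional[str] = resource
    while node is not None:
        # In this model each level only adds roles; nothing lower in the
        # tree removes a grant made higher up.
        roles |= POLICIES.get(node, {}).get(member, set())
        node = PARENT[node]
    return roles


print(sorted(effective_roles("user:bob@example.com", "my-awesome-service-1")))
# ['roles/compute.instanceAdmin', 'roles/viewer']
print(sorted(effective_roles("user:bob@example.com", "my-awesome-service-2")))
# ['roles/compute.instanceAdmin', 'roles/storage.objectViewer', 'roles/viewer']
```

The single grant on `Product_A` shows up in both projects below it, while the project-level grant on `my-awesome-service-2` adds to, rather than replaces, what is inherited.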

Managing the GCP resource hierarchy

As part of the Folders beta launch, we've redesigned the Cloud Console user interface to improve visibility and management of the resource hierarchy. You can now effortlessly browse the hierarchy, manage resources and define IAM policies via the new scope picker and the Manage Resources page shown below.

In this example, the Organization “myorganization.com” is structured into two top-level folders for the Engineering and IT departments. The Engineering department then creates two sub-folders for Product_A and Product_B, which in turn contain folders for the production, development and test environments. You can define IAM permissions for each Folder from within the same UI, by selecting the resources of interest and opening the control pane on the right-hand side, as shown below.

By leveraging IAM permissions, the Organization Admin can restrict users' visibility to portions of the tree, creating isolation and enforcing trust boundaries between departments, products or environments. For example, to maximize the security of Product_A's production environment, only selected users may be granted access to, or visibility of, the corresponding Folder. Developer [email protected], for instance, is working on new features for Product_A, but to minimize the risk of mistakes in the production environment, he isn't given visibility into the Production Folder. You can see his view of the Organization hierarchy in the diagram below:

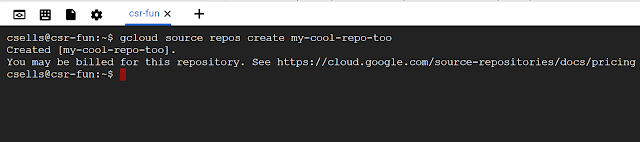

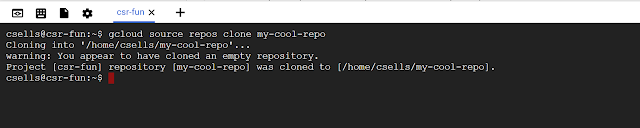
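The developer's restricted view can be approximated by filtering the example tree down to the nodes he has any access to. This sketch rests on a simplifying assumption: a user "sees" a resource if they hold any role on it or on an ancestor, whereas actual Console visibility depends on specific permissions such as `resourcemanager.folders.get`. The grants, the member `user:dev@example.com`, and the helpers `can_see` and `visible_tree` are hypothetical.

```python
from typing import Dict, List, Optional, Set

ORG = "myorganization.com"

# Build the example tree from this post: child path -> parent path.
PARENT: Dict[str, Optional[str]] = {ORG: None, "Engineering": ORG, "IT": ORG}
for product in ("Product_A", "Product_B"):
    product_path = f"Engineering/{product}"
    PARENT[product_path] = "Engineering"
    for env in ("Production", "Development", "Test"):
        PARENT[f"{product_path}/{env}"] = product_path

# Hypothetical grants for a developer working on Product_A:
# access to Development and Test, deliberately nothing on Production.
GRANTS: Dict[str, Set[str]] = {
    "Engineering/Product_A/Development": {"user:dev@example.com"},
    "Engineering/Product_A/Test": {"user:dev@example.com"},
}


def can_see(member: str, resource: str) -> bool:
    """Assumed rule: visible if the member holds any role here or on an ancestor."""
    node: Optional[str] = resource
    while node is not None:
        if member in GRANTS.get(node, set()):
            return True
        node = PARENT[node]
    return False


def visible_tree(member: str) -> List[str]:
    """All resources in the tree that pass the visibility check, sorted by path."""
    return sorted(r for r in PARENT if can_see(member, r))


print(visible_tree("user:dev@example.com"))
# ['Engineering/Product_A/Development', 'Engineering/Product_A/Test']
```

Because nothing is granted on `Product_A` itself or above it, the Production sibling never enters the developer's view in this model.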

As with any other GCP component, alongside the UI, we've provided API and command line (gcloud) interfaces to programmatically manage the entire resource hierarchy, enabling automation and standardization of policies and environments.

The following Cloud Shell session uses the gcloud command-line tool to build part of the hierarchy above programmatically and to inspect the result.

```console
# Find your Organization ID
me@cloudshell:~$ gcloud organizations list
DISPLAY_NAME        ID            DIRECTORY_CUSTOMER_ID
myorganization.com  358981462196  C03ryezon

# Create first-level folder “Engineering” under the Organization node
me@cloudshell:~$ gcloud alpha resource-manager folders create \
    --display-name=Engineering --organization=358981462196
Waiting for [operations/fc.2201898884439886347] to finish...done. Created [<Folder
createTime: u'2017-04-16T22:49:10.144Z'
displayName: u'Engineering'
lifecycleState: LifecycleStateValueValuesEnum(ACTIVE, 1)
name: u'folders/1000107035726'
parent: u'organizations/358981462196'>].

# Grant the Folder Admin role on the “Engineering” folder
me@cloudshell:~$ gcloud alpha resource-manager folders add-iam-policy-binding \
    1000107035726 --member=user:[email protected] \
    --role=roles/resourcemanager.folderAdmin
bindings:
- members:
  - user:[email protected]
  - user:[email protected]
  role: roles/resourcemanager.folderAdmin
- members:
  - user:[email protected]
  role: roles/resourcemanager.folderEditor
etag: BwVNX61mPnc=

# Check the IAM policy set on the “Engineering” folder
me@cloudshell:~$ gcloud alpha resource-manager folders get-iam-policy \
    1000107035726
bindings:
- members:
  - user:[email protected]
  - user:[email protected]
  role: roles/resourcemanager.folderAdmin
- members:
  - user:[email protected]
  role: roles/resourcemanager.folderEditor
etag: BwVNX61mPnc=

# Create second-level folder “Product_A” under folder “Engineering”
me@cloudshell:~$ gcloud alpha resource-manager folders create \
    --display-name=Product_A --folder=1000107035726
Waiting for [operations/fc.2194220672620579778] to finish...done. Created [].

# Create third-level folder “Development” under folder “Product_A”
me@cloudshell:~$ gcloud alpha resource-manager folders create \
    --display-name=Development --folder=732853632103
Waiting for [operations/fc.3497651884412259206] to finish...done. Created [].

# List all the folders under the Organization
me@cloudshell:~$ gcloud alpha resource-manager folders list \
    --organization=358981462196
DISPLAY_NAME  PARENT_NAME                 ID
IT            organizations/358981462196  575615098945
Engineering   organizations/358981462196  661646869517
Operations    organizations/358981462196  895951706304

# List all the folders under the “Engineering” folder
me@cloudshell:~$ gcloud alpha resource-manager folders list \
    --folder=1000107035726
DISPLAY_NAME  PARENT_NAME            ID
Product_A     folders/1000107035726  732853632103
Product_B     folders/1000107035726  941564020040

# Create a new project in folder “Product_A”
me@cloudshell:~$ gcloud alpha projects create my-awesome-service-2 \
    --folder 732853632103
Create in progress for [https://cloudresourcemanager.googleapis.com/v1/projects/my-awesome-service-2].
Waiting for [operations/pc.2821699584791562398] to finish...done.

# List projects under folder “Production”
me@cloudshell:~$ gcloud alpha projects list --filter 'parent.id=725271112613'
PROJECT_ID            NAME                  PROJECT_NUMBER
my-awesome-service-1  my-awesome-service-1  869942226409
my-awesome-service-2  my-awesome-service-2  177629658252
```

As you can see, Cloud Resource Manager is a powerful way to manage and organize the GCP resources that belong to an organization. To learn more, check out the Quickstarts, and stay tuned as we add more capabilities in the months to come.