[Editor’s note: Today we hear from Boston, MA-based Silicon Therapeutics, which applies computational methods to complex biochemical problems relevant to human biology.]

As an integrated computational drug discovery firm, we recently deployed our INSITE Screening platform on Google Cloud Platform (GCP) to analyze over 10 million commercially available molecular compounds as potential starting points for next-generation medicines. In one week, we performed over 500 million docking computations to evaluate how a protein responds to a given molecule. Each computation used a docking program to predict the preferred orientation of a small molecule bound to the protein, along with the associated energetics, so we could assess whether the molecule would bind to the target protein and alter its function.

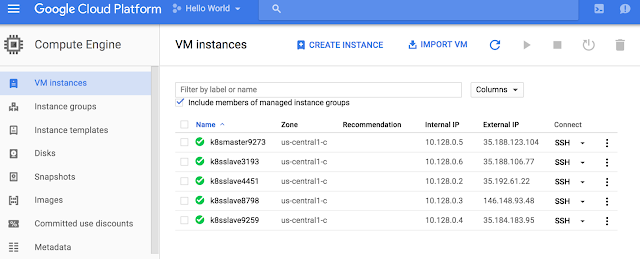

With a combination of Google Compute Engine standard and Preemptible VMs, we used up to 16,000 cores, for a total of 3 million core-hours at a cost of about $30,000. That might sound like a lot of time and money, but it is far cheaper and faster than screening every compound experimentally. A physics-based approach such as our INSITE platform is much more computationally expensive than some other virtual screening methods, but it lets us find novel binders without any prior information about active compounds (no drug-like compounds are known to bind this particular target). In the final stage of the calculations, we ran all-atom molecular dynamics (MD) simulations on the top 1,000 molecules to decide which ones to purchase and assay experimentally for activity.
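The scale figures quoted above can be cross-checked with a few lines of arithmetic. This is a rough sketch using the rounded numbers from the text. It assumes, for simplicity, that all 3 million core-hours went to docking, so the per-docking time is an upper bound if the MD stage is part of that total. The post doesn't say how the roughly 50 docking runs per compound were distributed.

```python
# Rounded figures quoted in the text.
compounds = 10_000_000      # "over 10 million" compounds screened
dockings = 500_000_000      # "over 500 million" docking computations
core_hours = 3_000_000      # total core-hours used
peak_cores = 16_000         # "up to 16,000 cores"
cost_usd = 30_000           # "about $30,000"

dockings_per_compound = dockings / compounds
cost_per_core_hour = cost_usd / core_hours
# Upper bound: assumes every core-hour went to docking rather than MD.
core_seconds_per_docking = core_hours * 3600 / dockings
# Lower bound on wall-clock time: all peak cores busy for the whole run.
days_at_peak = core_hours / peak_cores / 24

print(f"{dockings_per_compound:.0f} dockings per compound")          # 50
print(f"${cost_per_core_hour:.3f} per core-hour")                     # $0.010
print(f"{core_seconds_per_docking:.1f} core-seconds per docking")     # 21.6
print(f"{days_at_peak:.1f} days with all {peak_cores:,} cores busy")  # 7.8
```

About eight days at full scale is roughly in line with the one-week timeline, given that every input is rounded.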

The bottom line: We successfully completed the screen using our INSITE platform on GCP and found several molecules that have recently been experimentally verified to have on-target and cell-based activity.

We chose to run this high-performance computing (HPC) job on GCP over other public cloud providers for a number of reasons:

- Availability of high-performance compute infrastructure. Compute Engine has a good inventory of high-performance processors that can be configured with large numbers of cores and large amounts of memory. It also offers GPUs, which are a great fit for some of our computations, such as molecular dynamics and free energy calculations. SSD storage made a big difference in performance, as the total I/O for this screen exceeded 40 TB of raw data. Fast connectivity between the front-end node and the compute nodes was also a big factor, because the front-end disk was NFS-mounted on the compute nodes.

- Support for industry-standard tools. As a startup, we value the ability to run our workloads wherever we see fit. Our priorities can change rapidly based on project challenges (chemistry and biology), competition, opportunities and the availability of compute resources. Our INSITE platform is built on a combination of open-source and proprietary in-house software, so portability and repeatability across in-house clusters and public clouds are essential.

- An attractive pricing model. Preemptible VMs combine low cost with predictability: they offer up to 80% off standard instances, with no bidding and no surprises. That means we don't have to worry about jobs being killed because of a bidding war, which can significantly delay our screens and adds unnecessary human overhead to manage the jobs.
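To make the pricing point concrete, here is a minimal sketch of the blended price of a fleet that mixes standard and Preemptible VMs. The 80% discount is the "up to" ceiling from the text, and the 90% preemptible share in the usage line is a hypothetical mix, not the split used in this screen. Work that has to be rerun after a preemption is not modeled.

```python
def blended_price_factor(preemptible_share: float, discount: float = 0.80) -> float:
    """Price per core-hour of a mixed fleet, relative to standard VMs (1.0 = all standard).

    preemptible_share: fraction of core-hours run on Preemptible VMs, in [0, 1].
    discount: preemptible discount versus standard pricing; 0.80 is the
        "up to 80%" ceiling, not a guaranteed rate for every machine type.
    Work lost to preemptions and rerun later is not modeled.
    """
    if not 0.0 <= preemptible_share <= 1.0:
        raise ValueError("preemptible_share must be in [0, 1]")
    if not 0.0 <= discount < 1.0:
        raise ValueError("discount must be in [0, 1)")
    standard_part = 1.0 - preemptible_share                  # pays full price
    preemptible_part = preemptible_share * (1.0 - discount)  # pays the discounted price
    return standard_part + preemptible_part


# Hypothetical mix: 90% of core-hours on Preemptible VMs at the maximum discount.
factor = blended_price_factor(0.9)
print(f"blended price: {factor:.2f}x standard ({1 - factor:.0%} saving)")
```

Because the discount is fixed rather than bid, the blended price depends only on the mix, which is what makes the budget for a screen predictable.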

To manage compute jobs, we enlisted the help of two popular open-source tools: Slurm, a workload manager used by 60% of the world’s TOP500 clusters, and ElastiCluster, which provides a command-line tool to create, manage and set up compute clusters hosted on a variety of cloud infrastructures. Using these open-source packages is economical, provides the lion’s share of the functionality of paid software solutions and ensures we can run our workloads in-house or elsewhere.
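The post doesn't describe how the docking work was divided into Slurm jobs. One common pattern, shown here as a minimal sketch, is a Slurm job array in which each task docks one contiguous chunk of the compound library. The `#SBATCH` options and the `SLURM_ARRAY_TASK_ID` variable are standard Slurm; the `dock_chunk` command and its flags are hypothetical placeholders for whatever docking program a pipeline actually calls. `--requeue` marks tasks as eligible to be put back in the queue if their node fails or disappears (as a preempted VM does), though whether that happens automatically depends on the cluster's Slurm configuration.

```python
def make_array_script(n_compounds: int, chunk_size: int, cpus_per_task: int = 1) -> str:
    """Build an sbatch script in which each array task docks one chunk of the library."""
    if n_compounds <= 0 or chunk_size <= 0 or cpus_per_task <= 0:
        raise ValueError("n_compounds, chunk_size and cpus_per_task must be positive")
    # Ceiling division: the last chunk may be partial.
    n_tasks = (n_compounds + chunk_size - 1) // chunk_size
    lines = [
        "#!/bin/bash",
        "#SBATCH --job-name=vscreen",
        f"#SBATCH --array=0-{n_tasks - 1}",
        f"#SBATCH --cpus-per-task={cpus_per_task}",
        "#SBATCH --requeue",
        # Each task derives its first compound index from its array index.
        f"START=$(( SLURM_ARRAY_TASK_ID * {chunk_size} ))",
        # Hypothetical docking CLI; assumed to stop at the end of the library.
        f'dock_chunk --start "$START" --count {chunk_size} --threads {cpus_per_task}',
    ]
    return "\n".join(lines) + "\n"


if __name__ == "__main__":
    print(make_array_script(10_000_000, 10_000, cpus_per_task=4), end="")
```

With 10 million compounds and 10,000 per chunk, this produces a 1,000-task array (`--array=0-999`), which fits within Slurm's default `MaxArraySize` of 1001; larger arrays need that limit raised or bigger chunks.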

More compute = better results

But ultimately, the biggest benefit of using GCP was being able to screen compounds more thoroughly than we could have with in-house resources. The target protein in this study was highly flexible, and access to massive amounts of compute power let us model the underlying physics of the system more accurately by accounting for protein flexibility. This yielded more active compounds than we would have found without the GCP resources.

The reality is that all proteins are flexible and undergo some form of induced fit upon ligand binding, so accounting for protein flexibility is always important in virtual screening if you want the best results. Most molecular docking programs account only for ligand flexibility, so if the receptor structure is not quite right, active compounds might not fit and will be missed, no matter how good the docking program is. Our INSITE screening platform incorporates protein flexibility in a novel way that can greatly improve the hit rate in virtual screening, although it requires substantial computational resources when screening millions of commercially available compounds.

Example of the dynamic nature of a protein target (Interleukin-18, IL-18)
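The flexibility argument can be illustrated with ensemble docking, a widely used general technique and not the novel INSITE method, which the post does not describe. Each ligand is docked against several receptor conformations (for example, snapshots from an MD simulation) and keeps its best score. This minimal sketch assumes docking energies where lower is better; the ligand names, conformation labels and scores are made up for illustration.

```python
import heapq
from typing import Dict, List, Mapping, Tuple


def best_over_ensemble(scores: Mapping[str, Mapping[str, float]]) -> Dict[str, float]:
    """Collapse per-conformation docking energies to one score per ligand.

    scores[ligand][conformation] is a docking energy (assumed: lower = better).
    Taking the minimum means a ligand only has to fit one conformation well.
    Ligands with no scores are skipped.
    """
    return {lig: min(per_conf.values()) for lig, per_conf in scores.items() if per_conf}


def top_n(best: Mapping[str, float], n: int) -> List[Tuple[str, float]]:
    """Return the n ligands with the lowest (best) scores, best first."""
    return heapq.nsmallest(n, best.items(), key=lambda item: item[1])


# Made-up energies for three ligands against two receptor conformations.
scores = {
    "lig_a": {"conf_1": -6.2, "conf_2": -9.1},  # fits conf_2 much better
    "lig_b": {"conf_1": -7.5, "conf_2": -7.0},
    "lig_c": {"conf_1": -5.0, "conf_2": -5.5},
}

rigid = {lig: s["conf_1"] for lig, s in scores.items()}  # single rigid receptor
print(top_n(rigid, 1))                                  # [('lig_b', -7.5)]
print(top_n(best_over_ensemble(scores), 1))             # [('lig_a', -9.1)]
```

Against the single conformation `conf_1`, `lig_a` ranks second; once `conf_2` is included, it ranks first. Taking the minimum is the simplest way to combine scores (Boltzmann-weighted averages are a common alternative), and the same `top_n` call could pick a shortlist for a follow-up stage such as the MD simulations on the top 1,000 molecules.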

To learn more about the science at Silicon Therapeutics, please visit our website. And if you’re an engineer with expertise in high performance computing, GPUs and/or molecular simulations, be sure to visit our job listings.