We know that DBAs have different preferences for how and where software gets installed, and we believe that making different options available will ultimately empower users. With the exception of Express Edition, Oracle licenses do not grant the right to redistribute the software, which prevents the community from providing a public container registry with usable images. Instead, each user has to build their own image from binaries they download from Oracle themselves, under their own license agreement with Oracle. All of the other containers used by the El Carro operator use open source software and are available on our public registry, so you do not have to build and host them yourself.

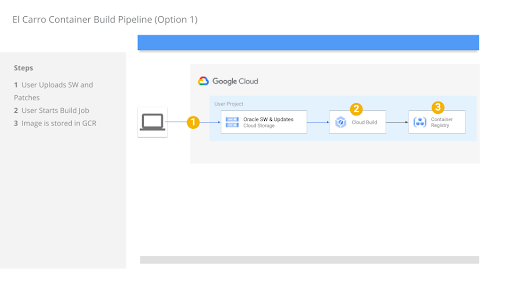

Option 1 - Use El Carro to build your own image with GCP

If you are using GCP, we have an easy way for you to create custom images: upload the Oracle binaries and patches to your own GCS bucket and start a Cloud Build job that creates the container image and uploads it to your own private container registry. A single build script and serverless cloud services take care of the whole process, so you don’t have to worry about building locally and moving images across the internet. In addition to creating seeded images (see below), this method also allows you to build containers that include Oracle patches such as Release Update Revisions (RURs).

Option 2 - Use El Carro to build your own image locally

You can also use the same Dockerfile and build process from Option 1, but without Google Cloud. Download the Oracle installers and patches locally or to a VM used for the builds, then start a script that invokes Docker and builds the image on that machine. Lastly, tag and push the container image to a container registry of your choice. You will have to do a few more steps yourself if you don’t use Cloud Build, but you get the same image and customization options as with Option 1.

Option 3 - Use Oracle build scripts to build your own image

Oracle also maintains an open source repository of scripts to build container images with their database. Maybe you are already using those images with either Docker or Kubernetes, or you prefer Oracle’s own build method over ours. We recently added functionality to El Carro to make sure that the resulting images work just as well as the ones that El Carro can build for you.

Option 4 - Use Oracle’s Container Registry directly

There is a way to avoid building your own images: the Oracle Container Registry (OCR) contains pre-built images that can be used with our Kubernetes operator directly and without modification. Because Oracle’s registry can only be accessed by customers, it is protected with a password. After accepting Oracle’s license conditions, you can either copy images to your own registry or configure OCR as a private repository in Kubernetes.

The Power of Seeding

Aside from the installation, creating the database takes the longest in the initial provisioning process, and it is often a frustrating wait before you can log in and use your database for the first time. To reduce this wait, the first two options allow you to build a pre-seeded database image that already contains a snapshot of a created and configured database. That way the initialization step moves to the container build process, which minimizes the startup time of new database instances. Aside from reducing the wait time, relying on a seeded image (i.e. including an empty database in the image) can provide consistency in config options if the same image is used in multiple deployments.
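Coming back to Option 4 for a moment: configuring OCR as a private repository in Kubernetes typically comes down to creating an image pull secret. The following is a minimal Python sketch of what such a secret could look like, not something taken from El Carro itself. The function name `ocr_pull_secret`, the secret name, the placeholder credentials, and the default registry host `container-registry.oracle.com` are assumptions made for illustration.

```python
import base64
import json


def ocr_pull_secret(name, username, password,
                    server="container-registry.oracle.com"):
    """Build a Kubernetes Secret manifest (as a dict) of type
    kubernetes.io/dockerconfigjson for a registry login.

    The default server is an assumption; adjust it to the registry you use.
    """
    # Docker config stores "username:password" base64-encoded under "auth".
    auth = base64.b64encode(f"{username}:{password}".encode()).decode()
    docker_config = {
        "auths": {
            server: {
                "username": username,
                "password": password,
                "auth": auth,
            }
        }
    }
    # Values under a Secret's "data" field must themselves be base64-encoded.
    encoded = base64.b64encode(json.dumps(docker_config).encode()).decode()
    return {
        "apiVersion": "v1",
        "kind": "Secret",
        "metadata": {"name": name},
        "type": "kubernetes.io/dockerconfigjson",
        "data": {".dockerconfigjson": encoded},
    }


if __name__ == "__main__":
    # Hypothetical name and placeholder credentials, for illustration only.
    secret = ocr_pull_secret("ocr-pull-secret", "user@example.com", "changeme")
    # kubectl accepts JSON manifests as well as YAML.
    print(json.dumps(secret, indent=2))
```

The printed manifest can be saved to a file and applied with `kubectl apply -f`. How the database pods end up referencing the secret depends on your setup; attaching it to the namespace's service account through `imagePullSecrets` is one common Kubernetes approach.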

Conclusion

We believe in an open cloud approach and in empowering users with choice and flexibility. In the context of running Oracle databases on Kubernetes, that means you get to choose your database container images. El Carro provides build scripts that allow you not only to customize containers but also to increase security and robustness by baking patches and updates into the container image. Seeding container images with a database further reduces deployment time by avoiding database creation on first startup, which is especially useful in environments that create many databases, such as automated test pipelines. Other users, however, may feel more comfortable about receiving support when they use Oracle’s pre-built images from Oracle’s registry.

The choice is yours. Just know that El Carro is here to help you modernize your Oracle database workloads with Kubernetes. And if you have any other feature requests or choices that matter to you, let us know by filing an issue on GitHub.

By Bjoern Rost, Product Manager and Ash Gbadamassi, Software Engineer – Cloud Databases