When a person navigates around an unfamiliar building, they take advantage of many visual, spatial and semantic cues to help them efficiently reach their goal. For example, even in an unfamiliar house, if they see a dining area, they can make intelligent predictions about the likely location of the kitchen and lounge areas, and therefore the expected location of common household objects. For robotic agents, taking advantage of semantic cues and statistical regularities in novel buildings is challenging. A typical approach is to implicitly learn what these cues are, and how to use them for navigation tasks, in an end-to-end manner via model-free reinforcement learning. However, navigation cues learned in this way are expensive to learn, hard to inspect, and difficult to re-use in another agent without learning again from scratch.

An appealing alternative for robotic navigation and planning agents is to use a world model to encapsulate rich and meaningful information about their surroundings, which enables an agent to make specific predictions about actionable outcomes within their environment. Such models have seen widespread interest in robotics, simulation, and reinforcement learning with impressive results, including finding the first known solution for a simulated 2D car racing task, and achieving human-level performance in Atari games. However, game environments are still relatively simple compared to the complexity and diversity of real-world environments.

In “Pathdreamer: A World Model for Indoor Navigation”, published at ICCV 2021, we present a world model that generates high-resolution 360º visual observations of areas of a building unseen by an agent, using only limited seed observations and a proposed navigation trajectory. As illustrated in the video below, the Pathdreamer model can synthesize an immersive scene from a single viewpoint, predicting what an agent might see if it moved to a new viewpoint or even a completely unseen area, such as around a corner. Beyond potential applications in video editing and bringing photos to life, solving this task promises to codify knowledge about human environments to benefit robotic agents navigating in the real world. For example, a robot tasked with finding a particular room or object in an unfamiliar building could perform simulations using the world model to identify likely locations before physically searching anywhere. World models such as Pathdreamer can also be used to increase the amount of training data for agents, by training agents in the model.

|

| Provided with just a single observation (RGB, depth, and segmentation) and a proposed navigation trajectory as input, Pathdreamer synthesizes high resolution 360º observations up to 6-7 meters away from the original location, including around corners. For more results, please refer to the full video. |

How Does Pathdreamer Work?

Pathdreamer takes as input a sequence of one or more previous observations, and generates predictions for a trajectory of future locations, which may be provided up front or iteratively by the agent interacting with the returned observations. Both inputs and predictions consist of RGB, semantic segmentation, and depth images. Internally, Pathdreamer uses a 3D point cloud to represent surfaces in the environment. Points in the cloud are labelled with both their RGB color value and their semantic segmentation class, such as wall, chair or table.

To predict visual observations in a new location, the point cloud is first re-projected into 2D at the new location to provide ‘guidance’ images, from which Pathdreamer generates realistic high-resolution RGB, semantic segmentation and depth. As the model ‘moves’, new observations (either real or predicted) are accumulated in the point cloud. One advantage of using a point cloud for memory is temporal consistency — revisited regions are rendered in a consistent manner to previous observations.

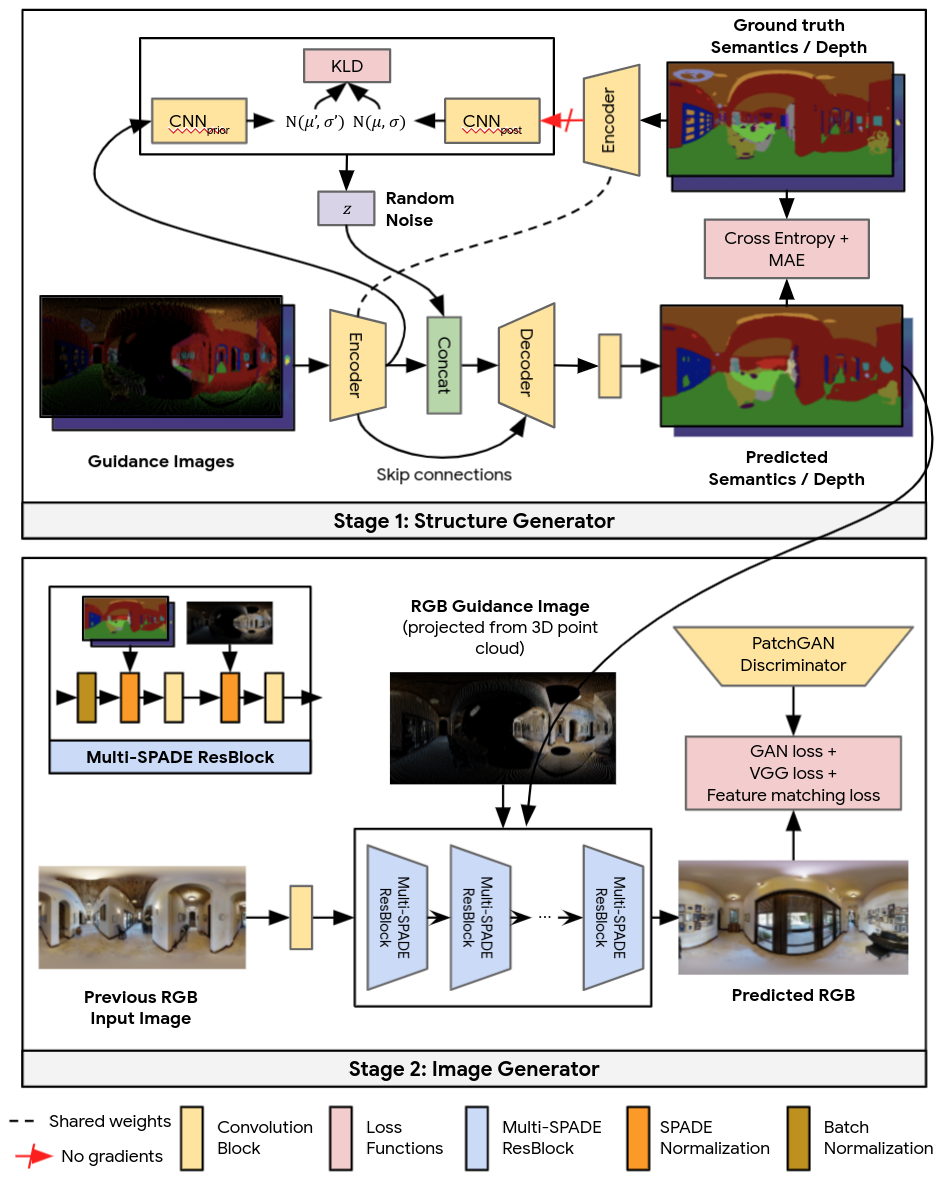

To convert guidance images into plausible, realistic outputs Pathdreamer operates in two stages: the first stage, the structure generator, creates segmentation and depth images, and the second stage, the image generator, renders these into RGB outputs. Conceptually, the first stage provides a plausible high-level semantic representation of the scene, and the second stage renders this into a realistic color image. Both stages are based on convolutional neural networks.

Diverse Generation Results

In regions of high uncertainty, such as an area predicted to be around a corner or in an unseen room, many different scenes are possible. Incorporating ideas from stochastic video generation, the structure generator in Pathdreamer is conditioned on a noise variable, which represents the stochastic information about the next location that is not captured in the guidance images. By sampling multiple noise variables, Pathdreamer can synthesize diverse scenes, allowing an agent to sample multiple plausible outcomes for a given trajectory. These diverse outputs are reflected not only in the first stage outputs (semantic segmentation and depth images), but in the generated RGB images as well.

Pathdreamer is trained with images and 3D environment reconstructions from Matterport3D, and is capable of synthesizing realistic images as well as continuous video sequences. Because the output imagery is high-resolution and 360º, it can be readily converted for use by existing navigation agents for any camera field of view. For more details and to try out Pathdreamer yourself, we recommend taking a look at our open source code.

Application to Visual Navigation Tasks

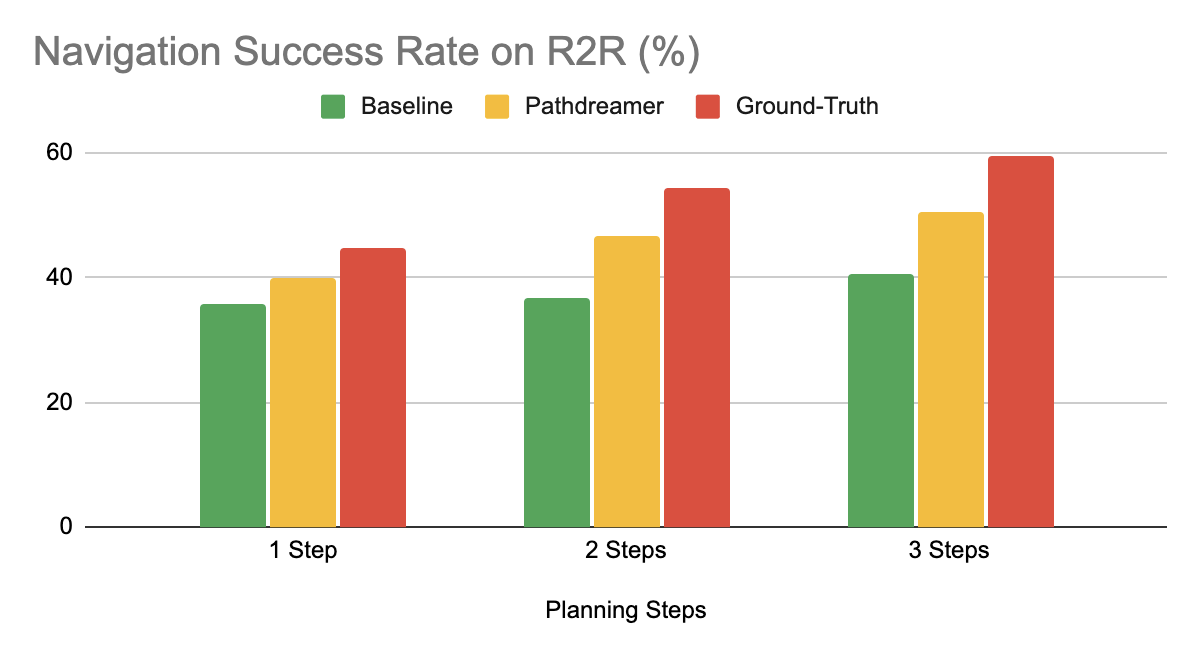

As a visual world model, Pathdreamer shows strong potential to improve performance on downstream tasks. To demonstrate this, we apply Pathdreamer to the task of Vision-and-Language Navigation (VLN), in which an embodied agent must follow a natural language instruction to navigate to a location in a realistic 3D environment. Using the Room-to-Room (R2R) dataset, we conduct an experiment in which an instruction-following agent plans ahead by simulating many possible navigable trajectory through the environment, ranking each against the navigation instructions, and choosing the best ranked trajectory to execute. Three settings are considered. In the Ground-Truth setting, the agent plans by interacting with the actual environment, i.e. by moving. In the Baseline setting, the agent plans ahead without moving by interacting with a navigation graph that encodes the navigable routes within the building, but does not provide any visual observations. In the Pathdreamer setting, the agent plans ahead without moving by interacting with the navigation graph and also receives corresponding visual observations generated by Pathdreamer.

When planning ahead for three steps (approximately 6m), in the Pathdreamer setting the VLN agent achieves a navigation success rate of 50.4%, significantly higher than the 40.6% success rate in the Baseline setting without Pathdreamer. This suggests that Pathdreamer encodes useful and accessible visual, spatial and semantic knowledge about real-world indoor environments. As an upper bound illustrating the performance of a perfect world model, under the Ground-Truth setting (planning by moving) the agent’s success rate is 59%, although we note that this setting requires the agent to expend significant time and resources to physically explore many trajectories, which would likely be prohibitively costly in a real-world setting.

Conclusions and Future Work

These results showcase the promise of using world models such as Pathdreamer for complicated embodied navigation tasks. We hope that Pathdreamer will help unlock model-based approaches to challenging embodied navigation tasks such as navigating to specified objects and VLN.

Applying Pathdreamer to other embodied navigation tasks such as Object-Nav, continuous VLN, and street-level navigation are natural directions for future work. We also envision further research on improved architecture and modeling directions for the Pathdreamer model, as well as testing it on more diverse datasets, including but not limited to outdoor environments. To explore Pathdreamer in more detail, please visit our GitHub repository.

Acknowledgements

This project is a collaboration with Jason Baldridge, Honglak Lee, and Yinfei Yang. We thank Austin Waters, Noah Snavely, Suhani Vora, Harsh Agrawal, David Ha, and others who provided feedback throughout the project. We are also grateful for general support from Google Research teams. Finally, we thank Tom Small for creating the animation in the third figure.