Keeping Google Play safe for users and developers remains a top priority for Google. Google Play Protect continues to scan billions of installed apps each day across billions of Android devices to keep users safe from threats like malware and unwanted software.

In 2022, we prevented 1.43 million policy-violating apps from being published on Google Play in part due to new and improved security features and policy enhancements — in combination with our continuous investments in machine learning systems and app review processes. We also continued to combat malicious developers and fraud rings, banning 173K bad accounts, and preventing over $2 billion in fraudulent and abusive transactions. We’ve raised the bar for new developers to join the Play ecosystem with phone, email, and other identity verification methods, which contributed to a reduction in accounts used to publish violative apps. We continued to partner with SDK providers to limit sensitive data access and sharing, enhancing the privacy posture for over one million apps on Google Play.

With strengthened Android platform protections and policies, and developer outreach and education, we prevented about 500K submitted apps from unnecessarily accessing sensitive permissions over the past 3 years.

Developer Support and Collaboration to Help Keep Apps SafeAs the Android ecosystem expands, it’s critical for us to work closely with the developer community to ensure they have the tools, knowledge, and support to build secure and trustworthy apps that respect user data security and privacy.

In 2022, the App Security Improvements program helped developers fix ~500K security weaknesses affecting ~300K apps with a combined install base of approximately 250B installs. We also launched the Google Play SDK Index to help developers evaluate an SDK’s reliability and safety and make informed decisions about whether an SDK is right for their business and their users. We will keep working closely with SDK providers to improve app and SDK safety, limit how user data is shared, and improve lines of communication with app developers.

We also recently launched new features and resources to give developers a better policy experience. We’ve expanded our Helpline pilot to give more developers direct policy phone support. And we piloted the Google Play Developer Community so more developers can discuss policy questions and exchange best practices on how to build safe apps.

More Stringent App Requirements and Guidelines

In addition to the Google Play features and policies that are central to providing a safe experience for users, each Android OS update brings privacy, security, and user experience improvements. To ensure users realize the full benefits of these advances — and to maintain the trusted experience people expect on Google Play — we collaborate with developers to ensure their apps work seamlessly on newer Android versions. With the new Target API Level policy, we’re strengthening user security and privacy by protecting users from installing apps that may not have the full set of privacy and security features offered by the latest versions of Android.

This past year, we rolled out new license requirements for personal loan apps in key geographies – Kenya, Nigeria, and Philippines – with more stringent requirements for loan facilitator apps in India to combat fraud. We also clarified that our impersonation policy prohibits the impersonation of an entity or organization – helping to give users more peace of mind that they are downloading the app they’re looking for.

We are also working to help fight fraudulent and malicious ads on Google Play. With an updated ads policy for developers, we are providing key guidelines that will improve the in-app user experience and prohibit unexpected full screen interstitial ads. This update is inspired by the Mobile Apps Experiences - Better Ads Standards.

Improving Data Transparency, Security Controls and Tools

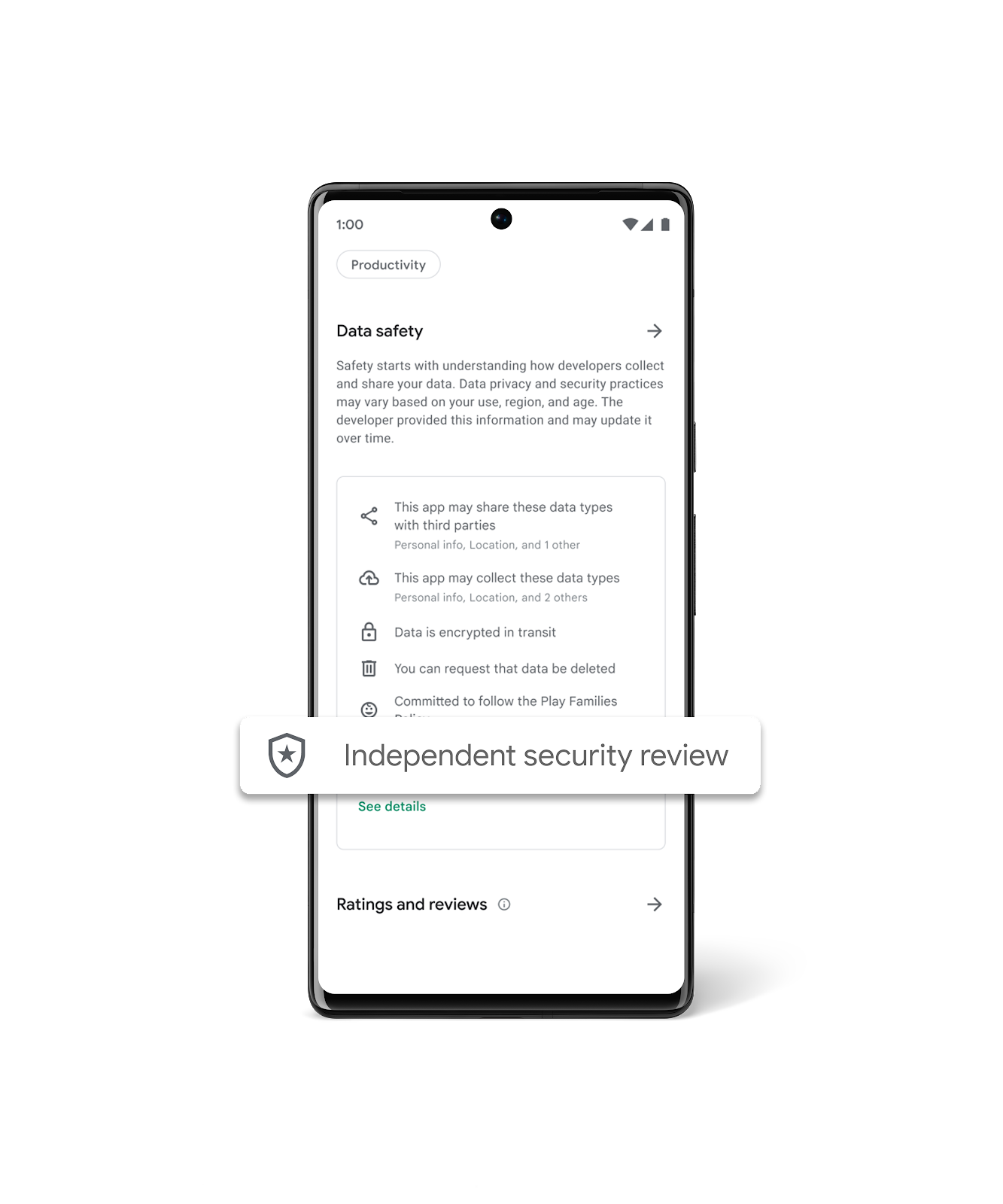

We launched the Data safety section in Google Play last year to give users more clarity on how their app data is being collected, shared, and protected. We’re excited to work with developers on enhancing the Data safety section to share their data collection, sharing, and safety practices with their users.

In 2022, the Google Play Store was the first commercial app store to recognize and display a badge for any app that has completed an independent security review through App Defense Alliance’s Mobile App Security Assessment (MASA). The badge is displayed within an app’s respective Data Safety section. MASA leverages OWASP’s Mobile Application Security Verification Standard, which is the most widely adopted set of security requirements for mobile applications. We’re seeing strong developer interest in MASA with widely used apps across major app categories, e.g., Roblox, Uber, PayPal, Threema, YouTube, and many more.

This past year, we also expanded the App Defense Alliance, an alliance of partners with a mission to protect Android users from bad apps through shared intelligence and coordinated detection. McAfee and Trend Micro joined Google, ESET, Lookout, and Zimperium, to reduce the risk of app-based malware and better protect Android users.

We’ve also continued to enhance protections for developers and their apps, such as hardening Play Integrity API with KeyMint and Remote Key Provisioning.

Bringing Continuous Security and Privacy Enhancements to Pixel Users

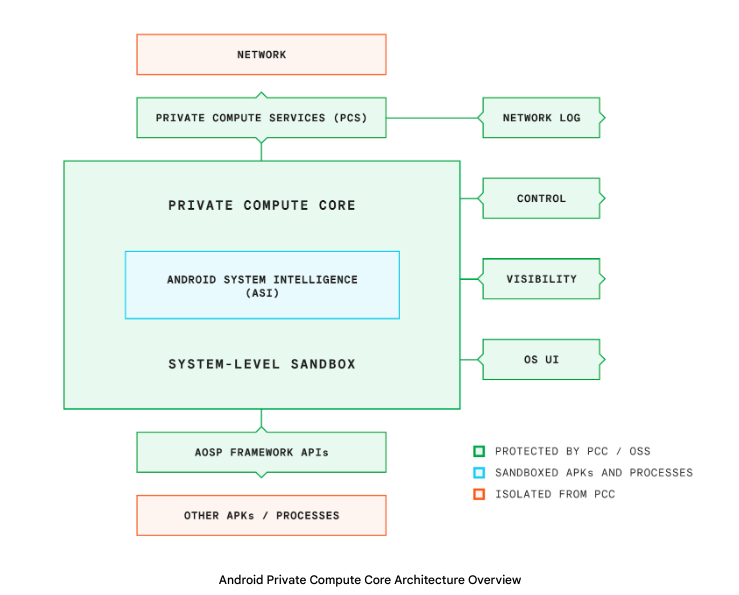

For Pixel users, we added more powerful features to help keep our users safe. The new security and privacy settings have been launched to all Pixel devices running Android 13, improving the security and privacy posture for millions of users’ around the world every month. Private Compute Core also allows Pixel phones to detect harmful apps in a privacy preserving way.

Looking Ahead

We remain committed to keeping Google Play and our ecosystem of users and developers safe, and we look forward to many exciting security and safety announcements in 2023.