By Luke Stone and Jian Ma

Editor’s note: We’re excited to bring you this blog post from the team of Google experts who wrote the book (really!) on Site Reliability Engineering (SRE) a few years back. The second edition of the book is underway, and this post delves into one area of SRE that’s relevant to many IT teams today: troubleshooting in the age of cloud computing. This is part two of two. Check out part one on writing better issue reports for cloud provider support.

Troubleshooting computer systems is an act as old as computers themselves. Some might even call it an art. The cloud computing paradigm entails a fundamental change to how IT teams conduct troubleshooting.

Successful IT troubleshooting doesn’t depend only on luck or experience, but is a deliberate process that can be taught. When you’re using cloud-based infrastructure, you’re often troubleshooting via a cloud provider’s help desk, adding another layer to helping users. Because of this shift away from the traditional IT team model, your communications with the provider are essential. (See part one for more on putting together an effective issue report to improve troubleshooting from the start.)

Once you’ve communicated the issue to your provider, you’ll be working with the provider’s support team to get the issue fixed.

The essentials of cloud troubleshooting

Those diagnosing a technical problem with cloud infrastructure are seeking possible explanations (hypotheses) and evidence that explains the problem. In the short term, they look for changes in the system that roughly correlate with the problem, and consider rolling back, as a first step to mitigate the problem and stop the bleeding. The longer-term goal is to identify and fix the root cause so the problem will not recur.

From the site reliability engineering (SRE) perspective, the general approach for troubleshooting is as follows:

- Triage: Mitigate the impact if possible

- Examine: Gather observations and share them

- Diagnose: Create a hypothesis that explains the observations

- Test and treat:

  - Identify tests that may prove or disprove the hypothesis

  - Execute the tests and agree on the meaning of the result

  - Move on to the next hypothesis; repeat until solved
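The test-and-treat loop above can be sketched in a few lines of Python. This is only an illustration: `run_hypotheses` and the example hypotheses are hypothetical names, and in a real investigation each test is a manual or scripted check rather than a one-line function.

```python
def run_hypotheses(hypotheses):
    """Try each (description, test) pair in order.

    Each test is a callable that returns True when the evidence supports
    the hypothesis. Returns the confirmed description (or None) together
    with the list of hypotheses that were ruled out along the way.
    """
    ruled_out = []
    for description, test in hypotheses:
        if test():
            return description, ruled_out
        # Record what was ruled out so nobody repeats the same test later.
        ruled_out.append(description)
    return None, ruled_out


confirmed, ruled_out = run_hypotheses([
    ("recent deploy broke the handler", lambda: False),
    ("TLS certificate expired", lambda: True),
])
print("confirmed:", confirmed)
print("ruled out:", ruled_out)
```

Keeping the ruled-out list is the useful part: it is the same information you would later share with your provider.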

When you’re working with a cloud provider on troubleshooting an issue, there are parts of the process you’re unable to control. But you can follow the steps on your end. Here’s what you can do when submitting a report to your cloud provider support team.

1. Communicate any troubleshooting you've already done

By the time you open an issue report, you've probably done some troubleshooting already. You may have checked the provider’s status page, for example. Share the steps you've taken and any key findings. Keep a timeline and log book of what you have done and share it with the provider. This means that you should start keeping a log book as soon as possible, from the start of detection of your problem. Keep in mind that while cloud providers may have telemetry that provides real-time omniscient awareness of the state of their infrastructure, the dependencies that result from your particular implementation may be less obvious. By design, your particular use of cloud resources is proprietary and private, so your troubleshooting vantage point is vital.
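If you don't already have a tool for keeping a log book, even a small script will do. The following is a minimal sketch, assuming an in-memory log and UTC timestamps; the `LogBook` class and its method names are hypothetical, not part of any provider's tooling.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone


@dataclass
class LogBook:
    """In-memory troubleshooting log; every entry carries a timestamp."""
    entries: list = field(default_factory=list)

    def note(self, text, when=None):
        # Default to the current time in UTC so readers in any time zone
        # can line entries up against the provider's own timeline.
        when = when or datetime.now(timezone.utc)
        self.entries.append((when, text))

    def render(self):
        # Oldest entry first, one line per entry, ISO 8601 timestamps.
        ordered = sorted(self.entries, key=lambda entry: entry[0])
        return "\n".join(
            f"{when.isoformat(timespec='seconds')}  {text}"
            for when, text in ordered
        )


book = LogBook()
book.note("First customer complaint received")
book.note("Checked provider status page: no incidents listed")
print(book.render())
```

The rendered text can be pasted directly into an issue report.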

If you think you have a diagnosis, explain how you came to that conclusion. If you think others can reproduce the issue, include the steps to do so. A reproducible test in an issue report usually leads to the fastest resolution.

You may have an idea or guess about what's causing the problem. Be careful to avoid confirmation bias—looking for evidence to support your guess without considering evidence to the contrary.

2. Be specific and explicit about the issue

If you've ever played the telephone game, in which players whisper a message from person to person, you've seen how human translation and interpretation can lead to communication gaps. Rather than describing information in your provider communications, try to share it. Doing so reduces the chance that your reader will misinterpret what you're saying and can help speed up troubleshooting. Don’t assume that your provider has access to all of this information; customer privacy means that they may not, by design.

For example:

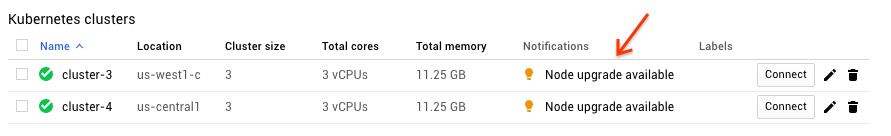

- Use a screenshot to show exactly what you see

- For web-based interfaces, provide a HAR (HTTP Archive) file

- Attach information like tcpdump output, log snippets, and example stack traces
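A HAR file is JSON, so you can inspect it yourself before attaching it. The sketch below assumes the standard HAR layout (a top-level `log` object with an `entries` list, each entry holding `request`, `response`, and `time` fields); the function name `failed_requests` is hypothetical.

```python
import json


def failed_requests(har_text, min_status=400):
    """Return (status, method, url, elapsed_ms) for error responses."""
    har = json.loads(har_text)
    rows = []
    for entry in har["log"]["entries"]:
        status = entry["response"]["status"]
        if status >= min_status:
            rows.append((
                status,
                entry["request"]["method"],
                entry["request"]["url"],
                entry.get("time"),  # total elapsed time in milliseconds
            ))
    return rows


sample = json.dumps({"log": {"entries": [
    {"time": 12.5,
     "request": {"method": "GET", "url": "https://example.com/ok"},
     "response": {"status": 200}},
    {"time": 850.0,
     "request": {"method": "POST", "url": "https://example.com/api"},
     "response": {"status": 503}},
]}})
for row in failed_requests(sample):
    print(row)
```

HAR files can include cookies and authorization headers, so review the file and remove sensitive values before sharing it.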

3. Report production outages quickly

An issue is considered to be a production outage if your application has stopped serving traffic to users or is experiencing similar business-critical impact. Report production outages to your cloud provider support as soon as possible. Issues that block a small number of developers in a developer test environment are normally not considered production outages, so they should be reported at lower priorities.

Normally, when cloud provider support is alerted about a production outage, they quickly triage the situation with the following steps:

- Immediately check for known issues affecting the infrastructure.

- Confirm the nature of the issue.

- Establish communication channels.

Typically, you can expect a quick response with a brief message, which might contain:

- Whether or not there is a known issue affecting multiple customers

- An acknowledgement that they can observe the issue you've reported or a request for more details

- How they intend to communicate (for example, phone, Skype, or issue report)

It’s important to quickly create an issue report that includes the four critical details (described in part one) and then begin deeper troubleshooting on your side of the equation. If your organization has a defined incident management process (see Managing Incidents), escalating to your cloud provider should be among your initial steps.

4. Report networking issues with specificity

Most cloud providers’ networks are huge and complex, composed of many technologies and teams. It's important to quickly identify a networking-specific problem as such and engage with the team that can repair it.

Many networking issues have similar symptoms, like "can't connect to server," at a high level. This level of detail is typically too generic to be useful in identifying the root cause, so you need to provide more diagnostic information. Network issues relate to connectivity, which always involves at least two specific points: source and destination. Always include information about these points when reporting network issues.

To structure your issue report, use the conceptual tool of a packet flow diagram:

- Describe the important hops that a packet takes along a path from source to destination, along with any significant transformations (e.g., NAT) along the way.

- Start by identifying the affected network endpoints by Internet IP address or by RFC 1918 private address, plus an ASN for the network.

- Note anything meaningful about the endpoints, such as who controls them and whether they are associated with a DNS hostname.

- Note any intermediate encapsulation and/or indirection. For example: VPN tunneling, proxies or NAT gateways.

- Note any intermediate filtering, like firewalls, CDN or WAF.
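One way to keep a packet flow description consistent is to record each hop as structured data and render it as text. This is a minimal sketch: the `Hop` class, the function names, and the example addresses (taken from documentation ranges) are all hypothetical.

```python
import ipaddress
from dataclasses import dataclass

# The three private ranges defined by RFC 1918.
RFC1918_NETWORKS = [
    ipaddress.ip_network(cidr)
    for cidr in ("10.0.0.0/8", "172.16.0.0/12", "192.168.0.0/16")
]


@dataclass
class Hop:
    name: str
    address: str
    note: str = ""  # owner, DNS name, NAT, firewall, and so on


def address_scope(address):
    ip = ipaddress.ip_address(address)
    if any(ip in network for network in RFC1918_NETWORKS):
        return "RFC 1918 private"
    return "not RFC 1918"


def describe_flow(hops):
    """Render hops in order from source to destination, one per line."""
    lines = []
    for number, hop in enumerate(hops, start=1):
        line = f"{number}. {hop.name}: {hop.address} ({address_scope(hop.address)})"
        if hop.note:
            line += f"; {hop.note}"
        lines.append(line)
    return "\n".join(lines)


print(describe_flow([
    Hop("client VM", "10.128.0.5", "controlled by us"),
    Hop("NAT gateway", "198.51.100.7", "source NAT applied here"),
    Hop("API endpoint", "203.0.113.10", "behind the provider's load balancer"),
]))
```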

Many problems that manifest as high latency or intermittent packet loss will require a path analysis and/or a packet capture for diagnosis. A path analysis is a list of all hops that packets traverse, produced by a tool such as MTR or tcptraceroute. A packet capture (a.k.a. pcap, derived from the name of the library libpcap) is an observation of real network traffic. It's important to take a packet capture at both endpoints at the same time, which can be tricky. Practice with the necessary tools (for example, tcpdump or Wireshark) and make sure they are installed before you need them.
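To make simultaneous captures easier, it can help to prepare matching tcpdump commands for both endpoints ahead of time. The sketch below only builds the command lines and does not run them; the interface name, addresses, port, and file names are placeholder assumptions, and tcpdump usually needs elevated privileges to capture.

```python
import shlex


def capture_command(interface, peer_ip, port, out_file):
    """Build a tcpdump command that records traffic to or from one peer."""
    return [
        "tcpdump",
        "-i", interface,   # interface to listen on
        "-n",              # do not resolve addresses to names
        "-w", out_file,    # write raw packets to a file for later analysis
        "host", peer_ip, "and", "port", str(port),
    ]


# One command per endpoint, each filtering on the other side's address.
client_cmd = capture_command("eth0", "203.0.113.10", 443, "client.pcap")
server_cmd = capture_command("eth0", "198.51.100.7", 443, "server.pcap")
print(shlex.join(client_cmd))
print(shlex.join(server_cmd))
```

Start both captures before reproducing the problem, and note the start times in your log book so the two files can be aligned.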

5. Escalate when appropriate

If circumstances change, you may need to escalate the urgency of an issue so it receives attention quickly. Take this step if business impact increases, if an issue is stuck without progress after a lot of back-and-forth with support, or if some other factor calls for quicker resolution.

The most explicit way to escalate an issue is to change the priority of the issue report (for example, from P3 to P2). Provide comments about why you need to escalate so support can respond appropriately.

6. Create a summary document for long-running or difficult issues

Issue state and relevant information change over time as new facts come to light and hypotheses are ruled out. In the meantime, new people join the investigation. Help communicate relevant, up-to-date information by collecting information in a summary document.

A good summary document has the following elements:

- The latest state summarized at the top

- Links to all relevant issue reports and internal tracking bugs

- A list of hypotheses which are potentially true, and hypotheses that have been ruled out already. When you start investigating a particular hypothesis, note that you are doing so, and mention the tests or tools that you intend to use. Often, you can get good advice or prevent duplicate work.

SAMPLE summary document format:

$TIMESTAMP

<Current customer impact> <Working theory and actions being taken> <Next steps>

13:00:00

Customer impact has been mitigated and resolved. Our networking provider was throttling our traffic because we forgot to pay our bill last month. Next step is to be nicer to our finance team.

12:00:00

More than 100 customers are actively complaining about not being able to reach our service. Our networking provider is throttling customer traffic to one of our load balancers. The response team is actively working with our networking provider’s tier 1 support to understand why and how this happened.

11:00:00

We have now received 100 complaints from 50 customers from four different geos that they cannot consistently reach our API at api.acme.com. Our engineers currently believe that an upstream networking issue is causing this. Next steps are to reach out to our networking provider to see if there are any upstream issues.

10:00:00

We have received five complaints from five customers that they are unable to reach api.acme.com. Our engineers are looking into the issue.
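If you keep updates as structured records, a short script can render them in the sample format above, newest first. This is a sketch under stated assumptions: the `Update` class and `render_summary` function are hypothetical, and timestamps are zero-padded strings from the same day so that string order matches time order.

```python
from dataclasses import dataclass


@dataclass
class Update:
    timestamp: str   # for example "13:00:00"; zero-padded, same day
    impact: str      # current customer impact
    theory: str      # working theory and actions being taken
    next_steps: str


def render_summary(updates):
    """Render updates newest first so the latest state is at the top."""
    ordered = sorted(updates, key=lambda u: u.timestamp, reverse=True)
    return "\n\n".join(
        f"{u.timestamp}\n\n{u.impact} {u.theory} {u.next_steps}"
        for u in ordered
    )


print(render_summary([
    Update("10:00:00",
           "Five customers cannot reach api.acme.com.",
           "No working theory yet.",
           "Engineers are looking into the issue."),
    Update("11:00:00",
           "50 customers in four geos cannot consistently reach the API.",
           "We suspect an upstream networking issue.",
           "Next step is to contact our networking provider."),
]))
```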

Try to keep each issue report focused on a single issue. Don't reopen an issue report to bring up a new issue, even if it's related to the original issue. Do reference similar issues in your new report to help your provider recognize patterns from systemic root causes.

Keep your communication skills sharp

Communicating highly detailed technical information in a clear and actionable manner can be difficult. Doing so requires focus and specific skills. This task is particularly challenging in stressful situations, because our biological response to stress works against the need for clear cognitive reasoning. The following techniques help make communication easier for everyone.

Help reduce cognitive load by writing a detailed issue report

Many issue reports require the reader to make inferences or calculations. This introduces cognitive load, which decreases the mental energy available for solving the technical problem.

When writing an issue report, be as specific and detailed as possible. While this attention to detail requires more time on the part of the writer, consider that an issue report is written once but read many times by many people. People can solve the problem faster together when equipped with comprehensive information. Avoid acronyms and internal company code names. Also, be mindful of protecting customer privacy when disclosing any information to any third party.

Use narrative techniques

Once upon a time, in a land far, far away...

Humans are very good at absorbing information in the form of stories, so you can get your point across quite effectively this way. Start with the context: What was happening when you first observed the problem? What potential fixes did you try? Who are the characters involved, and why does the issue matter to them?

Include visuals

Illustrate your issue report with any supporting visuals you have available, such as formatted text, charts, and screenshots.

Text formatting

Formatted text like log lines, code excerpts or MySQL results often become illegible when sent through plain-text emails. Add explicit markers (for example, <<<<<< at the end of the line) to help direct attention to important sections. You can use footnotes to point to long-form URLs, or use a URL shortener.
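Adding markers by hand is error-prone for long excerpts, so a few lines of Python can do it for you. The helper below is a hypothetical sketch: it appends the marker to every line that contains a given substring and leaves other lines untouched.

```python
def mark_lines(text, needle, marker="<<<<<<"):
    """Append a marker to each line that contains the needle."""
    return "\n".join(
        f"{line}  {marker}" if needle in line else line
        for line in text.splitlines()
    )


log_excerpt = "GET /health 200\nGET /api 503\nGET /health 200"
print(mark_lines(log_excerpt, " 503"))
```

Because the marker is plain text, it survives plain-text email where color or bold would be lost.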

Use bullet points to format lists, and to call out important details like instance names. Use numbered lists to enumerate series of steps.

Charts

Charts are very useful for understanding time-series data. When you’re sending charts with an issue report, keep these best practices in mind:

- Take a screenshot, including title and axis labels. For absolute values, specify units (requests per minute, errors per second, etc.).

- Annotate the screenshot with arrows or circles to call out important points.

- Briefly describe what the chart is measuring.

- Briefly describe how the chart normally looks.

- In a few sentences, describe your interpretation of the chart and why it is relevant to the problem.

Avoid the following antipatterns:

- The Y-axis represents a specific error (e.g., exceptions in my-handler) and has no clear relationship to the problem under investigation (e.g., high persistence-layer latency). To remedy this situation, explain why the graph is relevant to the problem.

- The Y-axis is an absolute number (e.g., 1M per minute) that provides no context about the relative impact.

- The X-axis doesn't have a time zone.

- The Y-axis is not zero-based. This can make minor changes in the Y value seem very large.

- Axis labels are missing or cut off.
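To avoid the absolute-number antipattern above, state the value relative to total traffic when you describe a chart. A minimal sketch, using a hypothetical `relative_impact` helper and made-up figures:

```python
def relative_impact(errors_per_min, requests_per_min):
    """Describe an absolute error rate as a share of total traffic."""
    if requests_per_min <= 0:
        raise ValueError("requests_per_min must be positive")
    percent = 100.0 * errors_per_min / requests_per_min
    return (
        f"{errors_per_min:,} errors per minute out of "
        f"{requests_per_min:,} requests per minute ({percent:.2f}%)"
    )


print(relative_impact(1_000_000, 50_000_000))
```

A caption like this tells the reader at a glance whether 1M errors per minute is a rounding error or most of your traffic.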

Well-crafted issue reports, along with strong communication with your cloud provider, can shorten the time it takes to resolve an issue. The cloud computing model has drastically changed the way that IT teams troubleshoot computer systems. Technical savvy is no longer the only skill needed for effective troubleshooting; you must also be able to communicate clearly and efficiently with cloud providers. While the reality of your deployment may be unique, nuanced, and complex, these building blocks can help you navigate this territory.

Related content:

SLOs, SLIs, SLAs, oh my - CRE life lessons

SRE vs. DevOps: competing standards or close friends?

Introducing Google Customer Reliability Engineering

Special thanks to Ralph Pearson, J.C. van Winkel, John Lowry, Dermot Duffy and Dave Rensin

