The jury’s still out on whether that rectangle in the Google Maps image identified by 15-year-old Canadian William Gadoury is a lost Mayan city . . . or merely an abandoned field.

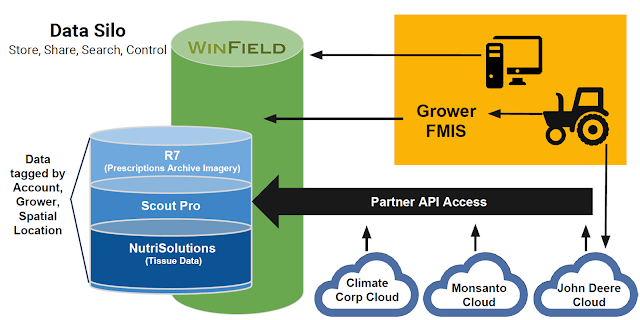

Meanwhile, Google Cloud Platform customers have no doubts about the value of geospatial data. This week, Land O’Lakes announced WinField Data Silo, a new tool that runs on Google Compute Engine and Google Cloud Storage and integrates with the Google Maps API to show users real-time agronomic data stored in the system. Land O’Lakes cited the fact that those users can be anywhere, whether sitting at their desks or at the console of a combine harvester, as a unique differentiator for GCP.

Speaking of unique, cloud architect Janakiram MSV lists on Forbes five things about GCE that no other IaaS provider can match. First on his list is Google Compute Engine’s sustained use discount. No argument from us. The longer a VM runs on GCE in a given month, the greater the discount, up to 30% for instances that run the entire month. Further, customers don’t need to commit to an instance up front, and Google applies the discounts to their bill automatically.
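Where does the 30% come from? The discount is incremental: each successive slice of the month is billed at a lower rate than the one before it. The sketch below assumes the tier schedule Google published for predefined N1 machine types around this time (successive quarters of the month billed at 100%, 80%, 60%, and 40% of the base rate). Actual tiers vary by machine type and have changed since, so treat the numbers as illustrative; `TIERS` and `effective_discount` are hypothetical names, not part of any Google API.

```python
# Assumed sustained use tier schedule (illustrative, based on the published
# schedule for predefined N1 machine types at the time): each entry is
# (upper bound of the tier as a fraction of the month, multiplier of base rate).
TIERS = [
    (0.25, 1.00),  # first 25% of the month: full price
    (0.50, 0.80),  # 25-50% of the month: 80% of base rate
    (0.75, 0.60),  # 50-75% of the month: 60% of base rate
    (1.00, 0.40),  # 75-100% of the month: 40% of base rate
]


def effective_discount(fraction_of_month: float) -> float:
    """Return the overall discount, relative to the undiscounted price, for a
    VM that runs for the given fraction of a month (0 < fraction <= 1)."""
    if not 0 < fraction_of_month <= 1:
        raise ValueError("fraction_of_month must be in (0, 1]")
    billed = 0.0  # usage weighted by each tier's price multiplier
    lower = 0.0   # lower bound of the current tier
    for upper, multiplier in TIERS:
        if fraction_of_month <= lower:
            break  # usage doesn't reach this tier
        usage_in_tier = min(fraction_of_month, upper) - lower
        billed += usage_in_tier * multiplier
        lower = upper
    return 1 - billed / fraction_of_month


if __name__ == "__main__":
    for fraction in (0.25, 0.5, 0.75, 1.0):
        print(f"{fraction:.0%} of month -> {effective_discount(fraction):.0%} discount")
```

Under these assumed tiers, a VM running a quarter of the month gets no discount, while one running all month pays 70% of list price overall, which is the 30% ceiling. Because the discount is computed from usage after the fact, there is nothing to reserve in advance.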

No argument from GCP customer Geofeedia either. According to the market intelligence provider, reserved instances have no place in provisioning cloud compute resources. “In the world of agile software, making a one, let alone a three year prediction about your hardware needs is extremely difficult,” writes Charlie Moad, Geofeedia’s director of production engineering. Moad also gives shout-outs to GCP networking, firewall rules, and GCP’s project-centric approach to building multi-region applications.

That’s it for this week on Google Cloud Platform. If you happen to be at Google I/O 2016 next week, check out the cloud sessions. And be sure to come back next Friday for the latest on the lost Mayan city/abandoned field debate.