The Chrome Media team has created Draco, an open source compression library to improve the storage and transmission of 3D graphics. Draco can be used to compress meshes and point-cloud data. It also supports compressing points, connectivity information, texture coordinates, color information, normals and any other generic attributes associated with geometry.

With Draco, applications using 3D graphics can be significantly smaller without compromising visual fidelity. For users this means apps can now be downloaded faster, 3D graphics in the browser can load quicker, and VR and AR scenes can now be transmitted with a fraction of the bandwidth, rendered quickly and look fantastic.

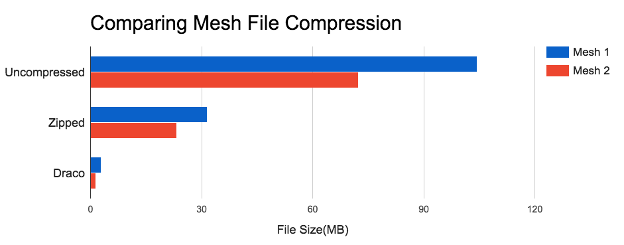

|

| Sample Draco compression ratios and encode/decode performance* |

Transmitting 3D graphics for web-based applications is significantly faster using Draco’s JavaScript decoder, which can be tied to a 3D web viewer. The following video shows how efficient transmitting and decoding 3D objects in the browser can be - even over poor network connections.

Video and audio compression have shaped the internet over the past 10 years with streaming video and music on demand. With the emergence of VR and AR, on the web and on mobile (and the increasing proliferation of sensors like LIDAR) we will soon be swimming in a sea of geometric data. Compression technologies, like Draco, will play a critical role in ensuring these experiences are fast and accessible to anyone with an internet connection. More exciting developments are in store for Draco, including support for creating multiple levels of detail from a single model to further improve the speed of loading meshes.

We look forward to seeing what people do with Draco now that it's open source. Check out the code on GitHub and let us know what you think. Also available is a JavaScript decoder with examples on how to incorporate Draco into the three.js 3D viewer.

By Jamieson Brettle and Frank Galligan, Chrome Media Team

* Specifications: Tests ran with textures and positions quantized at 14-bit precision, normal vectors at 7-bit precision. Ran on a single-core of a 2013 MacBook Pro. JavaScript decoded using Chrome 54 on Mac OS X.