Google is constantly working to improve our systems that protect users from Potentially Harmful Applications (PHAs). Usually, PHA authors attempt to install their harmful apps on as many devices as possible. However, a few PHA authors spend substantial effort, time, and money to create and install their harmful app on a small number of devices to achieve a certain goal.

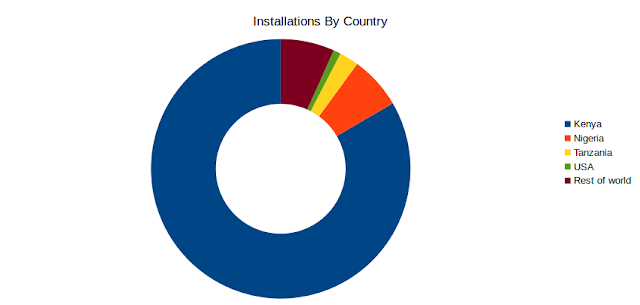

This blog post covers Tizi, a backdoor family with some rooting capabilities that was used in a targeted attack against devices in African countries, specifically Kenya, Nigeria, and Tanzania. We'll talk about how the Google Play Protect and Threat Analysis teams worked together to detect and investigate Tizi-infected apps, then remove them from Android devices and block further installs.

What is Tizi?

Tizi is a fully featured backdoor that installs spyware to steal sensitive data from popular social media applications. The Google Play Protect security team discovered this family in September 2017 when device scans found an app with rooting capabilities that exploited old vulnerabilities. The team used this app to find more applications in the Tizi family, the oldest of which is from October 2015. The Tizi app developer also created a website and used social media to encourage more app installs from Google Play and third-party websites.

Here is an example social media post promoting a Tizi-infected app:

What is the scope of Tizi?

What are we doing?

To protect Android devices and users, we used Google Play Protect to disable Tizi-infected apps on affected devices and have notified users of all known affected devices. The developers' accounts have been suspended from Play.

The Google Play Protect team also used information and signals from the Tizi apps to update Google's on-device security services and the systems that search for PHAs. These enhancements have been enabled for all users of our security services and increase coverage for Google Play users and the rest of the Android ecosystem.

Additionally, there is more technical information below to help the security industry in our collective work against PHAs.

What do I need to do?

Through our investigation, we identified around 1,300 devices affected by Tizi. To reduce the chance of your device being affected by PHAs and other threats, we recommend these 5 basic steps:

- Check permissions: Be cautious with apps that request unreasonable permissions. For example, a flashlight app shouldn't need access to send SMS messages.

- Enable a secure lock screen: Pick a PIN, pattern, or password that is easy for you to remember and hard for others to guess.

- Update your device: Keep your device up-to-date with the latest security patches. Tizi exploited older and publicly known security vulnerabilities, so devices that have up-to-date security patches are less exposed to this kind of attack.

- Google Play Protect: Ensure Google Play Protect is enabled.

- Locate your device: Practice finding your device, because you are far more likely to lose your device than install a PHA.
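The "Check permissions" step above can be illustrated with a small sketch. It assumes you already have the list of permissions an app requests and a list of permissions you consider reasonable for that kind of app; the function name and the choice of which permissions count as sensitive are illustrative assumptions, not part of Google Play Protect.

```python
# Illustrative only: a short list of SMS- and call-related Android permissions.
# The selection is an assumption made for this example.
SENSITIVE_PERMISSIONS = {
    "android.permission.SEND_SMS",
    "android.permission.READ_SMS",
    "android.permission.RECEIVE_SMS",
    "android.permission.CALL_PHONE",
    "android.permission.PROCESS_OUTGOING_CALLS",
}


def flag_unexpected_permissions(requested, expected):
    """Return the sensitive permissions an app requests beyond what is expected.

    `requested` is the app's declared permissions; `expected` is what the
    reviewer considers reasonable for that kind of app (hypothetical input).
    """
    unexpected = (set(requested) & SENSITIVE_PERMISSIONS) - set(expected)
    return sorted(unexpected)


# A flashlight app plausibly needs the camera (for the flash), not SMS.
flashlight_requested = [
    "android.permission.CAMERA",
    "android.permission.SEND_SMS",
]
flashlight_expected = ["android.permission.CAMERA"]
flagged = flag_unexpected_permissions(flashlight_requested, flashlight_expected)
```

Here `flagged` contains only `android.permission.SEND_SMS`, which is the kind of request worth questioning before installing.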

How does Tizi work?

The Google Play Protect team had previously classified some samples as spyware or backdoor PHAs without connecting them as a family. The early Tizi variants didn't have rooting capabilities or obfuscation, but later variants did.

After gaining root, Tizi steals sensitive data from popular social media apps like Facebook, Twitter, WhatsApp, Viber, Skype, LinkedIn, and Telegram. It usually first contacts its command-and-control servers by sending an SMS with the device's GPS coordinates to a specific number. Subsequent command-and-control communications are normally performed over regular HTTPS, though in some specific versions, Tizi uses the MQTT messaging protocol with a custom server. The backdoor contains various capabilities common to commercial spyware, such as recording calls from WhatsApp, Viber, and Skype; sending and receiving SMS messages; and accessing calendar events, call log, contacts, photos, Wi-Fi encryption keys, and a list of all installed apps. Tizi apps can also record ambient audio and take pictures without displaying the image on the device's screen.

Tizi can root the device by exploiting one of the following local vulnerabilities:

- CVE-2012-4220

- CVE-2013-2596

- CVE-2013-2597

- CVE-2013-2595

- CVE-2013-2094

- CVE-2013-6282

- CVE-2014-3153

- CVE-2015-3636

- CVE-2015-1805

Most of these vulnerabilities target older chipsets, devices, and Android versions. All of the listed vulnerabilities are fixed on devices with a security patch level of April 2016 or later, and most of them were patched well before that date. Devices with this patch level or later are far less exposed to Tizi's capabilities. If a Tizi app cannot take control of a device because all the vulnerabilities it tries to use are patched, it still attempts to perform some actions through the high level of permissions it asks the user to grant, mainly reading and sending SMS messages and monitoring, redirecting, and preventing outgoing phone calls.
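As a rough illustration of the patch-level cutoff described above, the following sketch compares a device's reported security patch level with April 2016. It assumes the value is in the `YYYY-MM-DD` form that Android exposes through the `ro.build.version.security_patch` system property, and it treats any date in April 2016 or later as patched; the function names and the exact cutoff day are assumptions made for this example.

```python
from datetime import date

# Assumption: "April 2016 or later" is modeled as any date on or after
# 2016-04-01. The exact day within the month is not stated in this post.
TIZI_EXPLOITS_FIXED_FROM = date(2016, 4, 1)


def parse_patch_level(value: str) -> date:
    """Parse a security patch level string such as '2016-04-01'."""
    return date.fromisoformat(value.strip())


def is_patched_against_listed_cves(patch_level: str) -> bool:
    """True if the patch level is at or after the April 2016 cutoff."""
    return parse_patch_level(patch_level) >= TIZI_EXPLOITS_FIXED_FROM


for level in ("2015-12-01", "2016-04-01", "2017-09-05"):
    status = "patched" if is_patched_against_listed_cves(level) else "exposed"
    print(f"{level}: {status}")
```

On a device connected over adb, the patch level string can typically be read with `adb shell getprop ro.build.version.security_patch` and passed to the function. A patched result only means the listed rooting exploits should not work; as noted above, a Tizi app can still misuse any permissions the user grants.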

Samples uploaded to VirusTotal

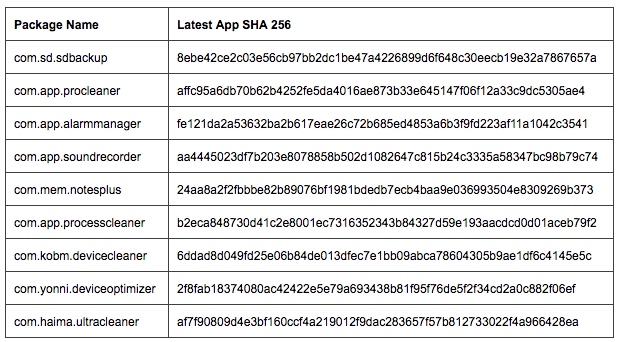

To encourage further research in the security community, here are some sample applications embedding Tizi that were already on VirusTotal.

| Package name | SHA256 digest | SHA1 certificate |
| --- | --- | --- |
| com.press.nasa.com.tanofresh | 4d780a6fc18458311250d4d1edc750 | 816bbee3cab5eed00b8bd16df56032 |
| com.dailyworkout.tizi | 7c6af091a7b0f04fb5b212bd3c180d | 404b4d1a7176e219eaa457b0050b40 |
| com.system.update.systemupdate | 7a956c754f003a219ea1d2205de3ef | 4d2962ac1f6551435709a5a874595d |

Additional digests linked to Tizi

To encourage further research in the security community, here are some sample digests of exploits and utilities that were used or abused by Tizi.

| Filename | SHA256 digest |
| --- | --- |
| run_root_shell | f2e45ea50fc71b62d9ea59990ced75 |
| iovyroot | 4d0887f41d0de2f31459c14e3133de |
| filesbetyangu.tar | 9869871ed246d5670ebca02bb265a5 |
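For researchers who want to check local files against the digests listed in this post, here is a minimal sketch. The digests as printed here are 30 hex characters long, shorter than a full 64-character SHA-256, so the sketch assumes they are leading prefixes of the full digest and matches on that prefix. That assumption, and the function names, are not confirmed by the post; treat a prefix match as a lead to verify, not as proof.

```python
import hashlib

# Digest values exactly as printed in this post (apparently truncated).
# Assumption: each is the leading portion of the full SHA-256 hex digest.
KNOWN_DIGEST_PREFIXES = {
    "4d780a6fc18458311250d4d1edc750": "com.press.nasa.com.tanofresh",
    "7c6af091a7b0f04fb5b212bd3c180d": "com.dailyworkout.tizi",
    "7a956c754f003a219ea1d2205de3ef": "com.system.update.systemupdate",
    "f2e45ea50fc71b62d9ea59990ced75": "run_root_shell",
    "4d0887f41d0de2f31459c14e3133de": "iovyroot",
    "9869871ed246d5670ebca02bb265a5": "filesbetyangu.tar",
}


def sha256_of_file(path, chunk_size=1 << 20):
    """Compute the SHA-256 hex digest of a file, reading it in chunks."""
    hasher = hashlib.sha256()
    with open(path, "rb") as handle:
        for chunk in iter(lambda: handle.read(chunk_size), b""):
            hasher.update(chunk)
    return hasher.hexdigest()


def match_known_sample(hex_digest):
    """Return the listed name whose digest prefix matches, or None."""
    digest = hex_digest.strip().lower()
    for prefix, name in KNOWN_DIGEST_PREFIXES.items():
        if digest.startswith(prefix):
            return name
    return None
```

A typical use would be `match_known_sample(sha256_of_file("sample.apk"))`, where `sample.apk` is a placeholder path.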