We are excited to announce passkey support on Android and Chrome for developers to test today, with general availability following later this year. In this post we cover details on how passkeys stored in the Google Password Manager are kept secure. See our post on the Android Developers Blog for a more general overview.

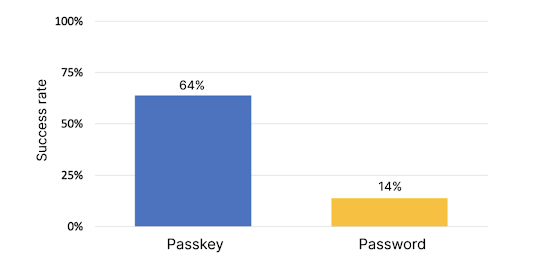

Passkeys are a safer and more secure alternative to passwords. They also replace the need for traditional second-factor authentication methods such as text messages, app-based one-time codes, or push-based approvals. Passkeys use public-key cryptography so that data breaches of service providers don't result in a compromise of passkey-protected accounts, and are based on industry standard APIs and protocols to ensure they are not subject to phishing attacks.

Passkeys are the result of an industry-wide effort. They combine secure authentication standards created within the FIDO Alliance and the W3C Web Authentication working group with a common terminology and user experience across different platforms, recoverability against device loss, and a common integration path for developers. Passkeys are supported in Android and other leading industry client OS platforms.

A single passkey identifies a particular user account on some online service. A user has different passkeys for different services. The user's operating systems, or software similar to today's password managers, provide user-friendly management of passkeys. From the user's point of view, using passkeys is very similar to using saved passwords, but with significantly better security.

The main ingredient of a passkey is a cryptographic private key. In most cases, this private key lives only on the user's own devices, such as laptops or mobile phones. When a passkey is created, only its corresponding public key is stored by the online service. During login, the service uses the public key to verify a signature from the private key. Such a signature can only come from one of the user's devices. The user is also required to unlock their device or credential store for this to happen, which prevents sign-ins from, for example, a stolen phone.
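The login check described above can be sketched as a challenge-response exchange. This is an illustration only, not how passkeys are implemented: it uses textbook RSA with small, fixed primes so that it runs on the Python standard library alone, whereas real passkeys rely on WebAuthn with vetted algorithms such as ECDSA. All names (`sign`, `verify`, `PUBLIC_KEY`) are hypothetical.

```python
import hashlib
import secrets

# Toy key pair for illustration only: two known Mersenne primes.
# Far too small and too structured for real use.
P = 2**61 - 1
Q = 2**89 - 1
N = P * Q
E = 65537
D = pow(E, -1, (P - 1) * (Q - 1))  # private exponent, stays on the user's device

PUBLIC_KEY = (N, E)  # the only key material the online service stores


def _digest(challenge: bytes, n: int) -> int:
    """Hash the challenge and reduce it into the range of the modulus."""
    return int.from_bytes(hashlib.sha256(challenge).digest(), "big") % n


def sign(challenge: bytes) -> int:
    """Runs on the user's device, after the user has unlocked it."""
    return pow(_digest(challenge, N), D, N)


def verify(public_key: tuple[int, int], challenge: bytes, signature: int) -> bool:
    """Runs on the service, which holds only the public key."""
    n, e = public_key
    return pow(signature, e, n) == _digest(challenge, n)


# The service issues a fresh random challenge for each login attempt.
challenge = secrets.token_bytes(32)
signature = sign(challenge)
print(verify(PUBLIC_KEY, challenge, signature))           # matching challenge
print(verify(PUBLIC_KEY, b"other challenge", signature))  # different challenge
```

Because the service stores only `PUBLIC_KEY`, a breach of the service's database reveals nothing that could be used to produce a valid signature.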

To address the common case of device loss or upgrade, a key feature enabled by passkeys is that the same private key can exist on multiple devices. This happens through platform-provided synchronization and backup.

Passkeys in the Google Password Manager

On Android, the Google Password Manager provides backup and sync of passkeys. This means that if a user sets up two Android devices with the same Google Account, passkeys created on one device are available on the other. This applies both to the case where a user has multiple devices simultaneously, for example a phone and a tablet, and the more common case where a user upgrades e.g. from an old Android phone to a new one.

Passkeys in the Google Password Manager are always end-to-end encrypted: When a passkey is backed up, its private key is uploaded only in its encrypted form using an encryption key that is only accessible on the user's own devices. This protects passkeys against Google itself, or e.g. a malicious attacker inside Google. Without access to the private key, such an attacker cannot use the passkey to sign in to its corresponding online account.

Additionally, passkey private keys are encrypted at rest on the user's devices, with a hardware-protected encryption key.

Creating or using passkeys stored in the Google Password Manager requires a screen lock to be set up. This prevents others from using a passkey even if they have access to the user's device, but is also necessary to facilitate the end-to-end encryption and safe recovery in the case of device loss.

Recovering access or adding new devices

When a user sets up a new Android device by transferring data from an older device, existing end-to-end encryption keys are securely transferred to the new device. In some cases, for example, when the older device was lost or damaged, users may need to recover the end-to-end encryption keys from a secure online backup.

To recover the end-to-end encryption keys, the user must provide the lock screen PIN, password, or pattern of another existing device that had access to those keys. Note that restoring passkeys on a new device requires both being signed in to the Google Account and providing an existing device's screen lock.

Since screen lock PINs and patterns, in particular, are short, the recovery mechanism provides protection against brute-force guessing. After a small number of consecutive incorrect attempts to provide the screen lock of an existing device, that screen lock can no longer be used for recovery. This number is always 10 or fewer, but for safety reasons we may block attempts before that number is reached. Screen locks of other existing devices may still be used.

If the maximum number of attempts is reached for all existing devices on file, e.g. when a malicious actor attempts brute-force guessing, the user may still be able to recover if they still have access to one of the existing devices and know its screen lock. By signing in to the existing device and changing its screen lock PIN, password or pattern, the count of invalid recovery attempts is reset. End-to-end encryption keys can then be recovered on the new device by entering the new screen lock of the existing device.
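The guess limit and the reset on screen lock change can be modeled with a small in-memory sketch. It is a simplification under stated assumptions: the class and method names are hypothetical, the counter is reset on a correct guess because the text speaks of consecutive incorrect attempts, and the real limit is enforced by secure hardware on the server side rather than by application code like this.

```python
import hashlib
import hmac
import os

MAX_ATTEMPTS = 10  # upper bound from the text; the real service may block earlier


class RecoveryFactor:
    """Hypothetical model of one existing device's screen lock used for recovery."""

    def __init__(self, screen_lock: str) -> None:
        self.failed_attempts = 0
        self._set_verifier(screen_lock)

    def _set_verifier(self, screen_lock: str) -> None:
        # Keep only a salted, slow hash of the screen lock, never the lock itself.
        self._salt = os.urandom(16)
        self._verifier = self._derive(screen_lock)

    def _derive(self, screen_lock: str) -> bytes:
        return hashlib.pbkdf2_hmac("sha256", screen_lock.encode(), self._salt, 100_000)

    @property
    def blocked(self) -> bool:
        return self.failed_attempts >= MAX_ATTEMPTS

    def try_recover(self, guess: str) -> bool:
        """Return True only if the factor is not blocked and the guess is correct."""
        if self.blocked:
            return False
        if hmac.compare_digest(self._derive(guess), self._verifier):
            self.failed_attempts = 0  # assumption: only consecutive failures count
            return True
        self.failed_attempts += 1
        return False

    def change_screen_lock(self, new_screen_lock: str) -> None:
        """Changing the lock on the existing device resets the attempt count."""
        self._set_verifier(new_screen_lock)
        self.failed_attempts = 0


factor = RecoveryFactor("1234")
for guess in range(10):
    factor.try_recover(f"{guess:04d}")  # ten wrong guesses: 0000 to 0009
print(factor.blocked)                   # True
print(factor.try_recover("1234"))       # False: blocked, even with the correct lock
factor.change_screen_lock("8642")
print(factor.try_recover("8642"))       # True
```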

Screen lock PINs, passwords or patterns themselves are not known to Google. The data that allows Google to verify correct input of a device's screen lock is stored on Google's servers in secure hardware enclaves and cannot be read by Google or any other entity. The secure hardware also enforces the limits on maximum guesses, which cannot exceed 10 attempts, even by an internal attack. This protects the screen lock information, even from Google.

When the screen lock is removed from a device, the previously configured screen lock may still be used for recovery of end-to-end encryption keys on other devices for a period of time up to 64 days. If a user believes their screen lock is compromised, the safer option is to configure a different screen lock (e.g. a different PIN). This disables the previous screen lock as a recovery factor immediately, as long as the user is online and signed in on the device.
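As a rough illustration of the two cases above, the following sketch checks whether a previous screen lock might still work as a recovery factor. The function name and the `"removed"` / `"changed"` labels are hypothetical, and the 64-day figure is treated as an upper bound only, since the text says "up to 64 days".

```python
from datetime import datetime, timedelta

MAX_GRACE_PERIOD = timedelta(days=64)  # upper bound stated in the text


def may_be_usable_for_recovery(event: str, event_time: datetime, now: datetime) -> bool:
    """Report whether a previous screen lock might still serve as a recovery factor.

    event is "removed" (the screen lock was turned off) or "changed"
    (a different screen lock was configured). Both labels are hypothetical.
    """
    if event == "changed":
        # Assumes the device was online and signed in when the lock was changed.
        return False
    if event == "removed":
        return now - event_time <= MAX_GRACE_PERIOD
    raise ValueError(f"unknown event: {event!r}")


removed_at = datetime(2022, 10, 1)
print(may_be_usable_for_recovery("removed", removed_at, removed_at + timedelta(days=30)))  # True
print(may_be_usable_for_recovery("removed", removed_at, removed_at + timedelta(days=65)))  # False
print(may_be_usable_for_recovery("changed", removed_at, removed_at))                       # False
```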

Recovery user experience

If end-to-end encryption keys were not transferred during device setup, the recovery process happens automatically the first time a passkey is created or used on the new device. In most cases, this only happens once on each new device.

From the user's point of view, this means that when using a passkey for the first time on the new device, they will be asked for an existing device's screen lock in order to restore the end-to-end encryption keys, and then for the current device's screen lock or biometric, which is required every time a passkey is used.

Passkeys and device-bound private keys

Passkeys are an instance of FIDO multi-device credentials. Google recognizes that in certain deployment scenarios, relying parties may still require signals about the strong device binding that traditional FIDO credentials provide, while taking advantage of the recoverability and usability of passkeys.

To address this, passkeys on Android support the proposed Device-bound Public Key WebAuthn extension (devicePubKey). If this extension is requested when creating or using passkeys on Android, relying parties will receive two signatures in the result: One from the passkey private key, which may exist on multiple devices, and an additional signature from a second private key that only exists on the current device. This device-bound private key is unique to the passkey in question, and each response includes a copy of the corresponding device-bound public key.

Observing two passkey signatures with the same device-bound public key is a strong signal that the signatures are generated by the same device. On the other hand, if a relying party observes a device-bound public key it has not seen before, this may indicate that the passkey has been synced to a new device.
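A relying party could act on this signal by remembering which device-bound public keys it has seen for each passkey. The sketch below is one possible bookkeeping approach, not part of the extension itself: `DeviceKeyTracker`, `observe`, and the string credential identifiers are hypothetical, and it assumes both signatures in the response have already been verified.

```python
from collections import defaultdict


class DeviceKeyTracker:
    """Hypothetical relying-party record of device-bound public keys per passkey."""

    def __init__(self) -> None:
        self._seen: dict[str, set[bytes]] = defaultdict(set)

    def observe(self, credential_id: str, device_public_key: bytes) -> bool:
        """Record the key and return True if it was already known for this passkey."""
        known = device_public_key in self._seen[credential_id]
        self._seen[credential_id].add(device_public_key)
        return known


tracker = DeviceKeyTracker()
print(tracker.observe("passkey-1", b"device-key-A"))  # False: first sighting
print(tracker.observe("passkey-1", b"device-key-A"))  # True: same device as before
print(tracker.observe("passkey-1", b"device-key-B"))  # False: possibly a newly synced device
```

A `False` result does not by itself indicate an attack. It only tells the relying party that this device has not been seen with this passkey before, which it may choose to treat as a reason for additional checks.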

On Android, device-bound private keys are generated in the device's trusted execution environment (TEE), via the Android Keystore API. This provides hardware-backed protections against exfiltration of the device-bound private keys to other devices. Device-bound private keys are not backed up, so e.g. when a device is factory reset and restored from a prior backup, its device-bound key pairs will be different.

The device-bound key pair is created and stored on-demand. That means relying parties can request the devicePubKey extension when getting a signature from an existing passkey, even if devicePubKey was not requested when the passkey was created.