With the recent launch of Chrome 83, and the upcoming release of Mozilla Firefox 79, web developers are gaining powerful new security mechanisms to protect their applications from common web vulnerabilities. In this post we share how our Information Security Engineering team is deploying Trusted Types, Content Security Policy, Fetch Metadata Request Headers and the Cross-Origin Opener Policy across Google to help guide and inspire other developers to similarly adopt these features to protect their applications.

History

Since the advent of modern web applications, such as email clients or document editors accessible in your browser, developers have been dealing with common web vulnerabilities which may allow user data to fall prey to attackers. While the web platform provides robust isolation for the underlying operating system, the isolation between web applications themselves is a different story. Issues such as XSS, CSRF and cross-site leaks have become unfortunate facets of web development, affecting almost every website at some point in time.

These vulnerabilities are unintended consequences of some of the web's most wonderful characteristics: composability, openness, and ease of development. Simply put, the original vision of the web as a mesh of interconnected documents did not anticipate the creation of a vibrant ecosystem of web applications handling private data for billions of people across the globe. Consequently, the security capabilities of the web platform meant to help developers safeguard their users' data have evolved slowly and provided only partial protections from common flaws.

Web developers have traditionally compensated for the platform's shortcomings by building additional security engineering tools and processes to protect their applications from common flaws; such infrastructure has often proven costly to develop and maintain. As the web continues to change to offer developers more impressive capabilities, and web applications become more critical to our lives, we find ourselves in increasing need of more powerful, all-encompassing security mechanisms built directly into the web platform.

Over the past two years, browser makers and security engineers from Google and other companies have collaborated on the design and implementation of several major security features to defend against common web flaws. These mechanisms, which we focus on in this post, protect against injections and offer isolation capabilities, addressing two major, long-standing sources of insecurity on the web.

Injection Vulnerabilities

In the design of systems, mixing code and data is one of the canonical security anti-patterns, causing software vulnerabilities as far back as in the 1980s. It is the root cause of vulnerabilities such as SQL injection and command injection, allowing the compromise of databases and application servers.

On the web, application code has historically been intertwined with page data. HTML markup such as <script> elements or event handler attributes (onclick or onload) allow JavaScript execution; even the familiar URL can carry code and result in script execution when navigating to a javascript: link. While sometimes convenient, the upshot of this design is that – unless the application takes care to protect itself – data used to compose an HTML page can easily inject unwanted scripts and take control of the application in the user's browser.

Addressing this problem in a principled manner requires allowing the application to separate its data from code; this can be done by enabling two new security features: Trusted Types and Content Security Policy based on script nonces.

Trusted Types

Main article: web.dev/trusted-types by Krzysztof Kotowicz

JavaScript functions used by developers to build web applications often rely on parsing arbitrary structure out of strings. A string which seems to contain data can be turned directly into code when passed to a common API, such as innerHTML. This is the root cause of most DOM-based XSS vulnerabilities.

Trusted Types make JavaScript code safe-by-default by restricting risky operations, such as generating HTML or creating scripts, to require a special object – a Trusted Type. The browser will ensure that any use of dangerous DOM functions is allowed only if the right object is provided to the function. As long as an application produces these objects safely in a central Trusted Types policy, it will be free of DOM-based XSS bugs.

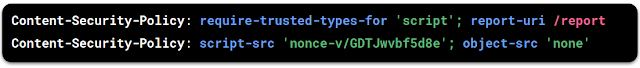

You can enable Trusted Types by setting the following response header:

We have recently launched Trusted Types for all users of My Google Activity and are working with dozens of product teams across Google as well as JavaScript framework owners to make their code support this important safety mechanism.

Trusted Types are supported in Chrome 83 and other Chromium-based browsers, and a polyfill is available for other user agents.

Content Security Policy based on script nonces

Main article: Reshaping web defenses with strict Content Security Policy

Content Security Policy (CSP) allows developers to require every <script> on the page to contain a secret value unknown to attackers. The script nonce attribute, set to an unpredictable number for every page load, acts as a guarantee that a given script is under the control of the application: even if part of the page is injected by an attacker, the browser will refuse to execute any injected script which doesn't identify itself with the correct nonce. This mitigates the impact of any server-side injection bugs, such as reflected XSS and stored XSS.

CSP can be enabled by setting the following HTTP response header:

This header requires all scripts in your HTML templating system to include a nonce attribute with a value matching the one in the response header:

Our CSP Evaluator tool can help you configure a strong policy. To help deploy a production-quality CSP in your application, check out this presentation and the documentation on csp.withgoogle.com.

Since the initial launch of CSP at Google, we have deployed strong policies on 75% of outgoing traffic from our applications, including in our flagship products such as GMail and Google Docs & Drive. CSP has mitigated the exploitation of over 30 high-risk XSS flaws across Google in the past two years.

Nonce-based CSP is supported in Chrome, Firefox, Microsoft Edge and other Chromium-based browsers. Partial support for this variant of CSP is also available in Safari.

Isolation Capabilities

Many kinds of web flaws are exploited by an attacker's site forcing an unwanted interaction with another web application. Preventing these issues requires browsers to offer new mechanisms to allow applications to restrict such behaviors. Fetch Metadata Request Headers enable building server-side restrictions when processing incoming HTTP requests; the Cross-Origin Opener Policy is a client-side mechanism which protects the application's windows from unwanted DOM interactions.

Fetch Metadata Request Headers

Main article: web.dev/fetch-metadata by Lukas Weichselbaum

A common cause of web security problems is that applications don't receive information about the source of a given HTTP request, and thus aren't able to distinguish benign self-initiated web traffic from unwanted requests sent by other websites. This leads to vulnerabilities such as cross-site request forgery (CSRF) and web-based information leaks (XS-leaks).

Fetch Metadata headers, which the browser attaches to outgoing HTTP requests, solve this problem by providing the application with trustworthy information about the provenance of requests sent to the server: the source of the request, its type (for example, whether it's a navigation or resource request), and other security-relevant metadata.

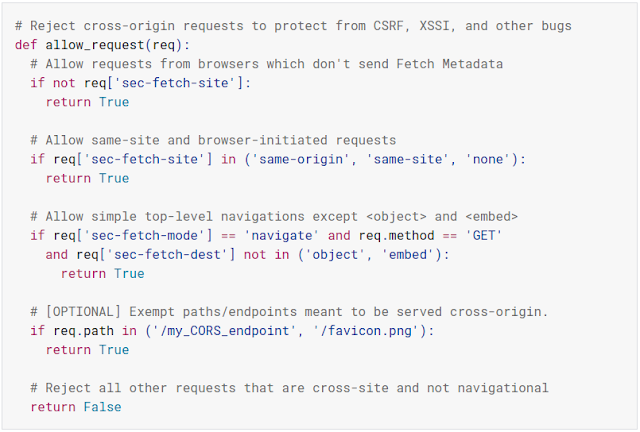

By checking the values of these new HTTP headers (Sec-Fetch-Site, Sec-Fetch-Mode and Sec-Fetch-Dest), applications can build flexible server-side logic to reject untrusted requests, similar to the following:

We provided a detailed explanation of this logic and adoption considerations at web.dev/fetch-metadata. Importantly, Fetch Metadata can both complement and facilitate the adoption of Cross-Origin Resource Policy which offers client-side protection against unexpected subresource loads; this header is described in detail at resourcepolicy.fyi.

At Google, we've enabled restrictions using Fetch Metadata headers in several major products such as Google Photos, and are following up with a large-scale rollout across our application ecosystem.

Fetch Metadata headers are currently sent by Chrome and Chromium-based browsers and are available in development versions of Firefox.

Cross-Origin Opener Policy

Main article: web.dev/coop-coep by Eiji Kitamura

By default, the web permits some interactions with browser windows belonging to another application: any site can open a pop-up to your webmail client and send it messages via the postMessage API, navigate it to another URL, or obtain information about its frames. All of these capabilities can lead to information leak vulnerabilities:

Cross-Origin Opener Policy (COOP) allows you to lock down your application to prevent such interactions. To enable COOP in your application, set the following HTTP response header:

If your application opens other sites as pop-ups, you may need to set the header value to same-origin-allow-popups instead; see this document for details.

We are currently testing Cross-Origin Opener Policy in several Google applications, and we're looking forward to enabling it broadly in the coming months.

COOP is available starting in Chrome 83 and in Firefox 79.

The Future

Creating a strong and vibrant web requires developers to be able to guarantee the safety of their users' data. Adding security mechanisms to the web platform – building them directly into browsers – is an important step forward for the ecosystem: browsers can help developers understand and control aspects of their sites which affect their security posture. As users update to recent versions of their favorite browsers, they will gain protections from many of the security flaws that have affected web applications in the past.

While the security features described in this post are not a panacea, they offer fundamental building blocks that help developers build secure web applications. We're excited about the continued deployment of these mechanisms across Google, and we're looking forward to collaborating with browser makers and the web standards community to improve them in the future.

For more information about web security mechanisms and the bugs they prevent, see the Securing Web Apps with Modern Platform Features Google I/O talk (video).