Deep learning has fueled tremendous progress in the field of computer vision in recent years, with neural networks repeatedly pushing the frontier of visual recognition technology. While many of those technologies, such as object, landmark, logo and text recognition, are provided for internet-connected devices through the Cloud Vision API, we believe that the ever-increasing computational power of mobile devices can enable the delivery of these technologies into the hands of our users, anytime, anywhere, regardless of internet connection. However, visual recognition for on-device and embedded applications poses many challenges: models must run quickly and with high accuracy in a resource-constrained environment, making use of limited computation, power and space.

Today we are pleased to announce the release of MobileNets, a family of mobile-first computer vision models for TensorFlow, designed to effectively maximize accuracy while being mindful of the restricted resources for an on-device or embedded application. MobileNets are small, low-latency, low-power models parameterized to meet the resource constraints of a variety of use cases. They can be built upon for classification, detection, embeddings and segmentation, similar to how other popular large-scale models, such as Inception, are used.
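One way to see what "parameterized" buys you is to check how compute changes with input size. The sketch below assumes that the four 4.24M-parameter rows of the table below were evaluated at 224, 192, 160 and 128 pixel inputs (an assumption inferred from the numbers, not stated in this post). Because every convolution's cost is proportional to the area of its output feature map, MACs should shrink with the square of the input side length while the parameter count stays fixed, which is exactly the pattern in those rows. The function name `estimate_mmacs` is hypothetical.

```python
# Rough check of how MobileNet compute scales with input resolution.
# Assumption: the four 4.24M-parameter rows of the table were evaluated at
# 224, 192, 160 and 128 pixel inputs (in that order).
TABLE_MMACS = {224: 569, 192: 418, 160: 291, 128: 186}  # million MACs, from the table


def estimate_mmacs(resolution: int,
                   baseline_resolution: int = 224,
                   baseline_mmacs: float = 569.0) -> float:
    """Scale a baseline MAC count to a new square input resolution.

    Each convolution's cost is proportional to the area of its output
    feature map, and that area shrinks with the square of the input side,
    so MACs scale by (resolution / baseline_resolution) ** 2. The final
    classifier layer, whose cost does not depend on resolution, is scaled
    along with everything else; it is small, so the error is minor.
    """
    scale = (resolution / baseline_resolution) ** 2
    return baseline_mmacs * scale


if __name__ == "__main__":
    for res, table_value in TABLE_MMACS.items():
        print(f"{res}px: estimated {estimate_mmacs(res):.0f}M MACs, table {table_value}M")
```

The estimates come out to 569, 418, 290 and 186 million MACs, within rounding of the table. The parameter count does not move because the learned weights do not depend on the input size; only the width of the network changes it.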

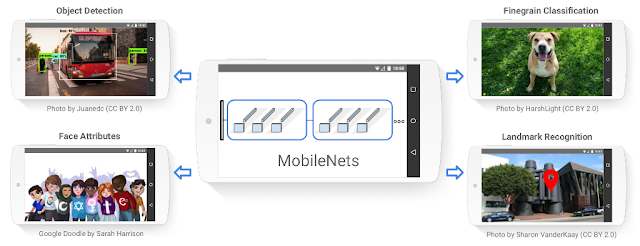

Example use cases include detection, fine-grained classification, attributes and geo-localization.

| Model Checkpoint | Million MACs | Million Parameters | Top-1 Accuracy (%) | Top-5 Accuracy (%) |
|---|---|---|---|---|
| | 569 | 4.24 | 70.7 | 89.5 |
| | 418 | 4.24 | 69.3 | 88.9 |
| | 291 | 4.24 | 67.2 | 87.5 |
| | 186 | 4.24 | 64.1 | 85.3 |
| | 317 | 2.59 | 68.4 | 88.2 |
| | 233 | 2.59 | 67.4 | 87.3 |
| | 162 | 2.59 | 65.2 | 86.1 |
| | 104 | 2.59 | 61.8 | 83.6 |
| | 150 | 1.34 | 64.0 | 85.4 |
| | 110 | 1.34 | 62.1 | 84.0 |
| | 77 | 1.34 | 59.9 | 82.5 |
| | 49 | 1.34 | 56.2 | 79.6 |
| | 41 | 0.47 | 50.6 | 75.0 |
| | 34 | 0.47 | 49.0 | 73.6 |
| | 21 | 0.47 | 46.0 | 70.7 |
| | 14 | 0.47 | 41.3 | 66.2 |

Choose the right MobileNet model to fit your latency and size budget. The size of the network in memory and on disk is proportional to the number of parameters. The latency and power usage of the network scale with the number of Multiply-Accumulates (MACs), i.e. fused multiplication and addition operations. Top-1 and Top-5 accuracies are measured on the ILSVRC dataset.
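The selection rule above can be made mechanical: treat MACs as the latency/power budget and parameters as the size budget, then take the most accurate row that fits both. This is a minimal sketch using the numbers from the table above. The `width` and `resolution` labels are an assumption (each group of four rows sharing a parameter count is read as one width multiplier, 1.0, 0.75, 0.5 or 0.25, at inputs of 224, 192, 160 and 128 pixels), and `Checkpoint` and `pick_checkpoint` are hypothetical names, not part of any released API.

```python
from typing import List, NamedTuple, Optional


class Checkpoint(NamedTuple):
    width: float       # assumed width multiplier for this row
    resolution: int    # assumed input resolution (pixels per side)
    mmacs: int         # million multiply-accumulates (latency/power proxy)
    mparams: float     # million parameters (memory/disk size proxy)
    top1: float        # ILSVRC top-1 accuracy (%)
    top5: float        # ILSVRC top-5 accuracy (%)


# Rows of the table above; the width/resolution labels are an assumption.
CHECKPOINTS: List[Checkpoint] = [
    Checkpoint(1.0, 224, 569, 4.24, 70.7, 89.5),
    Checkpoint(1.0, 192, 418, 4.24, 69.3, 88.9),
    Checkpoint(1.0, 160, 291, 4.24, 67.2, 87.5),
    Checkpoint(1.0, 128, 186, 4.24, 64.1, 85.3),
    Checkpoint(0.75, 224, 317, 2.59, 68.4, 88.2),
    Checkpoint(0.75, 192, 233, 2.59, 67.4, 87.3),
    Checkpoint(0.75, 160, 162, 2.59, 65.2, 86.1),
    Checkpoint(0.75, 128, 104, 2.59, 61.8, 83.6),
    Checkpoint(0.5, 224, 150, 1.34, 64.0, 85.4),
    Checkpoint(0.5, 192, 110, 1.34, 62.1, 84.0),
    Checkpoint(0.5, 160, 77, 1.34, 59.9, 82.5),
    Checkpoint(0.5, 128, 49, 1.34, 56.2, 79.6),
    Checkpoint(0.25, 224, 41, 0.47, 50.6, 75.0),
    Checkpoint(0.25, 192, 34, 0.47, 49.0, 73.6),
    Checkpoint(0.25, 160, 21, 0.47, 46.0, 70.7),
    Checkpoint(0.25, 128, 14, 0.47, 41.3, 66.2),
]


def pick_checkpoint(max_mmacs: float, max_mparams: float,
                    checkpoints: List[Checkpoint] = CHECKPOINTS) -> Optional[Checkpoint]:
    """Return the highest top-1 checkpoint within both budgets, or None if none fits."""
    fitting = [c for c in checkpoints
               if c.mmacs <= max_mmacs and c.mparams <= max_mparams]
    return max(fitting, key=lambda c: c.top1, default=None)


if __name__ == "__main__":
    print(pick_checkpoint(max_mmacs=200, max_mparams=3.0))
```

With a budget of 200 million MACs and 3 million parameters, the sketch returns the width 0.75, 160-pixel row (162M MACs, 65.2% top-1), which edges out the width 0.5, 224-pixel row (150M MACs, 64.0%). MACs are only a proxy; measuring latency on the target device is a more reliable final check.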

Acknowledgements

MobileNets were made possible with the hard work of many engineers and researchers throughout Google. Specifically, we would like to thank:

Core Contributors: Andrew G. Howard, Menglong Zhu, Bo Chen, Dmitry Kalenichenko, Weijun Wang, Tobias Weyand, Marco Andreetto, Hartwig Adam

Special thanks to: Benoit Jacob, Skirmantas Kligys, George Papandreou, Liang-Chieh Chen, Derek Chow, Sergio Guadarrama, Jonathan Huang, Andre Hentz, Pete Warden