Five years ago, we founded the visual effects company Atomic Fiction with the goal of “creating the most believable fiction imaginable” for film and television. We had lofty aspirations despite the fact that the effects industry had entered a dark period, suffering a distressing rash of closures and bankruptcies. But we saw signs of hope: more effects work in movies was being done than ever before, and the entertainment industry’s longstanding technology model was clearly undergoing transition. Instead of building a giant “render farm” to perform the data processing required to create photo-realistic images for film, our idea was to build Atomic Fiction with the cloud as a core component of our business model. At the time, an overwhelming majority of our most experienced colleagues echoed the following advice to us: “It’ll never work. Don’t do it.”

With some ingenuity combined with bull-headed naïveté, our team encountered and then overcame one hurdle after another. We were the first and only visual effects company to complete work on A-list projects including “Transformers,” “Flight,” “Star Trek,” “Cosmos,” and “Game of Thrones” entirely using cloud-based tools. But our biggest challenge was still to come.

Robert Zemeckis, director of “Back to the Future,” “Who Framed Roger Rabbit?,” and “Forrest Gump,” witnessed the benefits of our cloud-based workflow and tasked Atomic Fiction with crafting the iconic plane crash sequence in “Flight” (Denzel Washington). In 2013, on the heels of that project’s success, he approached us and said, “I want you guys to do ‘The Walk’ for me. We’ve got an incredibly tight budget, but you’re going to need to recreate the Twin Towers, and they have to look absolutely real. And you need to build 1974 New York from scratch. Oh, and it’s all going to be featured in about 30 minutes’ worth of the movie.” Our hearts jumped into our throats. With our faith in the future of the cloud, we said, “Sure, Bob!”

To understand why the technical demands on “The Walk” were so intense, it’s important to illustrate the rendering process. In the beginning of a shot’s life, an artist works with a lightweight “wireframe” representation of the scene.

Its relative simplicity makes the scene quick to interact with, so that a team of artists can work with programs like Maya and Katana to create “recipes” for how cameras move, how surfaces are textured, and how lighting falls on objects. Once that recipe is defined, it needs to be put into action. This process of calculating how light is received by and reflects off of millions of individual surfaces is called rendering. Here’s the final result:

A single iteration of one frame, which amounted to 1/24th of a single second of “The Walk,” had 83GB of input dependencies and took 7 hours to render on a 16-core instance. Now imagine 1,000 instances all being asked to do other similarly intensive parts of the film, all at the same time. Each second of screen time would require 5,000 processor hours to realize. Given the nature of the deadlines, our teams needed the ability to spike to 15,000 simultaneous cores on demand just to stay on schedule. And because we don’t realize profits until the end of the project, we needed to spend as little as possible to get up and running.
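As a rough sanity check on those numbers, the arithmetic can be sketched in a few lines of Python. This is only an illustration built from the figures quoted above, not a description of how Conductor schedules work. It assumes that a "processor hour" means one core running for one hour, that every frame costs about as much as the 7-hour example, and that rendering scales perfectly across cores; the function names are hypothetical.

```python
# Figures quoted in the post.
HOURS_PER_FRAME = 7            # wall-clock hours for one iteration of one frame
CORES_PER_INSTANCE = 16        # cores on the instance that rendered it
FRAMES_PER_SECOND = 24         # one frame is 1/24th of a second of film
QUOTED_CORE_HOURS_PER_SCREEN_SECOND = 5_000
PEAK_CORES = 15_000


def core_hours_per_frame(hours=HOURS_PER_FRAME, cores=CORES_PER_INSTANCE):
    """Core hours for a single iteration of a single frame."""
    return hours * cores


def core_hours_per_screen_second(fps=FRAMES_PER_SECOND):
    """Core hours for one iteration of every frame in one second of film.

    Assumption: every frame costs as much as the quoted example frame.
    """
    return core_hours_per_frame() * fps


def wall_clock_minutes(core_hours, cores):
    """Ideal wall-clock minutes, assuming perfect parallel scaling."""
    return core_hours * 60 / cores


if __name__ == "__main__":
    per_frame = core_hours_per_frame()
    per_second = core_hours_per_screen_second()
    print(f"Core hours per frame iteration: {per_frame}")
    print(f"Core hours per screen second (one iteration): {per_second}")
    ratio = QUOTED_CORE_HOURS_PER_SCREEN_SECOND / per_second
    print(f"Quoted figure / single-iteration estimate: {ratio:.2f}")
    minutes = wall_clock_minutes(QUOTED_CORE_HOURS_PER_SCREEN_SECOND, PEAK_CORES)
    print(f"Ideal minutes per screen second at peak scale: {minutes:.0f}")
```

Under these assumptions, one iteration of a frame costs 112 core hours and one iteration of a full second of film costs 2,688. The quoted 5,000 processor hours per second is roughly double that, which would be consistent with frames being rendered more than once, though the post does not break the figure down. At 15,000 cores, 5,000 core hours works out to about 20 minutes of wall-clock time per second of screen time in the ideal case.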

We knew that in order to achieve the necessary combination of scale and economics, we’d have to tap heavily into the cloud. Since no existing cloud rendering solution could address our needs at that scale, we decided to develop our own software atop Google Cloud Platform. Google’s efficiency, availability of resources, and per-minute billing formed the back-end of a product we call Conductor. Our artists used Conductor to manage the entire cloud rendering workflow from end to end.

By the time Atomic Fiction had finished “The Walk,” our team had created effects sequences that Deadline Hollywood deemed the “best I have seen all year” and USA Today crowned “a visual spectacle.”

The stats associated with the production of “The Walk” constitute the largest use of cloud computing in filmmaking to date. Here’s the final score card:

- 9.1 million core hours in total were used to render “The Walk.”

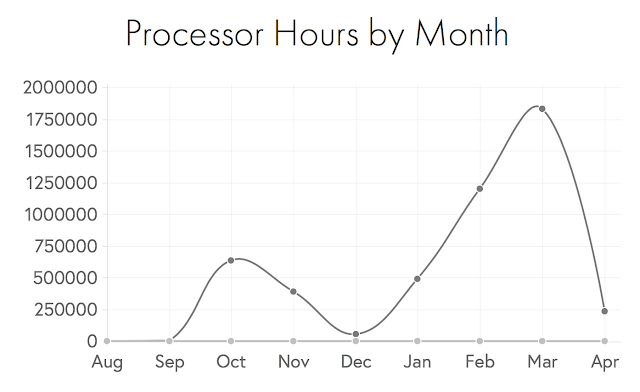

- Movie projects are heavily back-loaded, and “The Walk” was no exception. 2 million core hours were rendered in the final month alone.

- Peak scale of over 15,000 simultaneous cores was achieved rendering I/O-intensive tasks.

- Average artist productivity increased 20%.

- Savings vs. traditional infrastructure were in excess of 50%.

- Because Atomic Fiction had Conductor at its disposal, director Zemeckis was able to realize 4 additional minutes of the movie’s climactic scene.
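The score card can be cross-checked against the per-second figure quoted earlier with a short Python sketch. It is an illustration only and rests on several assumptions: that "processor hours" and "core hours" mean the same thing, that the final month had 30 days, and that load within that month is being averaged evenly. The function names are hypothetical.

```python
# Figures quoted in the post.
TOTAL_CORE_HOURS = 9_100_000
FINAL_MONTH_CORE_HOURS = 2_000_000
QUOTED_CORE_HOURS_PER_SCREEN_SECOND = 5_000
PEAK_CORES = 15_000

# Assumption: a 30-day month of round-the-clock availability.
HOURS_IN_MONTH = 30 * 24


def implied_screen_minutes(total_core_hours, core_hours_per_second):
    """Minutes of screen time implied by a total budget and a per-second cost."""
    return total_core_hours / core_hours_per_second / 60


def average_cores(core_hours, wall_clock_hours):
    """Average number of cores busy over a period of wall-clock time."""
    return core_hours / wall_clock_hours


if __name__ == "__main__":
    minutes = implied_screen_minutes(
        TOTAL_CORE_HOURS, QUOTED_CORE_HOURS_PER_SCREEN_SECOND
    )
    print(f"Implied screen time: {minutes:.1f} minutes")

    share = FINAL_MONTH_CORE_HOURS / TOTAL_CORE_HOURS
    print(f"Final month share of all core hours: {share:.0%}")

    avg = average_cores(FINAL_MONTH_CORE_HOURS, HOURS_IN_MONTH)
    print(f"Average cores busy in the final month: {avg:.0f}")
    print(f"Peak-to-average ratio: {PEAK_CORES / avg:.1f}")
```

Dividing 9.1 million core hours by 5,000 per second gives roughly 30 minutes of screen time, which lines up with the "about 30 minutes" in the original brief. The final month's 2 million core hours average out to fewer than 3,000 cores running continuously, far below the 15,000-core peak. That gap is the kind of spiky demand that is expensive to serve with a fixed-size render farm. The post does not say when the peak occurred, so the comparison with the final month is only indicative.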

When the Conductor processor hours for “The Walk” are graphed monthly, it becomes obvious how much processing needs vary over the course of a project.

Having just screened the final cut of the film, Robert Zemeckis called. “I think this is the most spectacular effects movie I’ve ever made. I can’t imagine making a movie in any other way.” Coming from the director who has pushed the art of visual effects perhaps more than any other contemporary director, that is one of the highest honors our team could receive.

Take a look at the video below to see some of our work in action and learn how Google Cloud Platform helped bring it all to life.

Visit www.atomicfiction.com to see our recent work. Learn how you can render using Conductor like Atomic Fiction did on “The Walk” at www.renderconductor.com and explore Media Solutions by Google Cloud Platform to see how other companies are tapping Cloud Platform for their media workflows.

-Posted by Kevin Baillie, co-founder and visual effects supervisor, Atomic Fiction