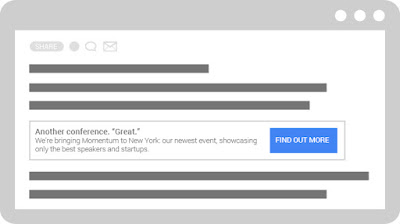

Search is one of the most important acquisition channels in a marketer’s toolkit. But it’s not enough to just optimize search ads. It’s essential to consider the entire customer journey and keep people engaged once they reach your site. That’s why we introduced an integration between Optimize and AdWords to make it easy for marketers to test and create personalized landing pages.

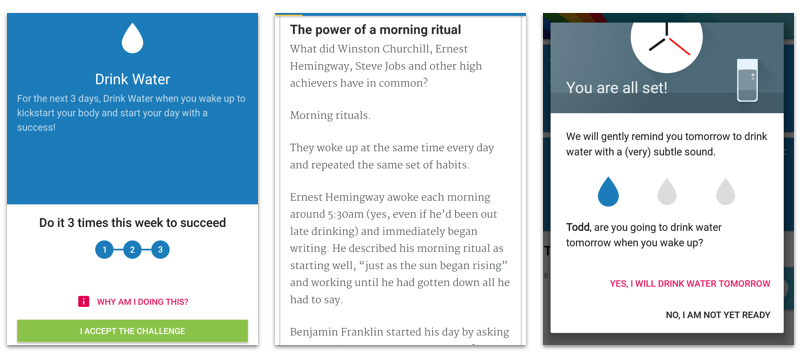


How Spotify boosted conversions with Optimize and AdWords

Spotify, one of the world’s leading audio streaming services, is just one example of a company that has successfully used the Optimize and AdWords integration to drive more conversions from their search campaigns. Spotify discovered that the most streamed content in Germany was actually audiobooks, not music. So they wanted to show German users that they have a wide selection of audiobooks, and also that the experience of listening to them is even better with a premium subscription.

Using the AdWords integration with Optimize 360 (the enterprise version of Optimize), Spotify ran an experiment that focused on users in Germany who had searched for "audiobooks" on Google and clicked through on their search ad. Half of these users were shown a custom landing page dedicated to audiobooks, while the other half were shown the standard page. The custom landing page increased Spotify’s premium subscriptions by 24%.
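If you’re curious what sits behind a test like this, here is a minimal Python sketch of the two ideas involved: splitting visitors roughly 50/50 between the standard page and a custom page, and measuring the relative lift in conversion rate. It is not how Optimize works internally. The function names, the experiment ID, and the visitor and conversion counts are all made up for illustration and are not Spotify’s data.

```python
import hashlib


def assign_variant(user_id: str, experiment_id: str = "audiobooks-landing") -> str:
    """Deterministically place a visitor in 'original' or 'variant' (about 50/50).

    Hashing the experiment ID together with the user ID means the same
    visitor always sees the same page for the duration of the test.
    """
    digest = hashlib.sha256(f"{experiment_id}:{user_id}".encode("utf-8")).hexdigest()
    return "variant" if int(digest, 16) % 2 == 0 else "original"


def relative_uplift(control_conversions: int, control_users: int,
                    variant_conversions: int, variant_users: int) -> float:
    """Relative change in conversion rate of the variant versus the original."""
    control_rate = control_conversions / control_users
    variant_rate = variant_conversions / variant_users
    return (variant_rate - control_rate) / control_rate


# Hypothetical counts, chosen only to illustrate the arithmetic.
uplift = relative_uplift(
    control_conversions=500, control_users=10_000,
    variant_conversions=620, variant_users=10_000,
)
print(f"Relative uplift: {uplift:.0%}")
```

In practice you would also want to check that a difference like this is statistically meaningful before acting on it, which is part of what a testing tool does for you.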

“Before, it was a fairly slow process to get all these tests done. Now, with Optimize 360, we can have 20 or more tests running at the same time. It’s important that we test a lot, so it doesn’t matter if we fail as long as we keep on testing,” said Joost de Schepper, Spotify’s Head of Conversion Optimization.

Watch Spotify’s video case study to learn more

Driving your own results

Today, we’re announcing three new updates to make it easier for all marketers to realize the benefits that Spotify saw from easily testing and creating more relevant landing pages:

1. Connect Optimize with the new AdWords experience

You can connect Optimize to AdWords in just a few steps. Follow these instructions to get started.

Not using the new AdWords experience yet? Make the switch to gain access to more actionable insights and faster access to new features.

2. Link multiple AdWords accounts at once

For advertisers that have many AdWords accounts under a manager account, individually linking each of those sub-accounts to Optimize can be time-consuming.

Now, you can link your manager account directly to Optimize. This will pull in all your AdWords accounts at once, allowing you to immediately connect data from separate campaigns, ad groups, and more. To get started, switch to the new AdWords experience, and then you’ll see an option to link your manager account in your Linked accounts. Learn more.

3. Gain more flexibility with your keywords

You can now run a single experiment for multiple keywords, even if they’re across different campaigns and ad groups. For example, test the same landing page for users that search for “chocolate chip cookies” in your “desserts” ad group and for users that search for “iced coffee” in your “beverages” ad group.
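To picture how one experiment can span several keywords, here is a small Python sketch of the matching logic. It is only an illustration, not the Optimize or AdWords API: the `AdClick` type, the `EXPERIMENT_TARGETS` set, and the campaign names are hypothetical, while the ad groups and keywords come from the example above.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class AdClick:
    campaign: str   # hypothetical campaign name
    ad_group: str
    keyword: str


# One experiment, several (ad group, keyword) pairs across campaigns.
EXPERIMENT_TARGETS = {
    ("desserts", "chocolate chip cookies"),
    ("beverages", "iced coffee"),
}


def in_experiment(click: AdClick) -> bool:
    """Return True if this ad click should be entered into the experiment."""
    return (click.ad_group.lower(), click.keyword.lower()) in EXPERIMENT_TARGETS


clicks = [
    AdClick("food-campaign", "desserts", "chocolate chip cookies"),
    AdClick("drinks-campaign", "beverages", "iced coffee"),
    AdClick("drinks-campaign", "beverages", "hot tea"),
]
for click in clicks:
    print(click.keyword, "->", in_experiment(click))
```

Both of the first two clicks land in the same experiment even though they come from different campaigns and ad groups, while the third is left out.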

With the Optimize and AdWords integration, driving results through A/B testing is fast and simple. Sign up for an Optimize account at no charge and get started today.

Happy Optimizing!