Motivation

CRDs already support two major categories of built-in validation:

- CRD structural schemas: Provide type checking of custom resources against schemas.

- OpenAPIv3 validation rules: Provide regex ('pattern' property), range limits ('minimum' and 'maximum' properties) on individual fields and size limits on maps and lists ('minItems', 'maxItems').
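To make the two built-in categories concrete, here is a minimal sketch of a schema fragment that combines type checking with OpenAPIv3 value constraints. The field names (`schedule`, `replicas`, `hosts`) and the specific limits are assumptions chosen for illustration, not part of any real CRD.

```yaml
# Hypothetical schema fragment; field names and limits are illustrative only.
properties:
  schedule:
    type: string          # structural schema: the value must be a string
    pattern: '^(\d+|\*)(/\d+)?(\s+(\d+|\*)(/\d+)?){4}$'   # regex on the field
  replicas:
    type: integer
    minimum: 1            # range limits on an individual field
    maximum: 10
  hosts:
    type: array
    minItems: 1           # size limits on a list
    maxItems: 5
    items:
      type: string
```

Each constraint here looks at one field in isolation, which is why checks that relate several fields to each other need something more.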

Use cases that built-in validation cannot cover have had to rely on external mechanisms:

- Admission webhooks: run a validating admission webhook to perform further validation.

- Custom validators: write custom checks in one of several languages, such as Rego.

While admission webhooks do support CRD validation, they significantly complicate the development and operability of CRDs.

To provide self-contained, in-process validation, an inline expression language, the Common Expression Language (CEL), is introduced into CRDs so that a much larger portion of validation use cases can be solved without webhooks.

CEL is sufficiently lightweight and safe to run directly in the kube-apiserver, has a straightforward and unsurprising grammar, and supports pre-parsing and type checking of expressions, which allows syntax and type errors to be caught at CRD registration time.

CRD validation rules

CRD validation rules, which validate custom resources against rules declared in the CRD itself, are promoted to GA in Kubernetes 1.29.

Validation rules use the Common Expression Language (CEL) to validate custom resource values. Validation rules are included in CustomResourceDefinition schemas using the x-kubernetes-validations extension.

Each rule is scoped to the location of the x-kubernetes-validations extension in the schema, and the self variable in the CEL expression is bound to the value at that location.

All validation rules are scoped to the current object: no cross-object or stateful validation rules are supported.

For example:

...
openAPIV3Schema:
  type: object
  properties:
    spec:
      type: object
      x-kubernetes-validations:
        - rule: "self.minReplicas <= self.replicas"
          message: "replicas should be greater than or equal to minReplicas."
        - rule: "self.replicas <= self.maxReplicas"
          message: "replicas should be smaller than or equal to maxReplicas."
      properties:
        ...
        minReplicas:
          type: integer
        replicas:
          type: integer
        maxReplicas:
          type: integer
      required:
        - minReplicas
        - replicas
        - maxReplicas

will reject a request to create this custom resource:

apiVersion: "stable.example.com/v1"
kind: CronTab
metadata:
  name: my-new-cron-object
spec:
  minReplicas: 0
  replicas: 20
  maxReplicas: 10

with the response:

The CronTab "my-new-cron-object" is invalid:

* spec: Invalid value: map[string]interface {}{"maxReplicas":10, "minReplicas":0, "replicas":20}: replicas should be smaller than or equal to maxReplicas.

x-kubernetes-validations can hold multiple rules. The rule field of each entry is the expression that CEL evaluates, and the message field is the text displayed when validation fails.
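Because a rule is scoped to wherever the extension appears, the same mechanism can also be attached to a single field. The following is a minimal sketch, assuming a hypothetical integer field named `replicas`; here `self` is the integer itself rather than the enclosing object, and two rules are listed to show that each carries its own message.

```yaml
# Hypothetical fragment: rules attached to one field instead of to spec.
replicas:
  type: integer
  x-kubernetes-validations:
    # self is bound to the integer value of replicas at this location.
    - rule: "self >= 1"
      message: "replicas must be at least 1."
    - rule: "self % 2 == 1"
      message: "replicas must be an odd number."
```

Placing a rule as close as possible to the field it checks tends to keep the expression short and makes the reported field path more specific.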

Note: You can quickly test CEL expressions in CEL Playground.

Validation rules are compiled when CRDs are created or updated. A CRD create or update request fails if any of its validation rules fails to compile. Compilation includes type checking.
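As an illustration of what compile-time checking catches, the sketch below contains a rule that refers to a field the schema does not declare. The field names are assumptions, and the exact error text is not reproduced here; the expectation is only that the CRD itself would be rejected when it is created or updated, before any custom resource is ever submitted.

```yaml
# Hypothetical fragment that should fail compilation at CRD create/update time.
spec:
  type: object
  x-kubernetes-validations:
    # Typo: the schema below declares "replicas", not "replica", so the
    # type checker has no such field to resolve.
    - rule: "self.replica <= self.maxReplicas"
      message: "replicas should be smaller than or equal to maxReplicas."
  properties:
    replicas:
      type: integer
    maxReplicas:
      type: integer
  required:
    - replicas
    - maxReplicas
```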

Validation rules support a wide range of use cases. To get a sense of some of the capabilities, let's look at a few examples:

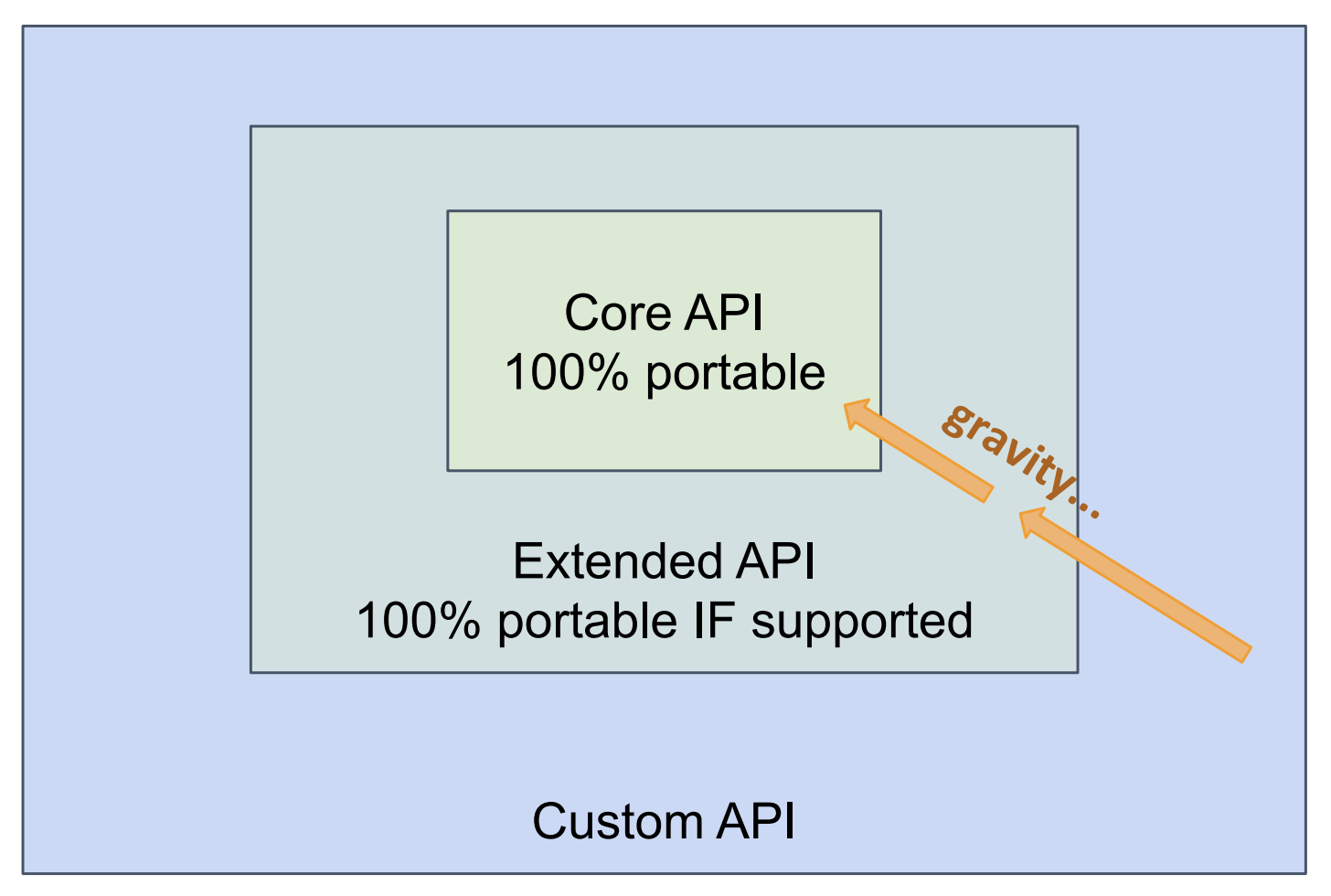

Validation rules are expressive and flexible. See the Validation Rules documentation to learn more about what validation rules are capable of.

CRD transition rules

Transition Rules make it possible to compare the new state against the old state of a resource in validation rules. You use transition rules to make sure that the cluster's API server does not accept invalid state transitions. A transition rule is a validation rule that references 'oldSelf'. The API server only evaluates transition rules when both an old value and new value exist.

Transition rule examples:
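As one hedged illustration, the fragment below sketches two common transition checks: making a field immutable and allowing a value only to grow. The field names `storageClass` and `replicas` are hypothetical and are not taken from the original post.

```yaml
# Hypothetical schema fragment; field names are illustrative only.
properties:
  storageClass:
    type: string
    maxLength: 63
    x-kubernetes-validations:
      # Referencing oldSelf makes this a transition rule: it is evaluated
      # only on updates, when both an old and a new value exist.
      - rule: "self == oldSelf"
        message: "storageClass is immutable."
  replicas:
    type: integer
    x-kubernetes-validations:
      # The new value may stay the same or increase, but never decrease.
      - rule: "self >= oldSelf"
        message: "replicas may not be decreased."
```

Since there is no old value when an object is first created, neither rule restricts the initial value; each only constrains later changes.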

Using the Functions Libraries

Validation rules have access to several function libraries:

- CEL standard functions, defined in the list of standard definitions

- CEL standard macros

- CEL extended string function library

- Kubernetes CEL extension library which includes supplemental functions for lists, regex, and URLs.

Examples of function libraries in use:
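The sketch below shows one rule per library, under the assumption of a hypothetical `spec` with fields named `hosts`, `names`, `endpoint` and `label`. These field names and messages are illustrative and are not taken from the original post.

```yaml
# Hypothetical schema fragment; all field names are illustrative.
spec:
  type: object
  required:
    - hosts
    - names
    - endpoint
    - label
  x-kubernetes-validations:
    # CEL standard macro (all) with a standard function (endsWith).
    - rule: "self.hosts.all(h, h.endsWith('.example.com'))"
      message: "every host must be under example.com."
    # Kubernetes list library: isSorted.
    - rule: "self.names.isSorted()"
      message: "names must be sorted."
    # Kubernetes URL library: isURL, url and getScheme.
    - rule: "isURL(self.endpoint) && url(self.endpoint).getScheme() == 'https'"
      message: "endpoint must be an https URL."
    # CEL extended string library: lowerAscii.
    - rule: "self.label == self.label.lowerAscii()"
      message: "label must be lowercase."
  properties:
    hosts:
      type: array
      maxItems: 20
      items:
        type: string
        maxLength: 253
    names:
      type: array
      maxItems: 20
      items:
        type: string
        maxLength: 63
    endpoint:
      type: string
      maxLength: 2048
    label:
      type: string
      maxLength: 63
```

The fields are marked as required so that each rule can read them directly; for optional fields, a guard such as `!has(self.label) || ...` would be needed.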

Resource use and limits

To prevent CEL evaluation from consuming excessive compute resources, validation rules impose some limits. These limits are based on CEL cost units, a platform and machine independent measure of execution cost. As a result, the limits are the same regardless of where they are enforced.

Estimated cost limit

CEL is, by design, non-Turing-complete in such a way that the halting problem isn’t a concern. CEL takes advantage of this design choice to include an "estimated cost" subsystem that can statically compute the worst case run time cost of any CEL expression. Validation rules are integrated with the estimated cost system and disallow CEL expressions from being included in CRDs if they have a sufficiently poor (high) estimated cost. The estimated cost limit is set quite high and typically requires an O(n²) or worse operation, across something of unbounded size, to be exceeded. Fortunately the fix is usually quite simple: because the cost system is aware of size limits declared in the CRD's schema, CRD authors can add size limits to the CRD's schema (maxItems for arrays, maxProperties for maps, maxLength for strings) to reduce the estimated cost.

Good practice:

Set maxItems, maxProperties and maxLength on all array, map (object with additionalProperties) and string types in CRD schemas! This results in lower and more accurate estimated costs and generally makes a CRD safer to use.
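A minimal sketch of this practice follows, assuming hypothetical `tags` and `labels` fields and arbitrarily chosen limits. The uniqueness rule iterates over the list inside another iteration, which is the kind of quadratic expression whose estimated cost depends heavily on the declared bounds.

```yaml
# Hypothetical schema fragment; field names and limits are illustrative.
spec:
  type: object
  required:
    - tags
  x-kubernetes-validations:
    # Nested iteration: worst-case cost grows with the square of the list
    # size, so the maxItems bound below keeps the estimate finite and small.
    - rule: "self.tags.all(t, self.tags.exists_one(u, u == t))"
      message: "tags must be unique."
  properties:
    tags:
      type: array
      maxItems: 50          # bounds the number of list elements
      items:
        type: string
        maxLength: 64       # bounds the size of each string
    labels:
      type: object
      maxProperties: 20     # bounds the number of map entries
      additionalProperties:
        type: string
        maxLength: 256
```

Without `maxItems` on `tags`, the estimator would have to assume an unbounded list, and a rule of this shape is the sort that could be rejected at CRD registration.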

Runtime cost limits for CRD validation rules

In addition to the estimated cost limit, CEL keeps track of actual cost while evaluating a CEL expression and will halt execution of the expression if a limit is exceeded.

With the estimated cost limit already in place, the runtime cost limit is rarely encountered. But it is possible. For example, it might be encountered for a large resource composed entirely of a single large list and a validation rule that is either evaluated on each element in the list, or traverses the entire list.

CRD authors can ensure the runtime cost limit will not be exceeded in much the same way the estimated cost limit is avoided: by setting maxItems, maxProperties and maxLength on array, map and string types.

Adoption and Related work

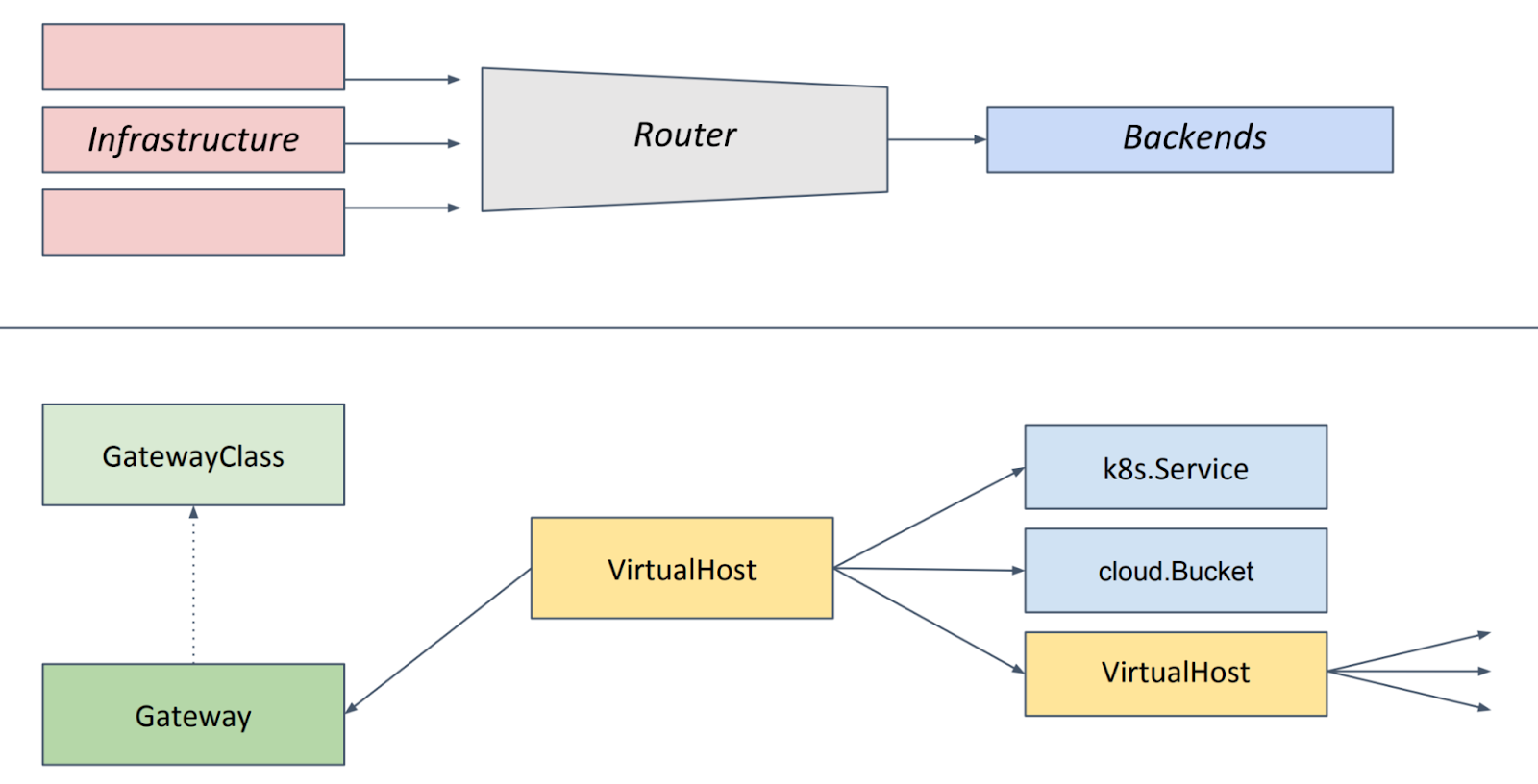

This feature has been turned on by default since Kubernetes 1.25 and finally graduated to GA in Kubernetes 1.29. It raised a lot of interest and is now widely used in the Kubernetes ecosystem. We are excited to share that the Gateway API was able to replace all of its previously used validating webhooks with this feature.

Since CEL was introduced into Kubernetes, we have been excited to extend it to other areas, including the admission chain and authorization configuration. A separate blog post will introduce these in more detail.

We look forward to working with the community on the adoption of CRD Validation Rules now that the feature has reached general availability.

Acknowledgements

Special thanks to Joe Betz, Kermit Alexander, Ben Luddy, Jordan Liggitt, David Eads, Daniel Smith, Dr. Stefan Schimanski, Leila Jalali and everyone who contributed to CRD Validation Rules!

By Cici Huang – Software Engineer