Posted by Shaina Mehta and Kristen Borg, Program Managers

This week marks the beginning of the premier annual Computer Vision and Pattern Recognition conference (CVPR 2022), held both in person in New Orleans, LA and virtually. As a leader in computer vision research and a Platinum Sponsor, Google will have a strong presence across CVPR 2022 with over 80 papers being presented at the main conference and active involvement in a number of conference workshops and tutorials.

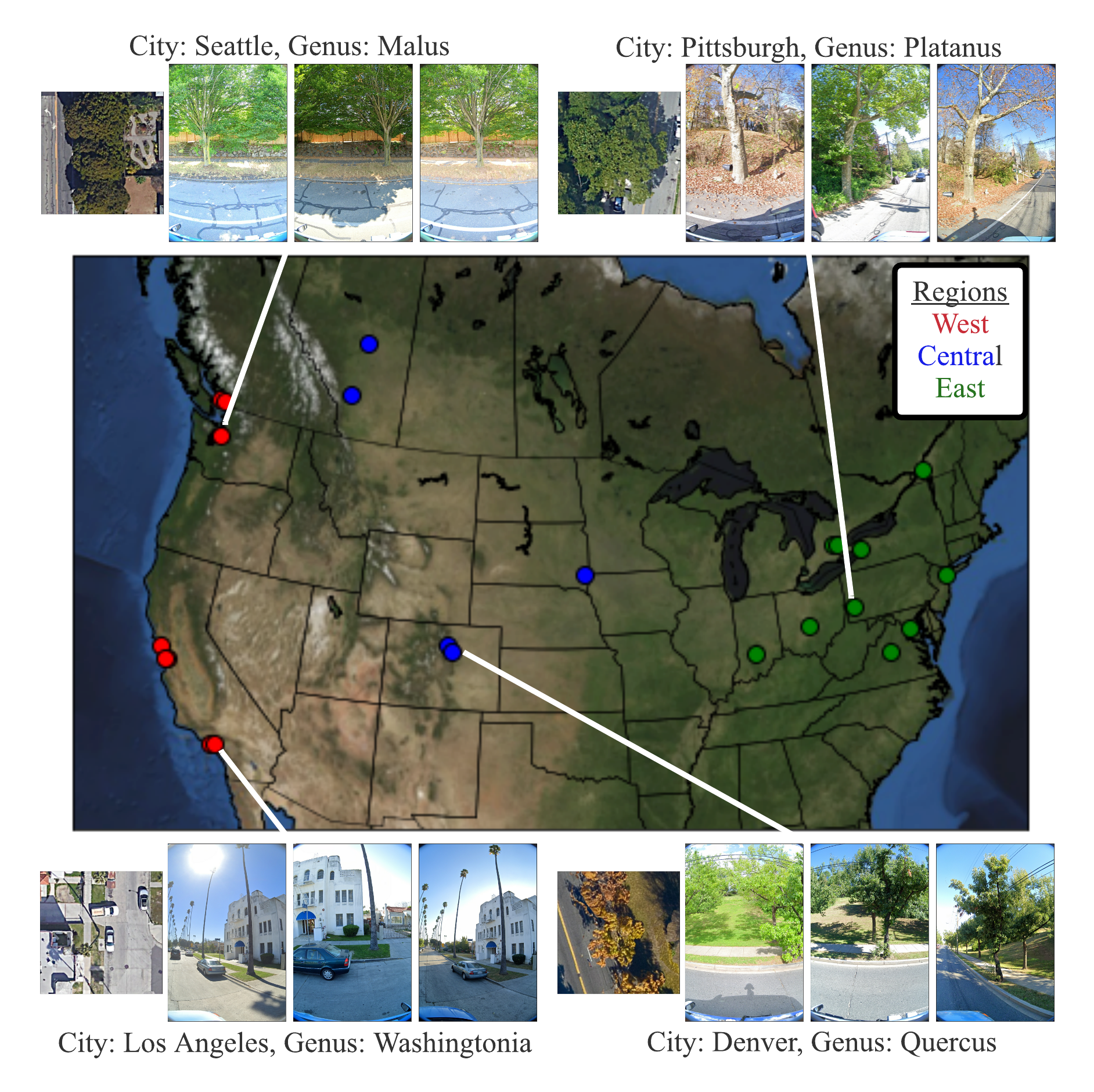

If you are attending CVPR this year, please stop by our booth and chat with our researchers who are actively exploring the latest machine learning techniques for application to various areas of machine perception. Our researchers will also be available to talk about and demo several recent efforts, including on-device ML applications with MediaPipe, the Auto Arborist Dataset for urban forest monitoring, and much more.

You can also learn more about our research being presented at CVPR 2022 in the list below (Google affiliations in bold).

Organizing Committee

Tutorials Chairs

Include: Boqing Gong

Website Chairs

Include: AJ Piergiovanni

Area Chairs

Include: Alireza Fathi, Cordelia Schmid, Deqing Sun, Jonathan Barron, Michael Ryoo, Supasorn Suwajanakorn, Susanna Ricco

Diversity, Equity, and Inclusion Chairs

Include: Noah Snavely

Panel Discussion: Embodied Computer Vision

Panelists include: Michael Ryoo

Publications

Learning to Prompt for Continual Learning (see blog post)

Zifeng Wang*, Zizhao Zhang, Chen-Yu Lee, Han Zhang, Ruoxi Sun, Xiaoqi Ren, Guolong Su, Vincent Perot, Jennifer Dy, Tomas Pfister

GCR: Gradient Coreset Based Replay Buffer Selection for Continual Learning

Rishabh Tiwari, Krishnateja Killamsetty, Rishabh Iyer, Pradeep Shenoy

Zero-Shot Text-Guided Object Generation with Dream Fields

Ajay Jain, Ben Mildenhall, Jonathan T. Barron, Pieter Abbeel, Ben Poole

Towards End-to-End Unified Scene Text Detection and Layout Analysis

Shangbang Long, Siyang Qin, Dmitry Panteleev, Alessandro Bissacco, Yasuhisa Fujii, Michalis Raptis

FLOAT: Factorized Learning of Object Attributes for Improved Multi-object Multi-part Scene Parsing

Rishubh Singh, Pranav Gupta, Pradeep Shenoy, Ravikiran Sarvadevabhatla

LOLNeRF: Learn from One Look

Daniel Rebain, Mark Matthews, Kwang Moo Yi, Dmitry Lagun, Andrea Tagliasacchi

Photorealistic Monocular 3D Reconstruction of Humans Wearing Clothing

Thiemo Alldieck, Mihai Zanfir, Cristian Sminchisescu

Learning Local Displacements for Point Cloud Completion

Yida Wang, David Joseph Tan, Nassir Navab, Federico Tombari

Density-Preserving Deep Point Cloud Compression

Yun He, Xinlin Ren, Danhang Tang, Yinda Zhang, Xiangyang Xue, Yanwei Fu

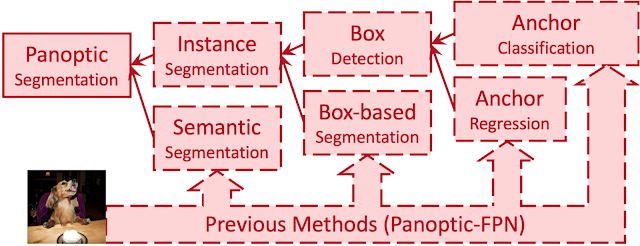

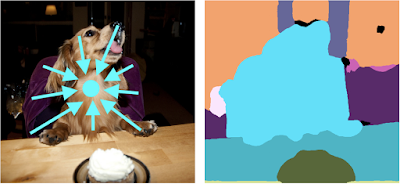

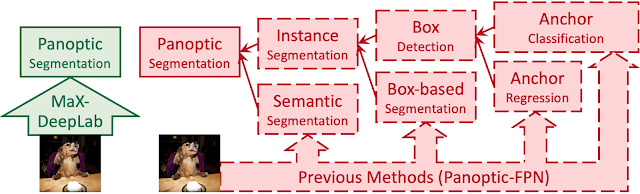

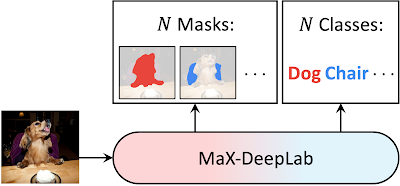

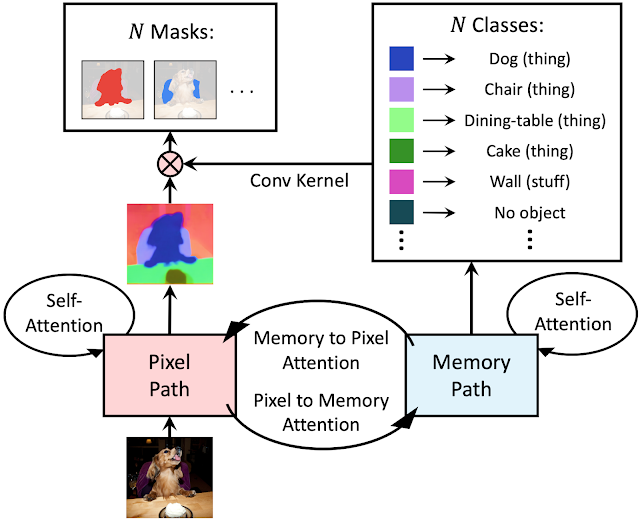

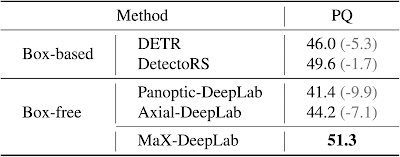

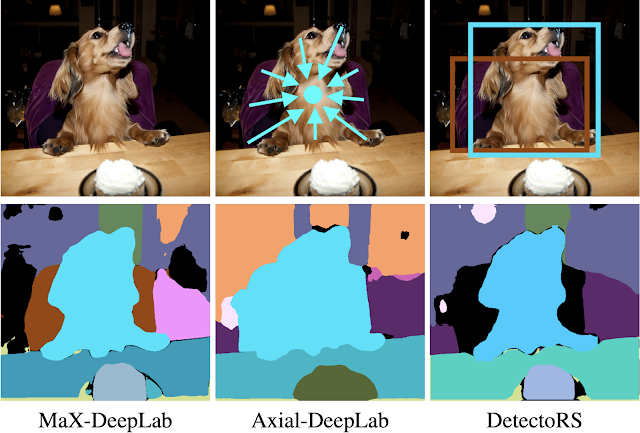

CMT-DeepLab: Clustering Mask Transformers for Panoptic Segmentation

Qihang Yu*, Huiyu Wang, Dahun Kim, Siyuan Qiao, Maxwell Collins, Yukun Zhu, Hartwig Adam, Alan Yuille, Liang-Chieh Chen

Deformable Sprites for Unsupervised Video Decomposition

Vickie Ye, Zhengqi Li, Richard Tucker, Angjoo Kanazawa, Noah Snavely

Learning with Neighbor Consistency for Noisy Labels

Ahmet Iscen, Jack Valmadre, Anurag Arnab, Cordelia Schmid

Multiview Transformers for Video Recognition

Shen Yan, Xuehan Xiong, Anurag Arnab, Zhichao Lu, Mi Zhang, Chen Sun, Cordelia Schmid

Kubric: A Scalable Dataset Generator

Klaus Greff, Francois Belletti, Lucas Beyer, Carl Doersch, Yilun Du, Daniel Duckworth, David J. Fleet, Dan Gnanapragasam, Florian Golemo, Charles Herrmann, Thomas Kipf, Abhijit Kundu, Dmitry Lagun, Issam Laradji, Hsueh-Ti (Derek) Liu, Henning Meyer, Yishu Miao, Derek Nowrouzezahrai, Cengiz Oztireli, Etienne Pot, Noha Radwan*, Daniel Rebain, Sara Sabour, Mehdi S. M. Sajjadi, Matan Sela, Vincent Sitzmann, Austin Stone, Deqing Sun, Suhani Vora, Ziyu Wang, Tianhao Wu, Kwang Moo Yi, Fangcheng Zhong, Andrea Tagliasacchi

3D Moments from Near-Duplicate Photos

Qianqian Wang, Zhengqi Li, David Salesin, Noah Snavely, Brian Curless, Janne Kontkanen

Mip-NeRF 360: Unbounded Anti-Aliased Neural Radiance Fields

Jonathan T. Barron, Ben Mildenhall, Dor Verbin, Pratul P. Srinivasan, Peter Hedman

RegNeRF: Regularizing Neural Radiance Fields for View Synthesis from Sparse Inputs

Michael Niemeyer*, Jonathan T. Barron, Ben Mildenhall, Mehdi S. M. Sajjadi, Andreas Geiger, Noha Radwan*

Ref-NeRF: Structured View-Dependent Appearance for Neural Radiance Fields

Dor Verbin, Peter Hedman, Ben Mildenhall, Todd Zickler, Jonathan T. Barron, Pratul P. Srinivasan

IRON: Inverse Rendering by Optimizing Neural SDFs and Materials from Photometric Images

Kai Zhang, Fujun Luan, Zhengqi Li, Noah Snavely

MAXIM: Multi-Axis MLP for Image Processing

Zhengzhong Tu*, Hossein Talebi, Han Zhang, Feng Yang, Peyman Milanfar, Alan Bovik, Yinxiao Li

Restormer: Efficient Transformer for High-Resolution Image Restoration

Syed Waqas Zamir, Aditya Arora, Salman Khan, Munawar Hayat, Fahad Shahbaz Khan, Ming-Hsuan Yang

Burst Image Restoration and Enhancement

Akshay Dudhane, Syed Waqas Zamir, Salman Khan, Fahad Shahbaz Khan, Ming-Hsuan Yang

Neural RGB-D Surface Reconstruction

Dejan Azinović, Ricardo Martin-Brualla, Dan B Goldman, Matthias Nießner, Justus Thies

Scene Representation Transformer: Geometry-Free Novel View Synthesis Through Set-Latent Scene Representations

Mehdi S. M. Sajjadi, Henning Meyer, Etienne Pot, Urs Bergmann, Klaus Greff, Noha Radwan*, Suhani Vora, Mario Lučić, Daniel Duckworth, Alexey Dosovitskiy*, Jakob Uszkoreit*, Thomas Funkhouser, Andrea Tagliasacchi*

ZebraPose: Coarse to Fine Surface Encoding for 6DoF Object Pose Estimation

Yongzhi Su, Mahdi Saleh, Torben Fetzer, Jason Rambach, Nassir Navab, Benjamin Busam, Didier Stricker, Federico Tombari

MetaPose: Fast 3D Pose from Multiple Views without 3D Supervision

Ben Usman, Andrea Tagliasacchi, Kate Saenko, Avneesh Sud

GPV-Pose: Category-Level Object Pose Estimation via Geometry-Guided Point-wise Voting

Yan Di, Ruida Zhang, Zhiqiang Lou, Fabian Manhardt, Xiangyang Ji, Nassir Navab, Federico Tombari

Rethinking Deep Face Restoration

Yang Zhao*, Yu-Chuan Su, Chun-Te Chu, Yandong Li, Marius Renn, Yukun Zhu, Changyou Chen, Xuhui Jia

Transferability Metrics for Selecting Source Model Ensembles

Andrea Agostinelli, Jasper Uijlings, Thomas Mensink, Vittorio Ferrari

Robust Fine-Tuning of Zero-Shot Models

Mitchell Wortsman, Gabriel Ilharco, Jong Wook Kim, Mike Li, Simon Kornblith, Rebecca Roelofs, Raphael Gontijo Lopes, Hannaneh Hajishirzi, Ali Farhadi, Hongseok Namkoong, Ludwig Schmidt

Block-NeRF: Scalable Large Scene Neural View Synthesis

Matthew Tancik, Vincent Casser, Xinchen Yan, Sabeek Pradhan, Ben Mildenhall, Pratul P. Srinivasan, Jonathan T. Barron, Henrik Kretzschmar

Light Field Neural Rendering

Mohammad Suhail*, Carlos Esteves, Leonid Sigal, Ameesh Makadia

Transferability Estimation Using Bhattacharyya Class Separability

Michal Pándy, Andrea Agostinelli, Jasper Uijlings, Vittorio Ferrari, Thomas Mensink

Matching Feature Sets for Few-Shot Image Classification

Arman Afrasiyabi, Hugo Larochelle, Jean-François Lalonde, Christian Gagné

Which Model to Transfer? Finding the Needle in the Growing Haystack

Cedric Renggli, André Susano Pinto, Luka Rimanic, Joan Puigcerver, Carlos Riquelme, Ce Zhang, Mario Lučić

Auditing Privacy Defenses in Federated Learning via Generative Gradient Leakage

Zhuohang Li, Jiaxin Zhang, Luyang Liu, Jian Liu

Estimating Example Difficulty Using Variance of Gradients

Chirag Agarwal, Daniel D'souza, Sara Hooker

More Than Words: In-the-Wild Visually-Driven Prosody for Text-to-Speech (see blog post)

Michael Hassid, Michelle Tadmor Ramanovich, Brendan Shillingford, Miaosen Wang, Ye Jia, Tal Remez

Robust Outlier Detection by De-Biasing VAE Likelihoods

Kushal Chauhan, Barath Mohan U, Pradeep Shenoy, Manish Gupta, Devarajan Sridharan

Deep 3D-to-2D Watermarking: Embedding Messages in 3D Meshes and Extracting Them from 2D Renderings

Innfarn Yoo, Huiwen Chang, Xiyang Luo, Ondrej Stava, Ce Liu*, Peyman Milanfar, Feng Yang

Knowledge Distillation: A Good Teacher Is Patient and Consistent

Lucas Beyer, Xiaohua Zhai, Amélie Royer*, Larisa Markeeva*, Rohan Anil, Alexander Kolesnikov

Urban Radiance Fields

Konstantinos Rematas, Andrew Liu, Pratul P. Srinivasan, Jonathan T. Barron, Andrea Tagliasacchi, Thomas Funkhouser, Vittorio Ferrari

Manifold Learning Benefits GANs

Yao Ni, Piotr Koniusz, Richard Hartley, Richard Nock

MaskGIT: Masked Generative Image Transformer

Huiwen Chang, Han Zhang, Lu Jiang, Ce Liu*, William T. Freeman

InOut: Diverse Image Outpainting via GAN Inversion

Yen-Chi Cheng, Chieh Hubert Lin, Hsin-Ying Lee, Jian Ren, Sergey Tulyakov, Ming-Hsuan Yang

Scaling Vision Transformers (see blog post)

Xiaohua Zhai, Alexander Kolesnikov, Neil Houlsby, Lucas Beyer

Fine-Tuning Image Transformers Using Learnable Memory

Mark Sandler, Andrey Zhmoginov, Max Vladymyrov, Andrew Jackson

PokeBNN: A Binary Pursuit of Lightweight Accuracy

Yichi Zhang*, Zhiru Zhang, Lukasz Lew

Bending Graphs: Hierarchical Shape Matching Using Gated Optimal Transport

Mahdi Saleh, Shun-Cheng Wu, Luca Cosmo, Nassir Navab, Benjamin Busam, Federico Tombari

Uncertainty-Aware Deep Multi-View Photometric Stereo

Berk Kaya, Suryansh Kumar, Carlos Oliveira, Vittorio Ferrari, Luc Van Gool

Depth-Supervised NeRF: Fewer Views and Faster Training for Free

Kangle Deng, Andrew Liu, Jun-Yan Zhu, Deva Ramanan

Dense Depth Priors for Neural Radiance Fields from Sparse Input Views

Barbara Roessle, Jonathan T. Barron, Ben Mildenhall, Pratul P. Srinivasan, Matthias Nießner

Trajectory Optimization for Physics-Based Reconstruction of 3D Human Pose from Monocular Video

Erik Gärtner, Mykhaylo Andriluka, Hongyi Xu, Cristian Sminchisescu

Differentiable Dynamics for Articulated 3D Human Motion Reconstruction

Erik Gärtner, Mykhaylo Andriluka, Erwin Coumans, Cristian Sminchisescu

Panoptic Neural Fields: A Semantic Object-Aware Neural Scene Representation

Abhijit Kundu, Kyle Genova, Xiaoqi Yin, Alireza Fathi, Caroline Pantofaru, Leonidas J. Guibas, Andrea Tagliasacchi, Frank Dellaert, Thomas Funkhouser

Pyramid Adversarial Training Improves ViT Performance

Charles Herrmann, Kyle Sargent, Lu Jiang, Ramin Zabih, Huiwen Chang, Ce Liu*, Dilip Krishnan, Deqing Sun

Proper Reuse of Image Classification Features Improves Object Detection

Cristina Vasconcelos, Vighnesh Birodkar, Vincent Dumoulin

SOMSI: Spherical Novel View Synthesis with Soft Occlusion Multi-Sphere Images

Tewodros Habtegebrial, Christiano Gava, Marcel Rogge, Didier Stricker, Varun Jampani

TubeFormer-DeepLab: Video Mask Transformer

Dahun Kim, Jun Xie, Huiyu Wang, Siyuan Qiao, Qihang Yu, Hong-Seok Kim, Hartwig Adam, In So Kweon, Liang-Chieh Chen

Contextualized Spatio-Temporal Contrastive Learning with Self-Supervision

Liangzhe Yuan, Rui Qian*, Yin Cui, Boqing Gong, Florian Schroff, Ming-Hsuan Yang, Hartwig Adam, Ting Liu

When Does Contrastive Visual Representation Learning Work?

Elijah Cole, Xuan Yang, Kimberly Wilber, Oisin Mac Aodha, Serge Belongie

Less Is More: Generating Grounded Navigation Instructions from Landmarks

Su Wang, Ceslee Montgomery, Jordi Orbay, Vighnesh Birodkar, Aleksandra Faust, Izzeddin Gur, Natasha Jaques, Austin Waters, Jason Baldridge, Peter Anderson

Forecasting Characteristic 3D Poses of Human Actions

Christian Diller, Thomas Funkhouser, Angela Dai

BEHAVE: Dataset and Method for Tracking Human Object Interactions

Bharat Lal Bhatnagar, Xianghui Xie, Ilya A. Petrov, Cristian Sminchisescu, Christian Theobalt, Gerard Pons-Moll

Motion-from-Blur: 3D Shape and Motion Estimation of Motion-Blurred Objects in Videos

Denys Rozumnyi, Martin R. Oswald, Vittorio Ferrari, Marc Pollefeys

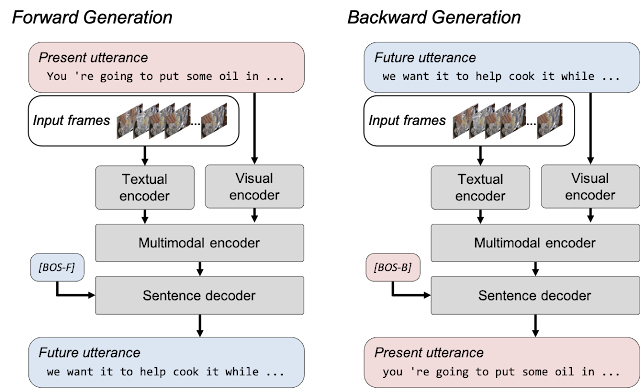

End-to-End Generative Pretraining for Multimodal Video Captioning (see blog post)

Paul Hongsuck Seo, Arsha Nagrani, Anurag Arnab, Cordelia Schmid

Uncertainty-Aware Adaptation for Self-Supervised 3D Human Pose Estimation

Jogendra Nath Kundu, Siddharth Seth, Pradyumna YM, Varun Jampani, Anirban Chakraborty, R. Venkatesh Babu

Learning ABCs: Approximate Bijective Correspondence for Isolating Factors of Variation with Weak Supervision

Kieran A. Murphy, Varun Jampani, Srikumar Ramalingam, Ameesh Makadia

HumanNeRF: Free-Viewpoint Rendering of Moving People from Monocular Video

Chung-Yi Weng, Brian Curless, Pratul P. Srinivasan, Jonathan T. Barron, Ira Kemelmacher-Shlizerman

Deblurring via Stochastic Refinement

Jay Whang*, Mauricio Delbracio, Hossein Talebi, Chitwan Saharia, Alexandros G. Dimakis, Peyman Milanfar

NeRF in the Dark: High Dynamic Range View Synthesis from Noisy Raw Images

Ben Mildenhall, Peter Hedman, Ricardo Martin-Brualla, Pratul P. Srinivasan, Jonathan T. Barron

CoNeRF: Controllable Neural Radiance Fields

Kacper Kania, Kwang Moo Yi, Marek Kowalski, Tomasz Trzciński, Andrea Tagliasacchi

A Conservative Approach for Unbiased Learning on Unknown Biases

Myeongho Jeon, Daekyung Kim, Woochul Lee, Myungjoo Kang, Joonseok Lee

DeepFusion: Lidar-Camera Deep Fusion for Multi-Modal 3D Object Detection (see blog post)

Yingwei Li*, Adams Wei Yu, Tianjian Meng, Ben Caine, Jiquan Ngiam, Daiyi Peng, Junyang Shen, Yifeng Lu, Denny Zhou, Quoc V. Le, Alan Yuille, Mingxing Tan

Video Frame Interpolation Transformer

Zhihao Shi, Xiangyu Xu, Xiaohong Liu, Jun Chen, Ming-Hsuan Yang

Global Matching with Overlapping Attention for Optical Flow Estimation

Shiyu Zhao, Long Zhao, Zhixing Zhang, Enyu Zhou, Dimitris Metaxas

LiT: Zero-Shot Transfer with Locked-image Text Tuning (see blog post)

Xiaohua Zhai, Xiao Wang, Basil Mustafa, Andreas Steiner, Daniel Keysers, Alexander Kolesnikov, Lucas Beyer

Are Multimodal Transformers Robust to Missing Modality?

Mengmeng Ma, Jian Ren, Long Zhao, Davide Testuggine, Xi Peng

3D-VField: Adversarial Augmentation of Point Clouds for Domain Generalization in 3D Object Detection

Alexander Lehner, Stefano Gasperini, Alvaro Marcos-Ramiro, Michael Schmidt, Mohammad-Ali Nikouei Mahani, Nassir Navab, Benjamin Busam, Federico Tombari

SHIFT: A Synthetic Driving Dataset for Continuous Multi-Task Domain Adaptation

Tao Sun, Mattia Segu, Janis Postels, Yuxuan Wang, Luc Van Gool, Bernt Schiele, Federico Tombari, Fisher Yu

H4D: Human 4D Modeling by Learning Neural Compositional Representation

Boyan Jiang, Yinda Zhang, Xingkui Wei, Xiangyang Xue, Yanwei Fu

Gravitationally Lensed Black Hole Emission Tomography

Aviad Levis, Pratul P. Srinivasan, Andrew A. Chael, Ren Ng, Katherine L. Bouman

Deep Saliency Prior for Reducing Visual Distraction

Kfir Aberman, Junfeng He, Yossi Gandelsman, Inbar Mosseri, David E. Jacobs, Kai Kohlhoff, Yael Pritch, Michael Rubinstein

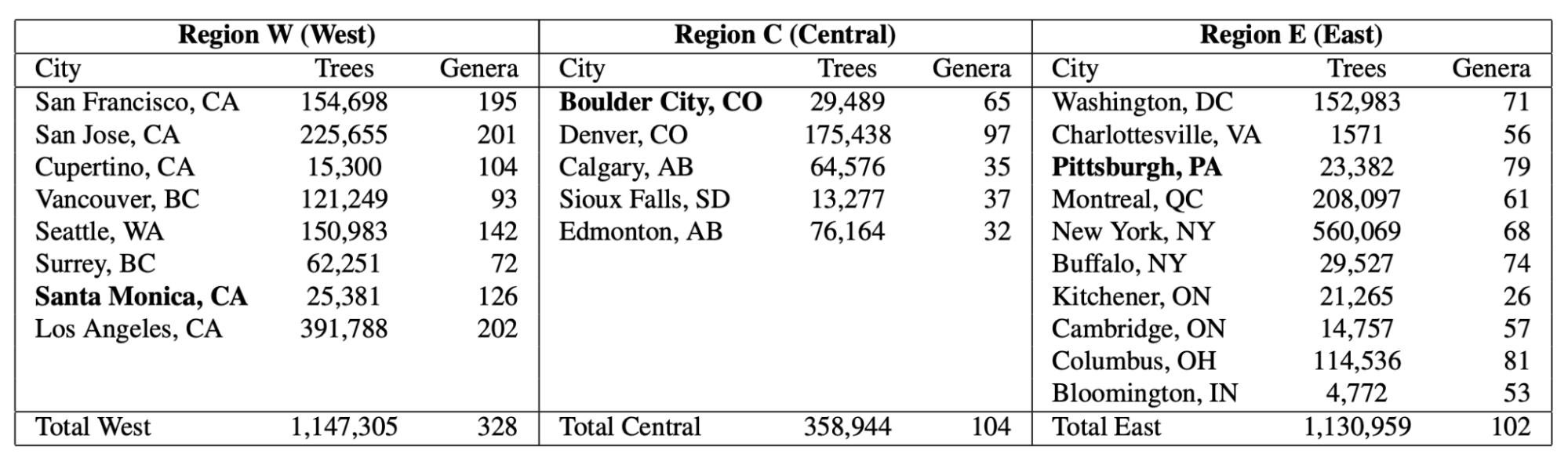

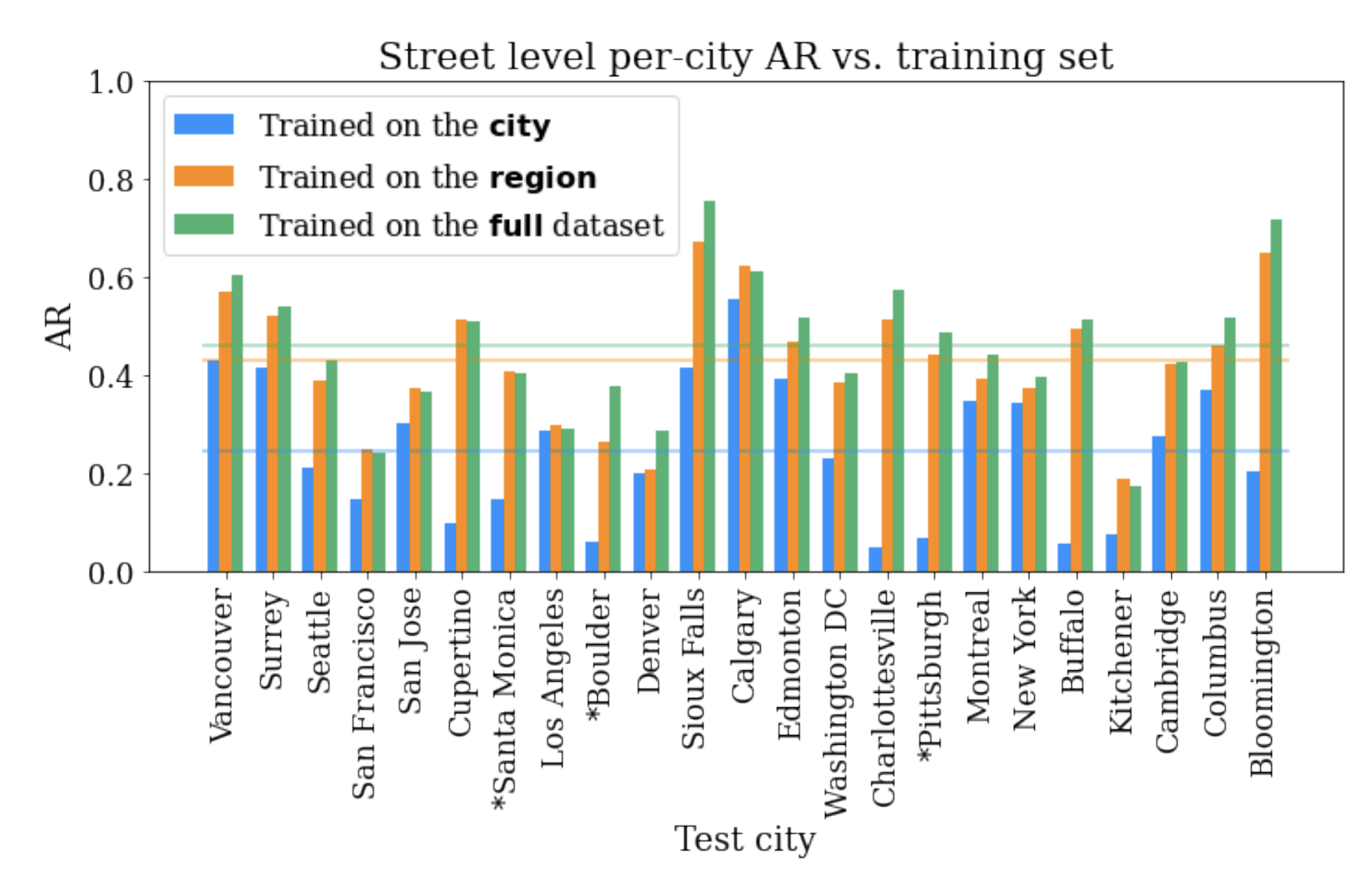

The Auto Arborist Dataset: A Large-Scale Benchmark for Multiview Urban Forest Monitoring Under Domain Shift

Sara Beery, Guanhang Wu, Trevor Edwards, Filip Pavetic, Bo Majewski, Shreyasee Mukherjee, Stanley Chan, John Morgan, Vivek Rathod, Jonathan Huang

Workshops

Ethical Considerations in Creative Applications of Computer Vision

Chairs and Advisors: Negar Rostamzadeh, Fernando Diaz, Emily Denton, Mark Diaz, Jason Baldridge

Dynamic Neural Networks Meet Computer Vision

Invited Speaker: Barret Zoph

Precognition: Seeing Through the Future

Organizer: Utsav Prabhu

Invited Speaker: Sella Nevo

Computer Vision in the Built Environment for the Design, Construction, and Operation of Buildings

Invited Speakers: Thomas Funkhouser, Federico Tombari

Neural Architecture Search: Lightweight NAS Challenge

Invited Speaker: Barret Zoph

Transformers in Vision

Organizer: Lucas Beyer

Invited Speakers and Panelists: Alexander Kolesnikov, Mathilde Caron, Arsha Nagrani, Lucas Beyer

Challenge on Learned Image Compression

Organizers: George Toderici, Johannes Balle, Eirikur Agustsson, Nick Johnston, Fabian Mentzer, Luca Versari

Invited Speaker: Debargha Mukherjee

Embodied AI

Organizers: Anthony Francis, Sören Pirk, Alex Ku, Fei Xia, Peter Anderson

Scientific Advisory Board Members: Alexander Toshev, Jie Tan

Invited Speaker: Carolina Parada

Sight and Sound

Organizers: Arsha Nagrani, William Freeman

New Trends in Image Restoration and Enhancement

Organizers: Ming-Hsuan Yang, Vivek Kwatra, George Toderici

EarthVision: Large Scale Computer Vision for Remote Sensing Imagery

Invited Speaker: John Quinn

LatinX in Computer Vision Research

Organizer: Ruben Villegas

Fine-Grained Visual Categorization

Organizer: Kimberly Wilber

The Art of Robustness: Devil and Angel in Adversarial Machine Learning

Organizer: Florian Tramèr

Invited Speaker: Nicholas Carlini

AI for Content Creation

Organizers: Deqing Sun, Huiwen Chang, Lu Jiang

Invited Speaker: Chitwan Saharia

LOng-form VidEo Understanding

Invited Speaker: Cordelia Schmid

Visual Perception and Learning in an Open World

Invited Speaker: Rahul Sukthankar

Media Forensics

Organizer: Christoph Bregler

Technical Committee Members: Shruti Agarwal, Scott McCloskey, Peng Zhou

Vision Datasets Understanding

Organizer: José Lezama

Embedded Vision

Invited Speaker: Matthias Grundmann

Federated Learning for Computer Vision

Invited Speaker: Zheng Xu

Large Scale Holistic Video Understanding

Organizer: David Ross

Invited Speaker: Anurag Arnab

Learning With Limited Labelled Data for Image and Video Understanding

Invited Speaker: Hugo Larochelle

Bridging the Gap Between Computational Photography and Visual Recognition

Invited Speaker: Xiaohua Zhai

Explainable Artificial Intelligence for Computer Vision

Invited Speaker: Been Kim

Robustness in Sequential Data

Organizers: Sayna Ebrahimi, Kevin Murphy

Invited Speakers: Sayna Ebrahimi, Balaji Lakshminarayanan

Sketch-Oriented Deep Learning

Organizer: David Ha

Invited Speaker: Jonas Jongejan

Multimodal Learning and Applications

Invited Speaker: Cordelia Schmid

Computational Cameras and Displays

Organizer: Tali Dekel

Invited Speaker: Peyman Milanfar

Artificial Social Intelligence

Invited Speaker: Natasha Jaques

VizWiz Grand Challenge: Algorithms to Assist People Who Are Blind

Invited Speaker and Panelist: Andrew Howard

Image Matching: Local Features & Beyond

Organizer: Eduard Trulls

Multi-Agent Behavior: Representation, Modeling, Measurement, and Applications

Organizer: Ting Liu

Efficient Deep Learning for Computer Vision

Organizers: Pete Warden, Andrew Howard, Grace Chu, Jaeyoun Kim

Gaze Estimation and Prediction in the Wild

Organizer: Thabo Beeler

Tutorials

Denoising Diffusion-Based Generative Modeling: Foundations and Applications

Invited Speaker: Ruiqi Gao

Algorithmic Fairness: Why It's Hard and Why It's Interesting

Invited Speaker: Sanmi Koyejo

Beyond Convolutional Neural Networks

Invited Speakers: Neil Houlsby, Alexander Kolesnikov, Xiaohua Zhai

Joint Ego4D and Egocentric Perception, Interaction & Computing

Invited Speaker: Vittorio Ferrari

Deep AUC Maximization

Invited Speaker: Tianbao Yang

Vision-Based Robot Learning

Organizers: Michael S. Ryoo, Andy Zeng, Pete Florence

Graph Machine Learning for Visual Computing

Organizer: Federico Tombari

Invited Speakers: Federico Tombari, Fabian Manhardt

*Work done while at Google.