Posted by Dave Burke, VP of Engineering

We're releasing the first Developer Preview of Android 15 today so you, our developers, can collaborate with us to build a better Android.

Android 15 continues our work to build a platform that helps improve your productivity while giving you new capabilities to produce superior media experiences, minimize battery impact, maximize smooth app performance, and protect user privacy and security, all on the most diverse lineup of devices out there.

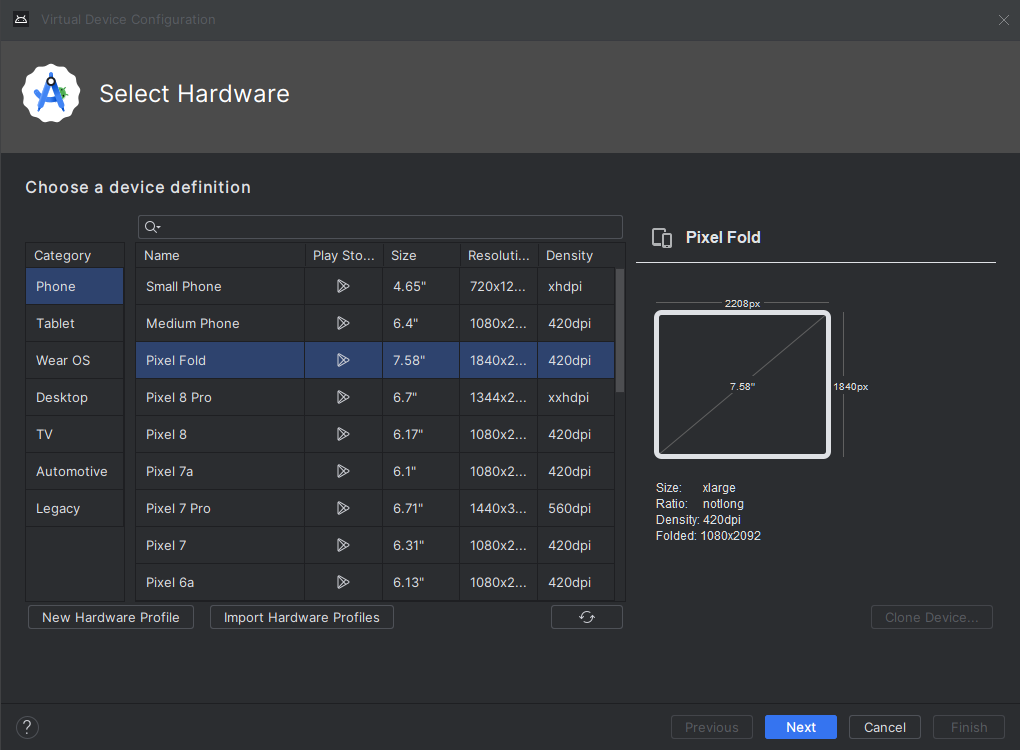

Android enables your apps to take advantage of premium device hardware, including high-end camera capabilities, powerful GPUs, dazzling displays, and AI processing. The demand for large-screen devices, including tablets, foldables and flippables, continues to grow, offering an opportunity to reach high-value users. Also, Android is committed to providing tooling and libraries to help your apps take advantage of the latest advances in AI.

Your feedback on the Android 15 Developer Preview and QPR beta program plays a key role in helping Android continuously improve. The Android 15 developer site has more information about the preview, including downloads for Pixel and detailed documentation about changes. This preview is just the beginning, and we’ll have lots more to share as we move through the release cycle. Thank you in advance for your help in making Android a platform that works for everyone.

Protecting user privacy and security

Android is constantly working to create solutions that maximize user privacy and security.

Privacy Sandbox on Android

Android 15 brings Android Ad Services up to extension level 10, incorporating the latest version of the Privacy Sandbox on Android, part of our work to develop new technologies that improve user privacy and enable effective, personalized advertising experiences for mobile apps. Our website has more about the Privacy Sandbox on Android developer preview and beta programs to help you get started.

Health Connect

Android 15 integrates Android 14 extension level 10 for Health Connect by Android, a secure and centralized platform to manage and share app-collected health and fitness data. This update adds support for new data types across fitness, nutrition, and more.

File integrity

Android 15's FileIntegrityManager includes new APIs that tap into the power of the fs-verity feature in the Linux kernel. With fs-verity, files can be protected by custom cryptographic signatures, helping you ensure they haven't been tampered with or corrupted. This leads to enhanced security, protecting against potential malware or unauthorized file modifications that could compromise your app's functionality or data.
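To make the tamper-detection idea concrete: fs-verity works by hashing a file's data blocks into a Merkle (hash) tree, so a change to any block changes the root hash. The sketch below is plain Java and does not use any Android API. It is a simplified illustration only: the class and method names are hypothetical, the 4096-byte block size and SHA-256 are assumptions made for the example, and the output is not the real fs-verity digest format.

```java
import java.security.MessageDigest;
import java.security.NoSuchAlgorithmException;
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;

// Hypothetical illustration of a Merkle-tree root hash; not the fs-verity on-disk format.
final class MerkleSketch {
    static final int BLOCK_SIZE = 4096;          // data block size assumed for this sketch
    static final int HASH_SIZE = 32;             // SHA-256 output size in bytes
    static final int FANOUT = BLOCK_SIZE / HASH_SIZE; // child hashes combined per tree node

    static byte[] sha256(byte[] data, int offset, int length) {
        try {
            MessageDigest md = MessageDigest.getInstance("SHA-256");
            md.update(data, offset, length);
            return md.digest();
        } catch (NoSuchAlgorithmException e) {
            throw new IllegalStateException(e);
        }
    }

    // Hashes each data block, then repeatedly hashes groups of child hashes
    // until a single root hash remains.
    static byte[] rootHash(byte[] content) {
        List<byte[]> level = new ArrayList<>();
        for (int off = 0; off < content.length; off += BLOCK_SIZE) {
            level.add(sha256(content, off, Math.min(BLOCK_SIZE, content.length - off)));
        }
        if (level.isEmpty()) {
            level.add(sha256(content, 0, 0)); // empty input: hash of zero bytes
        }
        while (level.size() > 1) {
            List<byte[]> next = new ArrayList<>();
            for (int i = 0; i < level.size(); i += FANOUT) {
                int end = Math.min(i + FANOUT, level.size());
                byte[] joined = new byte[(end - i) * HASH_SIZE];
                for (int j = i; j < end; j++) {
                    System.arraycopy(level.get(j), 0, joined, (j - i) * HASH_SIZE, HASH_SIZE);
                }
                next.add(sha256(joined, 0, joined.length));
            }
            level = next;
        }
        return level.get(0);
    }

    public static void main(String[] args) {
        byte[] original = new byte[3 * BLOCK_SIZE + 100];
        Arrays.fill(original, (byte) 7);
        byte[] tampered = original.clone();
        tampered[5000] ^= 1; // flip one bit in the second block

        System.out.println("same content, same root: "
                + Arrays.equals(rootHash(original), rootHash(original.clone())));
        System.out.println("tampered content, same root: "
                + Arrays.equals(rootHash(original), rootHash(tampered)));
    }
}
```

In this sketch a single flipped bit produces a different root, which is the property that lets a verifier compare one small hash instead of re-reading the whole file.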

Partial screen sharing

Android 15 supports partial screen sharing so users can share or record just an app window rather than the entire device screen. This feature, enabled first in Android 14 QPR2, includes MediaProjection callbacks that allow your app to customize the partial screen sharing experience. Note that user consent is now required for each MediaProjection capture session.
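One thing an app might do when the size of the captured window changes (for example, from `MediaProjection.Callback#onCapturedContentResize`) is rescale the content to fit its output surface without distortion. The sketch below shows only that arithmetic in plain Java, with no Android classes. The class name, method name, and the idea of calling it from the resize callback are assumptions for illustration, not part of the platform API.

```java
// Hypothetical helper: aspect-preserving fit of captured content into an output surface.
final class CaptureFitSketch {

    // Returns {width, height} of the largest rectangle that has the captured
    // content's aspect ratio and fits inside the output surface.
    static int[] fit(int contentW, int contentH, int outW, int outH) {
        if (contentW <= 0 || contentH <= 0 || outW <= 0 || outH <= 0) {
            throw new IllegalArgumentException("all dimensions must be positive");
        }
        // Compare aspect ratios by cross-multiplication; long avoids int overflow.
        if ((long) contentW * outH >= (long) contentH * outW) {
            // Content is relatively wider than the output: width is the limit.
            int h = (int) ((long) outW * contentH / contentW);
            return new int[] {outW, Math.max(1, h)};
        } else {
            // Content is relatively taller than the output: height is the limit.
            int w = (int) ((long) outH * contentW / contentH);
            return new int[] {Math.max(1, w), outH};
        }
    }

    public static void main(String[] args) {
        // A portrait app window shown inside a landscape recording surface.
        int[] size = fit(1080, 2400, 1920, 1080);
        System.out.println(size[0] + "x" + size[1]); // prints 486x1080
    }
}
```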

Supporting creators

Android continues its work to give you access to tools and hardware that help creators bring their vision to life on Android.

In-app Camera Controls

Android 15 adds new extensions for more control over the camera hardware and its algorithms on supported devices:

- Advanced flash strength adjustments enabling precise control of flash intensity in both SINGLE and TORCH modes while capturing images.

Virtual MIDI 2.0 Devices

Android 13 added support for connecting to MIDI 2.0 devices via USB, which communicate using Universal MIDI Packets (UMP). Android 15 extends UMP support to virtual MIDI apps, enabling composition apps to control synthesizer apps as a virtual MIDI 2.0 device just like they would with a USB MIDI 2.0 device.
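For readers who have not worked with UMP before, the sketch below packs a MIDI 2.0 Note On message into its two 32-bit words, following the MIDI 2.0 channel voice layout (message type 0x4, 16-bit velocity). It is plain Java and does not touch the Android MIDI API; the class and method names are hypothetical, and the attribute type and attribute data fields are simply left at zero.

```java
// Hypothetical helper: builds a 64-bit MIDI 2.0 Note On Universal MIDI Packet.
final class UmpSketch {

    private static void requireRange(String name, int value, int max) {
        if (value < 0 || value > max) {
            throw new IllegalArgumentException(name + " must be in 0.." + max + ", got " + value);
        }
    }

    // Returns the two 32-bit words of the packet, most significant word first.
    static int[] noteOn(int group, int channel, int note, int velocity16) {
        requireRange("group", group, 0xF);
        requireRange("channel", channel, 0xF);
        requireRange("note", note, 0x7F);
        requireRange("velocity16", velocity16, 0xFFFF);

        int word0 = (0x4 << 28)      // message type: MIDI 2.0 channel voice
                | (group << 24)      // UMP group
                | (0x9 << 20)        // opcode: Note On
                | (channel << 16)    // MIDI channel
                | (note << 8);       // note number; low byte is attribute type (0 = none)
        int word1 = velocity16 << 16; // 16-bit velocity; low 16 bits are attribute data (0)
        return new int[] {word0, word1};
    }

    public static void main(String[] args) {
        int[] ump = noteOn(0, 0, 60, 0xFFFF); // middle C at full velocity
        System.out.println(String.format("%08X %08X", ump[0], ump[1])); // prints 40903C00 FFFF0000
    }
}
```

The 16-bit velocity field is one visible difference from MIDI 1.0, where velocity is limited to 7 bits.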

Performance and quality

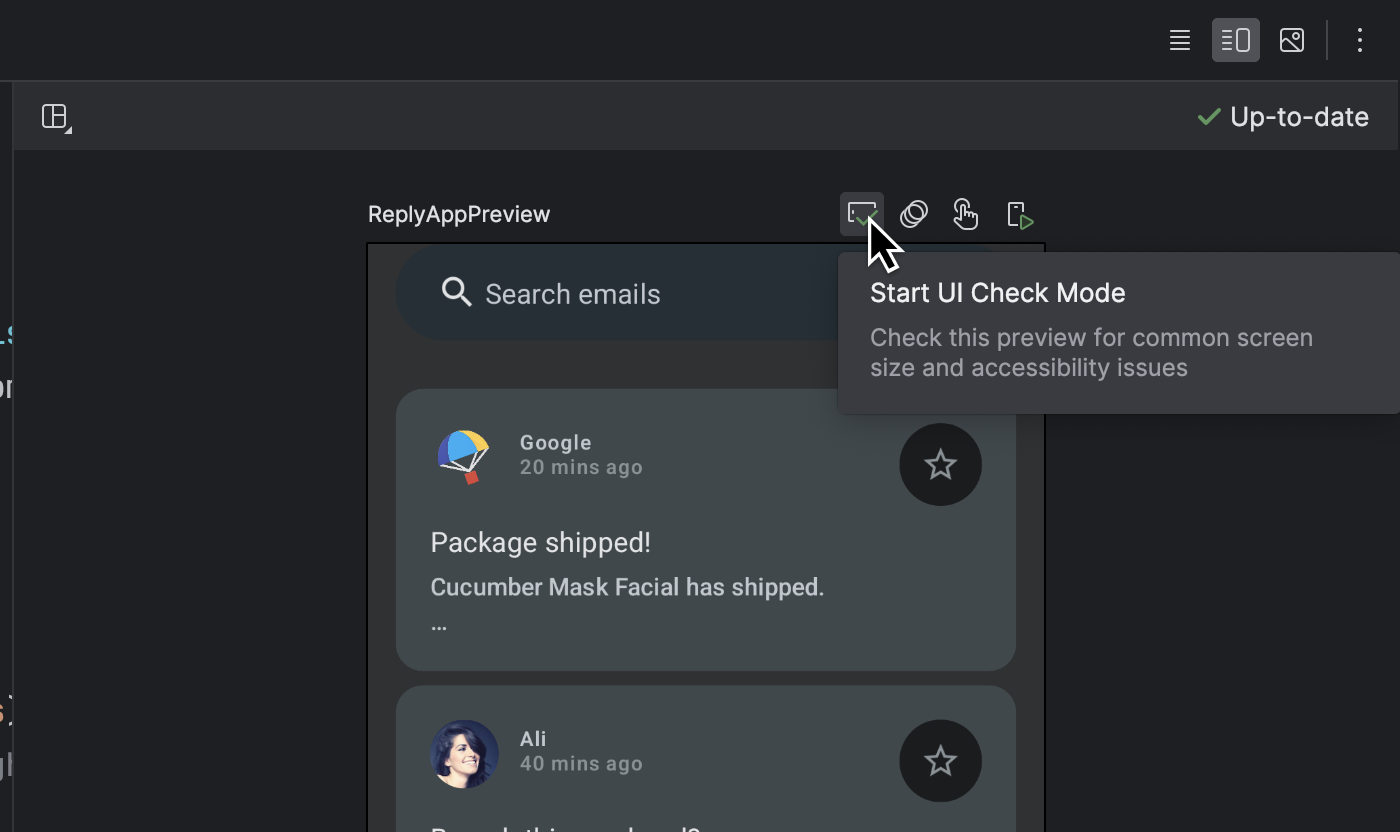

Android continues its focus on helping you improve the quality of your apps. Much of this focus is around tooling and libraries, including Jetpack Compose, Android Studio, and more.

Dynamic Performance

Android 15 continues our investment in the Android Dynamic Performance Framework (ADPF), a set of APIs that allow games and performance-intensive apps to interact more directly with the power and thermal systems of Android devices. On supported devices, Android 15 will add new ADPF capabilities:

- A power-efficiency mode for hint sessions to indicate that their associated threads should prefer power saving over performance, great for long-running background workloads.

- GPU and CPU work durations can both be reported in hint sessions, allowing the system to adjust CPU and GPU frequencies together to best meet workload demands.

To learn more about how to use ADPF in your apps and games, head over to the documentation.

Developer Productivity

Android 15 continues to add OpenJDK APIs, including quality-of-life improvements around NIO buffers, streams, security, and more. These APIs are updated on over a billion devices running Android 12+ through Google Play System updates, so you can target the latest programming features.

App compatibility

To give you more time to plan for app compatibility work, we’re letting you know our Platform Stability milestone well in advance.

At this milestone, we’ll deliver final SDK/NDK APIs and also final internal APIs and app-facing system behaviors. We’re expecting to reach Platform Stability in June 2024, and from that time you’ll have several months before the official release to do your final testing. The release timeline details are here.

Get started with Android 15

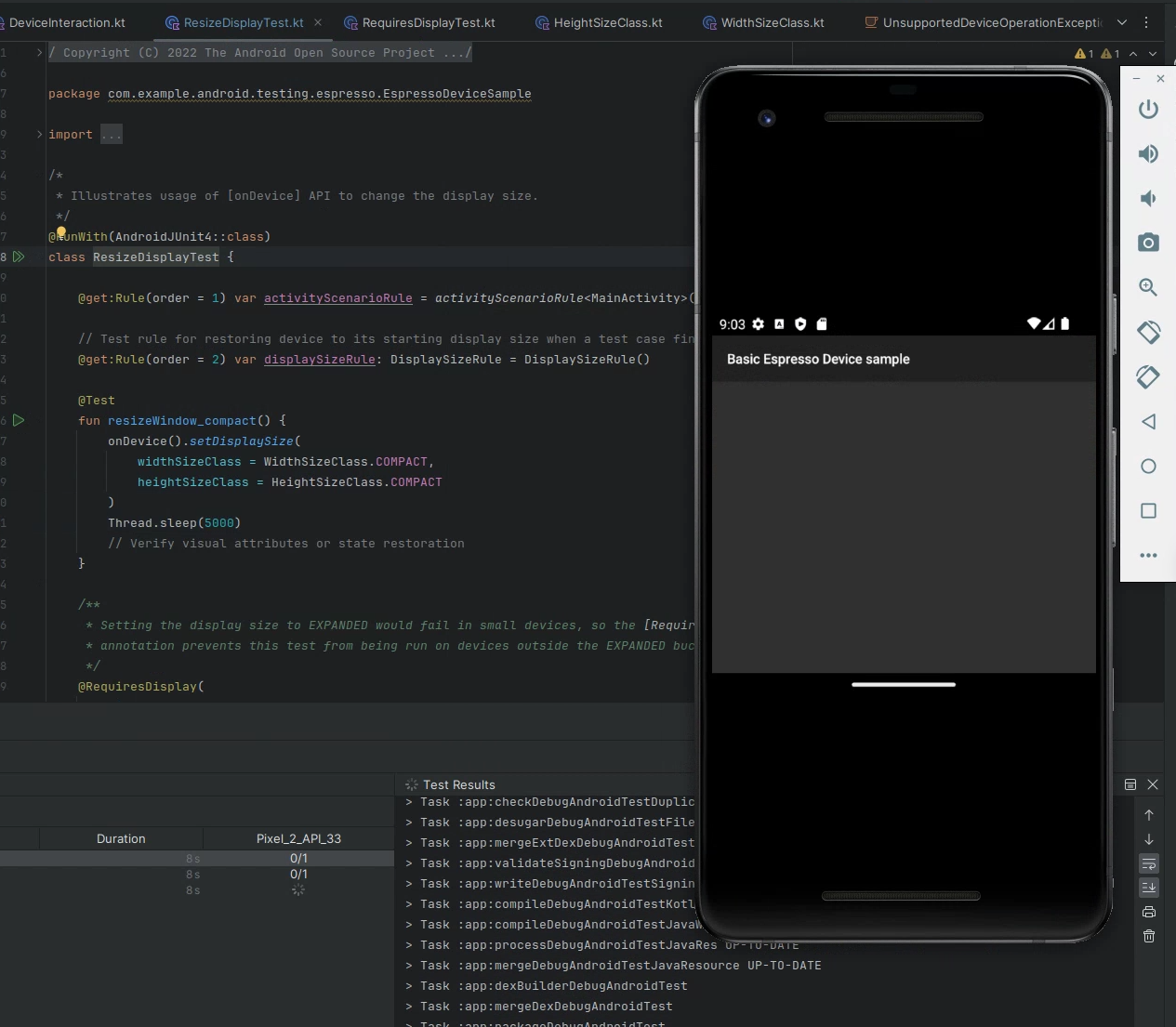

The Developer Preview has everything you need to try the Android 15 features, test your apps, and give us feedback. You can get started today by flashing a system image onto a Pixel 6, 7, or 8 series device, along with the Pixel Fold and Pixel Tablet. If you don’t have a Pixel device, you can use the 64-bit system images with the Android Emulator in Android Studio.

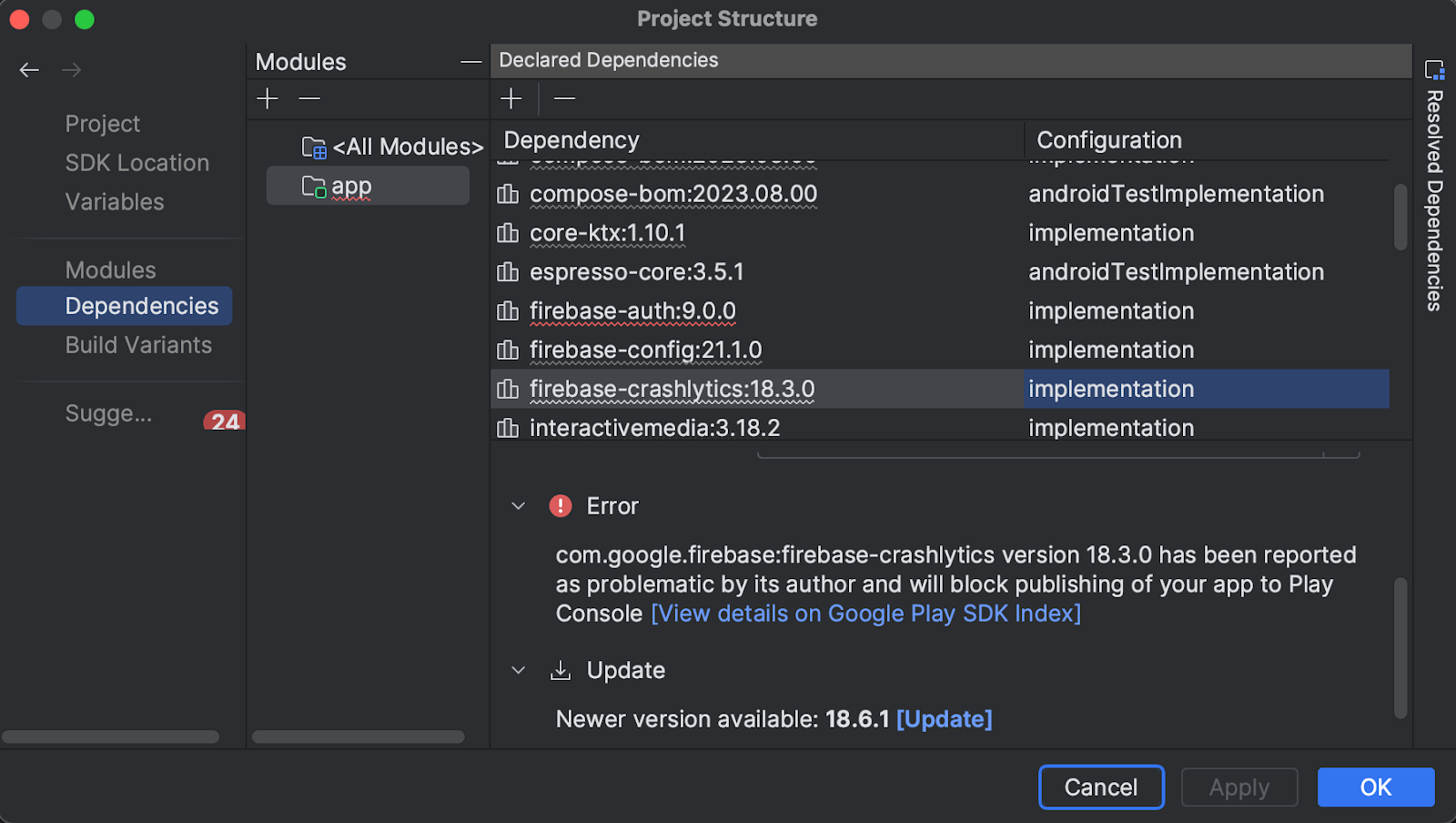

For the best development experience with Android 15, we recommend that you use the latest preview of Android Studio Jellyfish (or more recent Jellyfish+ versions). Once you’re set up, here are some of the things you should do:

- Try the new features and APIs – your feedback is critical during the early part of the developer preview. Report issues in our tracker on the feedback page.

- Test your current app for compatibility – learn whether your app is affected by changes in Android 15; install your app onto a device or emulator running Android 15 and extensively test it.

We’ll update the preview system images and SDK regularly throughout the Android 15 release cycle. This initial preview release is for developers only and not intended for daily or consumer use, so we're making it available by manual download only. Once you’ve manually installed a preview build, you’ll automatically get future updates over-the-air for all later previews and Betas. Read more here.

If you intend to move from the Android 14 QPR Beta program to the Android 15 Developer Preview program and don't want to wipe your device, we recommend that you move to Developer Preview 1 now. Otherwise, there may be periods when the Android 14 Beta has a more recent build date, which will prevent you from moving directly to the Android 15 Developer Preview without a data wipe.

As we reach our Beta releases, we'll be inviting consumers to try Android 15 as well, and we'll open up enrollment for the Android Beta program at that time. For now, please note that the Android Beta program is not yet available for Android 15.

For complete information, visit the Android 15 developer site.

Java and OpenJDK are trademarks or registered trademarks of Oracle and/or its affiliates.
