Posted by Sophie Miller, Tango Business Development

Window shopping and showrooms let us imagine what that couch might look like in our living room or whether that stool is the right height, but Tango can help take the guesswork out using augmented reality. Place virtual furniture in your real room, walk around, and try different colors.

Tango-enabled apps like WayfairView make it easy to visualize and rearrange new furniture in your home. We sat down with the Wayfair team to learn more about their app and see how Tango helps power new AR shopping experiences:

Google: Please tell us about your Tango app.

Mike: Wayfair offers a massive selection of products online. We believe that the ability for customers to visualize products in their living space augments our online experience and solves real customer problems such as "Will this product fit in my space?" and "Will this match the rest of my environment?"

Why are you excited for your customers to start using WayfairView?

One of the biggest barriers that online shopping poses is the inability for a customer to get a good sense of how a product would fit in their room, and what it would look like in their living space. With WayfairView, we aim to help our customers better visualize our products, going above and beyond a flat, 2D image and providing them with an accurate 3D rendering of what the full-size item could look like in their home. Not only is this a great extension of the customer experience, it’s also a practical way to figure out how the product fits into the user’s space before ordering it.

How did you get started developing for Tango?

I signed up to buy a dev kit in 2014 because I was personally interested in scanning 3D objects and environments. I ended up using it for a hackathon to build the first prototype of what is now WayfairView. One of my teammates, Shrenik Sadalgi, has always been interested in AR technology and had participated in Tango hackathons in prior years. He thought this particular flavor of AR, i.e., markerless AR in the form factor of a mobile device, had the potential to provide a seamless, easy user experience for Wayfair customers.

Was there something unique to the Tango platform that made it particularly appealing?

AR technology has been around for a while, but Tango is making it accessible by providing the technology in a way that is user friendly. Specifically, the Tango platform excels in accurate tracking, which allowed Wayfair’s R&D team to focus on building a great experience for our customers. No markers, no HMDs, no cords that can get tangled, but still powerful.

What were some of the challenges you faced building for Tango?

The biggest challenge Wayfair faces with AR technology is more about the experience than the device, which is in large part thanks to Tango. Our goal was to introduce an entirely new, user-friendly way of shopping for furniture. Not having to worry about the inner workings of Tango helped us focus on making the furniture look as real as possible, scaling the app with our massive catalog, and getting to market in a short period of time.

What surprised you during the Tango development process?

The learning curve for Tango was minimal. We were able to get started very quickly using example code. It was pretty remarkable how the stability of the platform (primarily the tracking) kept improving over the period of time that we worked on the app.

Which platform did you build your Tango app on, and why?

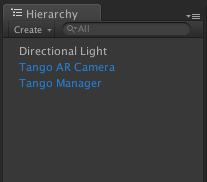

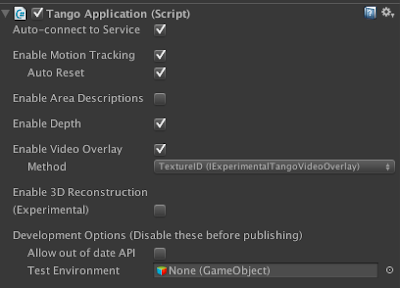

We wrote the core of the app using Unity in C#, but we wanted all the 2D UI to be in native Android to match the Wayfair native Android experience. This also gave us the opportunity to re-use code from the existing Wayfair Android app. We also saw significant performance improvements by using native Android to create the 2D UI, and it makes the UI easier to update when the next Android UI theme comes along.

What features can customers look forward to in a future WayfairView update?

We would love to add the ability to search for products by space: imagine drawing a cube in your real space and finding all products that fit the space. We also want to allow users to stack virtual products on top of each other to help them visualize how a virtual table lamp would look on top of a virtual table. Of course, we also want to make the products look even more real and add more products that can be visualized on WayfairView.
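As a rough illustration of the search-by-space idea (not Wayfair's implementation), the core of it could be a simple bounding-box filter over the catalog. In this minimal sketch, the `Product` class, its centimeter fields, and both function names are hypothetical, and each product is assumed to be described by an axis-aligned box that may be turned 90 degrees on the floor:

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Product:
    """Hypothetical catalog entry described by its bounding box in centimeters."""
    name: str
    width_cm: float
    depth_cm: float
    height_cm: float


def fits_in_space(product, space_w, space_d, space_h, allow_rotation=True):
    """Return True if the product's bounding box fits inside the drawn box.

    The product always stands upright, so only its footprint can be rotated.
    """
    if product.height_cm > space_h:
        return False
    footprints = [(product.width_cm, product.depth_cm)]
    if allow_rotation:
        # Also try the product turned 90 degrees on the floor.
        footprints.append((product.depth_cm, product.width_cm))
    return any(w <= space_w and d <= space_d for w, d in footprints)


def products_that_fit(catalog, space_w, space_d, space_h):
    """Filter a catalog down to the products that fit the drawn space."""
    return [p for p in catalog if fits_in_space(p, space_w, space_d, space_h)]
```

A real implementation would presumably also need to handle non-box shapes, clearance around the item, and a catalog far too large to scan linearly.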

How do you think that this will change the way people shop for household goods?

WayfairView makes it easier than ever for customers to visualize online goods in their home at full scale, giving them an extra level of confidence when making an online purchase. We believe Tango has the potential to become a ubiquitous technology, just like smartphone cameras and mobile GPS. Ultimately, we anticipate that this will further accelerate the shift from brick and mortar to online.

We also imagine that WayfairView will be a very useful tool for our designers as they share their design proposals and vision with their customers.