We are off to another exciting year in Artificial Intelligence (AI) and it's time to build more applications with Google AI technology! The Build with Google AI video series is for developers looking to build helpful and practical applications with AI. We focus on useful code projects you can implement and extend in an afternoon to bring the power of artificial intelligence into your workflow or organization. Our first season received over 100,000 views in six weeks! We are glad to see that so many of you liked the series, and we are excited to bring you even more Google AI application projects.

Today, we are launching Season 2 of the Build with Google AI series, featuring projects built with Google's Gemini API technology. The launch of Gemini and the Gemini API has given developers more powerful AI capabilities, including advanced reasoning, content generation, information synthesis, and image interpretation. Our goal with this season is to help you put those capabilities to work for you and your organization.

AI app patterns

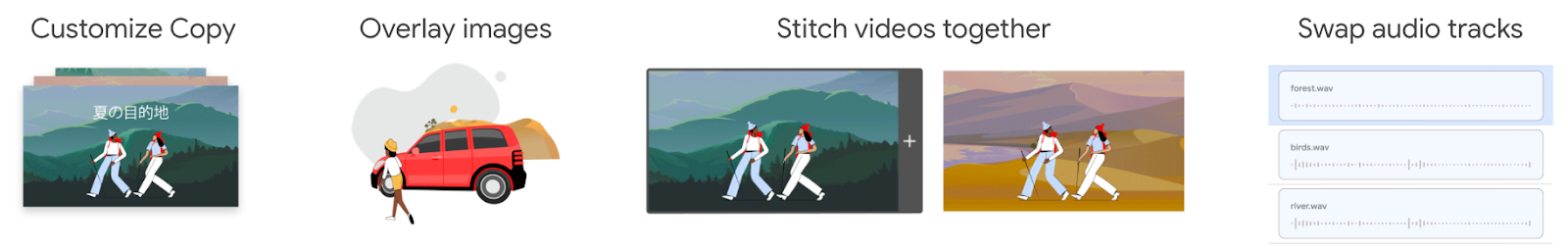

The Build with Google AI series features practical application code projects created for you to use and customize. However, we know that you are the best judge of what you or your organization needs to solve day-to-day problems and get work done. That's why each application we feature in this series is also meant to be used as an AI pattern. You can extend the applications immediately to solve problems and provide value for your business, and each one also shows you a general coding pattern for getting value out of AI technology.

For the second season of this series, we show how you can use the capabilities of Google's Gemini AI model in your applications. Here's what's coming up:

- AI Slides Reviewer with Google Workspace (3/20) - Image interpretation is one of the Gemini model's biggest new features. We show you how to make practical use of it with a presentation review app for Google Slides that you can customize with your organization's guidelines and recommendations.

- AI Flutter Code Agent with Gemini API (3/27) - Code generation was the most popular episode from last season, so we are digging deeper into this topic. Build a code generation extension to write Flutter code and explore user interface designs and looks with just a few words of description.

- AI Data Agent with Google Cloud (4/3) - Why write code to extract data when you can just ask for it? Build a web application that uses the Gemini API's Function Calling feature to translate questions into code calls and data into plain language answers.
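
To make the function calling idea from the AI Data Agent project more concrete, here is a minimal sketch of the general pattern: a question is turned into a structured function call, the application runs the matching function, and the result is phrased as a plain language answer. This is not the episode's code. The `SALES` data, `get_total_sales`, and `fake_model` are all hypothetical, and `fake_model` is a local stand-in for the step where a real application would send the question and its function declarations to the Gemini API.

```python
from typing import Any, Callable

# Hypothetical in-memory data standing in for a real database.
SALES = {"2023": 1200, "2024": 1500}


def get_total_sales(year: str) -> int:
    """Return total sales for a year (hypothetical example function)."""
    return SALES[year]


# Registry of functions the application allows the model to request.
TOOLS: dict[str, Callable[..., Any]] = {"get_total_sales": get_total_sales}


def fake_model(question: str) -> dict:
    """Stand-in for the model: maps a question to a structured function call.

    Assumption: a real application would get this structure back from the
    Gemini API instead of computing it locally.
    """
    words = [w.strip("?.,") for w in question.split()]
    year = next((w for w in words if w.isdigit()), None)
    return {"name": "get_total_sales", "args": {"year": year}}


def answer(question: str) -> str:
    """Run the requested function and phrase the result in plain language."""
    call = fake_model(question)          # question -> function call
    func = TOOLS[call["name"]]           # look up the requested function
    result = func(**call["args"])        # execute it with the given arguments
    # Stand-in for the model turning raw data into a readable answer.
    return f"Total sales in {call['args']['year']} were {result}."


print(answer("What were total sales in 2024?"))
```

Keeping the callable functions in an explicit registry means the application, not the model, decides which code can actually run.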

Season 1 upgraded to Gemini API: We've upgraded Season 1 tutorials and code projects to use the Gemini API so you can take advantage of the latest in generative AI technology from Google. Check them out!

Learn from the developers

Just like last season, we'll go back to the studio to talk with coders who built these projects so they can share what they learned along the way. How do you make the Gemini model review an entire presentation? What's the most effective way to generate code with AI? How do you get a database to answer questions with the Gemini API? Get insights into coding with AI to jump start your own development project.

New home for AI developer content

Developers interested in Google's AI offerings now have a new home at ai.google.dev. There you'll find a wealth of resources for building with AI from Google, including the Build with Google AI tutorials. Stay tuned for much more content through the rest of the year.

We are excited to bring you the second season of Build with Google AI. Check out Season 2 right now! Use the video comments to let us know what you think and tell us what you'd like to see in future episodes.

Keep learning! Keep building!

Posted by Joe Fernandez – Google AI Developer Relations


