This is the third article in our series on Hackability; also see the first and second articles.

We have seen in our previous articles how Code Health and Debuggability can make a project much easier to work on. The third pillar is a solid infrastructure that gets accurate feedback to your developers as fast as possible. Speed is going to be a major theme in this article, and we’ll look at a number of things you can do to make your project easier to hack on.

Build Systems Speed

Question: What’s a change you’d really like to see in our development tools?

“I feel like this question gets asked almost every time, and I always give the same answer:

I would like them to be faster.”

-- Ian Lance Taylor

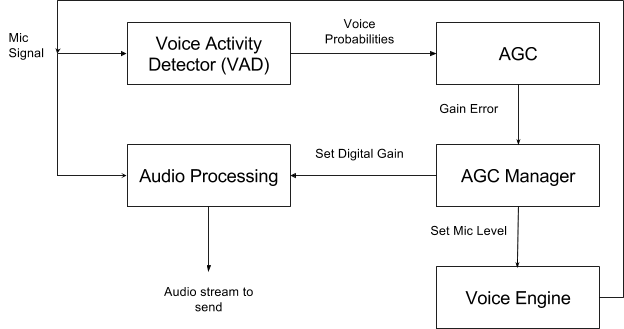

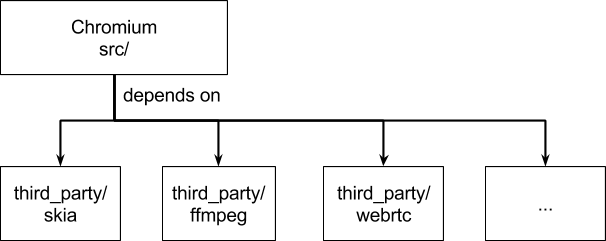

Replace make with ninja. Use the gold linker instead of ld. Detect and delete dead code in your project (perhaps using coverage tools). Reduce the number of dependencies, and enforce dependency rules so new ones are not added lightly. Give the developers faster machines. Use distributed builds, which are available with many open-source continuous integration systems (or use Google’s system, Bazel!). You should do everything you can to make the build faster.

Figure 1: “Cheetah chasing its prey” by Malene Thyssen.

Why is that? There’s a tremendous difference in hackability if it takes 5 seconds to build and test versus one minute, or even 20 minutes, to build and test. Slow feedback cycles kill hackability, for many reasons:

- Build and test times longer than a handful of seconds cause many developers’ minds to wander, taking them out of the zone.

- Excessive build or release times* make tinkering and refactoring much harder. All developers have a threshold at which they start hedging (e.g. “I’d like to remove this branch, but I don’t know if I’ll break the iOS build”), which causes refactoring to not happen.

* The worst I ever heard of was an OS that took 24 hours to build!

How do you actually make fast build systems? There are some suggestions in the first paragraph above, but the best general suggestion I can make is to have a few engineers on the project who deeply understand the build systems and have the time to continuously improve them. The main axes of improvement are:

- Reduce the amount of code being compiled.

- Replace tools with faster counterparts.

- Increase processing power, maybe through parallelization or distributed systems.

Note that there is a big difference between full builds and incremental builds. Both should be as fast as possible, but incremental builds are by far the most important to optimize. The way you tackle the two is different. For instance, reducing the total number of source files will make a full build faster, but it may not make an incremental build faster.

To get faster incremental builds, in general, you need to make each source file as decoupled as possible from the rest of the code base. The less a change ripples through the codebase, the less work there is to do, right? See “Loose Coupling and Testability” in Pillar 1 for more on this subject. The exact mechanics of dependencies and interfaces depend on the programming language - one of the hardest to get right is unsurprisingly C++, where you need to be disciplined with includes and forward declarations to get any kind of incremental build performance.
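To make the ripple effect concrete, here is a minimal sketch of how a build tool might work out what to rebuild after one file changes. It is not how any particular build system does it; the file names and the `affected_targets` helper are hypothetical, and the dependency maps are made up for illustration.

```python
from collections import defaultdict, deque


def affected_targets(deps, changed):
    """Return every file that must be rebuilt when `changed` is modified.

    `deps` maps each file to the files it directly depends on (its includes).
    """
    # Invert the graph: for each file, which files depend on it directly?
    dependents = defaultdict(set)
    for target, sources in deps.items():
        for source in sources:
            dependents[source].add(target)

    # Breadth-first walk over the inverted graph, starting at the change.
    to_rebuild = {changed}
    queue = deque([changed])
    while queue:
        current = queue.popleft()
        for dependent in dependents[current]:
            if dependent not in to_rebuild:
                to_rebuild.add(dependent)
                queue.append(dependent)
    return to_rebuild


# Hypothetical project where every file pulls in a catch-all header.
coupled = {
    "audio.h": ["common.h"],
    "video.h": ["common.h"],
    "net.h": ["common.h"],
    "audio.cc": ["audio.h"],
    "video.cc": ["video.h"],
    "net.cc": ["net.h"],
}

# The same hypothetical project with the catch-all header removed.
decoupled = {
    "audio.cc": ["audio.h"],
    "video.cc": ["video.h"],
    "net.cc": ["net.h"],
}

print(len(affected_targets(coupled, "common.h")))     # 7: the whole project
print(sorted(affected_targets(decoupled, "audio.h")))  # ['audio.cc', 'audio.h']
```

In the coupled layout, touching the shared header rebuilds everything, so an incremental build is no faster than a full one. In the decoupled layout the same kind of edit only touches one translation unit.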

Build scripts and makefiles should be held to standards as high as the code itself. Technical debt and unnecessary dependencies have a tendency to accumulate in build scripts, because no one has the time to understand and fix them. Avoid this by addressing the technical debt as you go.

Continuous Integration and Presubmit Queues

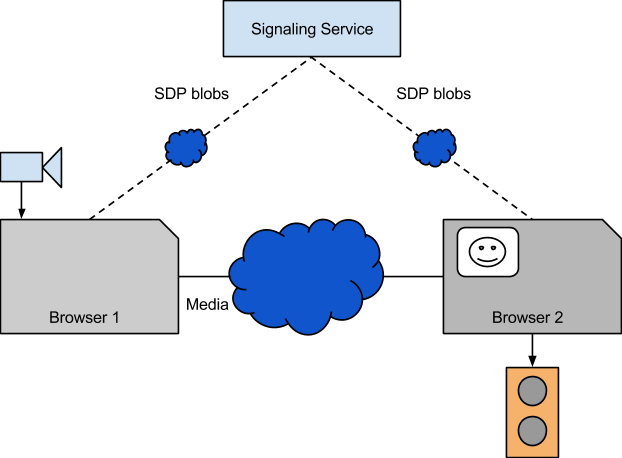

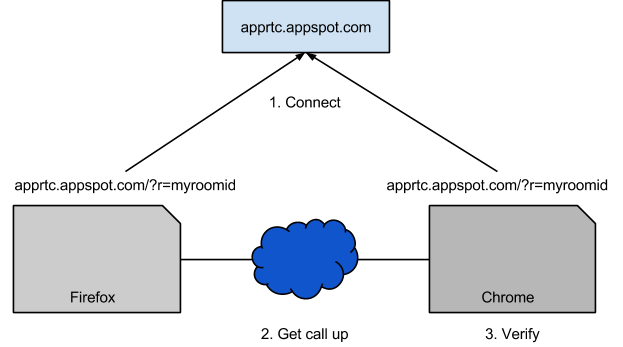

At a minimum, you should build and test on all platforms, but it’s even better if you do it in presubmit. The Chromium submit queue does this. It has developed over the years so that a normal patch builds and tests on about 30 different build configurations before commit. This is necessary for the 400-patches-per-day velocity of the Chrome project. Most projects obviously don’t have to go that far. Chromium’s infrastructure is based off BuildBot, but there are many other job scheduling systems depending on your needs.

As we discussed in Build Systems, speed and correctness are critical here. It takes a lot of ongoing work to keep build, tests, and presubmits fast and free of flakes. You should never accept flakes, since developers very quickly lose trust in flaky tests and systems. Tooling can help a bit with this; for instance, see the Chromium flakiness dashboard.
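As a rough illustration of the kind of tooling that can help, the sketch below flags a test as flaky when it has both passed and failed at the same revision. This is one simple definition chosen for the example, not a description of how the Chromium flakiness dashboard works; the `find_flaky_tests` helper and the result tuples are hypothetical.

```python
from collections import defaultdict


def find_flaky_tests(results):
    """Return names of tests that both passed and failed at the same revision.

    `results` is an iterable of (test_name, revision, passed) tuples, for
    example collected from repeated continuous build runs.
    """
    outcomes = defaultdict(set)
    for test_name, revision, passed in results:
        outcomes[(test_name, revision)].add(bool(passed))

    # Two distinct outcomes for identical code means the test is not
    # deterministic, regardless of whether the code itself is correct.
    flaky = {name for (name, _), seen in outcomes.items() if len(seen) == 2}
    return sorted(flaky)


# Hypothetical run history.
history = [
    ("AudioTest.Decode", "r100", True),
    ("AudioTest.Decode", "r100", False),  # same code, different outcome
    ("NetTest.Connect", "r100", True),
    ("NetTest.Connect", "r101", False),   # changed with the code: a real failure
]

print(find_flaky_tests(history))  # ['AudioTest.Decode']
```

Comparing outcomes only within one revision keeps genuine regressions, which change with the code, from being mislabeled as flakes.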

Test Speed

Speed is a feature, and this is particularly true for developer infrastructure. In general, the longer a test takes to execute, the less valuable it is. My rule of thumb is: if it takes more than a minute to execute, its value is greatly diminished. There are of course some exceptions, such as soak tests or certain performance tests.

If you have tests that are slower than 60 seconds, they had better be incredibly reliable and easily debuggable. A flaky test that takes several minutes to execute often has negative value because it slows down all work in the code it covers. You probably want to build better integration tests on lower levels instead, so you can make them faster and more reliable.

If you have many engineers on a project, reducing the time to run the tests can have a big impact. This is one reason why it’s great to have SETIs or the equivalent. There are many things you can do to improve test speed:

- Sharding and parallelization. Add more machines to your continuous build as your test set or number of developers grows.

- Continuously measure how long it takes to run one build+test cycle in your continuous build, and have someone take action when it gets slower.

- Remove tests that don’t pull their weight. If a test is really slow, it’s often because of poorly written wait conditions or because the test bites off more than it can chew (maybe that unit test doesn’t have to process 15000 audio frames, maybe 50 is enough).

- If you have tests that bring up a local server stack, for instance inter-server integration tests, making your servers boot faster is going to make the tests faster as well. Faster production code is faster to test! See Running on Localhost, in Pillar 2 for more on local server stacks.
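The sharding and measurement points above combine naturally: once you record how long each test takes, you can use those numbers to balance shards. The sketch below uses a simple greedy heuristic (longest test first, onto the least-loaded shard). The `assign_shards` helper, the test names, and the durations are all hypothetical, and real test runners may shard quite differently.

```python
import heapq


def assign_shards(durations, shard_count):
    """Greedily spread tests over shards so the shards finish at similar times.

    `durations` maps test name -> measured runtime in seconds.
    Returns a list with one list of test names per shard.
    """
    # Min-heap of (current load in seconds, shard index).
    heap = [(0.0, index) for index in range(shard_count)]
    heapq.heapify(heap)
    shards = [[] for _ in range(shard_count)]

    # Place the slowest tests first; ties are broken by name so the
    # assignment is deterministic.
    ordered = sorted(durations.items(), key=lambda item: (-item[1], item[0]))
    for name, seconds in ordered:
        load, index = heapq.heappop(heap)
        shards[index].append(name)
        heapq.heappush(heap, (load + seconds, index))
    return shards


# Hypothetical measured runtimes, in seconds.
measured = {"a": 10, "b": 8, "c": 5, "d": 4, "e": 3}

shards = assign_shards(measured, 2)
print(shards)  # [['a', 'd'], ['b', 'c', 'e']]
print([sum(measured[t] for t in shard) for shard in shards])  # [14, 16]
```

With two shards, the wall-clock time drops from 30 seconds to roughly 16, which is the time of the slowest shard. A single very slow test sets a floor that no amount of sharding can lower, which is one more reason to trim tests that bite off more than they can chew.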

Workflow Speed

We’ve talked about fast builds and tests, but the core developer workflows are also important, of course. Chromium undertook a multi-year project to switch from Subversion to Git, partly because Subversion was becoming too slow. You need to keep track of your core workflows as your project grows. Your version control system may work fine for years, but become too slow once the project becomes big enough. Bug search and management must also be robust and fast, since these are generally systems developers touch every day.

Release Often

It aids hackability to deploy to real users as fast as possible. No matter how good your product's tests are, there's always a risk that there's something you haven't thought of. If you’re building a service or web site, you should aim to deploy multiple times per week. For client projects, Chrome’s six-week cycle is a good goal to aim for.

You should invest in infrastructure and tests that give you the confidence to do this - you don’t want to push something that’s broken. Your developers will thank you for it, since it makes their jobs so much easier. By releasing often, you mitigate risk, and developers will rush less to hack late changes in the release (since they know the next release isn’t far off).

Easy Reverts

If you look in the commit log for the Chromium project, you will see that a significant percentage of the commits are reverts of previous commits. In Chromium, bad commits quickly become costly because they impede other engineers, and the high velocity can cause good changes to stack onto bad changes.

Figure 4: Chromium’s revert button.

This is why the policy is “revert first, ask questions later”. I believe a revert-first policy is good for small projects as well, since it creates a clear expectation that the product/tools/dev environment should be working at all times (and if it doesn’t, a recent change should probably be reverted).

It has a wonderful effect when a revert is simple to make. You can suddenly make speculative reverts if a test went flaky or a performance test regressed. It follows that if a patch is easy to revert, so is the inverse (i.e. reverting the revert or relanding the patch). So if you were wrong and that patch wasn’t guilty after all, it’s simple to re-land it and try reverting another patch. Developers might initially balk at this (because it can’t possibly be their patch, right?), but they usually come around when they realize the benefits.

For many projects, a revert can simply be

git revert 9fbadbeef

git push origin master

If your project (wisely) involves code review, it will behoove you to add something like Chromium’s revert button that I mentioned above. The revert button will create a special patch that bypasses review and tests (since we can assume a clean revert takes us back to a more stable state rather than the opposite). See Pillar 1 for more on code review and its benefits.

For some projects, reverts are going to be harder, especially if you have a slow or laborious release process. Even if you release often, you could still have problems if a revert involves state migrations in your live services (for instance when rolling back a database schema change). You need to have a strategy to deal with such state changes.

Reverts must always put you back to safer ground, and everyone must be confident they can safely revert. If not, you run the risk of massive fire drills and lost user trust if a bad patch makes it through the tests and you can’t revert it.

Performance Tests: Measure Everything

Is it critical that your app starts up within a second? Should your app always render at 60 fps when it’s scrolled up or down? Should your web server always serve a response within 100 ms? Should your mobile app be smaller than 8 MB? If so, you should make a performance test for that. Performance tests aid hackability since developers can quickly see how their change affects performance and thus prevent performance regressions from making it into the wild.

You should run your automated performance tests on the same device every time; all devices are different, and this will be reflected in the numbers. This is fairly straightforward if you have a decent continuous integration system that runs tests sequentially on a known set of worker machines. It’s harder if you need to run on physical phones or tablets, but it can be done.

A test can be as simple as invoking a particular algorithm and measuring the time it takes to execute it (median and 90th percentile, say, over N runs).
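A minimal sketch of such a test, using only the Python standard library, might look like the following. The `measure` and `summarize` helpers and the workload being timed are hypothetical stand-ins; substitute the algorithm you actually care about.

```python
import statistics
import time


def summarize(samples):
    """Reduce a list of timings (in seconds) to the numbers worth tracking."""
    return {
        "median": statistics.median(samples),
        # quantiles(n=10) returns the nine deciles; the last one is the
        # 90th percentile.
        "p90": statistics.quantiles(samples, n=10)[8],
    }


def measure(func, runs=50):
    """Call `func` `runs` times and summarize how long each call took."""
    samples = []
    for _ in range(runs):
        start = time.perf_counter()
        func()
        samples.append(time.perf_counter() - start)
    return summarize(samples)


def workload():
    # Hypothetical stand-in for the algorithm under test.
    return sorted(range(10_000, 0, -1))


result = measure(workload)
print(f"median: {result['median'] * 1e3:.3f} ms, p90: {result['p90'] * 1e3:.3f} ms")
```

Reporting the median and a high percentile rather than the mean keeps a single slow run (a cold cache, a background process) from dominating the result, while still showing when the slow tail gets worse.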

Figure 5: A VP8-in-WebRTC regression (bug) and its fix displayed in a Catapult dashboard.

Write your test so it outputs performance numbers you care about. But what to do with those numbers? Fortunately, Chrome’s performance test framework has been open-sourced, which means you can set up a dashboard, with automatic regression monitoring, with minimal effort. The test framework also includes the powerful Telemetry framework which can run actions on web pages and Android apps and report performance results. Telemetry and Catapult are battle-tested by use in the Chromium project and are capable of running on a wide set of devices, while getting the maximum of usable performance data out of the devices.

Sources

Figure 1: By Malene Thyssen (Own work) [CC BY-SA 3.0 (http://creativecommons.org/licenses/by-sa/3.0)], via Wikimedia Commons