In a recent post, we broadly talked about What Test Engineers do at Google. In this post, I talk about one aspect of the work TEs may do: building and improving test infrastructure to make engineers more productive.

Refurbishing legacy systems makes new tools necessary

A few years ago, I joined an engineering team that was working on replacing a legacy system with a new implementation. Because building the replacement would take several years, we had to keep the legacy system operational, and even add features to it, during that time so that our external users would not be affected.

The legacy system was so complex and brittle that the engineers spent most of their time triaging and fixing bugs and flaky tests, but had little time to implement new features. The goal for the rewrite was to learn from the legacy system and to build something that was easier to maintain and extend. As the team's TE, my job was to understand what caused the high maintenance cost and how to improve on it. I found two main causes:

- Tight coupling and insufficient abstraction made unit testing very hard, and as a consequence, a lot of end-to-end tests served as functional tests of that code.

- The infrastructure used for the end-to-end tests had no good way to create and inject fakes or mocks for the external services the system depended on. As a result, the tests had to run a large number of servers for all these external dependencies. This led to very large and brittle tests that our existing test execution infrastructure was not able to handle reliably.

At first, I explored whether I could split the large tests into smaller ones that would test specific functionality and depend on fewer external services. This proved impossible because of the poorly structured legacy code. Making this approach work would have required refactoring the entire system and its dependencies, not just the parts my team owned.

In my second approach, I also focussed on large tests and tried to mock services that were not required for the functionality under test. This also proved very difficult, because dependencies changed often and individual dependencies were hard to trace in a graph of over 200 services. Ultimately, this approach just shifted the required effort from maintaining test code to maintaining test dependencies and mocks.

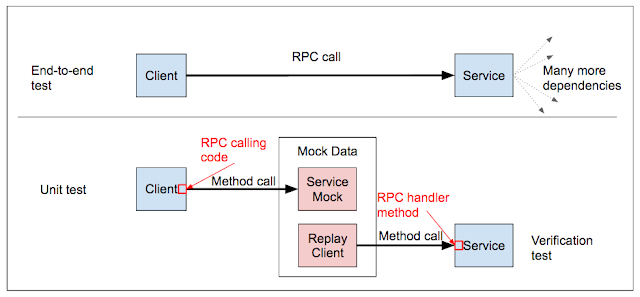

My third and final approach, illustrated in the figure above, made small tests more powerful. In the typical end-to-end test we faced, the client made RPC calls to several services, which in turn made RPC calls to other services. Together the client and the transitive closure over all backend services formed a large graph (not tree!) of dependencies, which all had to be up and running for the end-to-end test.

The new model changes how we test client and service integration. Instead of running the client on inputs that will somehow trigger RPC calls, we write unit tests for the code making method calls to the RPC stub. The stub itself is mocked with a common mocking framework like Mockito in Java. For each such test, a second test verifies that the data used to drive that mock "makes sense" to the actual service. This is also done with a unit test, where a replay client uses the same data the RPC mock uses to call the RPC handler method of the service.

This pattern of integration testing applies to any RPC call, so the RPC calls made by a backend server to another backend can be tested just as well as front-end client calls. When we apply this approach consistently, we benefit from smaller tests that still test correct integration behavior, and make sure that the behavior we are testing is "real".
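The two halves of this pattern can be sketched in a few lines. The example below is only an illustration, not the framework described in this post: it uses Python's standard `unittest.mock` rather than Mockito, and every name in it (`UserServiceStub`, `UserServiceHandler`, `greeting`, `RECORDED_EXCHANGES`) is hypothetical. The key idea it tries to show is that a single set of recorded request/response pairs drives both the client-side mock and the service-side replay check.

```python
from dataclasses import dataclass
from unittest import mock


@dataclass(frozen=True)
class GetUserRequest:
    user_id: int


@dataclass(frozen=True)
class GetUserResponse:
    name: str


# Shared test data (hypothetical): the same request/response pairs drive the
# client-side mock and the service-side replay test.
RECORDED_EXCHANGES = [
    (GetUserRequest(user_id=7), GetUserResponse(name="Ada")),
]


class UserServiceStub:
    """Hypothetical RPC stub; a real one would send the request over the network."""

    def get_user(self, request):
        raise RuntimeError("no network access in unit tests")


class UserServiceHandler:
    """Hypothetical service implementation; its handler method is called directly."""

    def __init__(self, users):
        self._users = users

    def get_user(self, request):
        return GetUserResponse(name=self._users[request.user_id])


def greeting(stub, user_id):
    """Client code under test: makes a single method call on the RPC stub."""
    response = stub.get_user(GetUserRequest(user_id=user_id))
    return f"Hello, {response.name}!"


def test_client_against_mocked_stub():
    # Test 1: the client is exercised against a mocked stub, with no server running.
    request, response = RECORDED_EXCHANGES[0]
    stub = mock.create_autospec(UserServiceStub, instance=True)
    stub.get_user.return_value = response
    assert greeting(stub, request.user_id) == "Hello, Ada!"
    stub.get_user.assert_called_once_with(request)


def test_service_accepts_recorded_data():
    # Test 2: replay the same recorded requests against the real handler method
    # and check that it produces the responses the mock was told to return.
    handler = UserServiceHandler(users={7: "Ada"})
    for request, expected in RECORDED_EXCHANGES:
        assert handler.get_user(request) == expected
```

If the service changes so that it no longer returns the recorded response for the recorded request, the second test fails, which signals that the mock in the first test no longer reflects real behavior.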

To arrive at this solution, I had to build, evaluate, and discard several prototypes. While it took a day to build a proof-of-concept for this approach, it took me and another engineer a year to implement a finished tool developers could use.

Adoption

The engineers embraced the new solution very quickly when they saw that the new framework removed large amounts of boilerplate code from their tests. To further drive its adoption, I organized multi-day events with the engineering team where we focussed on migrating test cases. It took a few months to migrate all existing unit tests to the new framework, close gaps in coverage, and create the new tests that validate the mocks. Once we had converted about 80% of the tests, we started comparing the efficacy of the new tests and the existing end-to-end tests.

The results are very good:

- The new tests are as effective in finding bugs as the end-to-end tests are.

- The new tests run in about 3 minutes instead of 30 minutes for the end-to-end tests.

- The client-side tests are 0% flaky. The verification tests are usually less flaky than the end-to-end tests, and never more.

Building and improving test infrastructure to help engineers be more productive is one of the many things test engineers do at Google. Running this project from requirements gathering all the way to a finished product gave me the opportunity to design and implement several prototypes, drive the full implementation of one solution, lead engineering teams to adoption of the new framework, and integrate feedback from engineers and actual measurements into the continuous refinement of the tool.