Posted by Frank van Diggelen, Principal Engineer and Jennifer Wang, Product Manager

At Android, we want to make it as easy as possible for developers to create the most helpful apps for their users. That’s why we aim to provide the best location experience with our APIs like the Fused Location Provider API (FLP). However, we’ve heard from many of you that the biggest location issue is inaccuracy in dense urban areas, such as wrong-side-of-the-street and even wrong-city-block errors.

This is particularly critical for the most used location apps, such as rideshare and navigation. For instance, when users request a rideshare vehicle in a city, apps cannot easily locate them because of the GPS errors.

The last great unsolved GPS problem

This wrong-side-of-the-street position error is caused by reflected GPS signals in cities, and we embarked on an ambitious project to help solve this great problem in GPS. Our solution uses 3D mapping aided corrections, and is only feasible to be done at scale by Google because it comprises 3D building models, raw GPS measurements, and machine learning.

The December Pixel Feature Drop adds 3D mapping aided GPS corrections to Pixel 5 and Pixel 4a (5G). With a system API that provides feedback to the Qualcomm® Snapdragon™ 5G Mobile Platform that powers Pixel, the accuracy in cities (or “urban canyons”) improves spectacularly.

Picture of a pedestrian test, with Pixel 5 phone, walking along one side of the street, then the other. Yellow = Path followed, Red = without 3D mapping aided corrections, Blue = with 3D mapping aided corrections.

Why hasn’t this been solved before?

The problem is that GPS constructively locates you in the wrong place when you are in a city. This is because all GPS systems are based on line-of-sight operation from satellites. But in big cities, most or all signals reach you through non line-of-sight reflections, because the direct signals are blocked by the buildings.

The GPS chip assumes that the signal is line-of-sight and therefore introduces error when it calculates the excess path length that the signals traveled. The most common side effect is that your position appears on the wrong side of the street, although your position can also appear on the wrong city block, especially in very large cities with many skyscrapers.

There have been attempts to address this problem for more than a decade. But no solution existed at scale, until 3D mapping aided corrections were launched on Android.

How 3D mapping aided corrections work

The 3D mapping aided corrections module, in Google Play services, includes tiles of 3D building models that Google has for more than 3850 cities around the world. Google Play services 3D mapping aided corrections currently supports pedestrian use-cases only. When you use your device’s GPS while walking, Android’s Activity Recognition API will recognize that you are a pedestrian, and if you are in one of the 3850+ cities, tiles with 3D models will be downloaded and cached on the phone for that city. Cache size is approximately 20MB, which is about the same size as 6 photographs.

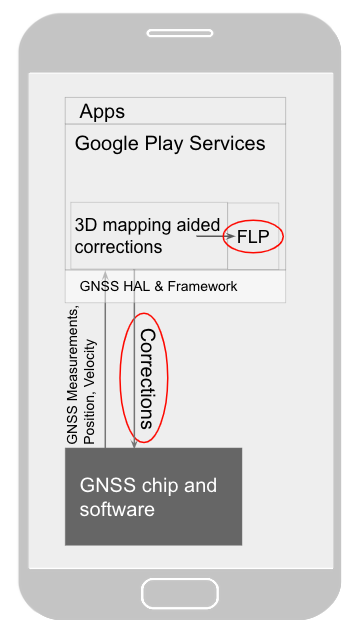

Inside the module, the 3D mapping aided corrections algorithms solve the chicken-and-egg problem, which is: if the GPS position is not in the right place, then how do you know which buildings are blocking or reflecting the signals? Having solved this problem, 3D mapping aided corrections provide a set of corrected positions to the FLP. A system API then provides this information to the GPS chip to help the chip improve the accuracy of the next GPS fix.

With this December Pixel feature drop, we are releasing version 2 of 3D mapping aided corrections on Pixel 5 and Pixel 4a (5G). This reduces wrong-side-of-street occurrences by approximately 75%. Other Android phones, using Android 8 or later, have version 1 implemented in the FLP, which reduces wrong-side-of-street occurrences by approximately 50%. Version 2 will be available to the entire Android ecosystem (Android 8 or later) in early 2021.

Android’s 3D mapping aided corrections work with signals from the USA’s Global Positioning System (GPS) as well as other Global Navigation Satellite Systems (GNSSs): GLONASS, Galileo, BeiDou, and QZSS.

Our GPS chip partners shared the importance of this work for their technologies:

“Consumers rely on the accuracy of the positioning and navigation capabilities of their mobile phones. Location technology is at the heart of ensuring you find your favorite restaurant and you get your rideshare service in a timely manner. Qualcomm Technologies is leading the charge to improve consumer experiences with its newest Qualcomm® Location Suite technology featuring integration with Google's 3D mapping aided corrections. This collaboration with Google is an important milestone toward sidewalk-level location accuracy,” said Francesco Grilli, vice president of product management at Qualcomm Technologies, Inc.

“Broadcom has integrated Google's 3D mapping aided corrections into the navigation engine of the BCM47765 dual-frequency GNSS chip. The combination of dual frequency L1 and L5 signals plus 3D mapping aided corrections provides unprecedented accuracy in urban canyons. L5 plus Google’s corrections are a game-changer for GNSS use in cities,” said Charles Abraham, Senior Director of Engineering, Broadcom Inc.

“Google's 3D mapping aided corrections is a major advancement in personal location accuracy for smartphone users when walking in urban environments. MediaTek’s Dimensity 5G family enables 3D mapping aided corrections in addition to its highly accurate dual-band GNSS and industry-leading dead reckoning performance to give the most accurate global positioning ever for 5G smartphone users,” said Dr. Yenchi Lee, Deputy General Manager of MediaTek’s Wireless Communications Business Unit.

How to access 3D mapping aided corrections

Android’s 3D mapping aided corrections automatically works when the GPS is being used by a pedestrian in any of the 3850+ cities, on any phone that runs Android 8 or later. The best way for developers to take advantage of the improvement is to use FLP to get location information. The further 3D mapping aided corrections in the GPS chip are available to Pixel 5 and Pixel 4a (5G) today, and will be rolled out to the rest of the Android ecosystem (Android 8 or later) in the next several weeks. We will also soon support more modes including driving.

Android’s 3D mapping aided corrections cover more than 3850 cities, including:

- North America: All major cities in USA, Canada, Mexico.

- Europe: All major cities. (100%, except Russia & Ukraine)

- Asia: All major cities in Japan and Taiwan.

- Rest of the world: All major cities in Brazil, Argentina, Australia, New Zealand, and South Africa.

As our Google Earth 3D models expand, so will 3D mapping aided corrections coverage.

Google Maps is also getting updates that will provide more street level detail for pedestrians in select cities, such as sidewalks, crosswalks, and pedestrian islands. In 2021, you can get these updates for your app using the Google Maps Platform. Along with the improved location accuracy from 3D mapping aided corrections, we hope we can help developers like you better support use cases for the world’s 2B pedestrians that use Android.

Continuously making location better

In addition to 3D mapping aided corrections, we continue to work hard to make location as accurate and useful as possible. Below are the latest improvements to the Fused Location Provider API (FLP):

- Developers wanted an easier way to retrieve the current location. With the new

getCurrentLocation() API, developers can get the current location in a single request, rather than having to subscribe to ongoing location changes. By allowing developers to request location only when needed (and automatically timing out and closing open location requests), this new API also improves battery life. Check out our latest Kotlin sample.

- Android 11's Data Access Auditing API provides more transparency into how your app and its dependencies access private data (like location) from users. With the new support for the API's attribution tags in the

FusedLocationProviderClient, developers can more easily audit their apps’ location subscriptions in addition to regular location requests. Check out this Kotlin sample to learn more.

Qualcomm and Snapdragon are trademarks or registered trademarks of Qualcomm Incorporated.

Qualcomm Snapdragon and Qualcomm Location Suite are products of Qualcomm Technologies, Inc. and/or its subsidiaries.