Available now with Google Photos

Our photo picker has always been the gateway to your local media library, providing a secure, date-sorted interface for users to grant apps access to selected images and videos. But now, we're taking it a step further by integrating cloud photos from your chosen cloud media app directly into the photo picker experience.

Unifying your media library

Backed-up photos, also known as "cloud photos," will now be merged with your local ones in the photo picker, eliminating the need to switch between apps. Additionally, any albums you've created in your cloud storage app will be readily accessible within the photo picker's albums tab. If your cloud media provider has a concept of "favorites," those items will be showcased prominently within the albums tab of the photo picker for easy access. This feature is currently rolling out with the February Google System Update to devices running Android 12 and above.

Available now with Google Photos, but open to all

Google Photos already supports this new feature, and our APIs are open to any cloud media app that qualifies for our pilot program. Our goal is to make accessing your lifetime of memories effortless, regardless of the app you prefer.

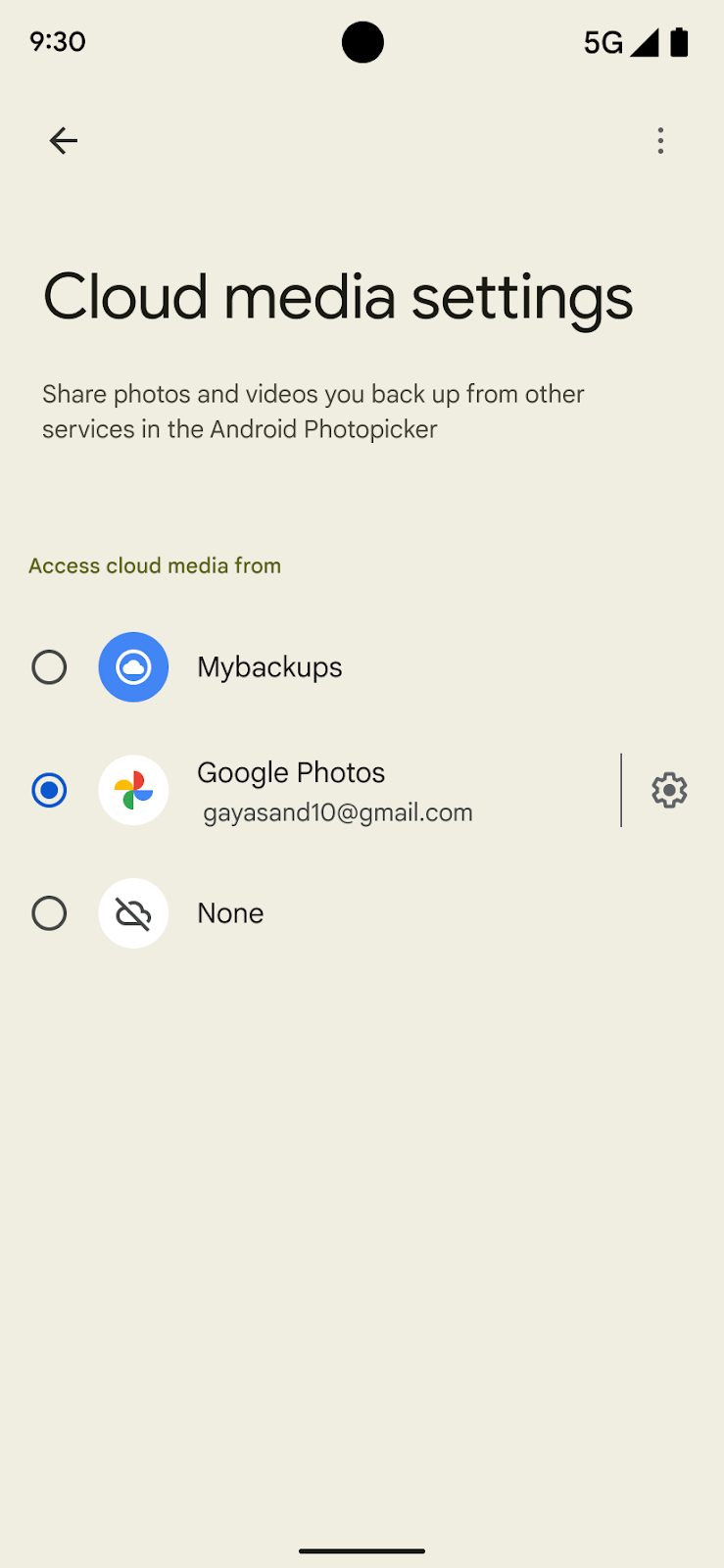

The Android photo picker will attempt to auto-select a cloud media app for you, but you can change or remove your selected cloud media app at any time from photo picker settings.

Migrate today for an enhanced, frictionless experience

The Android photo picker substantially reduces friction by not requiring any runtime permissions. If you switch from a custom photo picker to the Android photo picker, you can offer this enhanced experience with cloud photos to your users and reduce or entirely eliminate the overhead of acquiring and managing access to photos on the device. (Note that apps that do not need persistent or broad access to photos, for example apps that only set a profile picture, must adopt the Android photo picker in lieu of any sensitive file permissions to adhere to Google Play policy.)

The photo picker has been backported to Android 4.4 to make it easy to migrate without needing to worry about device compatibility. Access to cloud content will only be available for users running Android 12 and higher, but developers do not need to account for this when integrating the photo picker into their apps. To use the photo picker in your app, update the AndroidX Activity dependency to version 1.7.x or above and add the following code snippet:

    // Registers a photo picker activity launcher in single-select mode.
    val pickMedia = registerForActivityResult(PickVisualMedia()) { uri ->
        // Callback is invoked after the user selects a media item or closes the
        // photo picker.
        if (uri != null) {
            Log.d("PhotoPicker", "Selected URI: $uri")
        } else {
            Log.d("PhotoPicker", "No media selected")
        }
    }

    // Launch the photo picker and let the user choose images and videos.
    pickMedia.launch(PickVisualMediaRequest(PickVisualMedia.ImageAndVideo))

    // Launch the photo picker and let the user choose only images.
    pickMedia.launch(PickVisualMediaRequest(PickVisualMedia.ImageOnly))

    // Launch the photo picker and let the user choose only videos.
    pickMedia.launch(PickVisualMediaRequest(PickVisualMedia.VideoOnly))
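
For reference, the dependency update mentioned before the snippet might look like the following in a module-level Gradle Kotlin DSL file. This is only a sketch under assumptions: the activity-ktx artifact and the exact version 1.7.0 are illustrative choices that do not come from this post, and any 1.7.x or later release should meet the stated requirement.

```kotlin
// build.gradle.kts (module level)
dependencies {
    // Assumption: the Kotlin extensions artifact of AndroidX Activity is used.
    // The version shown is an example; use any 1.7.x or newer release.
    implementation("androidx.activity:activity-ktx:1.7.0")
}
```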

More customization options are listed in our developer documentation.
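
As one example of such an option, the same AndroidX Activity library offers a multi-select contract, PickMultipleVisualMedia. The sketch below is illustrative rather than a recommended implementation: the activity name MultiPickActivity, the openPicker() helper, and the limit of 5 items are hypothetical choices made for this example.

```kotlin
import android.util.Log
import androidx.activity.ComponentActivity
import androidx.activity.result.PickVisualMediaRequest
import androidx.activity.result.contract.ActivityResultContracts.PickMultipleVisualMedia
import androidx.activity.result.contract.ActivityResultContracts.PickVisualMedia

// Hypothetical activity, used only to give the launcher a home.
class MultiPickActivity : ComponentActivity() {

    // Registers a photo picker launcher in multi-select mode.
    // The cap of 5 items is an arbitrary value chosen for this sketch.
    private val pickMultipleMedia =
        registerForActivityResult(PickMultipleVisualMedia(5)) { uris ->
            // Callback is invoked after the user selects media items or
            // closes the photo picker.
            if (uris.isNotEmpty()) {
                Log.d("PhotoPicker", "Number of items selected: ${uris.size}")
            } else {
                Log.d("PhotoPicker", "No media selected")
            }
        }

    // Hypothetical helper: call this from a click listener or similar
    // user action to open the picker for images and videos.
    fun openPicker() {
        pickMultipleMedia.launch(PickVisualMediaRequest(PickVisualMedia.ImageAndVideo))
    }
}
```

The launcher is registered as a property so that registration happens before the activity is started, and the launch call is kept in a separate function so the picker opens only in response to a user action.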

Posted by Roxanna Aliabadi Walker – Product Manager
