We’re sharing a few ways to use the new Find My Device experience on Android.

5 ways to use the new Find My Device on Android

Cybercriminals continue to invest in advanced financial fraud scams, costing consumers more than $1 trillion in losses. According to the 2023 Global State of Scams Report by the Global Anti-Scam Alliance, 78 percent of mobile users surveyed experienced at least one scam in the last year. Of those surveyed, 45 percent said they had experienced more scams in the last 12 months.

The report also found that phone calls are the top method used to initiate a scam. Scammers frequently employ social engineering tactics to deceive mobile users.

The key place where these scammers want individuals to take action is in the tools that give access to their money. This means financial services are frequently targeted. As cybercriminals push forward with more scams and their reach extends globally, it’s important to innovate in the response.

One such innovator is Monzo, who have been able to tackle scam calls through a unique impersonation detection feature in their app.

Founded in 2015, Monzo is the largest digital bank in the UK, with a presence in the US as well. Their mission is to make money work for everyone, with an ambition to become the one app customers turn to in order to manage their entire financial lives.

Impersonation fraud is an issue that the entire industry is grappling with, and Monzo decided to take action by introducing an industry-first tool. An impersonation scam is a very common social engineering tactic in which a criminal pretends to be someone else so they can encourage you to send them money. These scams often rely on urgent pretenses, such as a risk to a user’s finances or an opportunity for quick wealth. With this pressure, fraudsters convince users to disable security safeguards and ignore proactive warnings for potential malware, scams, and phishing.

Android offers multiple layers of spam and phishing protection for users, including caller ID and spam protection in the Phone by Google app. Monzo’s team wanted to enhance that protection by leveraging their in-house telephone systems. By integrating these with their mobile application infrastructure, they could help their customers confirm in real time, and in a privacy-preserving way, when they’re actually talking to a member of Monzo’s customer support team.

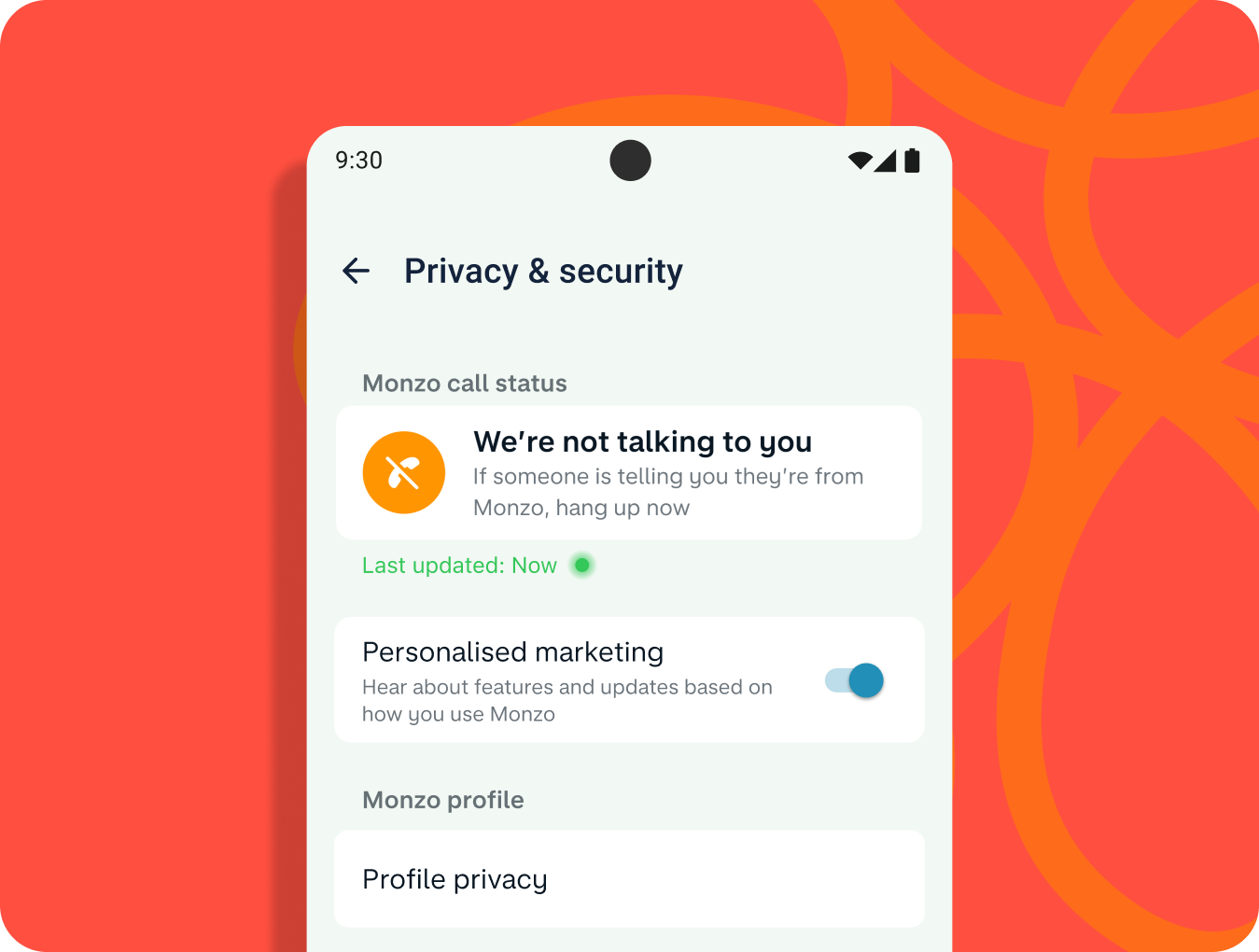

If someone calls a Monzo customer stating they are from the bank, their users can go into the app to verify this. In the Monzo app’s Privacy & Security section, users can see the ‘Monzo Call Status’, letting them know if there is an active call ongoing with an actual Monzo team member.

“We’ve built this industry-first feature using our world-class tech to provide an additional layer of comfort and security. Our hope is that this could stop instances of impersonation scams for Monzo customers from happening in the first place and impacting customers.”- Priyesh Patel, Senior Staff Engineer, Monzo’s Security team

If a user is not talking to a member of Monzo’s customer support team, the app says so and shows some helpful information. If the ‘Monzo Call Status’ shows that the user is not speaking to Monzo, the feature tells them to hang up right away and report the call to Monzo’s team. Customers can start a scam report directly from the call status feature in the app.

If a genuine call is ongoing, the customer will see that confirmed in the app.

Monzo has integrated a few systems together to help inform their customers. A cross functional team was put together to build a solution.

Monzo’s in-house technology stack meant that the systems that power their app and customer service phone calls can easily communicate with one another. This allowed them to link the two and share details of customer service calls with their app, accurately and in real-time.

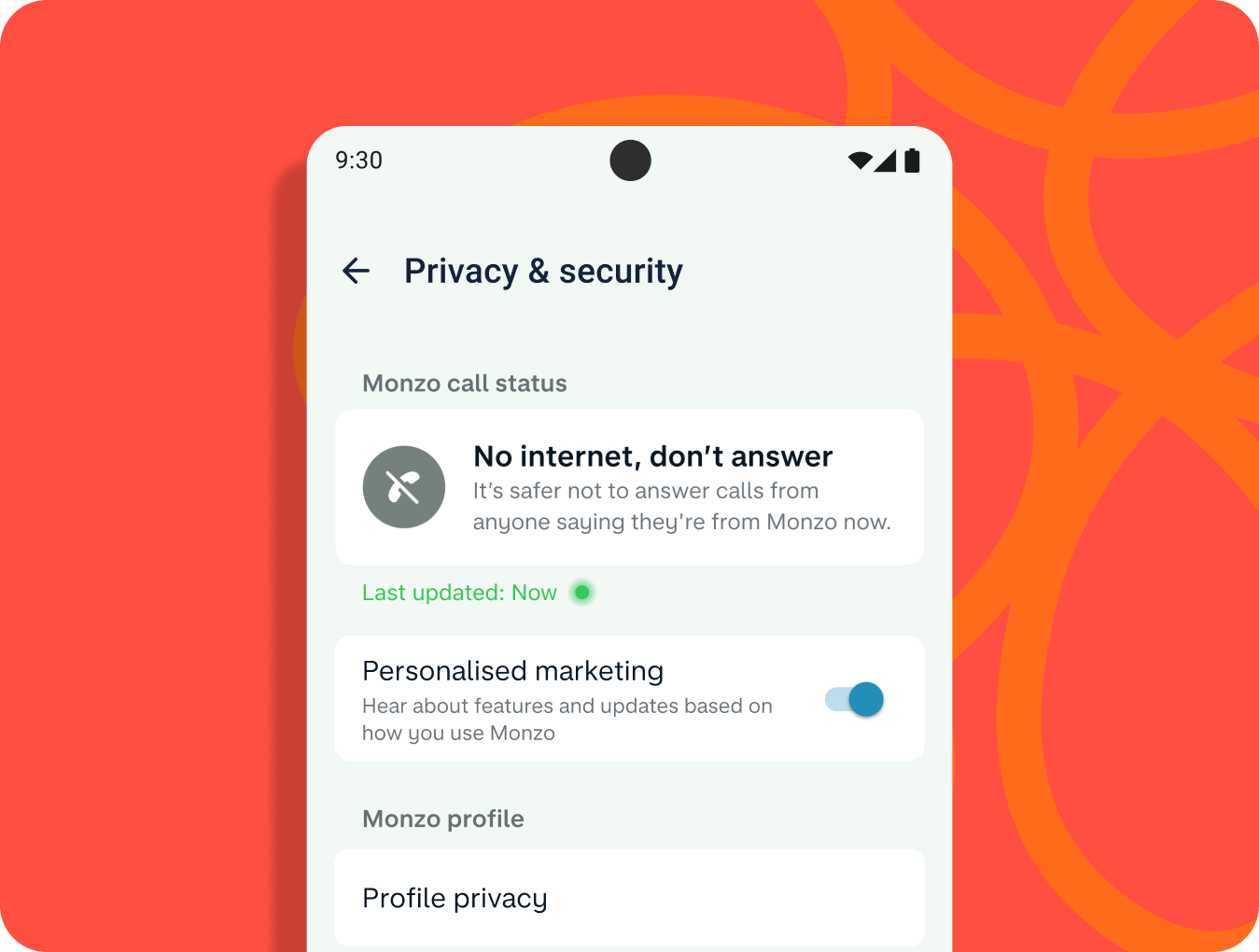

The team then worked to identify edge cases, like when the user is offline. In this situation Monzo recommends that customers don’t speak to anyone claiming to be from Monzo until they’re connected to the internet again and can check the call status within the app.

The feature has proven highly effective in safeguarding customers, and received universal praise from industry experts and consumer champions.

“Since we launched Call Status, we receive an average of around 700 reports of suspected fraud from our customers through the feature per month. Now that it’s live and helping protect customers, we’re always looking for ways to improve Call Status - like making it more visible and easier to find if you’re on a call and you want to quickly check that who you’re speaking to is who they say they are.”- Priyesh Patel, Senior Staff Engineer, Monzo’s Security team

Monzo continues to invest and innovate in fraud prevention. The call status feature brings together both technological innovation and customer education to achieve its success, and gives their customers a way to catch scammers in action.

A layered security approach is a great way to protect users. Android and Google Play provide layers like app sandboxing, Google Play Protect, and privacy preserving permissions, and Monzo has built an additional one in a privacy-preserving way.

To learn more about Android and Play’s protections and to further protect your app check out these resources:

With steady improvements to Android userspace and kernel security, we have noticed an increasing interest from security researchers directed towards lower level firmware. This area has traditionally received less scrutiny, but is critical to device security. We have previously discussed how we have been prioritizing firmware security, and how to apply mitigations in a firmware environment to protect against unknown vulnerabilities.

In this post we will show how the Kernel Address Sanitizer (KASan) can be used to proactively discover vulnerabilities earlier in the development lifecycle. Despite the narrow application implied by its name, KASan is applicable to a wide range of firmware targets. Using KASan-enabled builds during testing and/or fuzzing can help catch memory corruption vulnerabilities and stability issues before they land on user devices. We've already used KASan in some firmware targets to proactively find and fix 40+ memory safety bugs and vulnerabilities, including some of critical severity.

Along with this blog post we are releasing a small project which demonstrates an implementation of KASan for bare-metal targets leveraging the QEMU system emulator. Readers can refer to this implementation for technical details while following the blog post.

Address sanitizer is a compiler-based instrumentation tool used to identify invalid memory access operations during runtime. It is capable of detecting the following classes of temporal and spatial memory safety bugs:

ASan relies on the compiler to instrument code with dynamic checks for virtual addresses used in load/store operations. A separate runtime library defines the instrumentation hooks for the heap memory and error reporting. For most user-space targets (such as aarch64-linux-android) ASan can be enabled as simply as using the -fsanitize=address compiler option for Clang due to existing support of this target both in the toolchain and in the libclang_rt runtime.

However, the situation is rather different for bare-metal code which is frequently built with the none system targets, such as arm-none-eabi. Unlike traditional user-space programs, bare-metal code running inside an embedded system often doesn’t have a common runtime implementation. As such, LLVM can’t provide a default runtime for these environments.

To provide custom implementations for the necessary runtime routines, the Clang toolchain exposes an interface for address sanitization through the -fsanitize=kernel-address compiler option. The KASan runtime routines implemented in the Linux kernel serve as a great example of how to define a KASan runtime for targets which aren’t supported by default with -fsanitize=address. We'll demonstrate how to use the version of address sanitizer originally built for the kernel on other bare-metal targets.

Let’s take a look at the KASan major building blocks from a high-level perspective (a thorough explanation of how ASan works under-the-hood is provided in this whitepaper).

The main idea behind KASan is that every memory access operation, such as a load/store instruction or a memory copy function (for example, memmove and memcpy), is instrumented with code which verifies the destination/source memory regions. KASan only allows memory access operations which use valid memory regions. When KASan detects an access to a memory region which is invalid (that is, the memory has already been freed or the access is out-of-bounds), it reports this violation to the system.

The state of memory regions covered by KASan is maintained in a dedicated area called shadow memory. Every byte in the shadow memory corresponds to a single fixed-size memory region covered by KASan (typically 8 bytes) and encodes its state: whether the corresponding memory region has been allocated or freed and how many bytes in the memory region are accessible.

Therefore, to enable KASan for a bare-metal target we would need to implement the instrumentation routines which verify validity of memory regions in memory access operations and report KASan violations to the system. In addition we would also need to implement shadow memory management to track the state of memory regions which we want to be covered with KASan.

The very first step in enabling KASan for firmware is to reserve a sufficient amount of DRAM for shadow memory. This is a memory region where each byte is used by KASan to track the state of an 8-byte region. This means accommodating the shadow memory requires a dedicated memory region equal to 1/8th the size of the address space covered by KASan.

KASan maps every 8-byte aligned address from the DRAM region into the shadow memory using the following formula:

shadow_address = (target_address >> 3) + shadow_memory_base

where target_address is the address of an 8-byte memory region which we want to cover with KASan and shadow_memory_base is the base address of the shadow memory area.
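The mapping formula can be written as a one-line helper. The following is a minimal sketch, not code from the released project: the name kasan_mem_to_shadow and the KASAN_SHADOW_SCALE_SHIFT macro are chosen here for illustration, and the shadow base is passed as a parameter rather than fixed at build time.

```c
#include <assert.h>
#include <stdint.h>

/* Each shadow byte describes one 8-byte granule, hence the shift by 3. */
#define KASAN_SHADOW_SCALE_SHIFT 3

/*
 * Hypothetical helper: translate a target address into the address of the
 * shadow byte that tracks it, following the formula in the text.
 * shadow_memory_base is chosen by whoever integrates KASan into the target.
 */
static inline uintptr_t kasan_mem_to_shadow(uintptr_t target_address,
                                            uintptr_t shadow_memory_base)
{
    return (target_address >> KASAN_SHADOW_SCALE_SHIFT) + shadow_memory_base;
}
```

Because of the shift, all eight addresses inside one aligned 8-byte region resolve to the same shadow byte, and the next region resolves to the next shadow byte.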

Once we have the shadow memory tracking the state of every single 8-byte memory region of DRAM we need to implement the necessary runtime routines which KASan instrumentation depends on. For reference, a comprehensive list of runtime routines needed for KASan can be found in the linux/mm/kasan/kasan.h Linux kernel header. However, it might not be necessary to implement all of them and in the following text we focus on the ones which were needed to enable KASan for our target firmware as an example.

The routines __asan_loadXX_noabort and __asan_storeXX_noabort verify memory accesses at runtime. XX denotes the size of the memory access in bytes and is a power of 2 from 1 to 16. The toolchain instruments every memory load and store operation with these functions so that they are invoked before the memory access happens. These routines take as input a pointer to the target memory region and check it against the shadow memory.
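To make the check concrete, here is a small host-side sketch of what such a routine might do. It is an illustration under stated assumptions rather than the runtime described in this post: the covered memory and its shadow are simulated with static arrays, the shadow encoding follows the usual ASan convention (0 for fully accessible, 1 to 7 for a partially accessible region, values of 0x80 and above for poisoned), and the sim_ names are hypothetical stand-ins for the real __asan_load4_noabort and __asan_store4_noabort hooks.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

#define GRANULE     8u    /* bytes of memory tracked by one shadow byte */
#define REGION_SIZE 256u  /* size of the simulated covered region */

static uint8_t region[REGION_SIZE];           /* memory covered by the checks */
static uint8_t shadow[REGION_SIZE / GRANULE]; /* one state byte per granule */
static unsigned kasan_violations;             /* stand-in for error reporting */

/*
 * Assumed shadow encoding (the usual ASan convention):
 *   0x00        all 8 bytes of the granule are accessible
 *   0x01..0x07  only the first N bytes are accessible
 *   >= 0x80     the whole granule is poisoned
 * Returns 1 when every byte of [p, p + size) is accessible.
 */
static int kasan_access_ok(const void *p, size_t size)
{
    size_t start = (size_t)((const uint8_t *)p - region);

    assert(size <= REGION_SIZE && start <= REGION_SIZE - size);
    for (size_t off = start; off < start + size; off++) {
        uint8_t state = shadow[off / GRANULE];
        if (state >= 0x80)
            return 0;                       /* poisoned granule */
        if (state != 0 && off % GRANULE >= state)
            return 0;                       /* past the accessible prefix */
    }
    return 1;
}

/* Hypothetical equivalents of __asan_load4_noabort / __asan_store4_noabort:
 * they record the violation and return instead of aborting. */
static void sim_asan_load4_noabort(const void *p)
{
    if (!kasan_access_ok(p, 4))
        kasan_violations++;
}

static void sim_asan_store4_noabort(const void *p)
{
    if (!kasan_access_ok(p, 4))
        kasan_violations++;
}
```

The byte-by-byte loop favors readability. A production runtime would typically inspect one or two shadow bytes per access instead, since an access of at most 16 bytes can span only a few granules.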

If the region state provided by shadow memory doesn’t reveal a violation, then these functions return to the caller. But if any violations (for example, the memory region is accessed after it has been deallocated or there is an out-of-bounds access) are revealed, then these functions report the KASan violation by:

The routine __asan_set_shadow_YY is used to poison shadow memory for a given address. This routine is used by the toolchain instrumentation to update the state of memory regions. For example, the KASan runtime would use this function to mark memory for local variables on the stack as accessible/poisoned in the epilogue/prologue of the function respectively.

This routine takes as input a target memory address and sets the corresponding byte in shadow memory to the value of YY. Here is an example of some YY values for shadow memory to encode state of 8-byte memory regions:

The routines __asan_register_globals, __asan_unregister_globals are used to poison/unpoison memory for global variables. The KASan runtime calls these functions while processing global constructors/destructors. For instance, the routine __asan_register_globals is invoked for every global variable. It takes as an argument a pointer to a data structure which describes the target global variable: the structure provides the starting address of the variable, its size not including the red zone and size of the global variable with the red zone.

The red zone is extra padding the compiler inserts after the variable to increase the likelihood of detecting an out-of-bounds memory access. Red zones ensure there is extra space between adjacent global variables. It is the responsibility of __asan_register_globals routine to mark the corresponding shadow memory as accessible for the variable and as poisoned for the red zone.

As its name suggests, the routine __asan_unregister_globals is invoked while processing global destructors and is intended to poison shadow memory for the target global variable. As a result, any memory access to such a global will cause a KASan violation.
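The bookkeeping for a single global can be sketched as follows. This is a simplified illustration, not the structure layout the compiler actually emits: struct sim_global holds only the three fields named in the text, addresses are offsets into a simulated data section, and the poison value 0xF9 is an illustrative choice. The sketch also assumes the variable starts on an 8-byte boundary and that its size including the red zone is a multiple of 8.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>
#include <string.h>

#define GRANULE               8u
#define SECTION_SIZE          256u   /* simulated data section */
#define SHADOW_GLOBAL_REDZONE 0xF9u  /* illustrative poison value */

static uint8_t shadow[SECTION_SIZE / GRANULE];

/* Simplified, hypothetical descriptor with only the fields named above. */
struct sim_global {
    size_t beg;                /* offset of the variable, 8-byte aligned */
    size_t size;               /* size of the variable itself */
    size_t size_with_redzone;  /* size plus red zone, a multiple of 8 */
};

/* Mark the variable as accessible and its red zone as poisoned. */
static void sim_register_global(const struct sim_global *g)
{
    uint8_t *s = &shadow[g->beg / GRANULE];
    size_t full = g->size / GRANULE;   /* fully accessible granules */
    size_t tail = g->size % GRANULE;   /* bytes used in the last granule */
    size_t total = g->size_with_redzone / GRANULE;

    assert(g->beg % GRANULE == 0 && g->size_with_redzone % GRANULE == 0);
    assert(g->size <= g->size_with_redzone);
    assert(g->beg + g->size_with_redzone <= SECTION_SIZE);

    memset(s, SHADOW_GLOBAL_REDZONE, total);  /* poison everything first */
    memset(s, 0, full);                       /* then open the variable */
    if (tail != 0)
        s[full] = (uint8_t)tail;              /* partially accessible granule */
}

/* Poison the variable and its red zone so later accesses are reported. */
static void sim_unregister_global(const struct sim_global *g)
{
    memset(&shadow[g->beg / GRANULE], SHADOW_GLOBAL_REDZONE,
           g->size_with_redzone / GRANULE);
}
```

For a 12-byte variable padded to 32 bytes, registration leaves one fully accessible shadow byte, one shadow byte with the value 4, and two poisoned shadow bytes for the red zone.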

The KASan compiler instrumentation routines __asan_loadXX_noabort and __asan_storeXX_noabort discussed above are used to verify individual memory load and store operations, such as reading or writing an array element or dereferencing a pointer. However, these routines don't cover memory access in bulk-memory copy functions such as memcpy, memmove, and memset. In many cases these functions are provided by the runtime library or implemented in assembly to optimize for performance.

Therefore, in order to be able to catch invalid memory access in these functions, we would need to provide sanitized versions of memcpy, memmove, and memset functions in our KASan implementation which would verify memory buffers to be valid memory regions.
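A sanitized copy routine might look like the sketch below. It is a host-side illustration under assumptions, not the implementation from the released project: the covered memory and shadow are simulated with static arrays, the shadow encoding is the usual ASan convention (0 accessible, 1 to 7 partially accessible, 0x80 and above poisoned), and the names sim_range_ok and sim_kasan_memcpy are hypothetical. The sketch skips the copy when a violation is found, which is one possible policy; a real runtime might report the error and continue, or halt.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>
#include <string.h>

#define GRANULE     8u
#define REGION_SIZE 256u

static uint8_t region[REGION_SIZE];           /* simulated covered memory */
static uint8_t shadow[REGION_SIZE / GRANULE]; /* 0 = accessible, >= 0x80 = poisoned */
static unsigned kasan_violations;             /* stand-in for error reporting */

/* Returns 1 when [p, p + len) lies inside the region and is fully accessible. */
static int sim_range_ok(const void *p, size_t len)
{
    uintptr_t addr = (uintptr_t)p;
    uintptr_t base = (uintptr_t)region;

    if (addr < base || len > REGION_SIZE || addr - base > REGION_SIZE - len)
        return 0;
    for (size_t off = addr - base; off < addr - base + len; off++) {
        uint8_t state = shadow[off / GRANULE];
        if (state >= 0x80 || (state != 0 && off % GRANULE >= state))
            return 0;
    }
    return 1;
}

/* Hypothetical sanitized memcpy: validate both buffers, then copy. */
static void *sim_kasan_memcpy(void *dst, const void *src, size_t len)
{
    if (!sim_range_ok(src, len) || !sim_range_ok(dst, len)) {
        kasan_violations++;   /* report; this sketch then skips the copy */
        return dst;
    }
    return memcpy(dst, src, len);
}
```

Sanitized memmove and memset wrappers would follow the same pattern, with memset needing only the destination check.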

Another routine required by KASan is __asan_handle_no_return, to perform cleanup before a noreturn function and avoid false positives on the stack. KASan adds red zones around stack variables at the start of each function, and removes them at the end. If a function does not return normally (for example, in case of longjmp-like functions and exception handling), red zones must be removed explicitly with __asan_handle_no_return.

Bare-metal code in the vast majority of cases provides its own heap implementation. It is our responsibility to implement an instrumented version of heap memory allocation and freeing routines which enable KASan to detect memory corruption bugs on the heap.

Essentially, we would need to instrument the memory allocator with code that unpoisons the KASan shadow memory corresponding to the allocated memory buffer. Additionally, we may want to append an extra poisoned red zone to the end of the allocated buffer, so that any access to it generates a KASan violation, to increase the likelihood of catching out-of-bounds memory reads/writes.

Similarly, in the memory deallocation routine (such as free) we would need to poison the shadow memory corresponding to the freed buffer so that any subsequent access (such as a use-after-free) would generate a KASan violation.

We can go even further by placing the freed memory buffer into a quarantine instead of immediately returning the free memory back to the allocator. This way, the freed memory buffer is suspended in quarantine for some time and will have its KASan shadow bytes poisoned for a longer period of time, increasing the probability of catching a use-after-free access to this buffer.
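The allocator changes described above can be sketched with a toy fixed-slot heap. Everything here is an assumption made for illustration: the slot size, the quarantine depth, the poison values 0xFA and 0xFD, and the sim_ function names are all hypothetical, and a real firmware heap would be instrumented in place rather than replaced. Allocation unpoisons the requested bytes and leaves the rest of the slot as a red zone, freeing poisons the whole slot, and a small FIFO quarantine delays reuse of freed slots.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>
#include <string.h>

#define GRANULE          8u
#define SLOT_SIZE        64u                    /* fixed-size heap slots */
#define NUM_SLOTS        8u
#define MAX_ALLOC        (SLOT_SIZE - GRANULE)  /* keep at least 8 bytes of red zone */
#define QUARANTINE_DEPTH 4u

/* Illustrative poison values. */
#define SHADOW_REDZONE 0xFAu
#define SHADOW_FREED   0xFDu

enum slot_state { SLOT_FREE, SLOT_USED, SLOT_QUARANTINED };

static uint8_t heap[SLOT_SIZE * NUM_SLOTS];
static uint8_t shadow[SLOT_SIZE * NUM_SLOTS / GRANULE];
static enum slot_state states[NUM_SLOTS];
static unsigned quarantine[QUARANTINE_DEPTH];   /* FIFO of freed slot indices */
static unsigned q_head, q_count;

static uint8_t *slot_shadow(unsigned slot)
{
    return &shadow[slot * SLOT_SIZE / GRANULE];
}

/* Start with the whole heap poisoned and every slot available. */
static void sim_heap_init(void)
{
    memset(shadow, SHADOW_FREED, sizeof(shadow));
    for (unsigned i = 0; i < NUM_SLOTS; i++)
        states[i] = SLOT_FREE;
    q_head = q_count = 0;
}

static void *sim_malloc(size_t size)
{
    if (size == 0 || size > MAX_ALLOC)
        return NULL;
    for (unsigned i = 0; i < NUM_SLOTS; i++) {
        if (states[i] != SLOT_FREE)
            continue;
        uint8_t *s = slot_shadow(i);
        memset(s, SHADOW_REDZONE, SLOT_SIZE / GRANULE); /* red zone by default */
        memset(s, 0, size / GRANULE);                   /* unpoison full granules */
        if (size % GRANULE != 0)
            s[size / GRANULE] = (uint8_t)(size % GRANULE);
        states[i] = SLOT_USED;
        return &heap[i * SLOT_SIZE];
    }
    return NULL;  /* no free slot (quarantined slots are not reused yet) */
}

static void sim_free(void *p)
{
    unsigned slot = (unsigned)(((uint8_t *)p - heap) / SLOT_SIZE);

    assert(slot < NUM_SLOTS && states[slot] == SLOT_USED);
    memset(slot_shadow(slot), SHADOW_FREED, SLOT_SIZE / GRANULE);
    states[slot] = SLOT_QUARANTINED;
    if (q_count == QUARANTINE_DEPTH) {
        /* Quarantine is full: release the oldest slot for reuse. */
        states[quarantine[q_head]] = SLOT_FREE;
        quarantine[q_head] = slot;
        q_head = (q_head + 1) % QUARANTINE_DEPTH;
    } else {
        quarantine[(q_head + q_count) % QUARANTINE_DEPTH] = slot;
        q_count++;
    }
}
```

The quarantine depth is a trade-off: a deeper queue keeps freed buffers poisoned for longer, at the cost of heap capacity that is temporarily unavailable.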

With all the necessary building blocks implemented we are ready to enable KASan for our bare-metal code by applying the following compiler options while building the target with the LLVM toolchain.

The -fsanitize=kernel-address Clang option instructs the compiler to instrument memory load/store operations with the KASan verification routines.

We use the -asan-mapping-offset LLVM option to indicate where we want our shadow memory to be located. For instance, let’s assume that we would like to cover address range 0x40000000 - 0x4fffffff and we want to keep shadow memory at address 0x4A700000. So, we would use -mllvm -asan-mapping-offset=0x42700000, since (0x40000000 >> 3) + 0x42700000 == 0x4A700000.
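The arithmetic behind this choice can be checked with two small helpers. This is a sketch for 32-bit address spaces, with hypothetical function names; it assumes the covered range starts and ends on 8-byte region boundaries.

```c
#include <assert.h>
#include <stdint.h>

#define KASAN_SHADOW_SCALE_SHIFT 3

/*
 * Value to pass to -asan-mapping-offset so that the shadow byte for
 * covered_start lands exactly at shadow_start.
 */
static inline uint32_t kasan_mapping_offset(uint32_t covered_start,
                                            uint32_t shadow_start)
{
    return shadow_start - (covered_start >> KASAN_SHADOW_SCALE_SHIFT);
}

/* Bytes of shadow memory needed for the inclusive range [start, end]. */
static inline uint32_t kasan_shadow_size(uint32_t covered_start,
                                         uint32_t covered_end)
{
    return ((covered_end - covered_start) >> KASAN_SHADOW_SCALE_SHIFT) + 1;
}
```

For the range in the text, kasan_mapping_offset(0x40000000, 0x4A700000) yields 0x42700000, and kasan_shadow_size(0x40000000, 0x4fffffff) yields 0x02000000, that is, 32 MiB of shadow for 256 MiB of covered memory.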

To cover globals and stack variables with KASan we would need to pass additional options to the compiler: -mllvm -asan-stack=1 -mllvm -asan-globals=1. It’s worth mentioning that instrumenting both globals and stack variables will likely result in an increase in size of the corresponding memory which might need to be accounted for in the linker script.

Finally, to prevent a significant increase in the size of the code section due to KASan instrumentation, we instruct the compiler to always outline KASan checks using the -mllvm -asan-instrumentation-with-call-threshold=0 option. Otherwise, the compiler might inline the __asan_loadXX_noabort and __asan_storeXX_noabort routines for load/store operations, bloating the generated object code.

LLVM has traditionally only supported sanitizers for specific targets with predefined runtimes. However, we have upstreamed LLVM sanitizer support for bare-metal targets under the assumption that the runtime can be defined for the particular target. You’ll need the latest version of Clang to benefit from this.

Following these steps we managed to enable KASan for a firmware target and use it in pre-production test builds. This led to early discovery of memory corruption issues that were easily remediated due to the actionable reports produced by KASan. These builds can be used with fuzzers to detect edge case bugs that normal testing fails to trigger, yet which can have significant security implications.

Our work with KASan is just one example of the multiple techniques the Android team is exploring to further secure bare-metal firmware in the Android Platform. Ideally we want to avoid introducing memory safety vulnerabilities in the first place so we are working to address this problem through adoption of memory-safe Rust in bare-metal environments. The Android team has developed Rust training which covers bare-metal Rust extensively. We highly encourage others to explore Rust (or other memory-safe languages) as an alternative to C/C++ in their firmware.

If you have any questions, please reach out – we’re here to help!

Acknowledgements: Thank you to Roger Piqueras Jover for contributions to this post, and to Evgenii Stepanov for upstreaming LLVM support for bare-metal sanitizers. Special thanks also to our colleagues who contribute and support our firmware security efforts: Sami Tolvanen, Stephan Somogyi, Stephan Chen, Dominik Maier, Xuan Xing, Farzan Karimi, Pirama Arumuga Nainar, Stephen Hines.

In celebration of Women’s History Month, we’re highlighting the founders behind groundbreaking apps and games from around the world, made by women or for women. Let's discover four of my favorites in this latest batch of nine #WeArePlay stories.

Royelles Revolution / Royelles Revolution: Gaming For Girls (USA)

Múkami's journey began when she noticed the lack of representation for girls in the gaming industry. Determined to change this narrative, she created Royelles, a game designed to inspire girls and non-binary people to pursue careers in STEAM (science, technology, engineering, art, math) fields. The game is anchored in fierce female avatars, like the one voiced by real-life NASA scientist Mara. Royelles is revolutionizing the gaming landscape and empowering the next generation of innovators. Múkami's excited to release more gamified stories and learning modules, and a range of extended reality and AI-powered avatars based on the game’s characters.

“If we're going to effectively educate Gen Z and Gen Alpha, we have to meet them in the metaverse and leverage gamified play as a means of driving education, awareness, inspiration and empowerment.”- Múkami

Reblood: Blood Services App (Indonesia)

When her university friend needed an urgent blood transfusion but discovered there was none available in the blood bank, Leonika became aware of the blood donation shortage in Indonesia. Her mission to address this led her to create Reblood, an app connecting blood donors with those in need. With over 140,000 blood donations facilitated to date, Reblood is not only saving lives but also promoting healthier lifestyles with a recently added feature that allows people to find the most affordable medical checkups.

“Our goal is to save more lives by raising awareness of blood donation in Indonesia and promoting healthier lifestyles for blood donors.”- Leonika

CARSUL / Car Sul: Urban Mobility App (Brazil)

Luciane was devastated when she lost her son in a car accident. Her and her husband Renato's loss led them to develop Carsul, an urban mobility app prioritizing safety and security. By providing safe transportation options and partnering with government health programs to chauffeur patients long distances to larger hospitals, Carsul is not only preventing accidents but also saving lives. Luciane and Renato's dedication to protecting others from the pain they've experienced is ongoing and they plan to expand to more cities in Brazil.

“Carsul was born from this story of loss, inspiring me to protect other lives. Redefining myself in this way is very rewarding.”- Luciane

Resonantes / App-Elles: Safety App for Women (France)

After hearing the stories of young people who had experienced abuse similar to her own, spoken word artist Diata developed App-Elles, an app that allows women to send alerts when they're in danger. By connecting users with support networks and professional services, App-Elles is empowering women to reclaim their safety and seek help when needed. Diata also runs writing and recording workshops to help victims overcome their experiences with violence and has plans to expand her app with the introduction of a discreet wearable that sends out alerts.

“I realized from my work on the ground that there were victims of violence who needed help and support systems. This was my inspiration to create App-Elles.”- Diata

Discover more #WeArePlay stories and share your favorites.

Today marks the second chapter of the Android 15 story with the release of Android 15 Developer Preview 2!

Android 15 continues our work to build a platform that helps improve your productivity while giving you new capabilities to produce superior media and AI experiences, take advantage of device form factors, minimize battery impact, maximize smooth app performance, and protect user privacy and security, all on the most diverse lineup of devices out there.

Android continues to add features enabling your apps to take advantage of premium device hardware, including the latest telecommunications features, high-end media capabilities, dazzling displays, foldable/flippable form factors, and AI processing.

Your feedback on the Android 15 Developer Preview and Beta program plays a key role in helping Android continuously improve. The Android 15 developer site has more information about the preview, including downloads for Pixel and detailed documentation about changes. This preview is just the beginning, and we’ll have lots more to share as we move through the release cycle. Thank you in advance for your help in making Android a platform that works for everyone.

Android 15 updates the platform to give your app access to the latest advances in communication.

Android 15 continues to extend platform support for satellite connectivity and includes some UI elements to ensure a consistent user experience across the satellite connectivity landscape.

Apps can use ServiceState.isUsingNonTerrestrialNetwork() to detect when a device is connected to a satellite, giving them more awareness of why full network services may be unavailable. Additionally, Android 15 provides support for SMS/MMS applications as well as preloaded RCS applications to use satellite connectivity for sending and receiving messages.

Android 15 is working to make the tap to pay experience more seamless and reliable while continuing to support Android's robust NFC app ecosystem. On supported devices, apps can request that the NfcAdapter enter observe mode, where the device will listen but not respond to NFC readers, sending the app's NFC service PollingFrame objects to process. The PollingFrame objects can be used to authenticate ahead of the first communication to the NFC reader, allowing for a one tap transaction in many cases.

While most of our work to improve your productivity centers around tools like Android Studio, Jetpack Compose, and the Android Jetpack libraries, we always look for ways in the platform to help you more easily realize your vision.

Android 15 Developer Preview 2 includes an early preview of substantial improvements to the PdfRenderer APIs, giving apps capabilities to incorporate advanced features such as rendering password-protected files, annotations, form editing, searching, and selection with copy. Linearized PDF optimizations are supported to speed local PDF viewing and reduce resource use.

The PdfRenderer has been moved to a module that can be updated using Google Play system updates independent of the platform release, and we're supporting these changes back to Android R by creating a compatible pre-Android 15 version of the API surface, called PdfRendererPreV.

We value your feedback on the enhancements we've made to the PdfRenderer API surface, and we plan to make it much easier to incorporate these APIs into your app with an upcoming Android Jetpack library. Stay tuned.

Android 14 added on-device multi-language audio recognition with automatic switching between languages, but this can cause words to get dropped, especially when languages switch with less of a pause between the two utterances. Android 15 adds controls that let apps tune this switching for their use case. EXTRA_LANGUAGE_SWITCH_INITIAL_ACTIVE_DURATION_TIME_MILLIS confines the automatic switching to the beginning of the audio session, while EXTRA_LANGUAGE_SWITCH_MAX_SWITCHES deactivates the language switching after a defined number of switches. This can be a useful refinement, particularly if the expectation is that there will be a single language spoken during the session that should be autodetected.

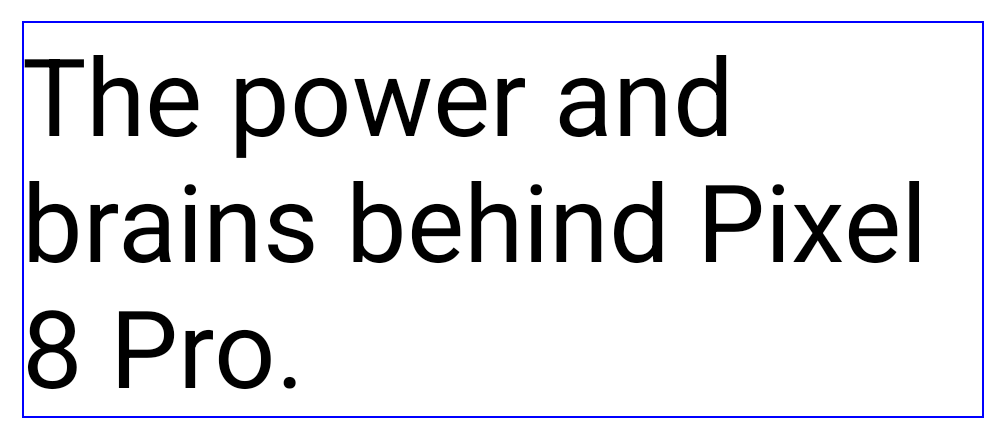

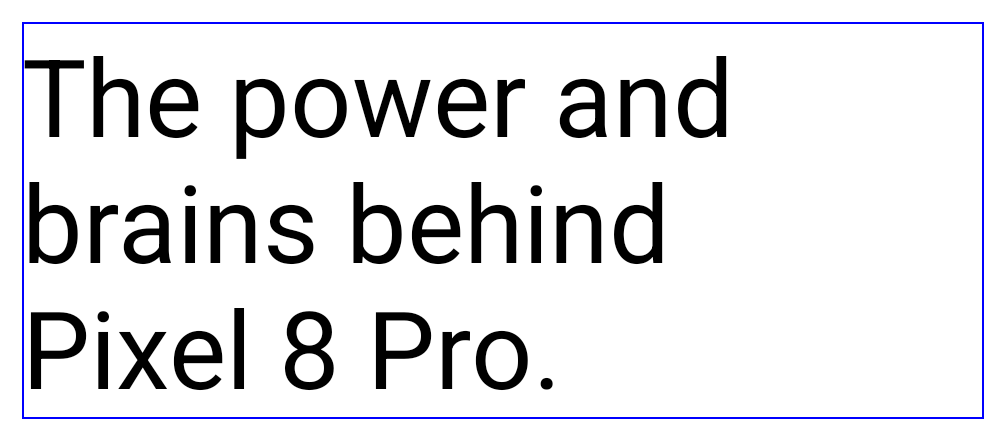

Starting in Android 15, the TextView and the underlying line breaker can keep a given portion of text on the same line to improve readability. You can take advantage of this line break customization by using the <nobreak> tag in string resources or createNoBreakSpan. Similarly, you can prevent words from being hyphenated by using the <nohyphen> tag or createNoHyphenationSpan.

Examples and screenshots:

    <resources>
        <string name="pixel8pro">The power and brains behind Pixel 8 Pro.</string>
    </resources>

    <resources>
        <string name="pixel8pro">The power and brains behind <nobreak>Pixel 8 Pro.</nobreak></string>
    </resources>

Android 15 builds in support for more precise Intent resolution through UriRelativeFilterGroup, which contains a set of UriRelativeFilter objects that form a set of Intent matching rules that must each be satisfied, including URL query parameters, URL fragments, and blocking/exclusion rules. This helps applications better keep up with the dynamic demands of web-hosted deep links.

These rules can be defined in the AndroidManifest with the new <uri-relative-filter-group> tag, which can optionally include an android:allow attribute. These tags can contain <data> tags that use existing data tag attributes as well as the new android:query and android:fragment attributes.

An example of the AndroidManifest syntax that will be supported:

    <intent-filter>
        <action android:name="android.intent.action.VIEW" />
        <category android:name="android.intent.category.BROWSABLE" />
        <data android:scheme="http" />
        <data android:scheme="https" />
        <data android:host="astore.com" />
        <uri-relative-filter-group>
            <data android:pathPrefix="/auth" />
            <data android:query="region=na" />
        </uri-relative-filter-group>
        <uri-relative-filter-group android:allow="false">
            <data android:pathPrefix="/auth" />
            <data android:query="mobileoptout=true" />
        </uri-relative-filter-group>
        <uri-relative-filter-group android:allow="false">
            <data android:pathPrefix="/auth" />
            <data android:fragmentPrefix="faq" />
        </uri-relative-filter-group>
    </intent-filter>

Android 15 continues to add OpenJDK APIs. Developer Preview 2 includes support for additional Math/StrictMath methods, lots of util updates including sequenced collection/map/set, ByteBuffer support in Deflater, and security key updates. These APIs are updated on over a billion devices running Android 12 through Android 15 via Google Play system updates, so you can target the latest programming features.

Android 15 gives your apps the support to get the most out of Android's form factors, including large screens, flippables, and foldables.

Your app can declare a property that Android 15 uses to allow your Application or Activity to be presented on the small cover screens of supported flippable devices. These screens are too small to be considered as compatible targets for Android apps to run on, but your app can opt in to supporting them, making your app available in more places.

We're always looking to give users more transparency and control over their data while enhancing the core security features of the platform.

Android 15 adds support for apps to detect that they are being recorded. A callback is invoked whenever the app transitions between being visible or invisible within a screen recording. (An app is considered visible if activities owned by the registering process's UID are being recorded.) This way, if your app is performing a sensitive operation, you can inform the user that they're being recorded.

    val mCallback = Consumer<Int> { state ->
        if (state == SCREEN_RECORDING_STATE_VISIBLE) {
            // we're being recorded
        } else {
            // we're not being recorded
        }
    }

    override fun onStart() {
        super.onStart()
        val initialState =
            windowManager.addScreenRecordingCallback(mainExecutor, mCallback)
        mCallback.accept(initialState)
    }

    override fun onStop() {
        super.onStop()
        windowManager.removeScreenRecordingCallback(mCallback)
    }

We are introducing new APIs that can help you gather insights about your apps, continuing to optimize the way background applications work, and providing APIs to help make tasks in your app more efficient to execute.

App startup on Android has always been a bit of a mystery. There was no easy way to know within your app whether it started from a cold, warm, or hot state. It was difficult to know how long your app spent during the various launch phases: forking the process, calling onCreate, drawing the first frame, and more. When your application class was instantiated, you had no way of knowing whether the app started from a broadcast, a content provider, a job, a backup, boot complete, an alarm, or an Activity.

The ApplicationStartInfo API on Android 15 gives you all of this and more. You can even choose to add your own timestamps into the flow to make it easy to collect timing data in one place. In addition to collecting metrics, you can use ApplicationStartInfo to help directly optimize app startup; for example, you can eliminate the costly instantiation of UI-related libraries within your Application class when your app is starting up due to a broadcast.

Android 15 includes several improvements to the PackageManager’s Stopped State. Apps that are in a Stopped State should leave this state only through direct user action. Furthermore, apps entering the Stopped State will have their PendingIntents removed. To help developers re-register their pending intents, apps will now receive the BOOT_COMPLETED broadcast once they are removed from the Stopped State. Lastly, the new ApplicationStartInfo API includes ApplicationStartInfo.wasForceStopped() to let developers know that their app was put into the Stopped State.

Android has offered an API, StorageStats.getAppBytes(), that summarizes the installed size of an app as a single number of bytes, which is a sum of the APK size, the size of files extracted from the APK, and files that were generated on the device such as ahead-of-time (AOT) compiled code. This number is not very insightful in terms of how your app is using storage.

Android 15 adds the StorageStats.getAppBytesByDataType([type]) API, which allows you to get insight into how your app is using up all that space, including APK file splits, AOT and speedup related code, dex metadata, libraries, and guided profiles.
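A minimal sketch of querying this breakdown for your own package might look like the following. The function name `logAppBytesByType` and the log tag are hypothetical, and the `APP_DATA_TYPE_*` constant names are written from our reading of the Android 15 SDK, so treat them as assumptions and check them against the SDK you build with.

```kotlin
import android.app.usage.StorageStats
import android.app.usage.StorageStatsManager
import android.content.Context
import android.os.Process
import android.os.storage.StorageManager
import android.util.Log

// Hypothetical helper that logs this app's installed size broken down by data type.
fun logAppBytesByType(context: Context) {
    val statsManager = context.getSystemService(StorageStatsManager::class.java)

    // Query stats for this app's own package on the default internal storage volume.
    val stats = statsManager.queryStatsForPackage(
        StorageManager.UUID_DEFAULT,
        context.packageName,
        Process.myUserHandle()
    )

    // Assumed constant names; verify against the Android 15 SDK.
    val types = mapOf(
        "apk" to StorageStats.APP_DATA_TYPE_FILE_TYPE_APK,
        "dexopt artifacts" to StorageStats.APP_DATA_TYPE_FILE_TYPE_DEXOPT_ARTIFACT,
        "dex metadata" to StorageStats.APP_DATA_TYPE_FILE_TYPE_DM,
        "libraries" to StorageStats.APP_DATA_TYPE_LIB
    )

    types.forEach { (label, type) ->
        Log.d("AppStorage", "$label: ${stats.getAppBytesByDataType(type)} bytes")
    }

    // The single-number total that was available before Android 15.
    Log.d("AppStorage", "total: ${stats.appBytes} bytes")
}
```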

Android 14 began requiring Foreground Service Types. The documentation mentions that the dataSync Foreground Service type will be deprecated in a future version of Android.

To support migrating away from the dataSync Foreground Service type, Android 15 includes the mediaProcessing Foreground Service type, which is used to perform time-consuming operations on media assets, like converting media to different formats. In a future Beta release, this service will have a runtime limit of 6 hours.

Android 15 introduces new SQLite APIs that expose advanced features from the underlying SQLite engine that target specific performance issues that can manifest in apps.

Developers should consult best practices for SQLite performance to get the most out of their SQLite database, especially when working with large databases or when running latency-sensitive queries.

Each release of Android focuses on improving the media experience.

Android 15 chooses HDR headroom that is appropriate for the underlying device capabilities and bit-depth of the panel; for pages that have lots of SDR content such as a messaging app displaying a single HDR thumbnail, this can end up adversely influencing the perceived brightness of the SDR content. Android 15 allows you to control the HDR headroom with setDesiredHdrHeadroom to strike a balance between SDR and HDR content.

To enable loudness control, you need to ensure loudness metadata is available in your AAC content and enable the platform feature in your app. For this, you instantiate a LoudnessCodecController object by calling its create factory method with the audio session ID from the associated AudioTrack; this automatically starts applying audio updates. You can pass an OnLoudnessCodecUpdateListener to modify/filter loudness parameters before they are applied on the MediaCodec.

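// media contains metadata of type MPEG_4 OR MPEG_D
val mediaCodec = ...
val audioTrack = AudioTrack.Builder()
    .setSessionId(sessionId)
    .build()
...
// create new loudness controller that applies the parameters to the MediaCodec
try {
    val lcController = LoudnessCodecController.create(sessionId)
    // starts applying audio updates for each added MediaCodec
    // (assumed completion: register the codec with the controller)
    lcController.addMediaCodec(mediaCodec)
} catch (e: Exception) {
    // assumption: the controller could not be created or the codec could
    // not be added; handle or log as appropriate for your app
}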

AndroidX media3 ExoPlayer will soon be updated to leverage LoudnessCodecController APIs for a seamless app integration.

Android 12 included the Spatializer class, which enables querying the capabilities and behavior of sound spatialization on the device. In Android 15, we're deprecating the Virtualizer class; instead use AudioAttributes.Builder.setSpatializationBehavior to characterize how you want your content to be played when spatialization is supported.

AndroidX media3 ExoPlayer 1.0 enables spatial audio by default for multichannel audio when the device supports it. See the blog post and documentation for more information, including APIs to control the feature.

AutomaticZenRules allow apps to customize Attention Management (Do Not Disturb) rules and decide when to activate/deactivate them. Android 15 greatly enhances these rules with the goal of improving the user experience. It does this by:

Because backward compatibility is so important to us, we try to limit impactful behavior changes, but some are inevitable.

Once your app targets Android 15, the elegantTextHeight TextView attribute becomes true by default, replacing the compact font used by default with some scripts that have large vertical metrics with one that is much more readable. The compact font was introduced to prevent breaking layouts; Android 13 prevents many of these breakages by allowing the text layout to stretch the vertical height utilizing the fallbackLineSpacing attribute. In Android 15, the compact font still remains in the system, so your app can set elegantTextHeight to false to get the same behavior as before, but it is unlikely to be supported in upcoming releases. So, if your application supports the following scripts: Arabic, Lao, Myanmar, Tamil, Gujarati, Kannada, Malayalam, Odia, Telugu or Thai, please test your applications by setting elegantTextHeight to true.
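To try the new default before you change your target SDK, you can opt individual views in from code. This is only a small sketch; `useElegantTextHeight` is a hypothetical helper name, and the same setting is also available as the elegantTextHeight attribute in layouts.

```kotlin
import android.widget.TextView

// Hypothetical helper: opt a TextView in to the taller, more readable
// font metrics that become the default when targeting Android 15.
fun useElegantTextHeight(textView: TextView) {
    textView.isElegantTextHeight = true
}
```

Setting the property to false instead restores the compact font while it remains in the system.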

[Screenshots: default behavior as of Android 14, and default behavior for applications that target Android 15]

To give you more time to plan for app compatibility work, we’re letting you know our Platform Stability milestone well in advance.

At this milestone, we’ll deliver final SDK/NDK APIs and also final internal APIs and app-facing system behaviors. We’re expecting to reach Platform Stability in June 2024, and from that time you’ll have several months before the official release to do your final testing. The release timeline details are here.

The Developer Preview has everything you need to try the Android 15 features, test your apps, and give us feedback. You can get started today by flashing a system image onto a Pixel 6, 7, or 8 series device, along with the Pixel Fold and Pixel Tablet. We are not offering sideload images for Developer Preview 2. If you don’t have a Pixel device, you can use the 64-bit system images with the Android Emulator in Android Studio. If you've already installed Android 15 Developer Preview 1, you should get an over-the-air update to Android 15 Developer Preview 2.

For the best development experience with Android 15, we recommend that you use the latest preview of Android Studio Jellyfish (or more recent Jellyfish+ versions). Once you’re set up, here are some of the things you should do:

We’ll update the preview system images and SDK regularly throughout the Android 15 release cycle. This preview release is for developers only and not intended for daily or consumer use, so we're making it available by manual download only. Once you’ve manually installed a preview build, you’ll automatically get future updates over-the-air for all later previews and Betas. Read more here.

If you intend to move from the Android 14 QPR Beta program to the Android 15 Developer Preview program and don't want to have to wipe your device, we recommend that you move to Developer Preview 2 now. Otherwise you may run into time periods where the Android 14 Beta will have a more recent build date which will prevent you from going directly to the Android 15 Developer Preview without doing a data wipe.

As we reach our Beta releases, we'll be inviting consumers to try Android 15 as well, and we'll open up enrollment for the Android Beta program at that time. For now, please note that the Android Beta program is not yet available for Android 15.

For complete information, visit the Android 15 developer site.

Java and OpenJDK are trademarks or registered trademarks of Oracle and/or its affiliates.

Version 23.0.0 of the Android Google Mobile Ads SDK is now available. We recommend upgrading as soon as possible to get our latest features and performance improvements.

Starting in version 23.0.0, the Google Mobile Ads SDK requires all apps to be on a minimum Android API level 21 to run. To adjust the API level, change the value of minSdk in your app-level build.gradle file to 21 or higher.
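If your project uses the Kotlin DSL (an assumption; the text above refers to a Groovy build.gradle file, where the equivalent line is `minSdk 21`), the change might look like this fragment of an app-level build.gradle.kts:

```kotlin
// app/build.gradle.kts (Kotlin DSL form of the app-level build file)
android {
    defaultConfig {
        // Google Mobile Ads SDK 23.0.0 requires API level 21 or higher.
        minSdk = 21
    }
}

dependencies {
    implementation("com.google.android.gms:play-services-ads:23.0.0")
}
```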

In version 23.0.0, AdManagerAdRequest.Builder methods inherited from its parent can be chained together to build an AdManagerAdRequest using a single call:

var newRequest = AdManagerAdRequest.Builder()
    .addCustomTargeting("age", "25") // AdManagerAdRequest.Builder method.
    .setContentUrl("https://www.example.com") // Method inherited from parent.
    .build() // Builds an AdManagerAdRequest.

A side effect of this change is AdManagerAdRequest.Builder no longer inherits from AdRequest.Builder.

With this Android major version 23 launch and the iOS major version 11 launch last month, we are announcing new deprecation and sunset dates for older major releases. Specifically:

For the full list of changes in v23.0.0, check the release notes. Check our migration guide to ensure your mobile apps are ready to upgrade. As always, if you have any questions or need additional help, contact us via the developer forum.

Google I/O is arriving this year on May 14th and you’re invited to join us online! I/O offers something for everyone, whether you are developing a new application, modernizing an existing one, or transforming it into a business.

The Gemini era unlocks new possibilities for developers to build creative and productive AI-enabled applications. I/O is where you’ll hear how you can get from idea to production AI applications faster. We’re excited to share what’s new for mobile, web, and multiplatform development, and how to scale your applications in the cloud. You will be able to dive deeper into topics that interest you with over 100 sessions, workshops, codelabs, and demos.

Visit the Google I/O site and register to stay informed about I/O and other related events coming soon. The livestreamed keynotes start May 14 at 10am PT, so mark your calendar.

If you haven’t already, go try out our newest Google I/O puzzle and head to @googlefordevs on Instagram if you need a hint.

We’re on a mission to make Google Play the best destination for gaming, and your amazing games are what make it possible. That’s why we’re investing in tools that empower you to achieve more by increasing user engagement, accelerating your business growth, and maximizing your reach.

Today, we shared more details about those investments at the Google for Games Developer Summit. Check out the Keynote session on demand, or keep reading for key product updates from the summit.

We know that identity, progress, and achievements are important to gamers. With Play Games Services (PGS), gamers can switch between devices and jump back into gameplay instantly, on any device connected with PGS. Based on your feedback, we’ve made it easier for you to integrate PGS and provide more relevant experiences for your users:

To start creating these engaging experiences within your game, integrate PGS today — and stay tuned for more capabilities coming soon.

We’ve also been working to tailor our store experience to better engage users who’ve already installed your game. Starting today, we’re rolling out enhancements to store listings to more prominently display your game updates, new content, and promotions directly within Play. Your store listing page will source relevant content to keep your audience engaged with your game, including your latest YouTube videos, AI-generated FAQs, and more.

These improvements are currently limited to English language users and to select titles that are part of the early access program. If you’re interested in testing the enhanced store listing for your game and helping shape this feature with your feedback, you can express interest here.

We’re also expanding our most popular programs to help you accelerate your business growth.

Finally, to help you reach more users with your games, we’re making it easier to scale and grow your multi-platform games across mobile, tablets, Chromebooks, and Windows PCs.

For more news from the Google for Games Developer Summit, check out g.co/gamedevsummit. You can also connect with us at GDC in San Francisco. We’ll be hosting a full day of sessions on March 19 and a week of hands-on product experience at the Moscone Center, West Hall, Level 2 Lobby from March 18 to March 22.

As always, thank you for partnering with us to bring amazing games to players everywhere.

Making informed marketing decisions relies on identifying your most valuable user acquisition channels for your games. By tracking referral data, you can understand which traffic sources send the most users to download your app from the Google Play store. These insights can help you make the most of your advertising spend and maximize ROI. That’s why in 2017, we launched the Play Install Referrer API, which gives you an easy, reliable way to track your apps’ referral information directly from the Play store.

Until now, this feature was only available for your games in the mobile Play store. Today, we’re pleased to announce support for Google Play Games on PC, allowing you to attribute conversions from your marketing activities on the Web¹. If you use the Google Play Install Referrer API to track your referral sources, you can now attribute conversions to specific campaigns directly from Google Play by manually retrieving referral information, or using third-party analytics tools from Google’s App Attribution Partners.

Getting started is easy. First, generate a Google Play URL for your game’s Google Play store listing page and add a referrer query parameter for your Web campaign. Then, when a PC user clicks the link, they will be redirected to your game’s listing page on the Google Play Web store, which will give them the option to “Install on Windows.” Once the user launches your game, you’ll be able to track the referral using the Google Play Install Referrer library.
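The first step can be sketched in a few lines of Kotlin. The helper name `buildPlayReferrerUrl`, the package name, and the campaign values are placeholders; the one detail worth noting is that the referrer value contains its own `=` and `&` characters, so it needs to be percent-encoded before it goes into the outer query string.

```kotlin
import java.net.URLEncoder

// Builds a Google Play store listing URL carrying a referrer parameter.
// The package name and campaign values passed in are placeholders.
fun buildPlayReferrerUrl(packageName: String, referrer: String): String {
    // The referrer string itself contains '=' and '&', so it has to be
    // percent-encoded before being placed in the outer query string.
    val encodedReferrer = URLEncoder.encode(referrer, "UTF-8")
    return "https://play.google.com/store/apps/details?id=$packageName&referrer=$encodedReferrer"
}

fun main() {
    val url = buildPlayReferrerUrl(
        packageName = "com.example.game",
        referrer = "utm_source=web_campaign&utm_medium=banner"
    )
    // Prints the listing URL with the encoded referrer appended.
    println(url)
}
```

The resulting link is what you would place in your Web campaign; the referrer string is then what your game reads back through the Install Referrer library after launch.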

“With integration support from Adjust, developers can quickly and efficiently measure marketing campaigns for their games on Google Play Games on PC. We’re excited about the opportunity this brings for developers to broaden their games’ reach and strengthen cross-platform measurement.” - Gijsbert Pols, Director of Connected TV & New Channels, Adjust

Learn more about third-party referral codes for Google Play Games on PC and start optimizing your marketing performance today.

¹ Subject to device compatibility with Google Play Games on PC.

Today, we’re excited to reveal Google Play’s Indie Games Accelerator class of 2024.

Selected game studios from around the world will take part in the 10-week accelerator designed to take their businesses to the next level on Google Play. The program includes:

Due to the number of impressive applications, we’ve doubled this year’s class size from 30 to 60 studios. Without further ado, meet the class of 2024 and join us in congratulating them!

Congratulations again to all the founders selected; we can’t wait to see your games grow on our platform.

We’re committed to helping app and game businesses of all sizes reach their full potential. Discover more about Google Play’s programs, resources and tools for indie games developers.