Posted by the Assistant Developer Platform team

Since the launch of the Google Assistant, our developer ecosystem has been instrumental in delivering compelling voice experiences to more than 500 million active users. Today, we’re taking a major step forward in helping you build these custom voice apps and services by introducing a suite of new and improved developer tools: Actions Builder and Actions SDK. These tools make building Conversational Actions for the Assistant easier and more streamlined than ever.

Better design and development tools

Actions Builder is a web-based IDE that lets you develop, test, and deploy directly in the Actions console. The graphical interface lets you visualize the conversational flow, manage Natural Language Understanding (NLU) training data, and debug with advanced tools.

For those of you who prefer local IDEs, the updated Actions SDK provides a file-based representation of your Actions project. This lets you author NLU training data and conversational flows locally, as well as bulk import and export training data. We've also updated the CLI that accompanies Actions SDK, so you can build and manage Actions projects entirely in code, using your favorite source control and continuous integration tools.

Together, Actions Builder and Actions SDK create a seamless, consolidated development experience. No matter what tool you start with, you can switch between them based on what works best for your workflow. For example, you can use Actions Builder to lay out conversational flows and provide NLU training data, Actions SDK to write fulfillment code, and the CLI to synchronize the two. These tools create an environment where all team members can contribute effectively and focus on what they do best: design and code.

New interaction model

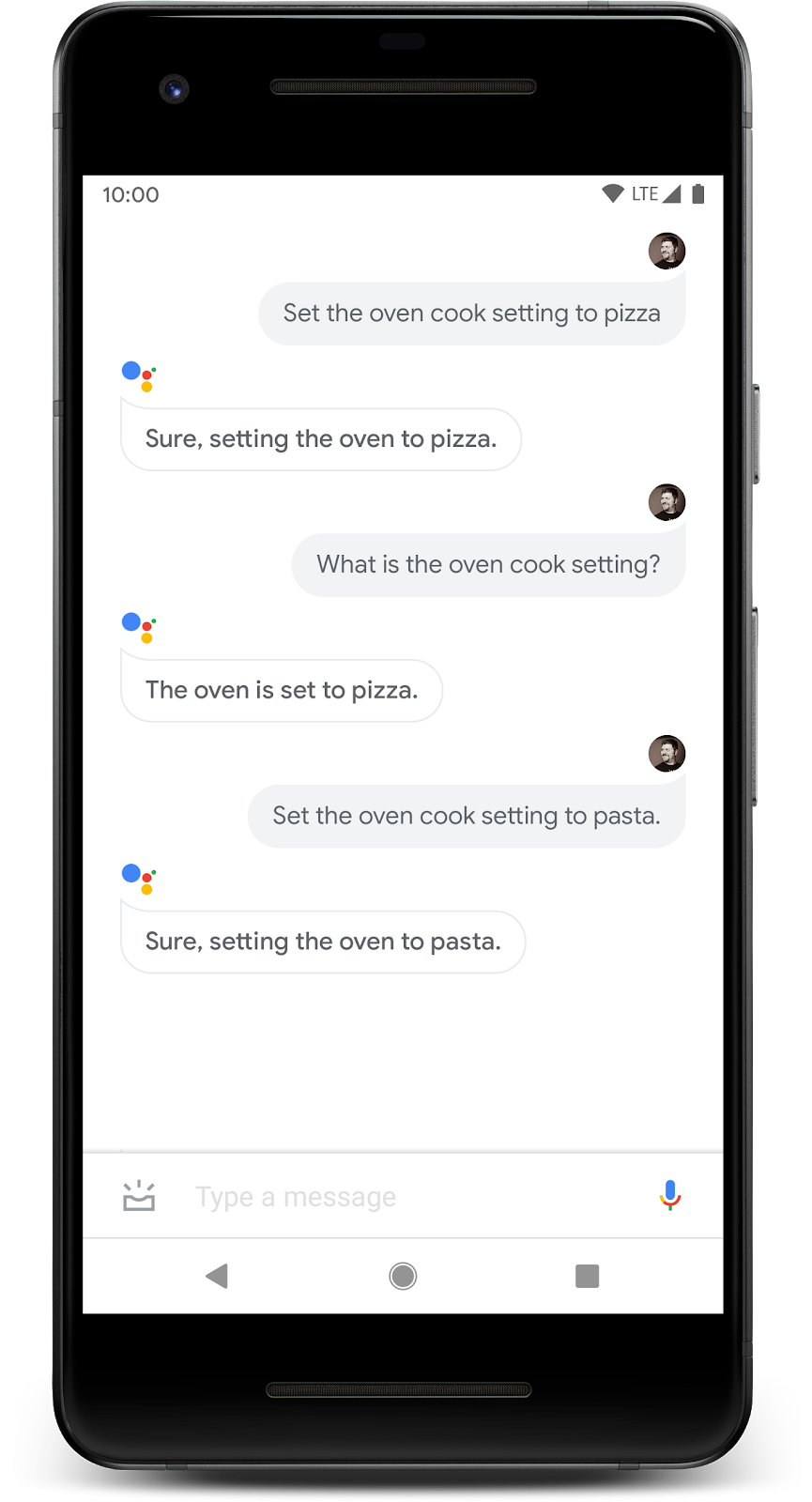

A new, powerful interaction model lets you design conversations quickly and efficiently. Intents and scenes let you define robust NLU training data and behavior for specific conversational contexts. Using scenes as building blocks, you define active intents, declare context-specific error handling, collect data through slot filling, and respond with prompts.

Scenes also separate conversational flow definitions from fulfillment logic, so you can reuse the same flows across multiple conversations. Transitions between scenes let you define when one conversational context switches to another. All your scenes and transitions describe a full conversational flow and all possible dialog turns.
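To make the idea of scenes and transitions concrete, here is a minimal conceptual sketch in Python. It is not the Actions Builder or Actions SDK API or file format. The `Scene` class, the `step` function, and the scene and intent names (`Welcome`, `Question`, `start_game`, `pick_color`) are all hypothetical, chosen only to illustrate how a scene can bundle its active intents, its own error handling, and its transitions.

```python
from dataclasses import dataclass, field
from typing import Dict, Tuple


@dataclass
class Scene:
    """Hypothetical model of a scene: one conversational context."""
    name: str
    prompt: str  # what the Action says when this scene becomes active
    # Active intents for this scene, mapped to the scene each one transitions to.
    transitions: Dict[str, str] = field(default_factory=dict)
    # Context-specific error handling: reply used when no active intent matches.
    no_match_prompt: str = "Sorry, I didn't catch that."


def step(scenes: Dict[str, Scene], current: str, intent: str) -> Tuple[str, str]:
    """Process one dialog turn and return (next_scene_name, prompt)."""
    scene = scenes[current]
    target = scene.transitions.get(intent)
    if target is None:
        # The intent is not active in this scene: stay put and reprompt.
        return current, scene.no_match_prompt
    return target, scenes[target].prompt


# Illustrative flow only; all names and prompts are assumptions.
SCENES = {
    "Welcome": Scene(
        name="Welcome",
        prompt="Welcome! Say 'start' to play.",
        transitions={"start_game": "Question"},
        no_match_prompt="You can say 'start' to begin.",
    ),
    "Question": Scene(
        name="Question",
        prompt="Which color do you pick?",
        transitions={"pick_color": "End"},
        no_match_prompt="Please name a color.",
    ),
    "End": Scene(name="End", prompt="Thanks for playing!"),
}
```

Calling `step(SCENES, "Welcome", "start_game")` moves the conversation to the `Question` scene, while an intent that is not active in `Welcome` keeps the user there and returns that scene's own reprompt. The flow lives entirely in data, which mirrors the separation between flow definitions and fulfillment logic described above.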

You can express the entire interaction model with either Actions Builder or Actions SDK. A typical workflow is to use Actions Builder to view and edit your scenes, and then use Actions SDK to sync changes to your local file system. This lets you version control your project, modify your project files, and build fulfillment in your favorite development environment.

Faster and smarter runtime engine

Under the hood, we also made many improvements that your users will appreciate. We sped up the Assistant runtime engine, so users get faster responses and a smoother experience. We’ve also made the runtime engine smarter, so your Actions can understand users better with the same amount of training data.

Production-ready platform

We've worked with Pretzel Labs and Galinha Pintadinha to test the capabilities of the new platform and to refine the interaction model and runtime engine improvements.

Pretzel Labs built Kids Court with Actions Builder, creating a full conversational flow with no code and then adding fulfillment for advanced functionality.

"Having the combination of a visual layout with webhook blocks for code helps us collaborate clearly and more efficiently. Something I liked very much about this was the separation between the designer and the developers' parts, making it very intuitive to make design changes without affecting backend logic."

-- Adva Levin, founder of Pretzel Labs

Galinha Pintadinha runs one of the biggest YouTube channels and built one of the most popular Conversational Actions in their country. Their development team migrated to the new platform to optimize their workflow and simplify future Action development. Galinha Pintadinha’s Actions now contain half the number of intents and have a radically simplified conversation tree. Using features like contextual error handling, they were able to improve the user experience and quality at little to no cost.

"Actions Builder is a robust and well designed toolbox for developing conversational apps. The concept of scenes and transitions helped us define the flow of our Action in a much more streamlined way."

-- Mário Neto, engineer at Galinha Pintadinha

Get started

To learn more about Actions Builder and SDK and to start developing your next Actions, check out our new developer resources. Our codelabs will walk you through using the new tooling and interaction model. Samples for all major features are also available, so you can start playing with code immediately. See the full set of documentation to start building today.

Stay tuned for more platform updates and happy coding!

Posted by Dave Smith, Developer Advocate

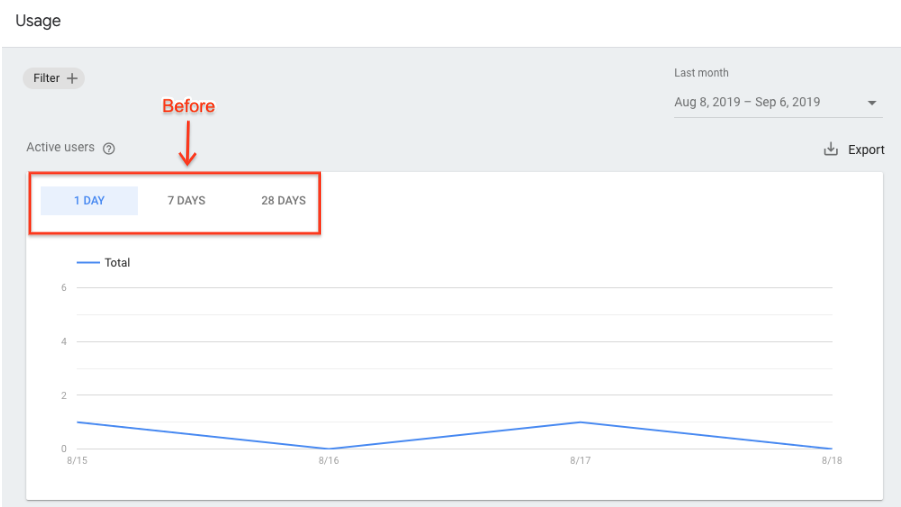

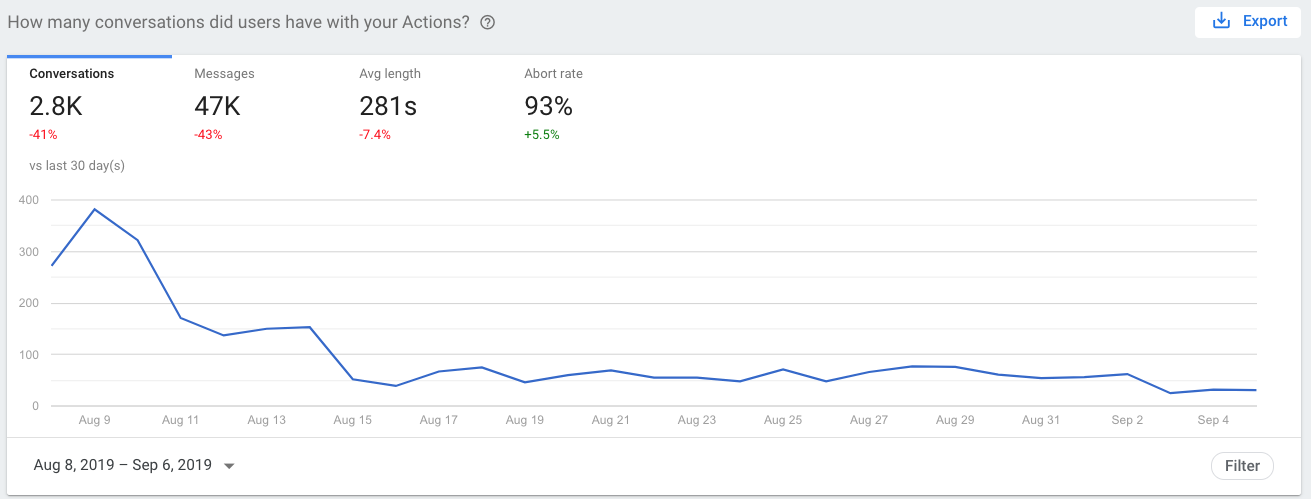
