Posted by Fei Ye, Software Engineer and Kazushi Nagayama, Ninja Spamologist

Google Play ratings and reviews are extremely important in helping users decide which apps to install. Unfortunately, fake and misleading reviews can undermine users' trust in those ratings. User trust is a top priority for us at Google Play, and we are continuously working to make sure that the ratings and reviews shown in our store are not being manipulated.

There are various ways in which ratings and reviews may violate our developer guidelines:

- Bad content: Reviews that are profane, hateful, or off-topic.

- Fake ratings: Ratings and reviews meant to manipulate an app's average rating or top reviews. We've seen different approaches to manipulating the average rating, from 5-star attacks that boost an app's average rating to 1-star attacks that drag it down.

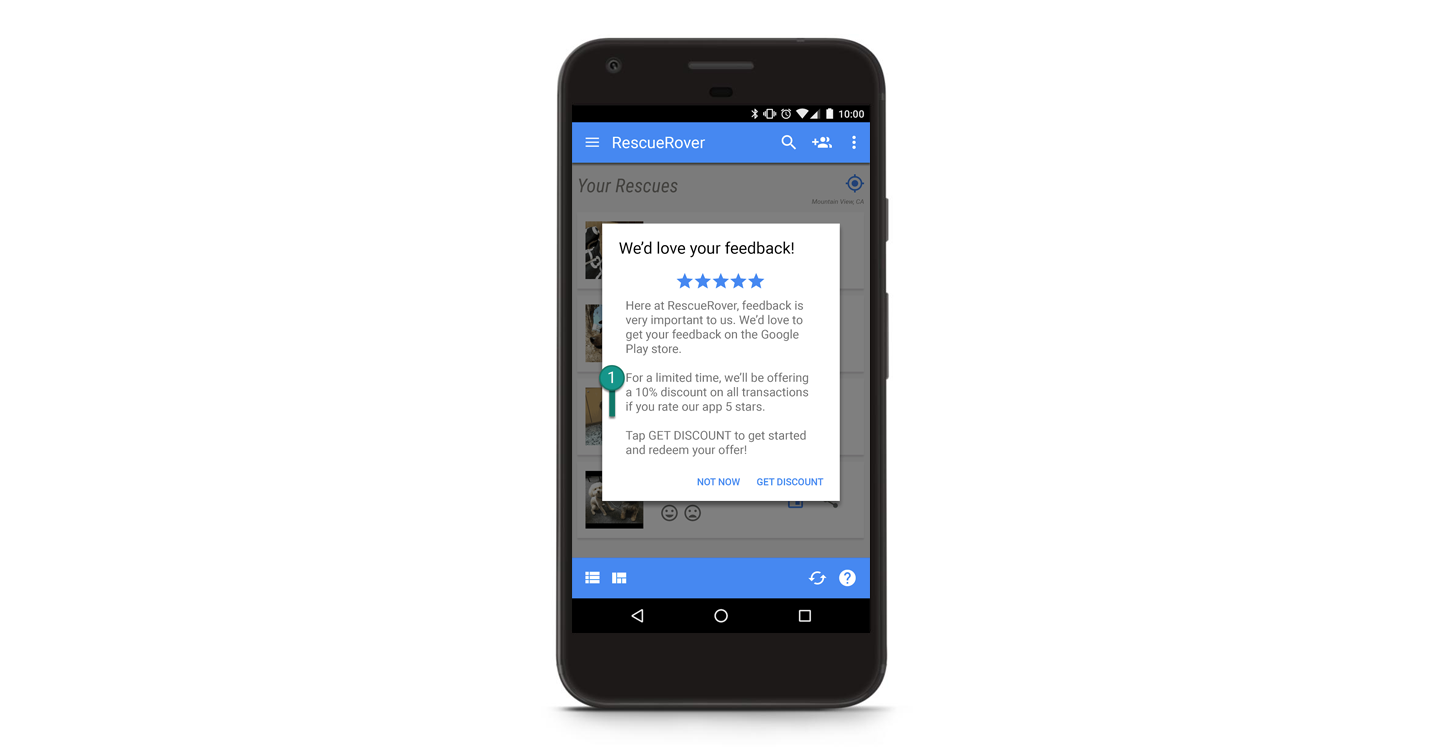

- Incentivized ratings: Ratings and reviews given by real humans in exchange for money or valuable items.

When we see these, we take action on the app itself, as well as the review or rating in question.

In 2018, the Google Play Trust & Safety teams deployed a system that combines human intelligence with machine learning to detect and enforce policy violations in ratings and reviews. A team of engineers and analysts closely monitors and studies suspicious activity in Play's ratings and reviews, and regularly improves the model's precision and recall. We also regularly ask skilled reviewers to check the decisions made by our models for quality assurance.
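For readers unfamiliar with the terms, precision is the share of reviews flagged by a model that really are violations, and recall is the share of real violations that the model manages to flag. The sketch below shows how both could be estimated from a sample of model decisions that human reviewers have double-checked. It is only an illustration: the `AuditedReview` type, its field names, and the sample data are hypothetical and do not describe Google Play's actual system.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class AuditedReview:
    """One review with a model decision and a human verdict (hypothetical type)."""
    model_flagged: bool    # assumption: the model marked the review as violating
    human_violation: bool  # assumption: a reviewer judged it a real violation


def precision_recall(sample):
    """Return (precision, recall) for a list of audited reviews."""
    true_pos = sum(1 for r in sample if r.model_flagged and r.human_violation)
    false_pos = sum(1 for r in sample if r.model_flagged and not r.human_violation)
    false_neg = sum(1 for r in sample if not r.model_flagged and r.human_violation)

    flagged = true_pos + false_pos       # everything the model flagged
    violations = true_pos + false_neg    # everything reviewers called a violation

    # Fall back to 0.0 when a denominator is empty to avoid dividing by zero.
    precision = true_pos / flagged if flagged else 0.0
    recall = true_pos / violations if violations else 0.0
    return precision, recall


# Made-up audit sample: 2 correct flags, 1 wrong flag, 1 missed violation.
sample = [
    AuditedReview(model_flagged=True, human_violation=True),
    AuditedReview(model_flagged=True, human_violation=True),
    AuditedReview(model_flagged=True, human_violation=False),
    AuditedReview(model_flagged=False, human_violation=True),
    AuditedReview(model_flagged=False, human_violation=False),
]
precision, recall = precision_recall(sample)
print(f"precision={precision:.2f} recall={recall:.2f}")
```

Low precision would mean legitimate reviews are being removed, while low recall would mean fake or abusive reviews are slipping through, which is why both numbers are worth tracking together.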

It's a big job. To give you a sense of the volume we manage, here are some numbers from a recent week:

- Millions of reviews and ratings detected and removed from the Play Store.

- Thousands of bad apps identified because of suspicious rating and review activity on them.

Our team can do a lot, but we need your help to keep Google Play a safe and trusted place for apps and games.

If you're a developer, you can help us by doing the following:

- Don't buy fake or incentivized ratings.

- Don't run campaigns, in-app or otherwise, like "Give us 5 stars and we'll give you this in-app item!" That counts as incentivized ratings, and it's prohibited by policy.

- Do read the Google Play Developer Policy to make sure you are not inadvertently violating it.

Example of a violation: incentivized ratings are not allowed.

If you're a user, you can follow these simple guidelines as well:

- Don't accept or receive money or goods (even virtual ones) in exchange for reviews and ratings.

- Don't use profanity to criticize an app or game; keep your feedback constructive.

- Don't post gibberish, hateful, sexual, profane or off-topic reviews; they simply aren't allowed.

- Do read the comment posting policy. It's pretty concise and talks about all the things you should consider when posting a review to the public.

Finally, if you find bad ratings and reviews on Google Play, help us improve by sending your feedback! Users can mark a review as "Spam", and developers can submit feedback through the Play Console.

Tooltip to flag the review as Spam.

Thanks for helping us keep Google Play a safe and trusted place to discover some of the world's best apps and games.
