When you launch your Container Engine cluster, you can enable Cloud Monitoring with one click. Check it out!

Cloud Monitoring collects CPU, memory, and disk usage for all of the containers in your cluster. This data is annotated and stored in Cloud Monitoring, where you can access it either through the API or in the Cloud Monitoring UI. From there, you can examine not only container-level resource usage but also usage aggregated across pods and clusters.

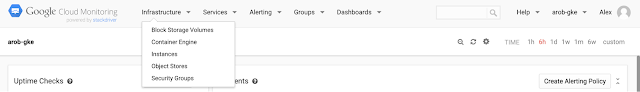

If you head over to the Cloud Monitoring dashboard and click on the Infrastructure dropdown, you can see a new option for Container Engine.

If you have more than one cluster with monitoring enabled, you'll see a page listing the clusters in your project along with how many pods and instances each one contains. If you have only one cluster, you'll be taken straight to its details, as shown below.

This page gives you an overview of your cluster: it lists all the pods running in it, recent cluster events, and resource usage aggregated across its nodes. In this case, the cluster contains the system components (DNS, UI, logging, and monitoring) as well as the frontend and redis pods from the guestbook tutorial in the Container Engine documentation.

From here, you can easily drill down to the details of individual pods and containers, where you'll see metadata about the pod and its containers, such as how many times they've been restarted, along with metrics about the pod's resource usage.

But this is just the first piece. Because Cloud Monitoring makes heavy use of tags (the equivalent of Container Engine's labels), you can create groups based on how you've labeled your containers or pods. For example, if you're running a web app in a replication controller, you may have labeled all of your frontend web containers with `role=frontend`. In Cloud Monitoring, you can then create a group called “Frontend” that matches all resources with the tag `role` set to the value `frontend`.
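To make the tag-matching idea concrete, here is a minimal Python sketch of a group defined by a tag filter. The `Resource` and `Group` classes and the resource names are hypothetical illustrations, not Cloud Monitoring's actual data model or API; the point is only that membership is computed from tags rather than from a fixed list.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class Resource:
    """A monitored resource (e.g. a pod or container) and its tags.

    Hypothetical model for illustration; Cloud Monitoring's real schema differs.
    """
    name: str
    tags: dict[str, str] = field(default_factory=dict)


@dataclass
class Group:
    """A group defined by a single tag/value filter (hypothetical)."""
    name: str
    tag: str
    value: str

    def members(self, resources: list[Resource]) -> list[Resource]:
        # Membership is recomputed from tags on every call, so resources that
        # are added, removed, or rescheduled are picked up automatically.
        return [r for r in resources if r.tags.get(self.tag) == self.value]


# Hypothetical resources: two frontend pods and one backend pod.
resources = [
    Resource("frontend-1", {"role": "frontend"}),
    Resource("frontend-2", {"role": "frontend"}),
    Resource("redis-master", {"role": "backend"}),
]
frontend = Group("Frontend", tag="role", value="frontend")
print([r.name for r in frontend.members(resources)])  # ['frontend-1', 'frontend-2']
```

Because the group stores a filter instead of a member list, a new pod labeled `role=frontend` joins the group the next time it is evaluated, with no changes to the group definition.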

You can also run queries that aggregate across pods without creating a group, which lets you visualize the performance of an entire replication controller or service on a single graph. To do this, create a new dashboard from the top-level Dashboards menu and add a chart. In the example below, you can see the aggregated memory usage of all the php-redis frontend pods in the cluster.
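The kind of aggregation such a chart performs can be sketched in a few lines of Python: filter samples by a label selector, then sum memory per timestamp. The sample data, the `tier` label, and the `aggregate_memory` helper are all hypothetical; this shows the general technique, not how Cloud Monitoring computes its charts.

```python
from collections import defaultdict

# Hypothetical samples: (pod name, pod labels, timestamp in seconds, memory in MiB).
samples = [
    ("frontend-a", {"tier": "frontend"}, 0, 120),
    ("frontend-a", {"tier": "frontend"}, 60, 130),
    ("frontend-b", {"tier": "frontend"}, 0, 100),
    ("frontend-b", {"tier": "frontend"}, 60, 110),
    ("redis-master", {"tier": "backend"}, 0, 300),
    ("redis-master", {"tier": "backend"}, 60, 310),
]


def aggregate_memory(samples, selector):
    """Sum memory per timestamp across pods whose labels match every
    key/value pair in `selector` (an ad hoc filter, no saved group needed)."""
    totals = defaultdict(int)
    for _pod, labels, ts, mem_mib in samples:
        if all(labels.get(k) == v for k, v in selector.items()):
            totals[ts] += mem_mib
    # Return a plain dict ordered by timestamp, ready to plot as one series.
    return dict(sorted(totals.items()))


print(aggregate_memory(samples, {"tier": "frontend"}))  # {0: 220, 60: 240}
```

Summing per timestamp collapses many per-pod series into a single series, which is what makes it possible to show a whole replication controller on one graph.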

With these tools, you can create powerful alerting policies that trigger when either the aggregate across the group or any single container within it crosses a threshold, such as using too much memory. You can also tag your group as a cluster so that Cloud Monitoring's cluster insights detection highlights outliers across that set of containers, which can help you pinpoint cases where load isn't evenly distributed or some nodes carry heavier workloads than others.
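The two alerting modes described above (group aggregate versus any single member) can be sketched as follows. The `check_memory_alert` function, its `mode` values, and the `outliers` helper are hypothetical. In particular, `outliers` uses a simple "more than N times the group median" rule as a stand-in; it is not Cloud Monitoring's cluster insights algorithm.

```python
from statistics import median


def check_memory_alert(usage_mib, threshold_mib, mode):
    """Return the entities that violate a memory threshold (hypothetical policy).

    usage_mib: dict mapping container name -> current memory usage in MiB.
    mode: "aggregate" fires once if the group total exceeds the threshold;
          "any" fires for each container whose own usage exceeds it.
    """
    if mode == "aggregate":
        total = sum(usage_mib.values())
        return ["<group>"] if total > threshold_mib else []
    if mode == "any":
        return sorted(name for name, used in usage_mib.items() if used > threshold_mib)
    raise ValueError(f"unknown mode: {mode!r}")


def outliers(usage_mib, factor=2.0):
    """Flag members using more than `factor` times the group median.

    A simple illustrative stand-in for outlier detection, not the real method.
    """
    mid = median(usage_mib.values())
    return sorted(name for name, used in usage_mib.items() if used > factor * mid)


usage = {"frontend-a": 120, "frontend-b": 110, "frontend-c": 400}
print(check_memory_alert(usage, 500, "aggregate"))  # ['<group>']
print(check_memory_alert(usage, 300, "any"))        # ['frontend-c']
print(outliers(usage))                               # ['frontend-c']
```

An aggregate policy catches a group that is collectively over budget even when no single container is, while a per-member policy catches one runaway container that the total might hide.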

And because all of this is based on tags, it updates automatically as your containers move across the nodes of your cluster, even if you're autoscaling and adding or removing nodes over time.

We have a lot more work planned to further integrate Container Engine and Cloud Monitoring, including making it easy to collect your application and service metrics alongside the system metrics you can use today.

Do you have ideas for how we can make things better? Let us know by sending feedback through the Cloud Monitoring console or directly at [email protected]. You can find more information on the available metrics in our docs.

- Posted by Alex Robinson, Software Engineer, Google Container Engine, and Jeremy Katz, Software Engineer, Google Cloud Monitoring