Google for Games is coming to GDC in San Francisco! Join us on March 19 for the Game Developers Conference (GDC) at the Moscone Center, where game developers from across the world will gather to learn, network, problem-solve, and help shape the future of the industry. From March 18 to March 22, experience our comprehensive suite of multi-platform game development tools and explore the new features from Play Pass at the West Hall, Level 2 Lobby.

This year, we’re proud to host eight sessions for developers, designers, business and marketing teams, and everyone else in the gaming community interested in growing their game business. Take a look at this year’s sessions below, and if you’re interested in learning more about topics from Google Play and Android, check out key product updates from the Google for Games Developer Summit.

Scaling your game development

We’re hosting three sessions designed to help scale your game development using tools from Firebase, Android, and Google Cloud. Learn more about building high quality games with case studies from industry experts.

Beyond "Set and Forget": Advanced Debugging with Firebase Crashlytics

Tuesday, March 19, 9:30 am - 10:00 am

Crashlytics has added a number of features that make detecting, tracking, and understanding bugs even easier, from high-level to native code. Take your fixes to another level with native stack traces, memory debugging, issue annotation, and the ability to log uncaught exceptions as fatal.

Enhancing Game Performance: Vulkan and Android Adaptability Technology

Tuesday, March 19, 10:50 am - 11:50 am

Learn how to leverage Vulkan graphics API to improve your graphics quality or performance, including performance tuning with dynamic upscaling. Find out how the Android Dynamic Performance Framework (ADPF) can enhance game performance and power in Unity and native C++, with easy integration through the Unreal Engine plugin. We're also sharing how NCSoft Lineage W improved thermal status and performance using ADPF.

Creating a global-scale game with Google Cloud

Tuesday, March 19, 4:40 pm - 5:10 pm

This session will cover the best of Google Cloud's open source projects (Agones, Open Match, and more) and products (GKE, Spanner, Anthos Service Mesh, Cloud Build, Cloud Deploy, and more) to teach you how to build, deploy, and scale world-scale multiplayer games with Google Cloud.

Increasing user engagement

We’re hosting two sessions designed to help you increase engagement by creating dynamic gameplay experiences using generative AI and expanding opportunities on Google Play to grow your community of players with exclusive rewards.

Reimagine the Future of Gaming with Google AI

Tuesday, March 19, 10:50 am - 11:50 am

In our keynote session, senior executives from Google Cloud, Google Play, and Labs will share their unique perspectives on generative AI in the gaming landscape. Learn more about cutting-edge AI solutions from Google Cloud, Android, Google Play, and Labs designed to simplify game development, publishing, and business operations, plus actionable strategies to leverage AI for faster development, better player experiences, and sustainable growth.

Grow your community of loyal gamers with Google Play

Tuesday, March 19, 1:20 pm - 1:50 pm

In this session, we’ll cover new features and insights from Google Play to create rewarding experiences for gamers using Play Pass, Play Points, and Play Games Services. Get a behind-the-scenes look at how Google Play rewards a growing community of passionate gamers, and how to use this to super-charge your business.

Maximizing reach across screens

These sessions, from Google Play, Android, and Flutter, introduce ways to expand your mobile games to PC. Learn about the latest tools that will help you accelerate growth across large screens.

Bringing more users to your Google Play Games on PC game

Tuesday, March 19, 2:10 pm - 2:40 pm

Join us for an overview of Google Play Games on PC, how it has grown in the past year, and a walkthrough of how to optimize and attribute your PC advertisements for your Google Play Games on PC titles. Learn how to use Google Play Games to increase your reach and acquisition of PC users for your mobile game, as well as how to effectively use the Google Play Install Referrer API to attribute and optimize your ads across mobile and PC.

Android input on desktop: How to delight your users

Tuesday, March 19, 3:00 pm - 3:30 pm

Give your players a first-class gaming experience with our best practices for handling input between mobile and PC games, including technical details on how to implement these best practices across mobile, tablets, Chromebooks and Windows PCs¹. Learn how Android handles keyboard, mouse, and controller input across different form factors, with case studies for designing for both touch and hardware input.

Building Multiplatform Games with Flutter

Tuesday, March 19, 3:50 pm - 4:20 pm

Learn why game developers are choosing Flutter to build casual games on mobile, desktop, and web browsers. We’ll cover the Casual Games Toolkit, a collection of free and open-source tools, templates, and resources to make game dev more productive with Flutter.

Learn more about all of our sessions coming to you on March 19 at GDC in San Francisco.

________________

¹ Windows is a trademark of the Microsoft group of companies.

Large Language Models On-Device with MediaPipe and TensorFlow Lite

TensorFlow Lite has been a powerful tool for on-device machine learning since its release in 2017, and MediaPipe further extended that power in 2019 by supporting complete ML pipelines. While these tools initially focused on smaller on-device models, today marks a dramatic shift with the experimental MediaPipe LLM Inference API.

This new release enables Large Language Models (LLMs) to run fully on-device across platforms. This new capability is particularly transformative considering the memory and compute demands of LLMs, which are over a hundred times larger than traditional on-device models. Optimizations across the on-device stack make this possible, including new ops, quantization, caching, and weight sharing.

The experimental cross-platform MediaPipe LLM Inference API, designed to streamline on-device LLM integration for web developers, supports Web, Android, and iOS with initial support for four openly available LLMs: Gemma, Phi 2, Falcon, and Stable LM. It gives researchers and developers the flexibility to prototype and test popular openly available LLM models on-device.

On Android, the MediaPipe LLM Inference API is intended for experimental and research use only. Production applications with LLMs can use the Gemini API or Gemini Nano on-device through Android AICore. AICore is the new system-level capability introduced in Android 14 to provide Gemini-powered solutions for high-end devices, including integrations with the latest ML accelerators, use-case optimized LoRA adapters, and safety filters. To start using Gemini Nano on-device with your app, apply to the Early Access Preview.

LLM Inference API

Starting today, you can test out the MediaPipe LLM Inference API via our web demo or by building our sample demo apps. You can experiment and integrate it into your projects via our Web, Android, or iOS SDKs.

Using the LLM Inference API allows you to bring LLMs on-device in just a few steps. These steps apply across web, iOS, and Android, though the SDK and native API will be platform specific. The following code samples show the web SDK.

1. Pick model weights compatible with one of our supported model architectures

2. Convert the model weights into a TensorFlow Lite Flatbuffer using the MediaPipe Python Package

from mediapipe.tasks.python.genai import converter

config = converter.ConversionConfig(...)
converter.convert_checkpoint(config)

3. Include the LLM Inference SDK in your application

import { FilesetResolver, LlmInference } from "https://cdn.jsdelivr.net/npm/@mediapipe/tasks-genai";

4. Host the TensorFlow Lite Flatbuffer along with your application.

5. Use the LLM Inference API to take a text prompt and get a text response from your model.

const fileset = await FilesetResolver.forGenAiTasks("https://cdn.jsdelivr.net/npm/@mediapipe/tasks-genai/wasm");
const llmInference = await LlmInference.createFromModelPath(fileset, "model.bin");
const responseText = await llmInference.generateResponse("Hello, nice to meet you");
document.getElementById('output').textContent = responseText;

Please see our documentation and code examples for a detailed walk through of each of these steps.

Here are real-time GIFs of Gemma 2B running via the MediaPipe LLM Inference API.

Gemma 2B running on-device in browser via the MediaPipe LLM Inference API

Gemma 2B running on-device on iOS (left) and Android (right) via the MediaPipe LLM Inference API

Models

Our initial release supports the following four model architectures. Any model weights compatible with these architectures will work with the LLM Inference API. Use the base model weights, use a community fine-tuned version of the weights, or fine-tune weights using your own data.

| Model | Parameter Size |
|---|---|
| Falcon 1B | 1.3 Billion |
| Gemma 2B | 2.5 Billion |
| Phi 2 | 2.7 Billion |
| Stable LM 3B | 2.8 Billion |

Model Performance

Through significant optimizations, some of which are detailed below, the MediaPipe LLM Inference API is able to deliver state-of-the-art latency on-device, focusing on CPU and GPU to support multiple platforms. For sustained performance in a production setting on select premium phones, Android AICore can take advantage of hardware-specific neural accelerators.

When measuring latency for an LLM, there are a few terms and measurements to consider. Time to First Token and Decode Speed will be the two most meaningful as these measure how quickly you get the start of your response and how quickly the response generates once it starts.

| Term | Significance | Measurement |
|---|---|---|
| Token | LLMs use tokens rather than words as inputs and outputs. Each model used with the LLM Inference API has a tokenizer built in which converts between words and tokens. | 100 English words ≈ 130 tokens. However the conversion is dependent on the specific LLM and the language. |
| Max Tokens | The maximum total tokens for the LLM prompt + response. | Configured in the LLM Inference API at runtime. |
| Time to First Token | Time between calling the LLM Inference API and receiving the first token of the response. | Max Tokens / Prefill Speed |
| Prefill Speed | How quickly a prompt is processed by an LLM. | Model and device specific. Benchmark numbers below. |
| Decode Speed | How quickly a response is generated by an LLM. | Model and device specific. Benchmark numbers below. |

The Prefill Speed and Decode Speed are dependent on model, hardware, and max tokens. They can also change depending on the current load of the device.
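To make the relationship between these measurements concrete, here is a rough back-of-the-envelope sketch. The function name and the speeds used are hypothetical placeholders, not benchmark results, and the sketch assumes constant prefill and decode speeds, which real devices under load will not deliver. Pass whichever token count applies to your setup as `prefill_tokens`.

```python
def estimate_latency(prefill_tokens, response_tokens, prefill_tps, decode_tps):
    """Return (time_to_first_token, total_time) in seconds.

    prefill_tps and decode_tps are speeds in tokens per second. Both are
    model and device specific, so the values used below are placeholders.
    """
    if prefill_tps <= 0 or decode_tps <= 0:
        raise ValueError("speeds must be positive")
    time_to_first_token = prefill_tokens / prefill_tps
    decode_time = response_tokens / decode_tps
    return time_to_first_token, time_to_first_token + decode_time


# Hypothetical speeds, chosen only to keep the arithmetic easy to follow.
ttft, total = estimate_latency(
    prefill_tokens=1024, response_tokens=256, prefill_tps=512.0, decode_tps=16.0
)
print(f"time to first token: {ttft:.1f} s, total: {total:.1f} s")
```

With numbers like these, the decode phase dominates the total time, which is why both speeds are worth measuring separately.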

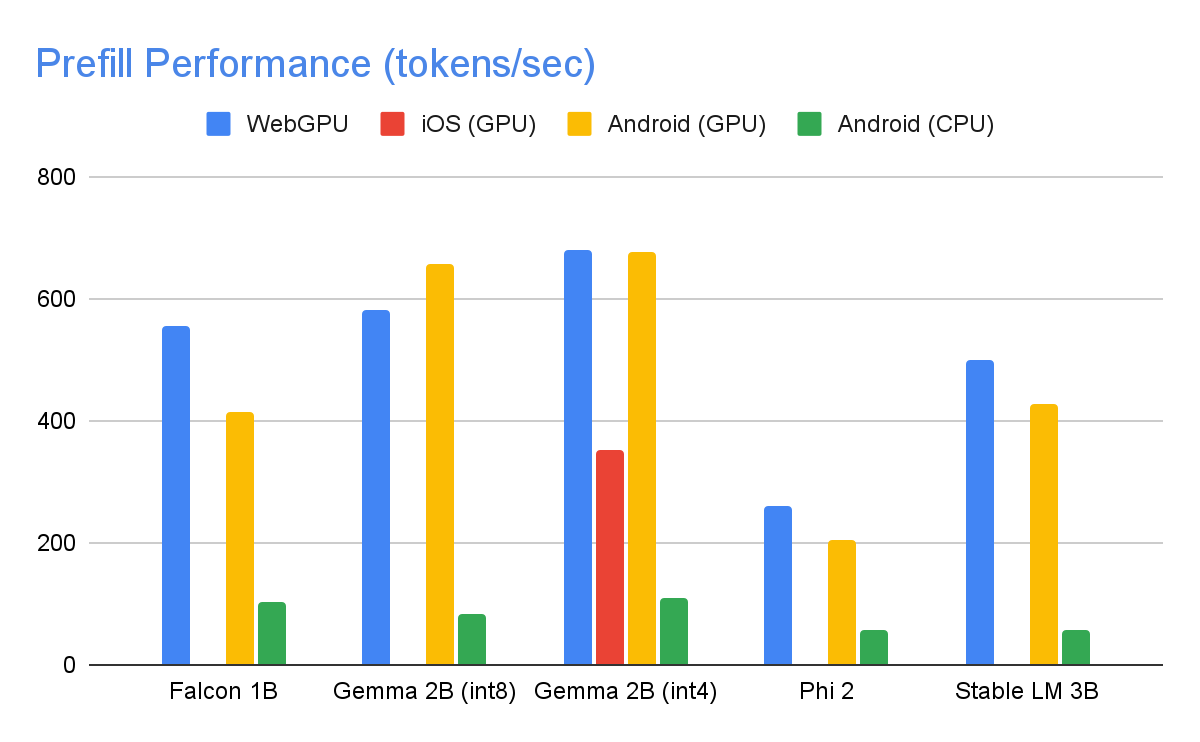

The following speeds were taken on high-end devices using a max tokens setting of 1280, an input prompt of 1024 tokens, and int8 weight quantization. The exception is Gemma 2B (int4), available on Kaggle, which uses mixed 4/8-bit weight quantization.

Benchmarks

On the GPU, Falcon 1B and Phi 2 use fp32 activations, while Gemma and StableLM 3B use fp16 activations as the latter models showed greater robustness to precision loss according to our quality eval studies. The lowest bit activation data type that maintained model quality was chosen for each. Note that Gemma 2B (int4) was the only model we could run on iOS due to its memory constraints, and we are working on enabling other models on iOS as well.

Performance Optimizations

To achieve the performance numbers above, countless optimizations were made across MediaPipe, TensorFlow Lite, XNNPack (our CPU neural network operator library), and our GPU-accelerated runtime. The following are a select few that resulted in meaningful performance improvements.

Weights Sharing: The LLM inference process comprises 2 phases: a prefill phase and a decode phase. Traditionally, this setup would require 2 separate inference contexts, each independently managing resources for its corresponding ML model. Given the memory demands of LLMs, we've added a feature that allows sharing the weights and the KV cache across inference contexts. Although sharing weights might seem straightforward, it has significant performance implications when sharing between compute-bound and memory-bound operations. In typical ML inference scenarios, where weights are not shared with other operators, they are meticulously configured for each fully connected operator separately to ensure optimal performance. Sharing weights with another operator implies a loss of per-operator optimization and this mandates the authoring of new kernel implementations that can run efficiently even on sub-optimal weights.

Optimized Fully Connected Ops: XNNPack’s FULLY_CONNECTED operation has undergone two significant optimizations for LLM inference. First, dynamic range quantization seamlessly merges the computational and memory benefits of full integer quantization with the precision advantages of floating-point inference. The utilization of int8/int4 weights not only enhances memory throughput but also achieves remarkable performance, especially with the efficient, in-register decoding of 4-bit weights requiring only one additional instruction. Second, we actively leverage the I8MM instructions in ARM v9 CPUs which enable the multiplication of a 2x8 int8 matrix by an 8x2 int8 matrix in a single instruction, resulting in twice the speed of the NEON dot product-based implementation.
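To illustrate why 4-bit weights help memory throughput, here is a minimal plain-Python sketch of packing two signed 4-bit weights into one byte and decoding them again. This is not XNNPack's kernel: the function names are hypothetical, and the single symmetric scale per tensor is an assumption made to keep the example short.

```python
def quantize_int4(values):
    """Symmetric quantization of floats to integers in [-8, 7] plus a scale."""
    max_abs = max(abs(v) for v in values) or 1.0
    scale = max_abs / 7.0
    return [max(-8, min(7, round(v / scale))) for v in values], scale


def pack_int4(quantized):
    """Pack pairs of signed 4-bit integers into single bytes, low nibble first."""
    if len(quantized) % 2:
        raise ValueError("expected an even number of values")
    packed = bytearray()
    for low, high in zip(quantized[0::2], quantized[1::2]):
        packed.append((low & 0x0F) | ((high & 0x0F) << 4))
    return bytes(packed)


def unpack_int4(packed):
    """Recover signed 4-bit integers from packed bytes."""
    out = []
    for byte in packed:
        for nibble in (byte & 0x0F, byte >> 4):
            # Sign-extend: nibbles 8..15 represent -8..-1.
            out.append(nibble - 16 if nibble >= 8 else nibble)
    return out


weights = [0.7, -0.4, 0.1, -0.7]
q, scale = quantize_int4(weights)
packed = pack_int4(q)
restored = [v * scale for v in unpack_int4(packed)]
print(q, len(packed), restored)
```

Four weights occupy two bytes here instead of sixteen for fp32, and the restored values differ from the originals only by quantization error.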

Balancing Compute and Memory: Upon profiling the LLM inference, we identified distinct limitations for both phases: the prefill phase faces restrictions imposed by the compute capacity, while the decode phase is constrained by memory bandwidth. Consequently, each phase employs different strategies for dequantization of the shared int8/int4 weights. In the prefill phase, each convolution operator first dequantizes the weights into floating-point values before the primary computation, ensuring optimal performance for computationally intensive convolutions. Conversely, the decode phase minimizes memory bandwidth by adding the dequantization computation to the main mathematical convolution operations.

During the compute-intensive prefill phase, the int4 weights are dequantized a priori for optimal CONV_2D computation. In the memory-intensive decode phase, dequantization is performed on the fly, along with CONV_2D computation, to minimize the memory bandwidth usage.
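The two dequantization strategies can be sketched in plain Python. This is only an illustration under simplifying assumptions: a plain matrix product stands in for the convolution, a single scale is used for all weights, and every function name is hypothetical. The point is that both paths compute the same result while touching memory differently.

```python
def dequantize(q_weights, scale):
    """Convert integer weights back to floats using a single scale."""
    return [[q * scale for q in row] for row in q_weights]


def matmul(activations, weights):
    """Plain float matrix product: (m x k) times (k x n)."""
    return [
        [sum(a * w for a, w in zip(row, col)) for col in zip(*weights)]
        for row in activations
    ]


def prefill_style(activations, q_weights, scale):
    """Dequantize all weights once up front, then reuse them for every row."""
    float_weights = dequantize(q_weights, scale)
    return matmul(activations, float_weights)


def decode_style(activation_row, q_weights, scale):
    """Read the integer weights directly and apply the scale in the dot product."""
    return [
        scale * sum(a * q for a, q in zip(activation_row, col))
        for col in zip(*q_weights)
    ]


q_weights = [[1, -2], [3, 4]]          # k x n integer weights
scale = 0.5
prompt = [[1.0, 2.0], [0.5, -1.0]]     # many rows: compute-bound prefill
print(prefill_style(prompt, q_weights, scale))
print(decode_style(prompt[0], q_weights, scale))  # one row: memory-bound decode
```

In the prefill path the cost of materializing float weights is amortized over many input rows; in the decode path only the compact integer weights are read for the single row being processed.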

Custom Operators: For GPU-accelerated LLM inference on-device, we rely extensively on custom operations to mitigate the inefficiency caused by numerous small shaders. These custom ops allow for special operator fusions and for various LLM parameters, such as token ID, sequence patch size, and sampling parameters, to be packed into a specialized custom tensor used mostly within these specialized operations.

Pseudo-Dynamism: In the attention block, we encounter dynamic operations that increase over time as the context grows. Since our GPU runtime lacks support for dynamic ops/tensors, we opt for fixed operations with a predefined maximum cache size. To reduce the computational complexity, we introduce a parameter enabling the skipping of certain value calculations or the processing of reduced data.
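One plausible reading of this idea, sketched in plain Python: the key cache always has a fixed number of slots, and an `active_len` parameter tells the attention computation how many of them to process. The constant, the function name, and the parameter are all hypothetical, and this is not the GPU runtime's actual implementation.

```python
import math

MAX_CACHE = 8  # hypothetical predefined maximum cache size


def attention_weights(query, key_cache, active_len):
    """Softmax attention weights over a fixed-size key cache.

    Slots at index >= active_len are skipped: no dot product is computed
    for them and they receive a weight of exactly zero.
    """
    if len(key_cache) != MAX_CACHE:
        raise ValueError("cache must always have MAX_CACHE slots")
    if not 1 <= active_len <= MAX_CACHE:
        raise ValueError("active_len must be between 1 and MAX_CACHE")
    logits = [
        sum(q * k for q, k in zip(query, key_cache[i]))
        for i in range(active_len)
    ]
    peak = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(x - peak) for x in logits]
    total = sum(exps)
    # The output keeps the fixed shape; unused slots are padded with zeros.
    return [e / total for e in exps] + [0.0] * (MAX_CACHE - active_len)


cache = [[0.0, 0.0] for _ in range(MAX_CACHE)]
cache[0] = [1.0, 0.0]
cache[1] = [1.0, 0.0]
weights = attention_weights([1.0, 1.0], cache, active_len=2)
print(weights)
```

The tensor shapes never change as the context grows; only `active_len` does, so the work done scales with the tokens seen so far and not with the maximum cache size.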

Optimized KV Cache Layout: Since the entries in the KV cache ultimately serve as weights for convolutions, employed in lieu of matrix multiplications, we store these in a specialized layout tailored for convolution weights. This strategic adjustment eliminates the necessity for extra conversions or reliance on unoptimized layouts, and therefore contributes to a more efficient and streamlined process.

What’s Next

We are thrilled with the optimizations and the performance in today’s experimental release of the MediaPipe LLM Inference API. This is just the start. Over 2024, we will expand to more platforms and models, offer broader conversion tools, complementary on-device components, high level tasks, and more.

You can check out the official sample on GitHub demonstrating everything you’ve just learned about and read through our official documentation for even more details. Keep an eye on the Google for Developers YouTube channel for updates and tutorials.

Acknowledgements

We’d like to thank all team members who contributed to this work: T.J. Alumbaugh, Alek Andreev, Frank Ban, Jeanine Banks, Frank Barchard, Pulkit Bhuwalka, Buck Bourdon, Maxime Brénon, Chuo-Ling Chang, Yu-hui Chen, Linkun Chen, Lin Chen, Nikolai Chinaev, Clark Duvall, Rosário Fernandes, Mig Gerard, Matthias Grundmann, Ayush Gupta, Mohammadreza Heydary, Ekaterina Ignasheva, Ram Iyengar, Grant Jensen, Alex Kanaukou, Prianka Liz Kariat, Alan Kelly, Kathleen Kenealy, Ho Ko, Sachin Kotwani, Andrei Kulik, Yi-Chun Kuo, Khanh LeViet, Yang Lu, Lalit Singh Manral, Tyler Mullen, Karthik Raveendran, Raman Sarokin, Sebastian Schmidt, Kris Tonthat, Lu Wang, Tris Warkentin, and the Gemma Team

Google Cloud Next ’24 session library is now available

Google Cloud Next 2024 is coming soon, and our session library is live!

Next ’24 covers a ton of ground, so choose your adventure. There's something on the menu for everyone, not just AI.

Developer-focused

Developers, this is your time. We've got a huge collection of edutainment in store for you at Next, including:

- Thousands of Googlers on-site to connect and chat

- Demos you can play with, try out, poke and see inside of (rather than just watching)

- Talks from Champion Innovators about how they put cloud to use

- Gathering spots for classes, interest groups, trainings and hanging out

This year we have more than double the number of advanced technical sessions, and recommendations for startups, small and medium businesses, and sustainability for all. Data scientists and data engineers can shard themselves out into 60+ big data sessions, including going to the cutting edge with BigQuery multi-modal data.

Artificial intelligence

If you want to build your own AI model, LLM or chatbot we've got sessions for that, covering ways to use Vertex AI to spin up your own large-language models on cloud, to search your multimedia library and to maintain equity in your data used for training.

Diversity, equity, and inclusion

Equity and inclusion go way past AI, and we’re really excited to have talks this year addressing allyship for your Muslim colleagues, growing inclusion in your org, and dialogues for change.


Security and data privacy

Don't forget security (really, who does?). Whether you are tackling security at the infrastructure, platform, machine or workload level, we've got sessions for you. Even if you're on multiple clouds, with multiple teams, you still need to get insight into the security and compliance of it all.

Speaking of all these fun chips, what about the salsa? We've got supply chain security with talks on SLSA and GUAC, plus numerous options for serverless workload security and ML data privacy.

Come join us

So, still on the fence?

Come for the magnificent shows in Vegas.

Come for the chance to sit down with expert developers and engineers.

Come for the amazing technical talks and tutorials.

Or just come for the spectacle. We've got it all at Google Cloud Next ’24.

Check out sessions and secure your spot for three days of learning, community-building, and cloud tech with experts and peers at Mandalay Bay Convention Center in Las Vegas, April 9–11.

Introducing Gemma models in Keras

The Keras team is happy to announce that Gemma, a family of lightweight, state-of-the-art open models built from the same research and technology that we used to create the Gemini models, is now available in the KerasNLP collection. Thanks to Keras 3, Gemma runs on JAX, PyTorch and TensorFlow. With this release, Keras is also introducing several new features specifically designed for large language models: a new LoRA API (Low Rank Adaptation) and large scale model-parallel training capabilities.

If you want to dive directly into code samples, head here:

- Get started with Gemma models

- Fine-tune Gemma models with LoRA

- Fine-tune Gemma models on multiple GPUs/TPUs

Get started

Gemma models come in portable 2B and 7B parameter sizes, and deliver significant advances against similar open models, and even some larger ones. For example:

- Gemma 7B scores a new best-in-class 64.3% of correct answers in the MMLU language understanding benchmark (vs. 62.5% for Mistral-7B and 54.8% for Llama2-13B)

- Gemma adds +11 percentage points to the GSM8K benchmark score for grade-school math problems (46.4% for Gemma 7B vs. Mistral-7B 35.4%, Llama2-13B 28.7%)

- and +6.1 percentage points of correct answers in HumanEval, a coding challenge (32.3% for Gemma 7B, vs. Mistral 7B 26.2%, Llama2 13B 18.3%).

Gemma models are offered with a familiar KerasNLP API and a super-readable Keras implementation. You can instantiate the model with a single line of code:

gemma_lm = keras_nlp.models.GemmaCausalLM.from_preset("gemma_2b_en")

And run it directly on a text prompt. Tokenization is built in, although you can easily split it out if needed; read the KerasNLP guide to see how.

gemma_lm.generate("Keras is a", max_length=32)

> "Keras is a popular deep learning framework for neural networks..."

Try it out here: Get started with Gemma models

Fine-tuning Gemma Models with LoRA

Thanks to Keras 3, you can choose the backend on which you run the model. Here is how to switch:

import os

os.environ["KERAS_BACKEND"] = "jax"  # Or "tensorflow" or "torch".
import keras  # import keras after having selected the backend

Keras 3 comes with several new features specifically for large language models. Chief among them is a new LoRA API (Low Rank Adaptation) for parameter-efficient fine-tuning. Here is how to activate it:

gemma_lm.backbone.enable_lora(rank=4)

# Note: rank=4 replaces the weights matrix of relevant layers with the

# product AxB of two matrices of rank 4, which reduces the number of

# trainable parameters.

This single line drops the number of trainable parameters from 2.5 billion to 1.3 million!
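To see where the savings come from, here is a small worked calculation for a single weight matrix. The layer shape below is hypothetical and the helper is not part of Keras. It does not reproduce the 1.3 million figure, which depends on which layers of the model receive LoRA adapters.

```python
def lora_trainable_params(d_in, d_out, rank):
    """Trainable parameter counts for one layer, without and with LoRA."""
    full = d_in * d_out            # every entry of the original weight matrix
    lora = rank * (d_in + d_out)   # A is d_in x rank, B is rank x d_out
    return full, lora


# Hypothetical 2048 x 2048 projection layer with rank-4 adapters.
full, lora = lora_trainable_params(d_in=2048, d_out=2048, rank=4)
print(f"full: {full:,}  lora: {lora:,}  reduction: {full // lora}x")
```

The original matrix stays frozen, and only the two small factors are trained, so the count grows with the sum of the dimensions instead of their product.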

Try it out here: Fine-tune Gemma models with LoRA.

Fine-tuning Gemma models on multiple GPUs/TPUs

Keras 3 also supports large-scale model training and Gemma is the perfect model to try it out. The new Keras distribution API offers data-parallel and model-parallel distributed training options. The new API is meant to be multi-backend but for the time being, it is implemented for the JAX backend only, because of its proven scalability (Gemma models were trained with JAX).

To fine-tune the larger Gemma 7B, a distributed setup is useful, for example a TPUv3 with 8 TPU cores that you can get for free on Kaggle, or an 8-GPU machine from Google Cloud. Here is how to configure the model for distributed training, using model parallelism:

device_mesh = keras.distribution.DeviceMesh(
    (1, 8),  # Mesh topology
    ["batch", "model"],  # named mesh axes
    devices=keras.distribution.list_devices()  # actual accelerators
)

# Model config
layout_map = keras.distribution.LayoutMap(device_mesh)
layout_map["token_embedding/embeddings"] = (None, "model")
layout_map["decoder_block.*attention.*(query|key|value).*kernel"] = (
    None, "model", None)
layout_map["decoder_block.*attention_output.*kernel"] = (
    None, None, "model")
layout_map["decoder_block.*ffw_gating.*kernel"] = ("model", None)
layout_map["decoder_block.*ffw_linear.*kernel"] = (None, "model")

# Set the model config and load the model
model_parallel = keras.distribution.ModelParallel(
    device_mesh, layout_map, batch_dim_name="batch")
keras.distribution.set_distribution(model_parallel)
gemma_lm = keras_nlp.models.GemmaCausalLM.from_preset("gemma_7b_en")

# Ready: you can now train with model.fit() or generate text with generate()

What this code snippet does is set up the 8 accelerators into a 1 x 8 matrix where the two dimensions are called “batch” and “model”. Model weights are sharded on the “model” dimension, here split between the 8 accelerators, while data batches are not partitioned since the “batch” dimension is 1.
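As a rough illustration of what a layout tuple means for memory, the sketch below computes the per-accelerator shape of a weight from its layout and the mesh axis sizes. The helper and the weight shapes are hypothetical, and it assumes each sharded dimension divides evenly, which the real partitioner may handle differently.

```python
def shard_shape(weight_shape, layout, mesh):
    """Per-device shape of a weight given its layout and the mesh axis sizes.

    layout has one entry per weight dimension: None means "not split",
    a mesh axis name means "split across that axis".
    """
    if len(weight_shape) != len(layout):
        raise ValueError("layout needs one entry per weight dimension")
    shape = []
    for size, axis in zip(weight_shape, layout):
        parts = 1 if axis is None else mesh[axis]
        if size % parts:
            raise ValueError(f"dimension {size} is not divisible by {parts}")
        shape.append(size // parts)
    return tuple(shape)


mesh = {"batch": 1, "model": 8}  # the 1 x 8 mesh described above

# Hypothetical weight shapes, for illustration only.
embedding_shard = shard_shape((256000, 3072), (None, "model"), mesh)
gating_shard = shard_shape((3072, 24576), ("model", None), mesh)
print(embedding_shard, gating_shard)
```

Each accelerator holds one eighth of every sharded weight, which is what lets a model that is too large for one device fit across the mesh.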

Try it out here: Fine-tune Gemma models on multiple GPUs/TPUs.

What’s Next

We will soon be publishing a guide showing you how to correctly partition a Transformer model and write the 6 lines of partitioning setup above. It is not very long but it would not fit in this post.

You will have noticed that layer partitionings are defined through regexes on layer names. You can check layer names with this code snippet. We ran this to construct the LayoutMap above.

# This is for the first Transformer block only,
# but they all have the same structure
tlayer = gemma_lm.backbone.get_layer('decoder_block_0')
for variable in tlayer.weights:
    print(f'{variable.path:<58}  {str(variable.shape):<16}')
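To check which rule a given variable would pick up, you can try the regexes yourself. The sketch below assumes keys are matched against variable paths with a regex search, and the paths used are hypothetical examples in the style printed by the snippet above, not an authoritative list of Gemma's variables.

```python
import re

# Same regex keys as in the LayoutMap above, kept in a plain dict.
layout_rules = {
    "token_embedding/embeddings": (None, "model"),
    "decoder_block.*attention.*(query|key|value).*kernel": (None, "model", None),
    "decoder_block.*attention_output.*kernel": (None, None, "model"),
    "decoder_block.*ffw_gating.*kernel": ("model", None),
    "decoder_block.*ffw_linear.*kernel": (None, "model"),
}


def find_layout(variable_path):
    """Return the layout of the first rule whose regex matches, else None."""
    for pattern, layout in layout_rules.items():
        if re.search(pattern, variable_path):
            return layout
    return None  # no rule: the variable is left unpartitioned in this sketch


# Hypothetical variable paths, for illustration only.
for path in [
    "decoder_block_0/attention/query/kernel",
    "decoder_block_0/attention/attention_output/kernel",
    "decoder_block_0/ffw_gating/kernel",
    "decoder_block_0/pre_attention_norm/scale",
]:
    print(f"{path:<52} {find_layout(path)}")
```

Because the patterns use `decoder_block.*`, one rule covers the same weight in every Transformer block.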

Full GSPMD model parallelism works here with just a few partitioning hints because Keras passes these settings to the powerful XLA compiler which figures out all the other details of the distributed computation.

We hope you will enjoy playing with Gemma models. Here is also an instruction-tuning tutorial that you might find useful. And by the way, if you want to share your fine-tuned weights with the community, the Kaggle Model Hub now supports user-tuned weights uploads. Head to the model page for Gemma models on Kaggle and see what others have already created!

Build with Gemini models in Project IDX

A few weeks ago, we announced a series of product updates to Project IDX to help streamline and simplify full-stack, multiplatform software development. This week, we’re excited to share how Project IDX uses Gemini models to provide you with AI features to further speed up and refine your end-to-end developer workflow.

Project IDX launched with support for AI-powered code completion, an assistive chatbot, and contextual code actions like “add comments” and “explain this code” to help you write high-quality code faster. Since launch, and thanks to your feedback, we’ve been working hard to add new AI functionality to help boost your productivity even more.

Work faster with inline AI assistance

You can now get inline AI assistance inside any file by pressing Cmd/Ctrl + I. Simply describe the changes you want to make to your code and IDX inline AI assistance will provide real-time error correction, code suggestions, and auto-completion in your code.

We integrated these AI enhancements directly into Project IDX’s centralized workspace to equip you with the necessary tools and resources for full-stack app development where and when you need them. From setting up your workspace to testing your app, IDX AI assistance helps accelerate and improve your workflow, ensuring that your end-to-end development experience is faster, easier, and higher quality.

For example, let’s say you want to add an authenticated API endpoint to your server. You can tell IDX AI to write the code necessary to enable secure task management using Firebase Authentication and Cloud Firestore. Given an input prompt, IDX AI assistance can write the code to construct the route, determine which APIs to use to verify the token, and save the data to the database. Instead of writing boilerplate code, you can focus on higher-level design and problem solving.

Input prompt for reference: Create a POST endpoint named /tasks. Get the ID Token from a cookie named _session. Verify this token with the Firebase Admin SDK. Use the UID property to assign the item to the user. Then save a task item with a server timestamp for createdAt to the Firestore database using the admin SDK.

Then, let's say you want to clean up your code a bit to improve its quality, readability, and maintainability. IDX AI assistance can help you quickly and easily refactor your code, so you can get right into optimizing your work without the hassle of manual refactoring.

Input prompt for reference: Refactor to use Node’s promise API.

And, as you wrap up your project, IDX AI can help you test and debug your code to make sure your application is running smoothly before deployment. Tell IDX AI assistance to write you a unit test for a function to ensure it’s working properly, saving you time and effort as you inspect the quality of your app.

Input prompt for reference: Create a unit test for this function

Easily add AI features with the Gemini API template

We’re also simplifying the process of building with the Gemini API with Project IDX’s new Gemini API template. The Gemini API template uses the Gemini Pro model to embed AI-powered features into your applications without additional configuration on your end, so you can get started working with the Gemini API quickly and easily. There's even an option to use the Gemini API via the popular LangChain framework to simplify the process of building LLM-powered apps.

The Gemini API template is multimodal, meaning it can provide context-aware prompt output for a myriad of input modalities including images, text and, of course, code. This can help you add features like conversational interfaces, summarization of user reviews, translation, and automatic image caption creation.

To demonstrate its functionality, we pre-configured the Gemini API template with ‘Baking with the Gemini API’, a recipe builder application that, using the Gemini model’s multimodal capabilities, can reverse-engineer possible recipes for baked goods from just a picture.


But this recipe builder is just one example of the Gemini API template in action – with support for different input modalities and context-aware output generation, you can use IDX’s Gemini API template to create a myriad of innovative and impactful applications that deliver AI-enhanced experiences to your users.

Stay tuned for more AI updates

These updates are a continuation of our efforts to leverage Google’s AI innovations for Project IDX, so make sure to keep an eye out for more announcements to come, including the expansion of AI in IDX to more than 150 countries/regions in the coming weeks.

Thank you for your continued support and engagement – please keep the feedback coming by filing bugs and feature requests. For walkthroughs and more information on all the features mentioned above, check out our documentation. If you haven’t already, visit our website to sign up to try Project IDX and join us on our journey. Also, be sure to check out our new Project IDX Blog for the latest product announcements and updates from the team.

We can’t wait to see what you create with Project IDX!

Gemini 1.5: Our next-generation model, now available for Private Preview in Google AI Studio

Last week, we released Gemini 1.0 Ultra in Gemini Advanced. You can try it out now by signing up for a Gemini Advanced subscription. The 1.0 Ultra model, accessible via the Gemini API, has seen a lot of interest and continues to roll out to select developers and partners in Google AI Studio.

Today, we’re also excited to introduce our next-generation Gemini 1.5 model, which uses a new Mixture-of-Experts (MoE) approach to improve efficiency. It routes your request to a group of smaller “expert” neural networks so responses are faster and higher quality.
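For readers new to the idea, here is a generic, textbook-style sketch of Mixture-of-Experts routing in plain Python. It is not a description of Gemini 1.5's architecture, which this post does not detail. The toy experts, the gate logits, and the top-2 choice are all hypothetical.

```python
import math


def softmax(values):
    """Numerically stable softmax over a list of floats."""
    peak = max(values)
    exps = [math.exp(v - peak) for v in values]
    total = sum(exps)
    return [e / total for e in exps]


def route(gate_logits, top_k=2):
    """Pick the top_k experts and renormalize their gate weights to sum to 1."""
    probs = softmax(gate_logits)
    chosen = sorted(range(len(probs)), key=lambda i: probs[i], reverse=True)[:top_k]
    total = sum(probs[i] for i in chosen)
    return {i: probs[i] / total for i in chosen}


def mixture_output(x, experts, gate_logits, top_k=2):
    """Run only the selected experts and combine their outputs."""
    weights = route(gate_logits, top_k)
    return sum(w * experts[i](x) for i, w in weights.items())


# Toy "experts": in a real model each would be a small neural network.
experts = [lambda x: x + 1, lambda x: 2 * x, lambda x: -x, lambda x: x * x]
gate_logits = [0.0, 2.0, 2.0, -1.0]  # hypothetical gate scores for one input

selected = route(gate_logits)
result = mixture_output(3.0, experts, gate_logits)
print(selected, result)
```

Only two of the four experts run for this input, which is the source of the efficiency gain: the total parameter count can grow without every parameter being used on every request.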

Developers can sign up for our Private Preview of Gemini 1.5 Pro, our mid-sized multimodal model optimized for scaling across a wide range of tasks. The model features a new, experimental 1 million token context window, and will be available to try out in Google AI Studio. Google AI Studio is the fastest way to build with Gemini models and enables developers to easily integrate the Gemini API in their applications. It’s available in 38 languages across 180+ countries and territories.

1,000,000 tokens: Unlocking new use cases for developers

Before today, the largest context window in the world for a publicly available large language model was 200,000 tokens. We’ve been able to significantly increase this — running up to 1 million tokens consistently, achieving the longest context window of any large-scale foundation model. Gemini 1.5 Pro will come with a 128,000 token context window by default, but today’s Private Preview will have access to the experimental 1 million token context window.

We’re excited about the new possibilities that larger context windows enable. You can directly upload large PDFs, code repositories, or even lengthy videos as prompts in Google AI Studio. Gemini 1.5 Pro will then reason across modalities and output text.

- Upload multiple files and ask questions

- Query an entire code repository

- Add a full length video

We’ve added the ability for developers to upload multiple files, like PDFs, and ask questions in Google AI Studio. The larger context window allows the model to take in more information — making the output more consistent, relevant and useful. With this 1 million token context window, we’ve been able to load in over 700,000 words of text in one go.
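As a rough illustration of the arithmetic above, the sketch below estimates whether a set of documents would fit in a given context window. It assumes a ratio of about 700,000 words per 1,000,000 tokens, taken from the figures in this post; real token counts depend on the tokenizer and the content, so treat the result as a ballpark only. The function names are hypothetical and are not part of the Gemini API.

```python
# Rough estimate only: ratio derived from "over 700,000 words" in a
# 1 million token window. Actual tokenization will differ.
WORDS_PER_WINDOW = 700_000
TOKENS_PER_WINDOW = 1_000_000

DEFAULT_WINDOW_TOKENS = 128_000     # default window mentioned in this post
PREVIEW_WINDOW_TOKENS = 1_000_000   # experimental Private Preview window


def estimated_tokens(word_count: int) -> int:
    """Estimate tokens for a word count, rounding up (integer math only)."""
    return (word_count * TOKENS_PER_WINDOW + WORDS_PER_WINDOW - 1) // WORDS_PER_WINDOW


def fits_in_window(word_counts: list[int], window_tokens: int) -> bool:
    """Return True if the combined documents are estimated to fit."""
    return estimated_tokens(sum(word_counts)) <= window_tokens


if __name__ == "__main__":
    docs = [300_000, 300_000]  # two hypothetical documents, in words
    print(estimated_tokens(sum(docs)))
    print(fits_in_window(docs, PREVIEW_WINDOW_TOKENS))
    print(fits_in_window(docs, DEFAULT_WINDOW_TOKENS))
```

Under these assumptions, two 300,000-word documents would fit in the 1 million token preview window but not in the 128,000 token default.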

The large context window also enables a deep analysis of an entire codebase, helping Gemini models grasp complex relationships, patterns, and understanding of code. A developer could upload a new codebase directly from their computer or via Google Drive, and use the model to onboard quickly and gain an understanding of the code.

Gemini 1.5 Pro can help developers boost productivity when learning a new codebase. [Video sped up for demo purposes]

Gemini 1.5 Pro can also reason across up to 1 hour of video. When you attach a video, Google AI Studio breaks it down into thousands of frames (without audio), and then you can perform highly sophisticated reasoning and problem-solving tasks since the Gemini models are multimodal.

Gemini 1.5 Pro can perform reasoning and problem-solving tasks across video and other visual inputs. [Video sped up for demo purposes]

More ways for developers to build with Gemini models

In addition to bringing you the latest model innovations, we’re also making it easier for you to build with Gemini:

- Easy tuning. Provide a set of examples, and you can customize Gemini for your specific needs in minutes from inside Google AI Studio. This feature rolls out in the next few days.

- New developer surfaces. Integrate the Gemini API to build new AI-powered features today with new Firebase Extensions, across your development workspace in Project IDX, or with our newly released Google AI Dart SDK.

- Lower pricing for Gemini 1.0 Pro. We’re also updating the 1.0 Pro model, which offers a good balance of cost and performance for many AI tasks. Today’s stable version is priced 50% less for text inputs and 25% less for outputs than previously announced. Pay-as-you-go plans for AI Studio are coming soon.

Since December, developers of all sizes have been building with Gemini models, and we’re excited to turn cutting edge research into early developer products in Google AI Studio. Expect some latency in this preview version due to the experimental nature of the large context window feature, but we’re excited to start a phased rollout as we continue to fine-tune the model and get your feedback. We hope you enjoy experimenting with it early on, like we have.

Google Pay – Enabling liability shift for eligible Visa device token transactions globally

We are excited to announce the general availability [1] of liability shift for Visa device tokens for Google Pay.

For Mastercard device tokens, the liability already lies with the issuing bank. For Visa, until now only eligible device tokens with issuing banks in the European region benefited from liability shift.

What is liability shift?

If liability shift is granted for a transaction, the responsibility for covering losses from fraudulent transactions moves from the merchant to the issuing bank. With this change, qualifying Google Pay Visa transactions done with a device token will benefit from this liability shift.

How do I know if liability was shifted to the issuing bank for my transaction?

Eligible Visa transactions will carry an eciIndicator value of 05. PSPs can access the eciIndicator value after decrypting the payment method token. Merchants can check with their PSPs to get a report on liability shift eligible transactions.

{
  "gatewayMerchantId": "some-merchant-id",
  "messageExpiration": "1561533871082",
  "messageId": "AH2Ejtc8qBlP_MCAV0jJG7Er",
  "paymentMethod": "CARD",
  "paymentMethodDetails": {
    "expirationYear": 2028,
    "expirationMonth": 12,
    "pan": "4895370012003478",
    "authMethod": "CRYPTOGRAM_3DS",
    "eciIndicator": "05",
    "cryptogram": "AgAAAAAABk4DWZ4C28yUQAAAAAA="
  }
}

A decrypted payment token for a Google Pay Visa transaction with an eciIndicator value of 05 (liability shifted)

Check out the following table for a full list of eciIndicator values we return for our Visa and Mastercard device token transactions:

| eciIndicator value | Card Network | Liable Party | authMethod |
|---|---|---|---|
| "" (empty) | Mastercard | Merchant/Acquirer | CRYPTOGRAM_3DS |
| "02" | Mastercard | Card issuer | CRYPTOGRAM_3DS |
| "06" | Mastercard | Merchant/Acquirer | CRYPTOGRAM_3DS |
| "05" | Visa | Card issuer | CRYPTOGRAM_3DS |
| "07" | Visa | Merchant/Acquirer | CRYPTOGRAM_3DS |
| "" (empty) | Other networks | Merchant/Acquirer | CRYPTOGRAM_3DS |

Any other eciIndicator values for Visa and Mastercard that aren't present in this table won't be returned.
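For PSPs that want to act on this table in code, the following is a minimal sketch of a lookup from card network and eciIndicator to the liable party. It assumes the token has already been decrypted into a dictionary shaped like the example payload above. The function name, the constants, and passing the card network as a separate argument are illustrative choices, not part of the Google Pay API. Treating any unlisted combination as merchant liability is a conservative assumption.

```python
# Liable party per (card network, eciIndicator), transcribed from the table above.
MERCHANT = "Merchant/Acquirer"
ISSUER = "Card issuer"

LIABLE_PARTY = {
    ("MASTERCARD", ""): MERCHANT,
    ("MASTERCARD", "02"): ISSUER,
    ("MASTERCARD", "06"): MERCHANT,
    ("VISA", "05"): ISSUER,
    ("VISA", "07"): MERCHANT,
}


def liable_party(card_network: str, decrypted_token: dict) -> str:
    """Look up who bears fraud liability for a decrypted device token.

    card_network is supplied by the caller (hypothetical parameter); the
    decrypted payload shown in this post does not include it.
    Unknown combinations fall back to the merchant (assumption).
    """
    details = decrypted_token.get("paymentMethodDetails", {})
    eci = details.get("eciIndicator", "")
    return LIABLE_PARTY.get((card_network.upper(), eci), MERCHANT)


if __name__ == "__main__":
    example_token = {
        "paymentMethod": "CARD",
        "paymentMethodDetails": {
            "authMethod": "CRYPTOGRAM_3DS",
            "eciIndicator": "05",
        },
    }
    print(liable_party("VISA", example_token))  # Card issuer
```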

How to enroll

Merchants may opt in from within the Google Pay & Wallet console starting this month. Merchants in Europe (already benefiting from liability shift) do not need to take any action, as they will be auto-enrolled.

For your Google Pay transaction to qualify for liability shift, the following API parameters are required:

| Parameter | Requirement |
|---|---|
| totalPrice | Make sure that totalPrice matches the amount that you charge the user. Transactions with totalPrice=0 will not qualify for liability shift to the issuing bank. |
| totalPriceStatus | Valid values are FINAL or ESTIMATED. Transactions with a totalPriceStatus value of NOT_CURRENTLY_KNOWN do not qualify for liability shift. |

Not all transactions get liability shift

Ineligible merchants

In the US, the following MCC codes are excluded from getting liability shift:

| MCC | Description |
|---|---|
| 4829 | Money Transfer |
| 5967 | Direct Marketing – Inbound Teleservices Merchant |
| 6051 | Non-Financial Institutions – Foreign Currency, Non-Fiat Currency (for example: Cryptocurrency), Money Orders (Not Money Transfer), Account Funding (not Stored Value Load), Travelers Cheques, and Debt Repayment |
| 6540 | Non-Financial Institutions – Stored Value Card Purchase/Load |
| 7801 | Government Licensed On-Line Casinos (On-Line Gambling) (US Region only) |
| 7802 | Government-Licensed Horse/Dog Racing (US Region only) |
| 7995 | Betting, including Lottery Tickets, Casino Gaming Chips, Off-Track Betting, Wagers at Race Tracks and games of chance to win prizes of monetary value |

Ineligible transactions

For your Google Pay transactions to qualify for liability shift, make sure to include the totalPrice and totalPriceStatus parameters described above. Transactions with totalPrice=0 or a hard-coded totalPrice (always the same amount, while users are charged a different amount) will not qualify for liability shift.
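The eligibility rules in this section can be sketched as a pre-flight check that a merchant might run before sending a request. This is an illustration under stated assumptions, not an official validator. The function name, the charged_amount, mcc, and merchant_country parameters, and the blocker messages are all hypothetical. The dictionary keys totalPrice and totalPriceStatus mirror the API parameters named above. An empty result means no blocker was found by these rules alone.

```python
from decimal import Decimal, InvalidOperation

# MCCs excluded from liability shift in the US, as listed in this post.
US_EXCLUDED_MCCS = {"4829", "5967", "6051", "6540", "7801", "7802", "7995"}
ELIGIBLE_PRICE_STATUSES = {"FINAL", "ESTIMATED"}


def _parse_amount(value):
    """Parse a price into a finite Decimal, or return None if invalid."""
    try:
        amount = Decimal(str(value))
    except InvalidOperation:
        return None
    return amount if amount.is_finite() else None


def liability_shift_blockers(transaction_info, charged_amount,
                             mcc=None, merchant_country=None):
    """Return a list of reasons the transaction would not qualify."""
    blockers = []

    if transaction_info.get("totalPriceStatus") not in ELIGIBLE_PRICE_STATUSES:
        blockers.append("totalPriceStatus must be FINAL or ESTIMATED")

    total = _parse_amount(transaction_info.get("totalPrice"))
    if total is None:
        blockers.append("totalPrice is missing or not a number")
    elif total == 0:
        blockers.append("totalPrice is 0")
    elif total != _parse_amount(charged_amount):
        blockers.append("totalPrice does not match the charged amount")

    # The MCC exclusion list in this post applies to the US.
    if merchant_country == "US" and mcc in US_EXCLUDED_MCCS:
        blockers.append(f"MCC {mcc} is excluded in the US")

    return blockers


if __name__ == "__main__":
    info = {"totalPrice": "12.34", "totalPriceStatus": "FINAL"}
    print(liability_shift_blockers(info, "12.34"))  # []
```

Amounts are compared as Decimal values rather than floats so that "10.00" and "10" are treated as equal without rounding surprises.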

Processing transactions

Google Pay API transactions with Visa device tokens are qualified for liability shift at facilitation time if all the conditions are met, but a transaction qualified for liability shift can be downgraded by the network during transaction authorization processing.

Getting started with Google Pay

Not yet using Google Pay? Refer to the documentation to start integrating Google Pay today. Learn more about the integration by taking a look at our sample application for Android on GitHub or use one of our button components for your web integration. When you are ready, head over to the Google Pay & Wallet console and submit your integration for production access.

Follow @GooglePayDevs on X (formerly Twitter) for future updates. If you have questions, tag @GooglePayDevs and include #AskGooglePayDevs in your tweets.

[1] For merchants and PSPs using dynamic price updates or other callback mechanisms the Visa device token liability shift changes will be rolled out later this year.

#WeArePlay | How two sea turtle enthusiasts are revolutionizing marine conservation

When environmental science student Caitlin returned home from a trip monitoring sea turtles in Western Australia, she was inspired to create a conservation tool that could improve tracking of the species. She connected with Nicolas, a French developer and fellow marine life enthusiast, to design their app We Spot Turtles!, which lets anyone support tracking efforts by uploading pictures of sea turtles spotted in the wild.

Caitlin and Nicolas shared their journey in our latest film for #WeArePlay, which showcases the amazing stories behind apps and games on Google Play. We caught up with the pair to find out more about their passion and how they are making strides towards advancing sea turtle conservation.

Tell us about how you both got interested in sea turtle conservation?

Caitlin: A few years ago, I did a sea turtle monitoring program for the Department of Biodiversity, Conservation and Attractions in Western Australia. It was probably one of the most magical experiences of my life. After that, I decided I only really wanted to work with sea turtles.

Nicolas: In 2010, in French Polynesia, I volunteered with a sea turtle protection project. I was moved by the experience, and when I came back to France, I knew I wanted to use my tech background to create something inspired by the trip.

How did these experiences lead you to create We Spot Turtles!?

Caitlin: There are seven species of sea turtle, and all are critically endangered. Or rather there’s not enough data on them to inform an accurate endangerment status. This means the needs of the species are going unmet and sea turtles are silently going extinct. Our inspiration is essentially to better track sea turtles so that conservation can be improved.

Nicolas: When I returned to France after monitoring sea turtles, I knew I wanted to make an app inspired by my experience. However, I had put the project on hold for a while. Then, when a friend sent me Caitlin’s social media post looking for a developer for a sea turtle conservation app, it re-ignited my inspiration, and we teamed up to make it together.

What does We Spot Turtles! do?

Caitlin: Essentially, members of the public upload images of sea turtles they spot – and even get to name them. Then, the app automatically geolocates, giving us a date and timestamp of when and where the sea turtle was located. This allows us to track turtles and improve our conservation efforts.

How do you use artificial intelligence in the app?

Caitlin: The advancements in AI in recent years have given us the opportunity to make a bigger impact than we would have been able to otherwise. The machine learning model that Nicolas created uses the facial scale and pigmentations of the turtles to not only identify its species, but also to give that sea turtle a unique code for tracking purposes. Then, if it is photographed by someone else in the future, we can see on the app where it's been spotted before.

How has Google Play supported your journey?

Caitlin: Launching our app on Google Play has allowed us to reach a global audience. We now have communities in Exmouth in Western Australia, Manly Beach in Sydney, and have 6 countries in total using our app already. Without Google Play, we wouldn't have the ability to connect on such a global scale.

Nicolas: I’m a mobile application developer and I use Google’s Flutter framework. I knew Google Play was a good place to release our title as it easily allows us to work on the platform. As a result, we’ve been able to make the app great.

What do you hope to achieve with We Spot Turtles!?

Caitlin: We Spot Turtles! puts data collection in the hands of the people. It’s giving everyone the opportunity to make an impact in sea turtle conservation. Because of this, we believe that we can massively alter and redefine conservation efforts and enhance people’s engagement with the natural world.

What are your plans for the future?

Caitlin: Nicolas and I have some big plans. We want to branch out into other species. We'd love to do whale sharks, birds, and red pandas. Ultimately, we want to achieve our goal of improving the conservation of various species and animals around the world.

Discover other inspiring app and game founders featured in #WeArePlay.

Calling all students: Learn how to become a Google Developer Student Club Lead

Does the idea of leading a student community at your university appeal to you? Are you enthusiastic about Google technologies or interested in learning more about them? Do you love planning tech-related events and new ways for your campus community to build skills? If so, consider leading a Google Developer Student Club!

What are Google Developer Student Clubs?

Google Developer Student Clubs (GDSC) are community groups for university students interested in learning and building with Google technologies. There are over 2000 GDSC chapters, represented in over 100 countries around the world where undergraduate and graduate students explore Artificial Intelligence, Machine Learning, Google Cloud, Android development, Flutter, and other innovative technologies together. GDSC chapters host in-person, project-based events, such as hackathons and Solution Challenge with guest speakers and technical experts provided by Google.

Apply to Lead a Google Developer Student Club

You can learn more about the 2024-2025 GDSC Lead application process here.

Leading a GDSC is a great opportunity to learn new programming skills, dive deep into Google technologies and create local impact, while also building your network.

Google Developer Student Club Leads hone their technical and leadership skills as they manage a campus-based community for peers. GDSC Leads:

- Receive mentorship from Google

- Join a global community of leaders

- Train peers to use Google technologies in their developer journey

- Use technology to find solutions for real-world challenges

Meet Drashtant Chudasama, Lakehead University Google Developer Student Club lead. Drashtant hosted a 2-day DevFest On Campus event in Canada to help foster technology in his local area. The city's first DevFest included a handful of guest speakers and a hackathon. These are the types of things you will have the opportunity to do as a GDSC Lead.

If this sounds like your skill set or you’d like to explore a new leadership opportunity in technology, we encourage you to apply to become a GDSC Lead. You can check for application deadlines in your region here.

Google Developer Student Clubs Around the World

After a year’s hiatus, GDSC HITS lead Amitasha Verma and her team defied the odds to bring an interactive event to life. More than 80 students came together for a 3-hour "Unlocking the Power of Blockchain" event in India. This event demonstrated the unwavering spirit of students eager to explore the world of blockchain.

GDSC Fast National University in Islamabad collaborated with 15 other GDSC chapters to host the exciting "Techbuzz" competition, bringing together a diverse group of tech enthusiasts to showcase their skills through a variety of engaging activities. The event featured intense rapid-fire tech sessions that tested the participants' knowledge and quick thinking, while bringing a game-based learning platform to add an element of fun and excitement.

How to become a GDSC Lead

Learn more about the GDSC Lead role and criteria here. To get started click here.

Note: Google Developer Student Clubs are student-led independent organizations, and their presence does not indicate a relationship between Google and the students' universities.

Federated Credential Management (FedCM) Migration for Google Identity Services

Chrome is phasing out support for third-party cookies this year, subject to addressing any remaining concerns of the CMA. A relatively new web API, Federated Credential Management (FedCM), will enable sign-in for the Google Identity Services (GIS) library after the phase out of third-party cookies. Starting in April, GIS developers will be automatically migrated to the FedCM API. For most developers, this migration will occur seamlessly through backwards-compatible updates to the GIS library. However, some websites with custom integrations may require minor changes. We encourage all developers to experiment with FedCM, as previously announced through the beta program, to ensure flows will not be interrupted. Developers have the ability to temporarily exempt traffic from using FedCM until Chrome enforces the restriction of third-party cookies.

Audience

This update is for all GIS web developers who rely on the Chrome browser and use:

- One Tap, or

- Automatic Sign-In

Context

As part of the Privacy Sandbox initiative to keep people’s activity private and support free experiences for everyone, Chrome is phasing out support for third-party cookies, subject to addressing any remaining concerns of the CMA. Scaled testing began at 1% in January and will continue throughout the year.

GIS currently uses third-party cookies to allow users to sign up and sign in to websites easily and securely by reducing reliance on passwords. The FedCM API is a new privacy-preserving alternative to third-party cookies for federated identity providers. It allows Google to continue providing a secure, streamlined experience for signing up and signing in to websites. Last August, the Google Identity team announced a beta program for developers to test the Chrome browser’s new FedCM API supporting GIS.

What to Expect in the Migration

Partners who offer GIS’s One Tap and Automatic Sign-In features will automatically be migrated to FedCM in April. For most developers, this migration will occur seamlessly through backwards-compatible updates to the GIS JavaScript library; the GIS library will call the FedCM APIs behind the scenes, without requiring any developer changes. The new FedCM APIs have minimal impact on existing user flows.

Some Developers May be Required to Make Changes

Some websites with custom integrations may require minor changes, such as updates to custom layouts or positioning of sign-in prompts. Websites using embedded iframes for sign-in or a non-compliant Content Security Policy may need to be updated. To learn if your website will require changes, please review the migration guide. We encourage you to enable and experiment with FedCM, as previously announced through the beta program, to ensure flows will not be interrupted.

Migration Timeline

If you are using GIS One Tap or Automatic Sign-in on your website, please be aware of the following timelines:

- January 2024: Chrome began scaled testing of third-party cookie restrictions at 1%.

- April 2024: GIS begins a migration of all websites to FedCM on the Chrome browser.

- Q3 2024: Chrome begins ramp-up of third-party cookie restrictions, reaching 100% of Chrome clients by the end of Q4, subject to addressing any remaining concerns of the CMA.

Once the Chrome browser restricts third-party cookies by default for all Chrome clients, the use of FedCM will be required for partners who use GIS One Tap and Automatic Sign-In features.

Checklist for Developers to Prepare

✅ Be aware of migration plans and timelines that will affect your traffic. Determine your migration approach. Developers will be migrated by default starting in April.

✅ All developers should verify that their website will be unaffected by the migration. Opt in to FedCM to test and make any necessary changes to ensure a smooth transition. For developers with implementations that require changes, make changes ahead of the migration deadline.

✅ For developers that use Automatic Sign-In, review the FedCM changes to the user gesture requirement. We recommend all automatic sign-in developers migrate to FedCM as soon as possible, to reduce disruption to automatic sign-in conversion rates.

✅ If you need more time to verify FedCM functionality on your site and make changes to your code, you can temporarily exempt your traffic from using FedCM until the enforcement of third-party cookie restrictions by Chrome.

To get started and learn more about FedCM, visit our developer site and check out the google-signin tag on Stack Overflow for technical assistance. We invite developers to share their feedback with us at [email protected].

Posted by Aurash Mahbod – General Manager, Games on Google Play

Posted by Mark Sherwood – Senior Product Manager and Juhyun Lee – Staff Software Engineer

Posted by Ali Satter – AI Lead, Roman Nurik – Design Lead

Posted by Jaclyn Konzelmann and Wiktor Gworek – Google Labs

Posted by Dominik Mengelt – Developer Relations Engineer, Payments and Florin Modrea – Product Solutions Engineer, Google Pay

Posted by Leticia Lago – Developer Marketing

Posted by Rachel Francois, Global Program Manager, Google Developer Student Clubs

Posted by Gina Biernacki, Product Manager
